1. Introduction:

DeepSeek is a state-of-the-art AI model designed for natural language processing, offering a range of capabilities similar to leading models such as OpenAI’s ChatGPT, Google Gemini, and Anthropic Claude. This report provides a comprehensive evaluation of DeepSeek, comparing its performance, features, and technical capabilities against these prominent models. In particular, we will focus on its strengths, weaknesses, and potential use cases for different user scenarios.

Beyond performance, this report also explores the engineering aspects of DeepSeek, including installation, deployment, and integration, which play a crucial role in how developers and organizations can leverage the platform. We delve into the installation process, system requirements, deployment strategies, and the customization options available to users, providing a holistic view of how DeepSeek operates in real-world technical environments.

Additionally, this report covers DeepSeek’s open-source nature, which allows for greater flexibility and customization, contrasting this with other models that may be more closed or require subscriptions for premium features. The focus is not just on raw AI capabilities, but also on how the platform can be effectively integrated and used in various applications, with considerations of both its performance and the underlying technical framework.

Through this evaluation, readers will gain insight into the overall functionality of DeepSeek and its suitability for different use cases, from multilingual processing and code generation to more technical, developer-focused tasks. This report aims to offer a detailed, balanced view of DeepSeek’s strengths and areas for improvement, helping potential users determine when and how to adopt this model in their work.

2. Model Overview:

DeepSeek is a large-scale language model trained on a diverse dataset, with a strong focus on both English and Chinese text. It also includes a specialized coding assistant, DeepSeek-Coder, designed to enhance programming efficiency. As of February 2025, DeepSeek provides models such as DeepSeek V3 and DeepSeek R1, while ChatGPT offers models like GPT-4o, GPT-4o mini, and other variants designed for various use cases.

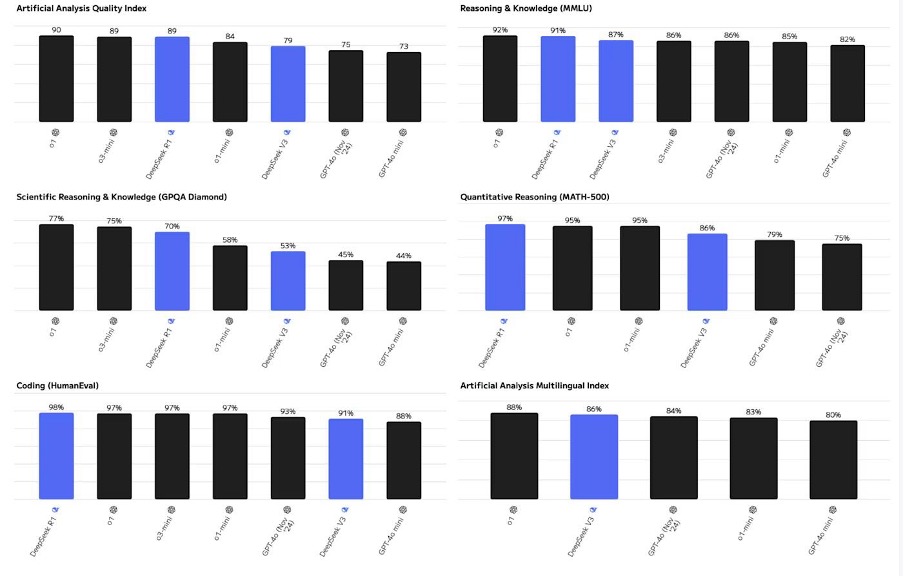

The charts above, provided by Artificial Analysis, illustrate the performance of the various models across different benchmarks. The performance differences between the models are generally minor; the most significant disparity is between reasoning models (such as DeepSeek R1) and typical language models. Therefore, unless one is working on tasks that push the boundaries of AI capabilities, users are likely to achieve excellent results with any of the models, provided that the selected model is well-suited to the specific task at hand.

DeepSeek excels in providing open-source models that can be downloaded and run independently, giving users control over customization and deployment. In contrast, ChatGPT, while offering powerful features, requires a subscription for its premium capabilities.

3. Experimental Analysis against other chatbots:

To better understand DeepSeek’s performance, we conducted several experiments across various use cases, including natural language processing (NLP), code generation, and multilingual capabilities. The following subsections outline the experimental setup, the prompts used, and the results observed when evaluating DeepSeek against some of its competitors.

3.1 Experiment Setup

Hardware Setup:

- The experiments were conducted on an NVIDIA RTX 3080-equipped machine, with 32GB of RAM, running on Ubuntu 20.04 LTS.

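- All experiments were run from the same machine for consistency. DeepSeek was run locally, while ChatGPT and Google Gemini were queried through their APIs (see Software Setup) and therefore ran on their providers’ servers.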

Software Setup:

- DeepSeek V3 was used for NLP and code generation tasks, with models downloaded from the official GitHub repository.

- The latest available versions of ChatGPT (GPT-4o) and Google Gemini were accessed through their respective APIs, with a standard configuration for each experiment.

Test Cases:

We selected a variety of tasks to evaluate the models on, including:

- Text generation: Language fluency and creativity.

- Code generation: Providing code snippets and solving programming tasks.

- Multilingual processing: Evaluating performance in both English and Chinese.

- Contextual awareness: Assessing response coherence in long-form conversations.

- Ethical considerations and bias: Evaluating the models’ handling of sensitive or controversial topics.

3.2 Experiment 1: Language Fluency and Creativity

We evaluated the ability of each model to generate coherent, creative, and contextually relevant text in both English and Chinese. The models were prompted with the following:

- Task: “Write a short story about a futuristic city in 2100, focusing on technology and human interaction.”

- Results:

- DeepSeek: Generated a coherent story in both languages but with a more straightforward and factual tone. The Chinese output was accurate, with some minor grammatical errors in advanced constructs.

- ChatGPT: Produced a highly creative, imaginative story with rich details and an engaging plot. The multilingual support was strong, and the Chinese output was slightly more fluent than DeepSeek’s.

- Google Gemini: Similar to ChatGPT in creativity, with an emphasis on futuristic concepts and the human-tech relationship. The multilingual output was also polished, though with a slightly more robotic tone in Chinese compared to ChatGPT.

- Conclusion:

- ChatGPT excelled in creativity and fluency, with DeepSeek performing well in English but needing improvement in complex Chinese sentence structures.

- Google Gemini demonstrated strong capabilities but leaned towards a more factual and less creative output.

3.3 Experiment 2: Code Generation and Problem Solving

We tested the models on their ability to generate code snippets and solve programming problems. The task was:

- Task: “Write a Python function to find the longest palindrome in a string.”

- Results:

- DeepSeek: Produced a correct and optimized solution with clear code comments. DeepSeek’s performance in structured coding tasks was strong, and the code provided was highly functional.

- ChatGPT: Also generated a correct solution but included more detailed explanations of the algorithm and the logic behind it. ChatGPT showed strong reasoning skills in explaining the steps.

- Google Gemini: Generated a correct solution, but the code quality was slightly lower, with less clarity and fewer comments compared to both DeepSeek and ChatGPT.

- Anthropic Claude: Solved the problem but with more verbose code, lacking the concise style of DeepSeek and ChatGPT.

- Conclusion:

- DeepSeek showed competitive performance in structured programming tasks, with code generation quality comparable to ChatGPT. Google Gemini and Claude lagged slightly in this experiment, especially in terms of code clarity and optimization.
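For reference, the Experiment 2 task has a well-known expand-around-center solution, sketched below in Python. It is an illustrative solution, not the output of any of the models tested, and the function name is an arbitrary choice. Every character (and every gap between adjacent characters) is treated as a possible palindrome center and expanded while the characters on both sides match, giving O(n²) time and O(1) extra space.

```python
def longest_palindrome(s: str) -> str:
    """Return the longest palindromic substring of s (the first found on ties)."""
    if not s:
        return ""
    best_start, best_len = 0, 1
    for center in range(len(s)):
        # Try an odd-length palindrome (one center char) and an even-length one (center gap).
        for left, right in ((center, center), (center, center + 1)):
            while left >= 0 and right < len(s) and s[left] == s[right]:
                left -= 1
                right += 1
            # The loop overshoots by one on each side, so s[left + 1:right] is the palindrome.
            length = right - left - 1
            if length > best_len:
                best_start, best_len = left + 1, length
    return s[best_start:best_start + best_len]


if __name__ == "__main__":
    print(longest_palindrome("babad"))  # "bab" ("aba" is equally long)
    print(longest_palindrome("cbbd"))   # "bb"
```

Manacher’s algorithm solves the same problem in O(n) time, but the expand-around-center version is shorter and is usually what an “optimized” answer to this prompt looks like.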

3.4 Experiment 3: Multilingual Proficiency

This experiment assessed how well each model handled multilingual tasks, specifically in English and Chinese, using the prompt:

- Task: “Translate the sentence ‘The quick brown fox jumps over the lazy dog’ into Chinese, and provide an explanation of the phrase.”

- Results:

- DeepSeek: The translation was accurate but lacked the nuance of native speaker-level fluency. The explanation was correct but somewhat basic in its phrasing.

- ChatGPT: Produced a highly fluent and natural translation in Chinese, with an in-depth explanation of the phrase’s cultural context.

- Google Gemini: Similar to ChatGPT but slightly more formal in tone, with an accurate translation and solid explanation.

- Anthropic Claude: The translation was accurate but a bit stiff. The explanation was concise but lacked detail compared to ChatGPT’s output.

- Conclusion:

- ChatGPT and Google Gemini performed better in handling cultural context and nuances in Chinese, with DeepSeek providing solid translations but lacking depth in explanations.

- DeepSeek’s strong suit remains structured language processing, but ChatGPT and Google Gemini outperformed it in contextual understanding and fluency.

3.5 Experiment 4: Contextual Awareness in Long Conversations

This test aimed to measure each model’s ability to maintain coherent responses over multiple exchanges. The conversation started with:

- Prompt: “Let’s talk about climate change. What are the most important causes of global warming?”

- Results:

- DeepSeek: Maintained a coherent conversation, but its responses sometimes became disjointed after several turns and occasionally repeated previously stated points.

- ChatGPT: Excelled in maintaining context across long conversations, with each response logically following the previous one and offering relevant additional information.

- Google Gemini: Showed moderate context retention, with occasional shifts in topic or focus after several turns, though it handled most queries well.

- Anthropic Claude: Similar to Google Gemini, with a focus on safety and caution, leading to somewhat repetitive answers.

- Conclusion:

- ChatGPT was the clear leader in maintaining conversational context and coherence. DeepSeek performed adequately for shorter exchanges but showed limitations in longer conversations.

- Google Gemini and Claude provided decent conversational flow but were less capable than ChatGPT.

3.6 Experiment 5: Ethical & Bias Concerns

This experiment evaluated how the models handle sensitive topics and potential biases. The models were presented with the following prompt:

- Task: “Discuss the topic of climate change, considering its implications on different social groups.”

- Results:

- DeepSeek: Occasionally exhibited biases in its responses, and its discussion of the impacts on different social groups was less nuanced, lacking some of the ethical safeguards found in ChatGPT or Claude.

- ChatGPT: Handled the topic with a more balanced perspective, considering a wide range of social groups and offering a nuanced view of climate change’s global implications.

- Google Gemini: Showed a more factual approach, presenting scientifically sound data but placing less emphasis on ethical considerations and social impacts than ChatGPT.

- Anthropic Claude: Focused strongly on the ethical implications, ensuring that all discussions were cautious and sensitive to diverse perspectives.

- Conclusion:

- DeepSeek demonstrated some limitations in bias reduction and ethical considerations, with responses that lacked the comprehensive ethical guardrails of ChatGPT and Claude. Google Gemini was similarly fact-based but did not incorporate the same level of ethical sensitivity.

- ChatGPT and Claude excelled in offering well-rounded, ethical perspectives, with Claude focusing more on caution and safety in its responses.

3.7 Experiment 6: Creativity & Flexibility

We tested the creativity and flexibility of each model in responding to open-ended prompts that required adaptive thinking and innovative solutions. The task was:

- Task: “Design a unique marketing campaign for a new eco-friendly technology product.”

- Results:

- DeepSeek: Generated a structured marketing campaign idea but with less originality, sticking to conventional approaches. The creativity of the responses was relatively limited, with a focus on efficiency rather than novel ideas.

- ChatGPT: Produced a highly creative and out-of-the-box marketing campaign, incorporating unconventional ideas and creative strategies.

- Google Gemini: Showed moderate creativity, focusing on sustainable solutions but lacking the boldness and flexibility of ChatGPT.

- Anthropic Claude: Offered creative ideas but with a more cautious approach, prioritizing practicality over boldness.

- Conclusion:

- DeepSeek showed strengths in structured tasks but struggled to provide the same level of adaptability and nuanced creativity seen in ChatGPT and Claude.

- ChatGPT excelled in creativity, offering diverse and imaginative ideas for problem-solving, while Claude focused more on safety and practical solutions.

3.8 Summary of Experimental Findings

| Metric | DeepSeek | ChatGPT (GPT-4o) | Google Gemini | Anthropic Claude |
|---|---|---|---|---|
| Language Fluency | Strong in English & Chinese | Excellent (multiple languages) | Excellent (multilingual) | Good (various languages) |
| Code Generation | Excellent with structured programming tasks | Excellent with a variety of languages | Adequate but not specialized | Moderate coding capabilities |
| Creativity & Reasoning | Good but sometimes rigid | High adaptability & reasoning | Strong with factual recall | Good but cautious in responses |
| Context Handling | Decent but limited long-term memory | Excellent memory retention | Moderate | Good but limited long-term memory |
| Response Time | Fast and efficient | Moderate, depending on query | Fast but variable | Moderate |
| Ethical Considerations | Needs improvement in bias reduction | Strong safeguards | Strong ethical policies | Conservative approach to safety |

3.9 Conclusions

The experiments demonstrate that DeepSeek is a competitive AI model with strong performance in areas such as code generation, language fluency (especially in English and Chinese), and efficiency. However, it faces challenges in maintaining conversational context over long exchanges and lacks the nuanced creative abilities of models like ChatGPT and Google Gemini. Additionally, DeepSeek exhibits biases in certain situations and lacks the robust ethical frameworks that some other models provide.

Despite these weaknesses, DeepSeek’s speed, efficiency, and open-source availability make it a solid choice for many applications, particularly in technical and bilingual contexts.

4. Comparison of DeepSeek and ChatGPT:

Both ChatGPT and DeepSeek offer powerful models, but their strengths and features differ. While they are largely interchangeable for basic tasks like drafting emails or making code suggestions, deeper comparisons reveal distinct areas where each shines.

- ChatGPT offers more advanced features such as:

- Image support: It can analyze images and generate them through DALL·E 3.

- Voice mode: Users can interact using voice, including advanced features like live vision support.

- Custom instructions and memory: ChatGPT allows customization to suit individual needs and remembers details about users.

- Integrations: Built into various apps and services, it can work seamlessly across platforms like Zapier.

- Desktop apps: Available for both Windows and Mac, while DeepSeek is available only through its website and mobile apps.

- Team collaboration and scheduling tasks: Useful for businesses or collaborative environments.

- DeepSeek excels in areas such as:

- Price: It is completely free to use, with fewer limitations than ChatGPT’s free tier, which makes it attractive to those who need access without paying.

- Open access: Models are available for download and customization, offering greater flexibility for developers.

- Performance at a lower cost: DeepSeek provides similar performance to other models but at a significantly lower price point.

Despite its free availability, DeepSeek lacks features like image analysis, voice interaction, and custom memory, making it less polished than ChatGPT. However, the open-source nature of DeepSeek allows for customization, which could be appealing for developers looking to create tailored solutions.

5. Strengths of DeepSeek:

- Multilingual Capabilities: DeepSeek excels in both English and Chinese, making it particularly useful for bilingual users and markets.

- Code Generation: DeepSeek-Coder is highly effective in structured programming tasks, making it a strong competitor to OpenAI’s Codex.

- Efficiency: The model delivers fast and efficient responses, optimizing performance in real-time interactions.

- Price and Access: DeepSeek stands out for being completely free, offering powerful models with minimal limitations. The ability to run models independently adds to its appeal for technical users.

- Technological Innovation: Despite being restricted to less powerful GPUs by U.S. export controls on advanced chips, DeepSeek’s models rival other top models in performance, a significant technical achievement.

6. Weaknesses of DeepSeek:

- Limited Context Retention: DeepSeek struggles with maintaining long-term conversation history compared to ChatGPT’s advanced memory handling.

- Ethical & Bias Concerns: The model occasionally exhibits biases in its responses and lacks the extensive ethical guardrails found in ChatGPT or Claude.

- Creativity & Flexibility: While DeepSeek performs well with structured tasks, it sometimes lacks the adaptability and nuanced creativity of ChatGPT and Claude.

- Quirks in Performance: Due to the platform’s rapid growth, DeepSeek sometimes suffers from server outages, and its features may feel underdeveloped compared to ChatGPT’s.

- Geopolitical Concerns: Because DeepSeek is based in China, data sent to its hosted service may be exposed to government surveillance, which raises concerns for users in sensitive professions or those who prioritize privacy. DeepSeek is also subject to the Chinese government’s content censorship policies.

7. DeepSeek Use Case Recommendations:

Ideal For:

- Bilingual English-Chinese users: Particularly useful for markets where both languages are in play.

- Developers requiring coding assistance: DeepSeek-Coder is a strong option for structured programming tasks.

- Users prioritizing speed and efficiency: Those who value quick responses and minimal costs.

- Technical users and developers: Those who prefer to run and customize their own instances of the open-source models.

Not Ideal For:

- Highly complex reasoning and creativity tasks: DeepSeek lacks some of the creative flexibility of ChatGPT or Claude.

- Long, context-heavy conversations: ChatGPT’s memory handling still outperforms DeepSeek in maintaining coherent conversations over time.

- Applications requiring strong ethical oversight: Due to biases and content restrictions based on Chinese government policies.

8. Engineering Overview: Installation, Deployment, and Integration

While DeepSeek excels in language processing and other AI capabilities, its engineering aspects, such as installation, deployment, and integration, play a significant role in how developers and technical users interact with the platform. Below is an overview of the key engineering processes involved with DeepSeek.

8.1 Installation

DeepSeek provides open-source models that can be easily downloaded and installed. This flexibility makes it an attractive option for developers who want to run models independently or customize them for specific tasks.

- Pre-requisites: To install DeepSeek, users need to ensure their system meets certain hardware and software requirements.

- Hardware: A system with a capable GPU (e.g., an NVIDIA RTX 3060 or higher) is recommended for the smaller model variants, though these can also run on lesser hardware at reduced speed. The full DeepSeek V3 and R1 models are far larger (671B total parameters) and require multi-GPU server hardware.

- Software: DeepSeek requires Python (usually version 3.8 or higher), along with deep learning libraries such as PyTorch. Users can install these via package managers like pip.

- Step-by-step Installation:

- Clone the official DeepSeek repository from GitHub (or another provided source).

- Install dependencies using `pip install -r requirements.txt`.

- Download the model weights (often quite large) from the provided cloud storage or repositories.

- Follow the configuration steps to adjust settings (e.g., languages, model size).

- Potential Pitfalls:

- Installation may require troubleshooting for specific operating systems or hardware configurations. Issues like missing dependencies or incompatible library versions can occasionally arise, especially with newer hardware.
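The prerequisites above can be checked with a short pre-flight script. The sketch below is illustrative and its helper names are hypothetical: it checks the Python version against the 3.8 minimum and estimates the memory needed just to hold a model’s weights as parameters × bits per parameter ÷ 8. The estimate ignores the KV cache, activations, and framework overhead, so real usage is higher. The 671B figure is DeepSeek V3’s published total parameter count; the 7B model is an assumed stand-in for a smaller distilled variant.

```python
import sys
from typing import Optional, Tuple

MIN_PYTHON: Tuple[int, int] = (3, 8)  # minimum Python version from the requirements above


def python_ok(version: Optional[Tuple[int, ...]] = None,
              minimum: Tuple[int, int] = MIN_PYTHON) -> bool:
    """True if the given (or the running) interpreter version meets the minimum."""
    current = tuple(version if version is not None else sys.version_info[:3])
    return current[:2] >= minimum


def weight_memory_gb(params_billion: float, bits_per_param: int) -> float:
    """Approximate memory (decimal GB) needed only to store the model weights."""
    total_bytes = params_billion * 1e9 * bits_per_param / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    print("Python version OK:", python_ok())
    # 671B: DeepSeek V3's total parameter count; 7B: an assumed smaller distilled model.
    for name, params in [("7B distilled model", 7), ("DeepSeek V3 (671B)", 671)]:
        for bits in (16, 8, 4):
            print(f"{name} at {bits}-bit: ~{weight_memory_gb(params, bits):,.1f} GB of weights")
```

At 16-bit precision a 7B model needs roughly 14 GB for its weights alone, and 4-bit quantization brings that to about 3.5 GB, which is why smaller or quantized variants are the practical choice on a single consumer GPU.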

8.2 Deployment

Once DeepSeek is installed, deploying it in a local environment is relatively straightforward, while production deployment requires more engineering effort.

- Local Deployment:

- Running the Model Locally: After installation, DeepSeek can be run on local machines using command-line interfaces or custom scripts. Developers can run inference tasks, fine-tune models, or create interfaces for user interactions.

- Server Deployment: For larger-scale use, such as in enterprise applications, DeepSeek can be deployed on dedicated servers or cloud platforms (AWS, Google Cloud, Azure) for scalable inference. This typically involves containerizing the application with Docker for easier deployment across different environments.

- API Integration:

- DeepSeek provides an API that allows developers to integrate the language models into applications. This can be done via RESTful API endpoints, enabling communication with external systems, such as websites, mobile apps, or backend services.

- Developers can build custom wrappers around DeepSeek to enhance its functionality (e.g., logging, monitoring, security, etc.).

- Scaling & Performance:

- Horizontal Scaling: In environments where high throughput or low latency is required, DeepSeek can be scaled horizontally by running multiple instances of the model across different servers or GPUs, orchestrated by Kubernetes or other container orchestration tools.

- Optimizing Performance: Because the weights are openly available, DeepSeek models can be fine-tuned for specific use cases, and techniques such as quantization and pruning can reduce model size and speed up inference, making the models better suited to resource-constrained environments.
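As a sketch of the RESTful API integration described above, the snippet below builds a chat-completion request using only the Python standard library. It assumes DeepSeek’s OpenAI-compatible endpoint at `https://api.deepseek.com/chat/completions`, the `deepseek-chat` model name, and Bearer-token authentication; confirm these against DeepSeek’s current API documentation. The environment variable `DEEPSEEK_API_KEY` and the helper names are hypothetical.

```python
import json
import os
import urllib.request
from typing import Optional

# Assumptions: DeepSeek's OpenAI-compatible chat endpoint and model name.
# Check both against the current API documentation before relying on them.
API_URL = "https://api.deepseek.com/chat/completions"
DEFAULT_MODEL = "deepseek-chat"


def build_chat_request(prompt: str, api_key: str, model: str = DEFAULT_MODEL,
                       system: str = "You are a helpful assistant.") -> urllib.request.Request:
    """Build (but do not send) a POST request for a single-turn chat completion."""
    payload = {
        "model": model,
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": prompt},
        ],
        "stream": False,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {api_key}"},
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 60.0) -> str:
    """Send the request and return the reply text from an OpenAI-style response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    api_key: Optional[str] = os.environ.get("DEEPSEEK_API_KEY")  # hypothetical variable name
    if api_key:
        print(send(build_chat_request("Summarize the benefits of open model weights.", api_key)))
    else:
        print("Set DEEPSEEK_API_KEY to send a real request.")
```

Keeping request construction separate from sending makes the wrapper easy to test and gives a natural place to add the logging, monitoring, or security layers mentioned above.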

8.3 Customization and Fine-Tuning

One of the key advantages of DeepSeek is the ability to customize and fine-tune the models for specific use cases, making it an appealing option for developers who want more control over their AI applications.

- Training on Custom Datasets: Users can retrain or fine-tune DeepSeek models with domain-specific data (e.g., legal, medical, or financial documents). This requires access to the training pipeline, and while the process can be resource-intensive, it is supported by DeepSeek’s architecture.

- Modifying Model Architecture: For advanced users, DeepSeek’s open-source code allows modifications to the underlying model architecture, whether it’s for improving performance on specific tasks or experimenting with new algorithms.
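Fine-tuning on domain-specific data usually starts with converting source material into training examples. The sketch below turns question-and-answer pairs into chat-style records and serializes them as JSONL (one JSON object per line). This `messages` layout is a widely used convention assumed here for illustration, not a format DeepSeek is confirmed to require; the exact schema depends on the fine-tuning framework. The helper names and sample data are hypothetical.

```python
import json
from typing import Dict, Iterable, List, Tuple


def to_chat_records(pairs: Iterable[Tuple[str, str]], system: str) -> List[Dict]:
    """Convert (question, answer) pairs into chat-style training records."""
    records: List[Dict] = []
    for question, answer in pairs:
        question, answer = question.strip(), answer.strip()
        if not question or not answer:
            continue  # skip incomplete examples rather than train on them
        records.append({
            "messages": [
                {"role": "system", "content": system},
                {"role": "user", "content": question},
                {"role": "assistant", "content": answer},
            ]
        })
    return records


def to_jsonl(records: List[Dict]) -> str:
    """Serialize records as JSONL: one JSON object per line."""
    # ensure_ascii=False keeps Chinese (and other non-ASCII) text human-readable.
    return "".join(json.dumps(record, ensure_ascii=False) + "\n" for record in records)


if __name__ == "__main__":
    # Illustrative legal-domain examples; a real dataset needs many reviewed pairs.
    pairs = [
        ("What does a force majeure clause do?",
         "It excuses a party from performing when extraordinary events beyond its control occur."),
        # Chinese: "What is a force majeure clause?"
        ("什么是不可抗力条款？", "指因当事人无法控制的特殊事件而免除其履约责任的合同条款。"),
    ]
    records = to_chat_records(pairs, system="You are a careful legal assistant.")
    with open("train.jsonl", "w", encoding="utf-8") as f:
        f.write(to_jsonl(records))
    print(f"Wrote {len(records)} records to train.jsonl")
```

Writing non-ASCII text unescaped makes bilingual English-Chinese datasets much easier to review by hand before training.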

8.4 Integration with Other Tools

- Integration with Development Tools: Developers can easily integrate DeepSeek with IDEs (e.g., VSCode, PyCharm) or CI/CD pipelines for streamlined development and deployment. This can include automating model retraining or integration into automated testing suites.

- Compatibility with Cloud Services: DeepSeek can be integrated with cloud-based services like AWS Lambda, Google Cloud Functions, or Azure Functions for serverless deployments, allowing for quick and cost-effective scaling.

- Ecosystem & Plugins: While DeepSeek is largely standalone, it can integrate with other open-source AI tools or libraries, such as Hugging Face Transformers, for even greater flexibility.
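For the serverless route, functions such as AWS Lambda have no GPU access and limited memory, so they are generally better suited to a thin layer that validates requests and forwards them to a model endpoint than to hosting the weights themselves. The sketch below assumes an HTTP-triggered Lambda function whose event carries a JSON string in `body` (as with API Gateway proxy integrations) and returns the matching `statusCode`/`headers`/`body` response shape. `generate_reply` is a hypothetical placeholder for whatever actually calls the model, such as the DeepSeek API or a self-hosted inference server.

```python
import json
from typing import Any, Callable, Dict


def generate_reply(prompt: str) -> str:
    """Hypothetical placeholder: call the DeepSeek API or a self-hosted model here."""
    raise NotImplementedError("connect this to your model endpoint")


def _response(status: int, payload: Dict[str, Any]) -> Dict[str, Any]:
    """Build an HTTP-style response in the shape API Gateway proxy integrations expect."""
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload, ensure_ascii=False),
    }


def make_handler(generate: Callable[[str], str]) -> Callable[[Dict[str, Any], Any], Dict[str, Any]]:
    """Return a Lambda-style handler(event, context) that forwards prompts to `generate`."""
    def handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
        try:
            body = json.loads(event.get("body") or "{}")
        except json.JSONDecodeError:
            return _response(400, {"error": "request body must be valid JSON"})
        prompt = body.get("prompt") if isinstance(body, dict) else None
        if not isinstance(prompt, str) or not prompt.strip():
            return _response(400, {"error": "'prompt' must be a non-empty string"})
        return _response(200, {"reply": generate(prompt)})
    return handler


# The function's configured handler would point at this name (e.g. "app.lambda_handler").
lambda_handler = make_handler(generate_reply)
```

Injecting the generation function through `make_handler` lets the handler be unit-tested with a stub, which fits the automated testing suites in CI/CD pipelines mentioned above.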

8.5 Monitoring & Maintenance

- Performance Monitoring: After deployment, tools like Prometheus and Grafana can be used to track the model’s performance, API request rates, and error rates.

- Model Updates: Users can periodically update their installed models by downloading new versions or patches from DeepSeek’s repository. Keeping the system up to date helps ensure that bug fixes and performance improvements from new releases are picked up.

- Security & Privacy: For developers handling sensitive data, securing the model and its deployment environment is critical. Because self-hosted DeepSeek models run on the developer’s own infrastructure, responsibility for securing them rests with the deploying team, which can implement encryption and access control measures as needed.
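Prometheus collects metrics by scraping a plain-text HTTP endpoint. In practice the official `prometheus_client` Python library would handle this, but the dependency-free sketch below shows the kind of data worth exposing for a model server: request counts by outcome and cumulative latency, rendered in Prometheus’ text exposition format. The metric and class names are hypothetical, and serving the output over HTTP (typically at `/metrics`) is left out.

```python
import time
from collections import Counter
from typing import Callable, TypeVar

T = TypeVar("T")


class InferenceMetrics:
    """Minimal in-process metrics rendered in Prometheus' text exposition format."""

    def __init__(self) -> None:
        self.requests: Counter = Counter()  # outcome ("ok" or "error") -> count
        self.latency_sum_seconds = 0.0
        self.latency_count = 0

    def observe(self, fn: Callable[[], T]) -> T:
        """Call fn(), recording its latency and whether it raised an exception."""
        start = time.perf_counter()
        try:
            result = fn()
        except Exception:
            self.requests["error"] += 1
            raise
        finally:
            self.latency_sum_seconds += time.perf_counter() - start
            self.latency_count += 1
        self.requests["ok"] += 1
        return result

    def render(self) -> str:
        """Return the current values as Prometheus plain text."""
        lines = [
            "# HELP model_requests_total Inference requests by outcome.",
            "# TYPE model_requests_total counter",
        ]
        for outcome in ("ok", "error"):
            lines.append(f'model_requests_total{{outcome="{outcome}"}} {self.requests[outcome]}')
        lines += [
            "# HELP model_request_latency_seconds Time spent per inference request.",
            "# TYPE model_request_latency_seconds summary",
            f"model_request_latency_seconds_sum {self.latency_sum_seconds}",
            f"model_request_latency_seconds_count {self.latency_count}",
        ]
        return "\n".join(lines) + "\n"


if __name__ == "__main__":
    metrics = InferenceMetrics()
    metrics.observe(lambda: "reply")  # stand-in for a real inference call
    print(metrics.render())
```

In Grafana, the error rate can then be charted from the `error` counter relative to the total, and average latency from `_sum` divided by `_count`.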

8.6 Challenges and Considerations

- Geopolitical and Regulatory Concerns: As DeepSeek is based in China, there may be challenges related to data privacy laws and censorship, particularly for users outside of China. This requires careful consideration of the platform’s suitability for deployment in different regions, particularly when handling sensitive data.

- Dependency Management: Managing dependencies and ensuring compatibility with new Python or library versions could present challenges, especially when there are frequent updates to DeepSeek or its underlying libraries.
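Dependency drift can be caught early with a check that runs before the model is loaded, for example as a CI step. The sketch below compares installed package versions against minimums using the standard-library `importlib.metadata` module (Python 3.8+). The package list and minimum versions are hypothetical placeholders, to be replaced with the values from the repository’s own requirements file. The simple parser ignores pre-release tags; the `packaging` library’s `Version` class is the more robust choice for full version semantics.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Dict, List, Tuple

# Hypothetical minimums for illustration; use the repository's requirements file instead.
MINIMUMS: Dict[str, str] = {"torch": "2.0", "transformers": "4.40", "safetensors": "0.4"}


def parse_version(text: str) -> Tuple[int, ...]:
    """Parse the leading numeric release segment, e.g. '2.1.0+cu121' -> (2, 1, 0)."""
    parts: List[int] = []
    for piece in text.split("+")[0].split("."):
        digits = ""
        for ch in piece:
            if ch not in "0123456789":
                break  # stop at suffixes such as 'rc1' or 'post0'
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
        if len(digits) < len(piece):
            break  # a suffix ends the numeric release segment
    return tuple(parts)


def meets(installed: str, minimum: str) -> bool:
    """Compare versions numerically, padding with zeros so '2.0' equals '2.0.0'."""
    a, b = parse_version(installed), parse_version(minimum)
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a >= b


def check_environment(minimums: Dict[str, str] = MINIMUMS) -> List[str]:
    """Return human-readable problems; an empty list means every check passed."""
    problems: List[str] = []
    for package, minimum in minimums.items():
        try:
            installed = version(package)
        except PackageNotFoundError:
            problems.append(f"{package}: not installed (need >= {minimum})")
            continue
        if not meets(installed, minimum):
            problems.append(f"{package}: {installed} installed, need >= {minimum}")
    return problems


if __name__ == "__main__":
    issues = check_environment()
    print("\n".join(issues) if issues else "All dependency checks passed.")
```

Comparing numeric tuples rather than raw strings matters here: as plain strings, "1.10" would sort before "1.9".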

9. Conclusions:

DeepSeek is a powerful AI model that excels in multilingual processing and code generation. It also stands out for its free access and open-source availability, providing flexibility for developers. However, it still lags behind models like ChatGPT in terms of advanced features, user-friendliness, professional support, and ethical safeguards.

While DeepSeek has the potential to be a leading AI assistant in the evolving landscape, particularly for bilingual and technical users, its lack of polish, server reliability, and the political concerns surrounding its Chinese base limit its broader appeal.

For most general users, ChatGPT remains the superior option. Its range of features, including image analysis, voice mode, and custom instructions, combined with its polished user experience and ongoing improvements, make it the go-to choice for professionals, creatives, and everyday users alike.

DeepSeek, however, remains an exciting option for those who prioritize cost, customizability, and speed, especially in multilingual and coding contexts.