Why Cost Per Insight and the Data-to-Decision Lifecycle Are Key

In today’s data-driven world, organizations are awash in raw information but starving for actionable insights. Traditional metrics like “cost per terabyte” or “number of reports generated” no longer capture the true value, or the true expense, of analytics. Cost Per Insight (CPI) measures the total resources (labor, infrastructure, software) required to produce a single actionable insight. The Data-to-Decision Lifecycle (D2D) tracks the end-to-end journey from data ingestion and cleaning, through analysis and visualization, to the final decision. Lowering CPI and shortening the D2D cycle matter because faster, cheaper insights translate directly into competitive advantage and improved ROI.

Defining “Cost Per Insight” and the “Data-to-Decision Lifecycle”

- Cost Per Insight (CPI): Total cost of data collection, preparation, storage, modelling, and human analysis divided by the number of insights delivered over a period.

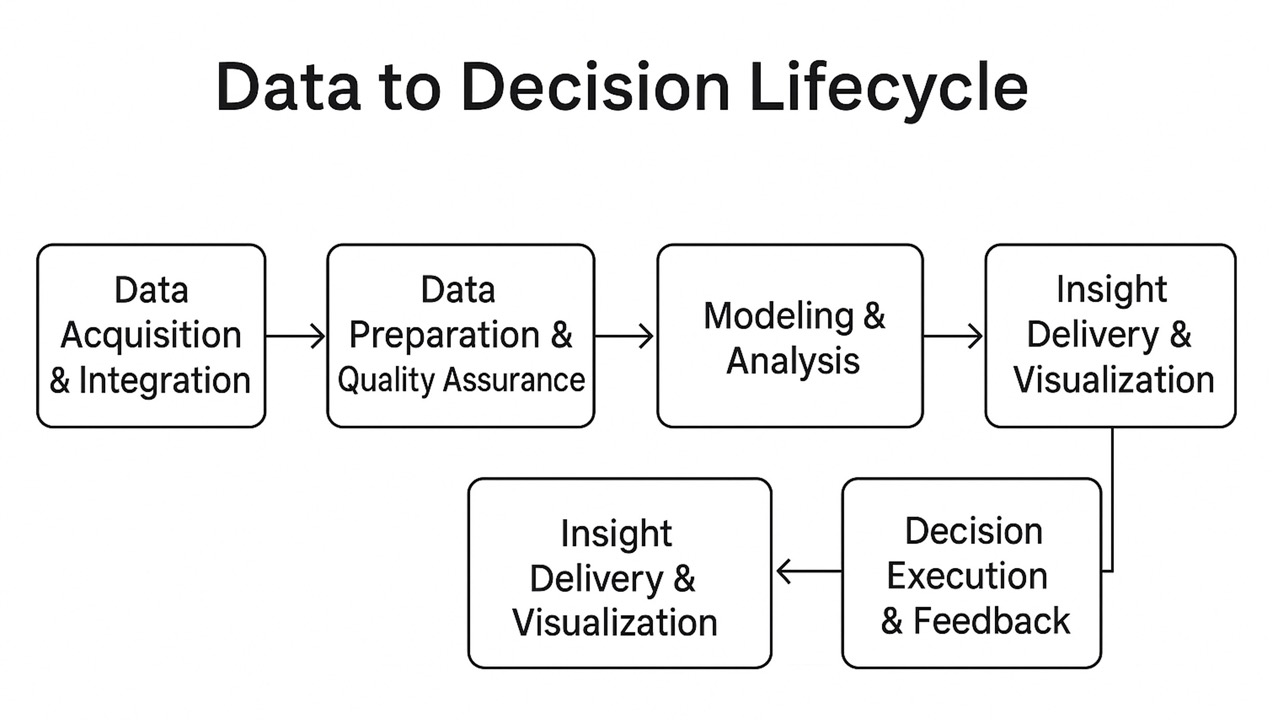

- Data-to-Decision Lifecycle (D2D):
  - Data Acquisition & Integration
  - Data Preparation & Quality Assurance
  - Modelling & Analysis
  - Insight Delivery & Visualization
  - Decision Execution & Feedback

Short D2D cycles mean more timely decisions; low CPI means more insights for the same budget.
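Both definitions reduce to simple bookkeeping. The minimal Python sketch below assumes costs for one reporting period are tallied in a single currency and that each insight's journey is logged as a completion timestamp per D2D stage; the stage keys, cost categories, figures, and function names (`cost_per_insight`, `stage_durations`, `d2d_cycle_time`) are hypothetical, not a standard schema.

```python
from datetime import datetime, timedelta

# Hypothetical keys for the five D2D stages listed above, in order.
D2D_STAGES = ["acquisition", "preparation", "analysis", "delivery", "decision"]


def cost_per_insight(costs: dict[str, float], insights_delivered: int) -> float:
    """CPI = total cost for a period / number of insights delivered in that period."""
    if insights_delivered <= 0:
        raise ValueError("CPI is undefined when no insights were delivered")
    return sum(costs.values()) / insights_delivered


def stage_durations(data_arrived: datetime, stage_done: dict[str, datetime]) -> dict[str, timedelta]:
    """Time spent in each stage, measured between consecutive completion timestamps."""
    durations = {}
    previous = data_arrived
    for stage in D2D_STAGES:
        durations[stage] = stage_done[stage] - previous
        previous = stage_done[stage]
    return durations


def d2d_cycle_time(data_arrived: datetime, stage_done: dict[str, datetime]) -> timedelta:
    """End-to-end D2D time: from data arrival until the decision stage completes."""
    return stage_done["decision"] - data_arrived


# Hypothetical cost figures for one reporting period.
costs = {"labor": 120_000.0, "infrastructure": 45_000.0, "software": 15_000.0}
print(cost_per_insight(costs, insights_delivered=18))  # 10000.0

# Hypothetical completion times for one insight's journey through the lifecycle.
t0 = datetime(2024, 1, 1)
done = {
    "acquisition": t0 + timedelta(hours=6),
    "preparation": t0 + timedelta(hours=30),
    "analysis": t0 + timedelta(hours=40),
    "delivery": t0 + timedelta(hours=44),
    "decision": t0 + timedelta(hours=48),
}
print(d2d_cycle_time(t0, done))  # 2 days, 0:00:00
durations = stage_durations(t0, done)
bottleneck = max(durations, key=durations.get)
print(bottleneck)  # preparation
```

Splitting the cycle time into per-stage durations is what makes it actionable: in this made-up example, preparation alone takes 24 of the 48 hours.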

The High Cost of Data Centralization: Real-World Examples

Centralizing data into a single lake or warehouse may seem efficient, but in practice:

- Siloed Ingestion Pipelines: Multiple teams build redundant ETL processes, inflating costs and maintenance overhead.

- Data Quality Bottlenecks: 45% of data professionals report spending the majority of their time on data preparation rather than analysis (bigdatawire.com).

- Underutilized Compute: Idle capacity in centralized clusters leads to wasted spend on cloud VMs or on-prem hardware.

Consider a Fortune 500 retailer that built a monolithic data warehouse with 50 TB of historical sales and inventory data. The cost to run nightly batch jobs and manage the cluster exceeded $200,000/month, yet only 15% of that data ever powered timely marketing or supply-chain decisions.
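The retailer's figures imply that data which actually informs decisions costs far more than the headline rate suggests. A quick calculation with the numbers above, assuming the 15% share refers to data volume:

```python
monthly_cost_usd = 200_000  # nightly batch jobs + cluster management, per the example
total_tb = 50               # historical sales and inventory data
useful_share = 0.15         # assumed: fraction of the volume that powered timely decisions

useful_tb = total_tb * useful_share
cost_per_total_tb = monthly_cost_usd / total_tb
cost_per_useful_tb = monthly_cost_usd / useful_tb

print(f"Nominal cost:   ${cost_per_total_tb:,.0f} per TB per month")         # $4,000
print(f"Effective cost: ${cost_per_useful_tb:,.0f} per useful TB per month")  # $26,667
```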

Quantifying the Bleeding: How Enterprises Suffer

- Time → Delay: Enterprises can lose $3–5 million per year due to delayed insights that miss market windows.

- Labor → Waste: With 10 FTEs devoted to data prep and analysis at $170,000 each per year, the three-year cost exceeds $5 million (tei.forrester.com).

- Opportunity Cost: Missed cross-sell or up-sell opportunities can erode 2–4% of revenue annually in competitive industries.
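The labor and opportunity-cost figures are plain arithmetic; spelling them out makes it easy to substitute an organization's own inputs. The $170,000 is read here as an annual per-FTE cost, and the revenue figure is a made-up placeholder.

```python
# Figures quoted above; $170,000 is assumed to be the annual cost per FTE.
fte_count = 10
annual_cost_per_fte = 170_000
years = 3

labor_cost = fte_count * annual_cost_per_fte * years
print(f"Three-year labor cost: ${labor_cost:,}")  # Three-year labor cost: $5,100,000

# Hypothetical annual revenue, used only to translate the 2–4% range into dollars.
annual_revenue = 500_000_000
low, high = annual_revenue * 0.02, annual_revenue * 0.04
print(f"Annual opportunity cost: ${low:,.0f} to ${high:,.0f}")  # $10,000,000 to $20,000,000
```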

The Case for Decentralization: Benefits Explained

Decentralizing analytics workloads (e.g., via edge compute, departmental analytics, or federated approaches) brings:

- Localized Processing: Insights generated close to the source reduce data movement and latency.

- Customized Models: Teams tailor analytics to their unique data characteristics.

- Elastic Scaling: Resources allocated dynamically where needed, minimizing idle capacity.
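One way to picture localized processing is federated analytics over summary statistics: each department reduces its raw records to a count, a sum, and a sum of squares, and only those three numbers travel to the center, which combines them into the exact global mean and variance. The department names and values below are hypothetical, and this is a sketch of the idea rather than any particular product's mechanism.

```python
from dataclasses import dataclass


@dataclass
class LocalSummary:
    """Sufficient statistics for a mean and variance: all that leaves a site."""
    count: int
    total: float
    total_sq: float


def summarize(values: list[float]) -> LocalSummary:
    """Runs next to the data: reduce raw records to three numbers."""
    return LocalSummary(len(values), sum(values), sum(v * v for v in values))


def combine(summaries: list[LocalSummary]) -> tuple[float, float]:
    """Runs centrally: merge site summaries into a global mean and population variance."""
    n = sum(s.count for s in summaries)
    total = sum(s.total for s in summaries)
    total_sq = sum(s.total_sq for s in summaries)
    mean = total / n
    return mean, total_sq / n - mean * mean


# Hypothetical per-department order values; the raw lists never leave their site.
sites = {
    "north": [120.0, 80.0, 100.0],
    "south": [60.0, 90.0],
    "online": [150.0, 110.0, 130.0, 70.0],
}
summaries = [summarize(values) for values in sites.values()]
mean, variance = combine(summaries)
print(f"mean={mean:.2f}, variance={variance:.2f}")  # mean=101.11, variance=765.43
```

The sum-of-squares formula is the easiest to read but can lose precision when values are large relative to their spread; a production version would merge per-site (count, mean, M2) triples with a numerically stable parallel-variance update instead.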

Federated Machine Learning: A Simple Conceptual Architecture

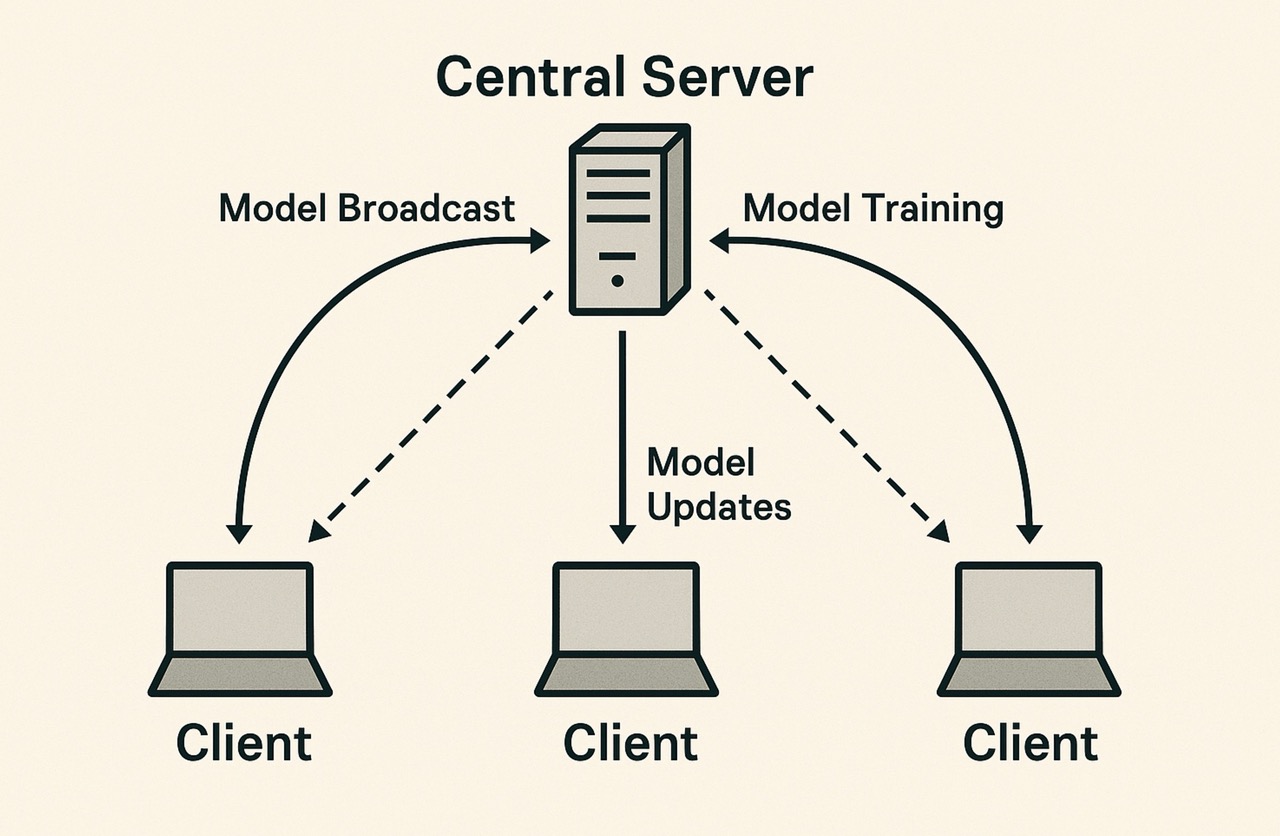

Federated Learning (FL) distributes model training across multiple clients without moving raw data to a central server.


- Central Server initializes a global model.

- Clients (e.g., edge devices, branch servers) receive the model, train locally on private data, and send only updates back.

- Server aggregates updates (e.g., via FedAvg) to refine the global model.

- Iterations continue until convergence.

Refer to the conceptual diagram above for a visual guide.
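The loop above can be sketched in plain Python. This is a toy under stated assumptions: a one-feature linear model, full-batch gradient descent on each client, synchronous rounds in which every client participates, and synthetic data. `local_train` and `fedavg_round` are hypothetical names, not the API of any FL framework. Aggregation follows the FedAvg idea of weighting each client's returned model by its sample count; only the `(w, b)` tuples cross the client boundary, while the `(x, y)` samples stay inside each client's list.

```python
import random


def local_train(weights, data, lr=0.1, epochs=5):
    """Client side: a few epochs of full-batch gradient descent on private data.

    Model: y ≈ w * x + b, trained with mean squared error.
    """
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def fedavg_round(global_weights, clients):
    """Server side: broadcast the model, collect local results, average them by sample count."""
    results = [(local_train(global_weights, data), len(data)) for data in clients]
    total = sum(n for _, n in results)
    w = sum(weights[0] * n for weights, n in results) / total
    b = sum(weights[1] * n for weights, n in results) / total
    return w, b


# Hypothetical clients, each privately holding noisy samples of y = 3x + 1.
rng = random.Random(42)
clients = []
for size in (40, 100, 60):
    xs = [rng.uniform(-1, 1) for _ in range(size)]
    clients.append([(x, 3 * x + 1 + rng.gauss(0, 0.1)) for x in xs])

global_weights = (0.0, 0.0)  # the server initializes the global model
for _ in range(50):  # a fixed round count stands in for a convergence test
    global_weights = fedavg_round(global_weights, clients)
print(global_weights)  # close to (3.0, 1.0)
```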

Opportunities with Decentralized Machine Learning

- Cost Efficiency: A study published on ScienceDirect reports that FL approaches cut training communication costs by 5% and energy consumption by 4.6% in real-world deployments.

- Regulatory Compliance: Data stays on-premises or in region, aiding GDPR, HIPAA, and other mandates.

- Scalable Collaboration: Partnerships across institutions (e.g., healthcare, finance) can jointly train models without sharing sensitive data.

Production Challenges in Federated Learning

- System Heterogeneity: Varying hardware, network, and availability across clients can slow training rounds.

- Statistical Heterogeneity: Non-i.i.d. data distributions across clients may degrade model convergence.

- Security Risks: Model updates can leak information if not secured (necessitating techniques like differential privacy or secure aggregation).

- Operational Complexity: Orchestrating rounds, monitoring client participation, and handling dropouts add engineering overhead.
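To make the security and dropout points concrete, here is a minimal server-side sketch in the spirit of differentially private federated averaging: each received update is clipped to a maximum L2 norm, Gaussian noise scaled to that norm is added to the sum, and clients that dropped out (passed as `None`) are skipped. The function names and parameter values are hypothetical placeholders, and the sketch performs no privacy accounting, so on its own it does not certify any (ε, δ) guarantee.

```python
import math
import random


def clip_update(update, clip_norm):
    """Scale an update so its L2 norm is at most clip_norm (bounds any one client's influence)."""
    norm = math.sqrt(sum(u * u for u in update))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [u * scale for u in update]


def private_aggregate(updates, clip_norm=1.0, noise_multiplier=1.0, rng=None):
    """Average this round's updates after clipping and adding Gaussian noise to their sum.

    Clients that dropped out are passed as None and skipped.
    """
    rng = rng or random.Random()
    received = [clip_update(u, clip_norm) for u in updates if u is not None]
    if not received:
        raise RuntimeError("no client updates arrived this round")
    sigma = noise_multiplier * clip_norm  # noise std scales with the clipping bound
    summed = [sum(column) for column in zip(*received)]
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    return [v / len(received) for v in noisy]


# Hypothetical round: the third client dropped out, the second sent an outsized update.
updates = [[0.3, -0.4], [3.0, 4.0], None, [0.1, 0.2]]
print(private_aggregate(updates, noise_multiplier=0.0))  # roughly [0.333, 0.2]: clipping only
print(private_aggregate(updates, noise_multiplier=1.0, rng=random.Random(7)))  # clipped + noised
```

Clipping alone already limits how far one misbehaving or outlier client can pull the global model; the added noise is what would, with proper accounting, make individual contributions hard to infer.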

Benchmarking Cost Per Insight: Federated vs. Centralized

Below is an enriched comparison between Centralized and Federated approaches, illustrating not only Cost Per Insight (CPI) but also other critical attributes that impact the overall Data-to-Decision performance.

| Attribute | Centralized ML | Federated Learning | Notes / Example |
|---|---|---|---|
| Cost Per Insight (CPI) | $10,000 | $8,500 (–15%) | Savings from reduced data transfer and compliance overhead |
| Average Latency (data arrival → insight) | 48 hrs | 12 hrs | Local training cuts ingestion-to-insight time by ~75% |
| Data Transfer Volume | 100 TB/month | 10 TB/month (–90%) | Only model updates sent vs. full datasets |
| Privacy & Compliance | Moderate (requires DLP tools) | High (data never leaves source) | Easier GDPR/HIPAA adherence |
| Scalability | Limited by central cluster capacity | Near-linear with additional clients | Each new client brings its own compute |
| Model Accuracy Impact | +2% (global view) | –1% (local heterogeneity) | May require more rounds to converge but preserves privacy |
| Operational Complexity | Medium (standard MLOps pipelines) | High (orchestration, client management) | Needs monitoring of client availability and secure aggregation |
| Security Requirements | TLS encryption, access control | Secure aggregation, differential privacy layers | Federated setups demand cryptographic protocols to prevent update leakage |
| Typical Use Case | Cross-department BI dashboards | Multi-branch medical imaging, mobile device AI | Centralized for unified reporting; federated for sensitive or distributed datasets |

Example Claim: In a pilot with a large bank network, shifting anti-fraud model training to a federated setup reduced monthly data egress by 85% and cut CPI by 18%, while reducing insight latency from 36 hrs to under 8 hrs.

This richer view highlights that while federated learning often brings significant cost, privacy, and latency advantages, it also introduces extra complexity and, at times, a small loss of model accuracy that must be managed through techniques such as personalized fine-tuning or advanced aggregation.

Data Sovereignty and Privacy Preservation

Federated Learning inherently aligns with Data Sovereignty principles:

- No Raw Data Transfer: Personal, sensitive, or proprietary data remains local.

- Privacy-Preserving Techniques: Incorporate homomorphic encryption, differential privacy, or secure multi-party computation to guard updates.
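Secure aggregation can be illustrated with pairwise additive masking, a lightweight multi-party technique: every pair of clients shares a random mask that one adds and the other subtracts, so each masked update looks random on its own while the masks cancel in the server's sum. The sketch below is a toy under loud assumptions: updates are already quantized to non-negative integers, a single seeded RNG stands in for pairwise key agreement (so it provides no real security), and dropout recovery, which practical protocols handle with secret sharing, is omitted. The hospital names and function names are hypothetical.

```python
import random

MODULUS = 2**32  # all arithmetic is done modulo a fixed ring size


def pairwise_masks(client_ids, dim, seed=0):
    """Toy stand-in for key agreement: one shared random mask per client pair.

    A real protocol derives each pair's mask from a shared secret; here one seeded
    RNG plays that role, so this sketch offers NO actual security.
    """
    rng = random.Random(seed)
    masks = {cid: [0] * dim for cid in client_ids}
    for i, a in enumerate(client_ids):
        for b in client_ids[i + 1:]:
            shared = [rng.randrange(MODULUS) for _ in range(dim)]
            masks[a] = [(m + s) % MODULUS for m, s in zip(masks[a], shared)]  # a adds
            masks[b] = [(m - s) % MODULUS for m, s in zip(masks[b], shared)]  # b subtracts
    return masks


def mask_update(update, mask):
    """Client side: hide a quantized update behind its combined mask."""
    return [(u + m) % MODULUS for u, m in zip(update, mask)]


def aggregate(masked_updates):
    """Server side: sum the masked vectors; pairwise masks cancel, leaving the true sum."""
    return [sum(column) % MODULUS for column in zip(*masked_updates)]


updates = {"hospital_a": [5, 12, 7], "hospital_b": [3, 0, 9], "hospital_c": [10, 4, 1]}
masks = pairwise_masks(list(updates), dim=3, seed=123)
masked = [mask_update(updates[c], masks[c]) for c in updates]
print(aggregate(masked))  # [18, 16, 17]
```

The server learns only the element-wise sum, which is exactly what federated averaging needs; it never sees any single hospital's update in the clear.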

A key advantage of Federated Learning is that data never leaves its source enclave, which is crucial for regulated industries. For example:

- Healthcare:
  - King’s College London & NVIDIA implemented FL across multiple NHS Trusts, training on local imaging data without sharing patient records.
  - Brain tumour segmentation studies applied differential privacy to protect patients during FL training for MRI analysis.

- Finance:
  - IBM Research built a privacy-preserving FL system to detect anomalies in transactional data across banks without exposing raw records.
  - Lucinity’s FinCrime Platform uses FL to share model insights for anti-money laundering across institutions, maintaining GDPR compliance.

The Future of Federated Learning

FL is poised to grow as:

- Edge AI proliferates in IoT, automotive, and mobile.

- Privacy Regulations tighten globally, increasingly restricting where and how personal data can be moved and processed.

- Open-Source Frameworks (e.g., OpenFL, FATE) and standardization efforts lower barriers to adoption.

- Hybrid Architectures combine centralized pre-training with federated fine-tuning for the best of both worlds.

By embracing decentralization and FL, enterprises can drive down Cost Per Insight, accelerate the Data-to-Decision Lifecycle, and unlock new collaboration—and competitive—opportunities.

Conclusion

As data volumes surge and regulatory demands tighten, organizations can no longer afford high Cost Per Insight or slow Data-to-Decision cycles. Centralized architectures, while familiar, incur steep data-movement, latency, and compliance costs. Federated Learning—and broader decentralization—offers a compelling alternative:

- Reduced CPI & Latency: Keeping data local and sending only model updates shrinks transfer volumes and speeds up insight delivery.

- Enhanced Privacy & Sovereignty: Sensitive data stays behind organizational boundaries, simplifying GDPR, HIPAA, and other mandates.

- Scalable Collaboration: Institutions can share learnings without exposing raw records, unlocking cross-enterprise innovation.

- Future-Ready AI: Edge proliferation, open FL frameworks, and hybrid pre-training/fine-tuning architectures will continue to expand federated use cases.

While federated approaches introduce orchestration and security complexities, the trade-offs are rapidly narrowing through standardized toolkits, cryptographic techniques, and MLOps integrations. By embracing decentralization today, enterprises can achieve faster, cheaper, and more compliant analytics—transforming raw data into actionable insights with unprecedented speed and trust.

References

- Gil Press, Data scientists spend 60% of their time on cleaning and organizing data, Forbes (2016)

- Survey Shows Data Scientists Spend Time Cleaning Data, DATAVERSITY (2015)

- Federated learning may provide a solution for future digital health challenges, King’s College London (2019)

- First Privacy-Preserving Federated Learning System, NVIDIA Developer (2018)

- Privacy-preserving Federated Brain Tumour Segmentation, PMC (2020)

- Privacy preservation for federated learning in health care, PMC (2005)

- Privacy-Preserving Federated Learning for Finance, IBM Research Blog (2023)

- Federated Learning in FinCrime, Lucinity (2024)

- Turning Machine Learning to Federated Learning in Minutes with NVIDIA FLARE, NVIDIA Developer (2023)

- The future of digital health with federated learning, Nature Digital Medicine (2020)