Understanding Fine-Tuning in the AI Era

In the rapidly evolving landscape of artificial intelligence and generative AI, fine-tuning has emerged as a critical technique that transforms generic AI models into specialized, enterprise-ready solutions. But what exactly is fine-tuning?

Fine-tuning is the process of taking a pre-trained foundation model—such as GPT, LLaMA, or BERT—and further training it on domain-specific data to adapt its behavior and knowledge to particular use cases. Think of it as taking a brilliant generalist and teaching them to become an expert in your specific field.

A model such as OpenAI’s GPT-4 is trained on vast amounts of general internet data, which makes it knowledgeable about many topics but not necessarily expert in your company’s products, policies, or industry-specific terminology. Fine-tuning bridges this gap by continuing the model’s training on your proprietary data, teaching it to understand your business context, speak your company’s language, and follow your specific guidelines.

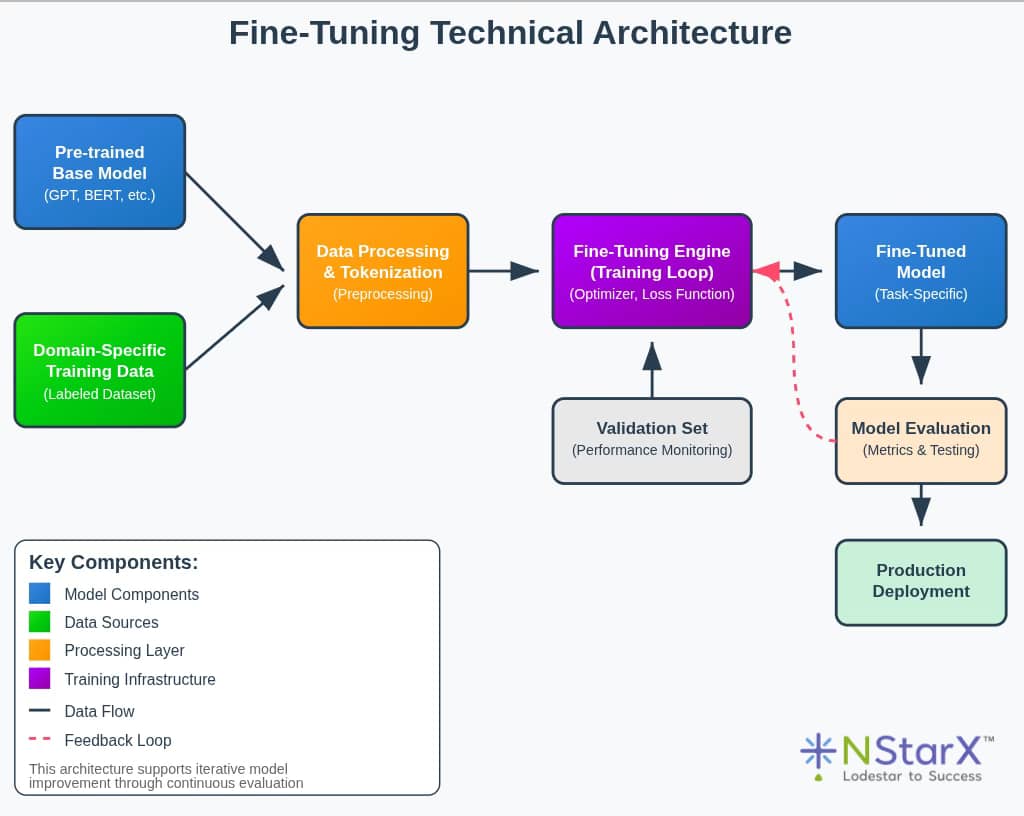

The process involves several key steps:

- Data Preparation: Curating high-quality, domain-specific training data

- Model Selection: Choosing the appropriate base model for your use case

- Training Configuration: Setting hyperparameters and training strategies

- Evaluation: Testing the fine-tuned model’s performance

- Deployment: Integrating the customized model into production systems
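As a rough illustration of the data preparation step above, the sketch below converts question and answer pairs into chat-style JSONL records, dropping empty and duplicate pairs along the way. The `messages` layout is an assumption modeled on the chat format that several hosted fine-tuning APIs accept, so check your provider's documentation for the exact schema. The function names, system prompt, and sample pairs are hypothetical.

```python
import json


def to_chat_record(question: str, answer: str, system_prompt: str) -> dict:
    """Wrap one Q&A pair in a chat-style training record (assumed schema)."""
    return {
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": question.strip()},
            {"role": "assistant", "content": answer.strip()},
        ]
    }


def build_jsonl(pairs, system_prompt: str) -> str:
    """Drop empty and duplicate pairs, then emit one JSON object per line."""
    seen = set()
    lines = []
    for question, answer in pairs:
        key = (question.strip().lower(), answer.strip().lower())
        if not key[0] or not key[1] or key in seen:
            continue  # skip incomplete or repeated examples
        seen.add(key)
        lines.append(json.dumps(to_chat_record(question, answer, system_prompt)))
    return "\n".join(lines)


# Hypothetical examples, not real training data.
pairs = [
    ("What is Basel III?", "Basel III is an international regulatory framework for banks."),
    ("What is Basel III? ", "Basel III is an international regulatory framework for banks."),
    ("", "An answer with no question."),
]
jsonl = build_jsonl(pairs, "You are a banking compliance assistant.")
print(jsonl)
```

Real pipelines usually add further checks (length limits, PII scrubbing, train and validation splits), but deduplication and schema consistency are a reasonable first pass.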

This isn’t just academic theory—it’s becoming a business imperative for organizations serious about AI adoption.

The Critical Importance of Fine-Tuning

Fine-tuning has evolved from a nice-to-have optimization to a mandatory requirement for enterprise AI success. Here’s why:

1. Domain Specificity and Accuracy

Generic AI models often struggle with industry-specific terminology, context, and nuances. A base model might confuse “Basel III” with a Swiss city rather than understanding it as crucial banking regulation. Fine-tuning on domain data narrows these gaps and can substantially improve accuracy for domain-specific tasks.

2. Compliance and Risk Management

In regulated industries like finance, healthcare, and legal services, AI outputs must adhere to strict compliance requirements. Fine-tuning allows organizations to embed regulatory knowledge and approved language patterns directly into their models, reducing compliance risks.

3. Competitive Differentiation

As McKinsey observed, “If you have [a generative model], your competitor probably has it as well… The likely moat will be customization.” While everyone can access the same base models, fine-tuning on proprietary data creates unique competitive advantages.

4. Cost Efficiency

Counter-intuitively, fine-tuning often reduces operational costs. A smaller, fine-tuned model can outperform larger generic models on specific tasks while requiring fewer computational resources for inference.

5. Data Privacy and Security

Fine-tuning enables organizations to train models on sensitive data within secure environments, ensuring proprietary information doesn’t leak to third-party services while still benefiting from AI capabilities.

Figure 1 illustrates a simple example of a fine-tuning technical architecture:

Figure 1: Fine Tuning Technical Architecture (Sample Representation)

Real-World Success Stories: The Financial Impact of Fine-Tuning

The business impact of fine-tuning extends far beyond theoretical improvements. Here are compelling examples of enterprises achieving measurable success:

American Express: Fraud Detection Revolution

American Express implemented fine-tuning for fraud detection using generative adversarial networks (GANs) to create synthetic fraud data, then fine-tuned their detection models on this augmented dataset. The results were remarkable:

- 30% reduction in false positives

- Improved detection of emerging fraud patterns

- Enhanced customer experience through fewer legitimate transactions being declined

H2O.ai Customer Success Stories

H2O.ai’s Enterprise LLM Studio has delivered quantifiable benefits across multiple clients:

- 70% reduction in AI expenses by fine-tuning open-source models instead of using expensive API calls

- 75% improvement in inference latency

- 5x increase in request handling capacity with the same infrastructure

American Express: Operational Efficiency Gains

Beyond fraud detection, American Express achieved operational improvements through fine-tuned AI assistants:

- Travel counselors: Average call times reduced by 1 minute through AI-assisted travel information retrieval

- Software engineering: 10% reduction in developer workload through fine-tuned coding assistants

Bloomberg: Domain Expertise at Scale

Bloomberg’s creation of BloombergGPT—a 50-billion parameter model pre-trained on financial data—demonstrated the power of domain-specific training:

- 20% improvement in volatility prediction over traditional methods

- Superior performance on finance-specific NLP tasks

- Enhanced named entity recognition for financial terms

Enterprise-Wide Impact

According to industry surveys:

- 92% of enterprises reported improved model accuracy after fine-tuning

- 74% of C-suite executives plan to customize foundation models with proprietary data

- Organizations using fine-tuning report significant ROI in efficiency and competitive advantage

When Fine-Tuning Goes Wrong: Cautionary Tales

Not all fine-tuning initiatives succeed. Understanding failure modes is crucial for avoiding costly mistakes:

The Privacy Leak Disaster

Research experiments have shown that improperly fine-tuned models can leak sensitive training data. In one study, fine-tuning on datasets containing personal information led to 19% PII leakage in model outputs. For financial institutions, this could mean inadvertently exposing customer account numbers or personal details—a compliance nightmare.
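One way to get an early signal on this kind of leakage is to scan a sample of model outputs for PII-like strings before release. The sketch below is a minimal example that uses two illustrative regular expressions (email addresses and US Social Security number formats). The patterns, function names, and sample outputs are assumptions for illustration, and a production audit would need far broader coverage.

```python
import re

# Illustrative patterns only; real audits need many more categories.
PII_PATTERNS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def find_pii(text: str) -> list:
    """Return (label, matched_text) pairs for every pattern hit in text."""
    hits = []
    for label, pattern in PII_PATTERNS.items():
        hits.extend((label, m.group()) for m in pattern.finditer(text))
    return hits


def leakage_rate(outputs: list) -> float:
    """Fraction of outputs that contain at least one PII-like match."""
    if not outputs:
        return 0.0
    flagged = sum(1 for text in outputs if find_pii(text))
    return flagged / len(outputs)


# Hypothetical model outputs.
samples = [
    "Your balance inquiry has been received.",
    "Please contact jane.doe@example.com for details.",
    "The customer's SSN is 123-45-6789.",
    "Basel III sets minimum capital requirements.",
]
print(f"Leakage rate: {leakage_rate(samples):.0%}")
```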

Meta’s Galactica: The Hallucination Problem

Meta’s Galactica model, designed for scientific knowledge, was trained on a large corpus of scientific texts but still produced authoritative-sounding hallucinations. The public backlash was so severe that Meta pulled the demo after just three days. This highlights the critical need for robust evaluation and alignment processes.

The Overfitting Trap

Some enterprises have fine-tuned models on small datasets expecting dramatic improvements, only to see minimal gains or even performance degradation. Without sufficient high-quality data, fine-tuning can lead to overfitting, where models memorize training examples rather than learning generalizable patterns.
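A common guard against overfitting is early stopping: hold out a validation set, track its loss after each epoch, and stop once it no longer improves. The sketch below shows only the stopping logic on a hypothetical list of validation losses. The function name, `patience` default, and loss values are illustrative assumptions.

```python
def best_epoch_with_early_stopping(val_losses, patience=2, min_delta=0.0):
    """Return (best_epoch, best_loss), stopping after `patience` epochs without improvement."""
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # validation loss has stalled or is rising
    return best_epoch, best_loss


# Hypothetical validation losses: improving at first, then rising as the model memorizes.
val_losses = [0.92, 0.71, 0.64, 0.66, 0.70, 0.75]
print(best_epoch_with_early_stopping(val_losses))
```

In this example the checkpoint from epoch 2 (zero-indexed) would be kept, since the later epochs only increase validation loss.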

Bias Amplification Issues

Companies have discovered that fine-tuning on biased historical data can amplify discriminatory patterns. For instance, an insurance company fine-tuning on historical claims data might inadvertently learn to discriminate against certain neighborhoods or demographics, creating both ethical and legal problems.
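A simple starting point for detecting this kind of pattern is to compare favorable-outcome rates across groups. The sketch below computes a disparate impact ratio (lowest group rate divided by highest group rate) over hypothetical claim decisions. The 0.8 threshold follows the "four-fifths" heuristic from US employment guidance and is used here only as an assumed example cutoff, not as a legal standard for insurance.

```python
from collections import defaultdict


def approval_rates(records):
    """records: iterable of (group, approved) pairs. Returns approval rate per group."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, was_approved in records:
        totals[group] += 1
        approved[group] += int(was_approved)
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact_ratio(records):
    """Lowest group approval rate divided by the highest (1.0 means parity)."""
    rates = approval_rates(records)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody approved in any group, so no disparity to measure
    return min(rates.values()) / highest


# Hypothetical model decisions for two neighborhoods.
records = (
    [("neighborhood_a", True)] * 8 + [("neighborhood_a", False)] * 2
    + [("neighborhood_b", True)] * 5 + [("neighborhood_b", False)] * 5
)
ratio = disparate_impact_ratio(records)
print(f"Disparate impact ratio: {ratio:.3f}")
print("Needs review" if ratio < 0.8 else "Within assumed threshold")
```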

The Cost Overrun Reality

One analysis suggested that hosting a fine-tuned model can cost 10-100x more than using managed APIs due to infrastructure requirements. Some organizations have abandoned fine-tuning projects after discovering the true total cost of ownership.
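Whether self-hosting is cheaper depends heavily on request volume. The sketch below is a back-of-the-envelope break-even calculation that compares a fixed monthly hosting cost against per-token API pricing. Every number in it is a hypothetical placeholder, and the model ignores engineering time, retraining, and utilization effects.

```python
def monthly_api_cost(requests_per_month, tokens_per_request, price_per_1k_tokens):
    """Pay-per-use cost: scales linearly with volume."""
    return requests_per_month * tokens_per_request / 1000 * price_per_1k_tokens


def break_even_requests(fixed_hosting_cost, tokens_per_request, price_per_1k_tokens):
    """Monthly request volume at which fixed hosting cost equals API spend."""
    cost_per_request = tokens_per_request / 1000 * price_per_1k_tokens
    return fixed_hosting_cost / cost_per_request


# Hypothetical inputs: replace with your own quotes and measurements.
HOSTING_COST = 6000.0      # dollars per month for dedicated GPU hosting
TOKENS_PER_REQUEST = 1000  # prompt plus completion
API_PRICE = 0.01           # dollars per 1,000 tokens

threshold = break_even_requests(HOSTING_COST, TOKENS_PER_REQUEST, API_PRICE)
print(f"Break-even at about {threshold:,.0f} requests per month")
print(f"API cost at 50,000 requests: ${monthly_api_cost(50_000, TOKENS_PER_REQUEST, API_PRICE):,.2f}")
```

Under these assumed numbers, a workload of 50,000 requests per month would cost far less through the API than through dedicated hosting, which is consistent with the cost gap described above for low-volume deployments.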

Typical Enterprise Fine-Tuning Challenges

Organizations embarking on fine-tuning initiatives commonly encounter several significant challenges:

1. Data Quality and Availability

- Challenge: Obtaining high-quality, labeled, domain-specific training data

- Impact: Poor data quality leads to suboptimal model performance

- Reality: Data preparation often represents the most burdensome cost in fine-tuning projects

2. Technical Complexity

- Challenge: Fine-tuning requires specialized ML expertise and computational resources

- Impact: Organizations without skilled teams struggle to implement effective solutions

- Reality: The technical barrier often prevents smaller organizations from attempting fine-tuning

3. Privacy and Security Concerns

- Challenge: Ensuring sensitive data doesn’t leak during training or inference

- Impact: Regulatory violations and competitive intelligence exposure

- Reality: Financial institutions must navigate complex data protection requirements

4. Computational Costs

- Challenge: Training large models requires significant GPU resources

- Impact: High infrastructure costs can exceed project budgets

- Reality: Fine-tuning a large model can cost tens of thousands of dollars

5. Evaluation and Validation

- Challenge: Measuring fine-tuned model quality for generative tasks

- Impact: Deploying poorly performing models can damage business outcomes

- Reality: Human evaluation is often required, adding time and cost
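Automated metrics can complement human review by screening a held-out test set before people look at the harder cases. The sketch below implements two common text-overlap metrics, exact match and token-level F1, using simple whitespace tokenization. The normalization and the sample strings are simplifying assumptions, and overlap metrics do not capture factual correctness on their own.

```python
from collections import Counter


def normalize(text: str) -> list:
    """Lowercase and split on whitespace (a deliberately simple tokenizer)."""
    return text.lower().split()


def exact_match(prediction: str, reference: str) -> bool:
    return normalize(prediction) == normalize(reference)


def token_f1(prediction: str, reference: str) -> float:
    """Harmonic mean of token precision and recall against the reference."""
    pred_tokens = normalize(prediction)
    ref_tokens = normalize(reference)
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


# Hypothetical model output and reference answer.
prediction = "the capital ratio is 8 percent"
reference = "minimum capital ratio is 8 percent"
print(exact_match(prediction, reference))
print(round(token_f1(prediction, reference), 3))
```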

6. Integration Complexity

- Challenge: Incorporating fine-tuned models into existing enterprise systems

- Impact: Deployment delays and system incompatibilities

- Reality: Integration can be as challenging as the fine-tuning itself

7. Regulatory Compliance

- Challenge: Ensuring fine-tuned models meet industry regulations

- Impact: Compliance violations and audit failures

- Reality: Financial services require extensive documentation and validation

Introducing Fine-Tuning as a Service (FTAAS): The Enterprise Solution

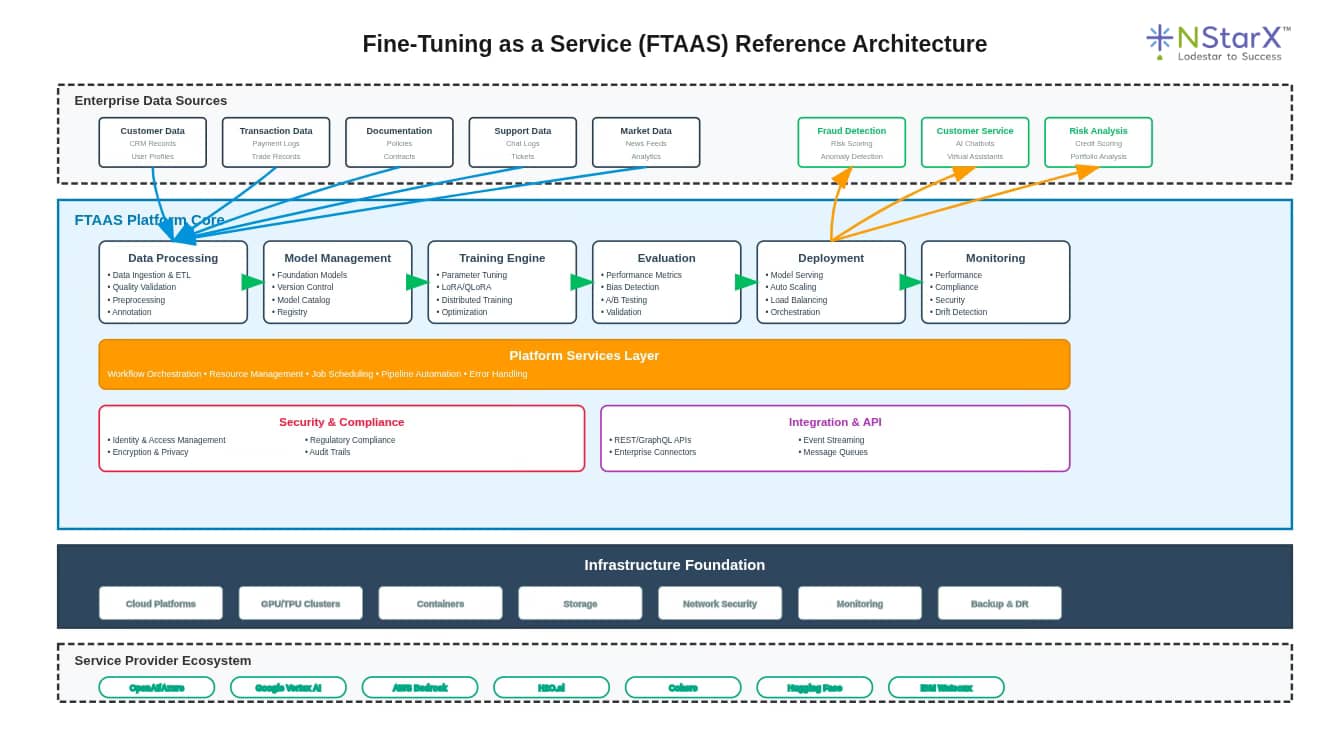

Fine-Tuning as a Service (FTAAS) emerges as the solution to these challenges, offering managed platforms that handle the complexity of fine-tuning while providing enterprise-grade security, scalability, and support.

What is FTAAS?

FTAAS is a managed service that handles the end-to-end process of customizing large language models for specific enterprise needs—from data preparation and model training to validation, deployment, and ongoing monitoring.

Key FTAAS Providers and Examples:

OpenAI Fine-Tuning API

Allows customers to fine-tune GPT models via simple API calls. Enterprises upload training data, and OpenAI handles the infrastructure and training process.

Azure OpenAI Service

Provides OpenAI’s models with enterprise security, allowing fine-tuning within Azure’s compliance framework—particularly attractive to financial institutions.

Google Vertex AI

Offers fine-tuning capabilities for Google’s models with integrated ML infrastructure and security controls.

H2O.ai Enterprise LLM Studio

Provides on-premises fine-tuning solutions with no-code interfaces, supporting distributed training and model compression.

Cohere’s Enterprise Fine-Tuning Suite

Offers API-driven fine-tuning with emphasis on efficiency and enterprise integration.

FTAAS Success Examples:

Morgan Stanley’s Wealth Management AI

Although Morgan Stanley’s wealth management assistant initially relied on retrieval-augmented generation rather than fine-tuning, its partnership with OpenAI demonstrates how managed services enable large financial institutions to deploy AI safely and effectively.

The Hartford Insurance

Working with Accenture, The Hartford leveraged managed fine-tuning services to customize generative AI models for document processing, enhancing employee efficiency across their value chain.

Enterprise Consulting Integrations

Major consulting firms like Accenture, Deloitte, and Infosys are delivering FTAAS solutions, combining their domain expertise with managed platforms to accelerate enterprise adoption.

Figure 2 shows a diagrammatic representation of the Fine-Tuning as a Service (FTAAS) architecture:

Figure 2: Fine Tuning As a Service Reference Architecture

Financial Metrics: How Fine-Tuning Drives Business Value

Fine-tuning delivers measurable financial benefits across multiple dimensions:

| Metric Category | Specific Metrics | Typical Improvements | Business Impact |
|---|---|---|---|
| Operational Efficiency | Processing time reduction | 30-75% faster task completion | Lower operational costs, higher throughput |
| | Call/interaction duration | 1-5 minutes saved per interaction | Increased agent productivity |
| | Automation rate | 20-50% increase in automated tasks | Reduced labor costs |
| Cost Optimization | Infrastructure costs | 70% reduction vs. large model APIs | Direct cost savings |
| | False positive rates | 30% reduction in fraud false positives | Reduced investigation costs |
| | Training/onboarding time | 40% reduction in new employee training | Faster time-to-productivity |
| Revenue Enhancement | Customer satisfaction | 15-25% improvement in satisfaction scores | Higher retention and NPS |
| | Response accuracy | 20-40% improvement in correct responses | Better customer experience |
| | Time to market | 50% faster model deployment | Accelerated innovation |
| Risk Mitigation | Compliance adherence | 90%+ reduction in policy violations | Reduced regulatory risk |
| | Data privacy protection | Zero data leakage in controlled environments | Avoided regulatory penalties |
| | Model reliability | 95%+ uptime for fine-tuned models | Business continuity |
| Competitive Advantage | Market differentiation | Unique AI capabilities vs. competitors | Market share growth |
| | Innovation speed | 2-3x faster AI solution development | First-mover advantages |
| | Customer personalization | 60% improvement in personalized responses | Increased customer loyalty |

Current Challenges and Barriers to FTAAS Deployment

Despite its benefits, enterprises face several obstacles when implementing FTAAS:

1. Organizational Readiness

- Skills Gap: Limited internal expertise in AI and ML

- Change Management: Resistance to AI-driven process changes

- Budget Constraints: Difficulty justifying AI investments

2. Technical Barriers

- Data Silos: Fragmented data across enterprise systems

- Legacy Integration: Challenges connecting AI to existing infrastructure

- Performance Requirements: Meeting real-time processing demands

3. Governance and Compliance

- Regulatory Uncertainty: Evolving AI governance requirements

- Risk Assessment: Difficulty quantifying AI-related risks

- Audit Requirements: Complex documentation and validation needs

4. Vendor Selection

- Platform Proliferation: Too many FTAAS options without clear differentiation

- Vendor Lock-in: Concerns about platform dependencies

- Service Quality: Inconsistent performance across providers

5. Strategic Alignment

- Use Case Identification: Difficulty selecting optimal initial applications

- ROI Measurement: Challenges in quantifying business value

- Scaling Strategy: Unclear path from pilot to enterprise-wide deployment

Best Practices for FTAAS Implementation

Successful FTAAS deployment requires adherence to proven best practices:

1. Strategic Planning

- Start with Clear Use Cases: Focus on specific business problems with measurable outcomes

- Pilot First: Begin with low-risk, high-value applications

- Build Internal Champions: Identify and train AI advocates across the organization

2. Data Excellence

- Invest in Data Quality: Clean, curate, and organize training data before fine-tuning

- Implement Data Governance: Establish clear policies for data usage and protection

- Create Feedback Loops: Continuously improve data quality based on model performance

3. Technical Implementation

- Choose the Right Platform: Select FTAAS providers based on security, compliance, and integration capabilities

- Design for Scale: Plan infrastructure to support growing AI workloads

- Implement Monitoring: Deploy comprehensive model performance tracking

4. Risk Management

- Conduct Bias Audits: Regularly test models for discriminatory behavior

- Implement Privacy Controls: Use techniques like differential privacy for sensitive data

- Plan for Failures: Develop fallback procedures and human oversight mechanisms
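For the differential privacy item above, a widely used training-time approach is DP-SGD: clip each example's gradient to a fixed norm, sum the clipped gradients, add Gaussian noise scaled to that norm, and then average. The sketch below shows one such aggregation step in plain Python. The function names and parameter values are illustrative assumptions, and the code does not compute the resulting privacy budget, which requires a separate privacy accountant.

```python
import math
import random


def clip(grad, max_norm):
    """Scale a gradient vector down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def private_mean_gradient(per_example_grads, max_norm, noise_multiplier, rng):
    """Clip per-example gradients, add Gaussian noise to their sum, then average."""
    clipped = [clip(g, max_norm) for g in per_example_grads]
    dim = len(clipped[0])
    summed = [sum(g[i] for g in clipped) for i in range(dim)]
    sigma = noise_multiplier * max_norm  # noise scale tied to the clipping bound
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    return [n / len(clipped) for n in noisy]


# Hypothetical per-example gradients for a two-parameter model.
grads = [[3.0, 4.0], [0.6, 0.8]]
rng = random.Random(0)  # seeded only to make the demo repeatable
print(private_mean_gradient(grads, max_norm=1.0, noise_multiplier=1.0, rng=rng))
```

Clipping bounds how much any single record can influence the update, and the noise masks what remains. Larger noise multipliers give stronger privacy at the cost of accuracy.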

5. Organizational Change

- Train Teams: Invest in AI literacy across the organization

- Establish Governance: Create cross-functional AI steering committees

- Communicate Benefits: Clearly articulate AI value to stakeholders

6. Continuous Improvement

- Monitor Performance: Track model accuracy and business metrics

- Iterate Regularly: Update models with new data and feedback

- Scale Gradually: Expand successful pilots to broader applications

The Future of Fine-Tuning as a Service

The FTAAS landscape is rapidly evolving, with several emerging trends shaping its future:

Emerging Innovations

Real-Time Fine-Tuning

Future FTAAS platforms may enable continuous model updates as new data becomes available, allowing models to adapt to changing business conditions in real-time.

Automated Model Selection

AI-powered systems will automatically select optimal base models and fine-tuning strategies based on data characteristics and business requirements.

Federated Fine-Tuning

Organizations will collaborate on model training without sharing sensitive data, enabling industry-wide AI improvements while maintaining privacy.
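The core aggregation step in many federated setups is federated averaging: each participant trains locally and shares only model weights, which a coordinator combines in proportion to each participant's data volume. The sketch below shows that weighted average on toy weight vectors. The function name and client values are hypothetical, and real systems typically add secure aggregation and privacy protections on top.

```python
def federated_average(client_updates):
    """Combine client weights, weighting each client by its number of examples.

    client_updates: list of (num_examples, weights) pairs with equal-length weights.
    """
    total_examples = sum(n for n, _ in client_updates)
    dim = len(client_updates[0][1])
    return [
        sum(n * weights[i] for n, weights in client_updates) / total_examples
        for i in range(dim)
    ]


# Hypothetical updates from two institutions; raw training data never leaves either one.
updates = [
    (100, [1.0, 2.0]),  # smaller institution
    (300, [3.0, 6.0]),  # larger institution
]
print(federated_average(updates))
```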

Hybrid Approaches

Integration of fine-tuning with retrieval-augmented generation (RAG) and other techniques will create more powerful and flexible AI systems.

Alternative and Complementary Approaches

Retrieval-Augmented Generation (RAG)

RAG provides dynamic access to current information without retraining models, offering advantages for frequently changing data.
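The basic RAG pattern is to retrieve the most relevant documents for a query and place them in the prompt, so the model answers from current data instead of from its weights. The sketch below is a toy version that ranks documents by word overlap. Production systems generally use embedding-based vector search instead, and the documents, function names, and prompt template here are illustrative assumptions.

```python
def tokenize(text: str) -> set:
    """Lowercase, strip basic punctuation, and split into a set of words."""
    return set(text.lower().replace("?", "").replace(".", "").split())


def retrieve(query: str, documents: list, k: int = 1) -> list:
    """Return the k documents sharing the most words with the query."""
    query_tokens = tokenize(query)
    ranked = sorted(documents, key=lambda d: len(query_tokens & tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: list, k: int = 1) -> str:
    """Assemble a prompt that grounds the model in the retrieved context."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"


# Hypothetical policy snippets that could change without any retraining.
documents = [
    "The travel policy allows business class on flights over eight hours.",
    "Expense reports are due within 30 days of travel.",
    "The fraud team reviews flagged transactions daily.",
]
query = "When are expense reports due?"
print(build_prompt(query, documents))
```

Updating the answer only requires editing the document store, which is why RAG suits frequently changing data.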

Prompt Engineering

Advanced prompting techniques can achieve some benefits of fine-tuning without the complexity of model retraining.

Model Distillation

Creating smaller, specialized models from larger ones offers deployment efficiency while maintaining performance.
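In the classic formulation of knowledge distillation, the smaller student model is trained to match the larger teacher's output distribution, softened by a temperature so that relative preferences among less likely classes carry signal. The sketch below computes that loss (KL divergence between softened distributions, scaled by the temperature squared) for a single example. The logit values are hypothetical, and real training would combine this term with a standard loss on ground-truth labels.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over temperature-scaled logits."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by temperature squared."""
    teacher_probs = softmax(teacher_logits, temperature)
    student_probs = softmax(student_logits, temperature)
    kl = sum(
        p * math.log(p / q)
        for p, q in zip(teacher_probs, student_probs)
        if p > 0
    )
    return temperature ** 2 * kl


# Hypothetical logits for a three-class task.
teacher = [4.0, 1.0, 0.5]
student = [2.5, 1.5, 0.5]
print(round(distillation_loss(teacher, student), 4))
```

The loss is zero when the student's softened distribution matches the teacher's exactly and grows as the two diverge.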

Multi-Agent Systems

Orchestrating multiple specialized models may provide more flexibility than single fine-tuned models for complex enterprise workflows.

Market Evolution

The FTAAS market is expected to consolidate around a few major platforms while specialized providers serve niche industries. Integration with existing enterprise software will become seamless, making AI adoption as simple as installing traditional business applications.

Regulatory Landscape

As AI governance frameworks mature, FTAAS providers will need to embed compliance capabilities directly into their platforms, making regulatory adherence automatic rather than manual.

Conclusion

Fine-Tuning as a Service represents a pivotal evolution in enterprise AI adoption, transforming the complex challenge of model customization into an accessible, managed capability. As we’ve seen through real-world examples from American Express, Bloomberg, and numerous other organizations, the financial and operational benefits of fine-tuning are both measurable and substantial.

The evidence is compelling: organizations that embrace FTAAS gain significant competitive advantages through improved accuracy, reduced costs, enhanced customer experiences, and better risk management. The 30% reduction in fraud false positives at American Express, the 70% cost savings achieved by H2O.ai customers, and the 92% of enterprises reporting improved accuracy after fine-tuning all point to the same conclusion—fine-tuning is not optional for serious AI deployment.

However, success requires more than just adopting the technology. Organizations must approach FTAAS strategically, starting with clear use cases, investing in data quality, choosing appropriate platforms, and implementing robust governance frameworks. The failures we’ve examined—from privacy leaks to bias amplification—serve as important reminders that fine-tuning must be done thoughtfully and responsibly.

Looking ahead, FTAAS will continue to evolve, becoming more automated, more secure, and more integrated with existing enterprise systems. While alternative approaches like RAG and prompt engineering offer valuable complementary capabilities, fine-tuning remains the gold standard for creating truly specialized, high-performing AI systems.

For organizations like those served by NStarX, the message is clear: FTAAS is not just a technological capability—it’s a strategic imperative for competing in an AI-driven future. The question is not whether to adopt fine-tuning, but how quickly and effectively you can implement it to drive business value while managing risks.

The enterprises that master FTAAS today will be the ones defining the competitive landscape tomorrow.

References

- Rafay Systems – “Fine-Tuning AI Models with Tuning-as-a-Service Platforms”

- Oracle – “Fine-Tuning LLMs: A Comprehensive Guide”

- Acorn Labs – “Fine-Tuning LLM: A Comprehensive Learning Center Guide”

- Cohere – “Fine-Tuning for Enterprises”

- SuperAnnotate – “Best GenAI Fine-Tuning Tools for Enterprises”

- Accenture Newsroom – “Accenture Launches Specialized Services for Foundation Models”

- BusinessWire – “H2O.ai Launches Enterprise LLM Studio”

- American Express Engineering Blog – “Generative AI Meets Open Source at American Express”

- LinkedIn – “American Express Unveils Its Approach to Generative AI”

- AiInvest – “AI Revolution in Finance: Generative Tools Rewriting Investment Rules”

- Invisible Technologies – “Fine-Tuning: Too Costly and Complex”

- Galileo AI – “Optimizing LLM Performance: RAG vs Fine-tune vs Both”

- Medium – “Fine-Tuning LLM on Sensitive Data Lead to 19% PII Leakage”

- Source Allies – “What Enterprise GenAI Really Needs”

- LinkedIn – “92% Enterprises Report Improved Model Accuracy Fine-Tuning”

- Infosys – “AI Imperatives 2024: Specialized Tasks”

- RWS – “Fine-Tuning Generative AI”

- Microsoft Tech Community – “Enterprise Best Practices for Fine-Tuning Azure OpenAI Models”

- Medium – “Fine-Tune Any Large Language Model with Axolotl”

- Axolotl AI – “Scale and Customize LLMs”

- Dynamiq – “Generative AI and LLMs in Banking”

- Toolify AI – “Automating 8 Billion Decisions: How American Express Uses AI”

- AI Superior – “LLM Fine-Tuning Companies”

- Monte Carlo Data – “The Moat for Enterprise AI is RAG + Fine-Tuning”

- Slashdot – “AI Fine-Tuning for Enterprise”