In this article, the NStarX Engineering team examines how enterprises can safeguard their competitive advantage in an era of aggressive AI platform expansion.

The artificial intelligence landscape has fundamentally shifted. What began as infrastructure providers offering API access has evolved into a complex ecosystem where the very platforms enterprises depend on are increasingly becoming their competitors. As model providers like OpenAI, Anthropic, and Google expand beyond foundational AI services into application-layer products, enterprises face an unprecedented challenge: how to leverage powerful AI capabilities without surrendering their competitive edge.

This isn’t just a theoretical concern. The data flowing through AI APIs today is tomorrow’s training ground for competing products. The workflows you optimize using AI models become the blueprints for direct competitors. Understanding this dynamic—and taking proactive steps to protect your intellectual property—has become critical for enterprise survival in the AI age.

The New AI Power Brokers: Who Are Model Providers and What Do They Offer?

Model providers have evolved far beyond their original infrastructure-focused mandates. Today’s leading AI companies—OpenAI, Anthropic, Google (DeepMind), Microsoft, and others—operate across multiple layers of the AI stack:

Foundation Layer: These companies provide the core large language models (LLMs) that power countless applications. Models like GPT-4, Claude, Gemini, and others serve as the computational backbone for everything from chatbots to complex business automation.

API and Integration Layer: Beyond raw model access, these providers offer sophisticated APIs, fine-tuning capabilities, and integration tools that make it easier for enterprises to embed AI into their workflows.

Application Layer: Increasingly, model providers are launching their own end-user applications. OpenAI’s ChatGPT Enterprise, Anthropic’s Claude for Work, Google’s Gemini for Google Workspace, and Microsoft’s Copilot suite directly compete with enterprise solutions.

Agent and Orchestration Layer: The newest frontier involves autonomous AI agents that can perform complex, multi-step tasks. OpenAI’s Agent Mode and Anthropic’s Claude Code represent direct incursions into territory traditionally occupied by specialized enterprise software.

The strategic challenge for enterprises is clear: the companies providing your AI infrastructure are simultaneously developing products that could replace your internal solutions or compete with your market offerings.

What Constitutes Enterprise IP in the Age of AI?

Understanding what constitutes intellectual property in an AI-driven world requires expanding traditional definitions. For enterprises, IP now encompasses several critical categories:

Process Intelligence: How your organization actually gets work done: the sequence of decisions, exception handling, approval workflows, and coordination patterns that make your business unique. This procedural knowledge often represents decades of operational refinement.

Domain-Specific Data Patterns: The relationships between different data points in your industry, customer behavior patterns, market timing insights, and predictive indicators that give you a competitive advantage.

Integration Architecture: How your various systems communicate, the custom APIs you’ve built, the data transformation logic that connects disparate platforms, and the automation rules that govern cross-system workflows.

Contextual Decision Making: The institutional knowledge about when to deviate from standard procedures, how to handle edge cases, and what factors influence decision-making in your specific business context.

Customer Interaction Patterns: The nuanced understanding of your customer base—their preferences, pain points, communication styles, and the relationship dynamics that drive loyalty and retention.

Operational Exceptions and Edge Cases: Perhaps most valuably, how your organization handles the scenarios that fall outside standard operating procedures—the judgment calls, creative solutions, and adaptive responses that human expertise provides.

The Pattern of Platform Appropriation: Real Examples of Model Provider Overreach

The history of platform companies absorbing successful third-party innovations is well-documented, and AI model providers are following this same playbook with concerning frequency.

The Coding Assistant Consolidation: GitHub Copilot initially ran on OpenAI’s Codex, a specialized coding model. As the tool gained traction, Microsoft systematically incorporated features that had been the domain of specialized developer tools, effectively eliminating entire categories of coding productivity startups.

The Windsurf Acquisition Pattern: Foundation Capital’s survival guide for Service-as-Software startups (Gupta & Garg, 2025) references the “recent Windsurf saga,” highlighting how promising startups sometimes receive preferential treatment from model providers (favorable API terms, early feature access, close technical collaboration) only to later find themselves acquired or competed against directly.

Search and Retrieval Integration: Companies that built sophisticated retrieval-augmented generation (RAG) systems found their competitive advantages diminished as model providers began offering native web search, document analysis, and knowledge retrieval capabilities directly within their own APIs and applications.

Workflow Automation Absorption: Specialized workflow automation tools that relied on AI models for task orchestration discovered that model providers were developing their own agent frameworks, complete with workflow management, task scheduling, and integration capabilities.

Customer Service Displacement: Enterprises that had developed sophisticated AI-powered customer service solutions using model provider APIs found themselves competing directly with native customer service offerings from those same providers.

The pattern is consistent: model providers observe successful use cases through their APIs, identify high-value application patterns, and then build competing solutions that leverage their foundational advantages.

The Dual Harm: Impact on Both Enterprises and Innovation Ecosystem

This platform expansion creates harmful dynamics that extend beyond individual companies:

For Enterprises: Companies that heavily integrate with model provider services find themselves in an increasingly dependent position. Their operational intelligence becomes training data for potential competitors, their process innovations get absorbed into generic offerings, and their switching costs increase as they become more entrenched in provider ecosystems.

For Innovation: The startup and solution provider ecosystem suffers from what investors call “platform risk.” Knowing that successful solutions may be absorbed by model providers creates a chilling effect on investment and innovation. Why fund a specialized AI application when the underlying platform might simply build a competing version?

Market Concentration: As model providers expand vertically, they consolidate power across multiple layers of the AI stack, reducing competition and limiting customer choice. This concentration can lead to decreased innovation, higher prices, and reduced customization options for enterprises with specific needs.

Knowledge Drain: Perhaps most concerningly, the flow of operational intelligence from enterprises to model providers represents a systematic transfer of institutional knowledge from the organizations that developed it to platforms that can then commercialize it broadly.

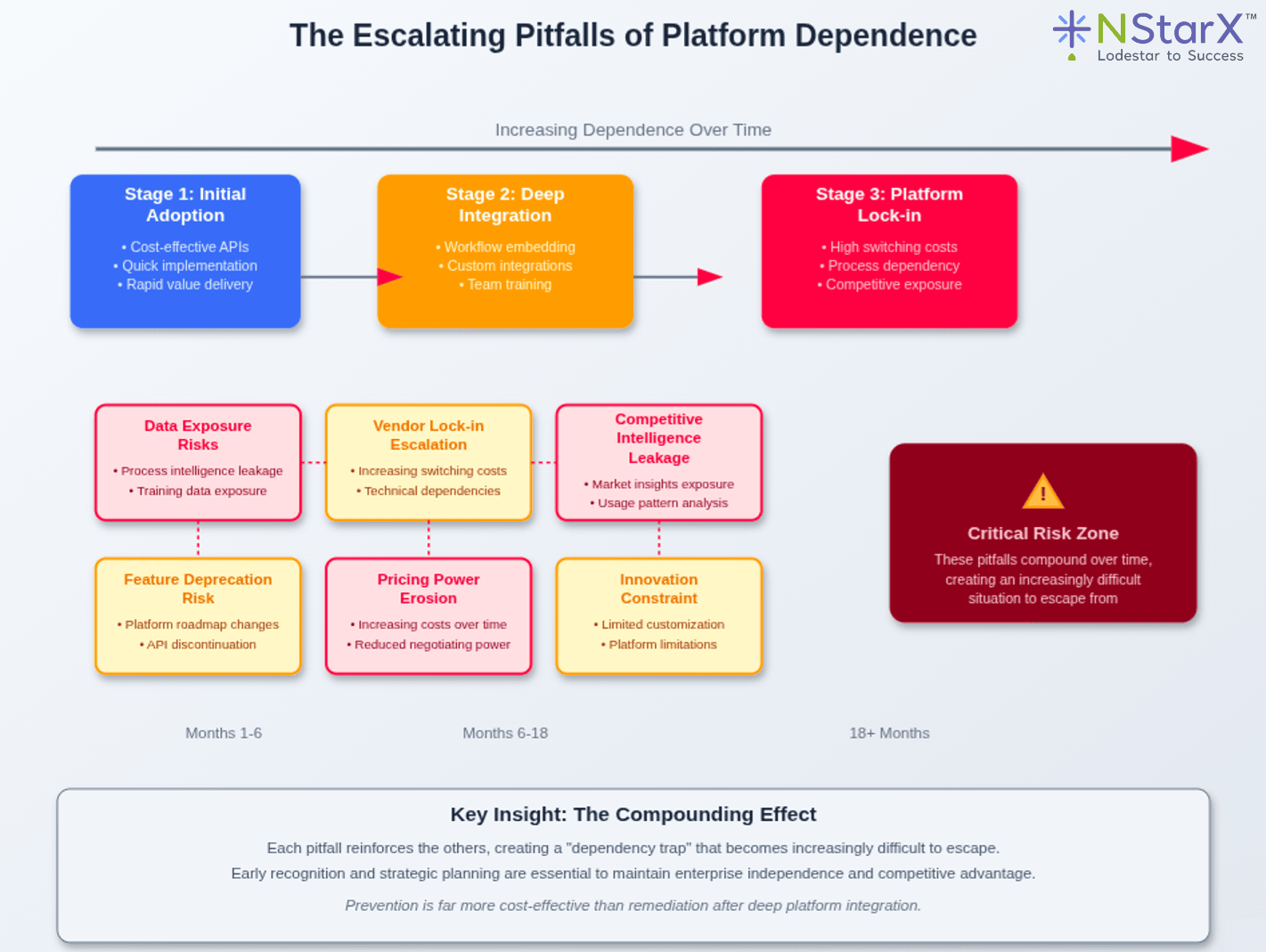

The Pitfalls of Platform Dependence

Heavy reliance on model providers creates several strategic vulnerabilities for enterprises:

Data Exposure Risks: Every API call potentially exposes your operational patterns, decision-making logic, and business processes to platform providers. This data can inform competitive product development, even when covered by privacy policies.

Vendor Lock-in Escalation: As enterprises integrate deeper with model provider ecosystems, switching costs rise sharply. Custom integrations, trained workflows, and embedded processes become difficult to migrate.

Competitive Intelligence Leakage: Model providers gain panoramic views of how different industries operate, which solutions are gaining traction, and where market opportunities exist. This intelligence can guide their product development in ways that directly compete with their customers.

Feature Deprecation Risk: Enterprises that build on provider-specific features face the risk of those capabilities being discontinued, modified, or repositioned as competitors to their own offerings.

Pricing Power Erosion: As dependence increases, model providers gain pricing power over their enterprise customers. What begins as cost-effective API access can evolve into expensive platform fees as switching becomes impractical.

Innovation Constraint: Heavy platform dependence can limit an enterprise’s ability to pursue innovative AI applications that might conflict with provider interests or roadmaps.

Figure 1 visualizes the core components of these pitfalls. Each component has multiple layers that need to be peeled back and evaluated.

Figure 1: Escalating Problem of Platform Dependence with Model Providers

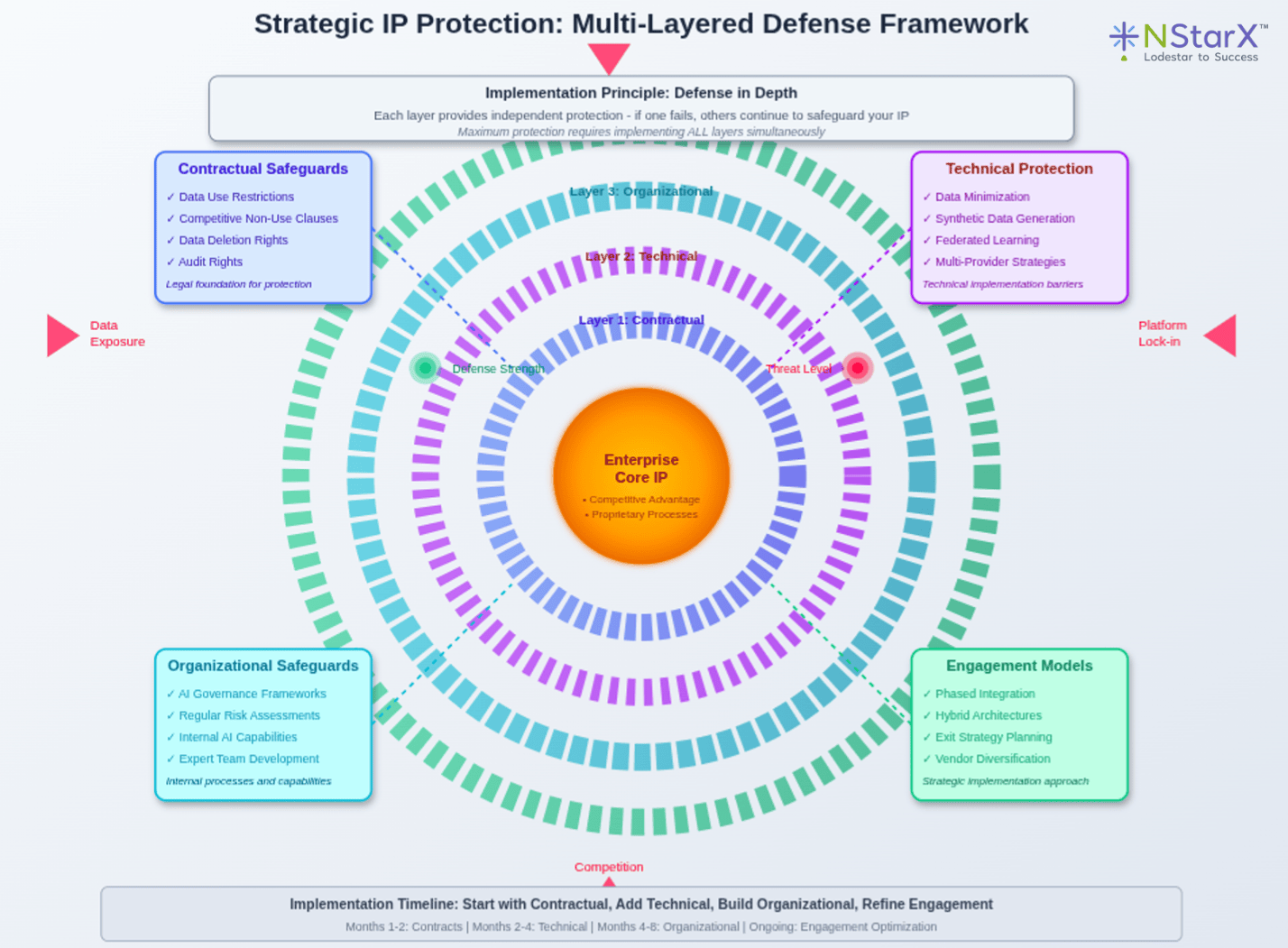

Best Practices for IP Protection: Building Strategic Guardrails

Protecting enterprise intellectual property in an AI-driven world requires a multi-layered approach:

Contractual Safeguards

Data Use Restrictions: Ensure contracts explicitly prohibit the use of your data for training competing models or developing competing products. Standard terms often allow broad data usage that enterprises don’t fully understand.

Competitive Non-Use Clauses: Include specific language preventing model providers from using insights gained from your usage patterns to develop products that compete in your market segments.

Data Deletion Rights: Maintain the contractual right to have your data purged from provider systems, including any derivative training data or model improvements based on your usage.

Audit Rights: Reserve the right to audit how your data is being used, stored, and potentially incorporated into provider products or services.

Technical Protection Measures

Data Minimization: Only send the minimum necessary data to external AI services. Implement preprocessing that removes sensitive context while preserving functionality.
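To make data minimization concrete, here is a minimal Python sketch of a redaction step that runs before a prompt leaves the enterprise. The regex rules, the `ACCT-` account-ID format, and the `redact`/`restore` helpers are hypothetical illustrations, not any specific product’s API; the key idea is that placeholders go out while the lookup table stays on-premises.

```python
from __future__ import annotations

import re

# Hypothetical patterns for illustration only; production systems usually rely on
# a vetted PII/entity-detection tool tuned to their own data.
# Order matters: the specific account-ID rule runs before the generic phone rule.
REDACTION_RULES = [
    ("ACCOUNT_ID", re.compile(r"\bACCT-\d{6,}\b")),
    ("EMAIL", re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")),
    ("PHONE", re.compile(r"\+?\d[\d\s().-]{7,}\d")),
]


def redact(text: str) -> tuple[str, dict[str, str]]:
    """Swap sensitive values for placeholders; the lookup table never leaves the enterprise."""
    mapping: dict[str, str] = {}
    for label, pattern in REDACTION_RULES:
        def substitute(match: re.Match, label: str = label) -> str:
            token = f"<{label}_{len(mapping) + 1}>"
            mapping[token] = match.group(0)
            return token

        text = pattern.sub(substitute, text)
    return text, mapping


def restore(text: str, mapping: dict[str, str]) -> str:
    """Put the original values back into the model's response, on-premises."""
    for token, original in mapping.items():
        text = text.replace(token, original)
    return text


if __name__ == "__main__":
    prompt = "Contact jane.doe@example.com or +1 (555) 010-2334 about ACCT-0042917 renewal terms."
    safe_prompt, table = redact(prompt)
    print(safe_prompt)
    # -> Contact <EMAIL_2> or <PHONE_3> about <ACCOUNT_ID_1> renewal terms.
```

Regexes only catch well-structured identifiers. Free-text context such as deal terms or internal process names still needs classification or human review, so a step like this complements organizational governance rather than replacing it.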

Synthetic Data Generation: Use synthetic data that preserves statistical properties while protecting actual operational details. This maintains AI effectiveness while reducing IP exposure.
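As one simple illustration of the idea, the sketch below learns each numeric column’s mean and standard deviation from real records and samples synthetic rows from independent normal distributions. The column names and values are hypothetical. The approach is deliberately basic: it preserves per-column averages and spread but discards correlations between columns, so a production generator would need to model the joint distribution and check that no synthetic record sits too close to a real one.

```python
from __future__ import annotations

import random
import statistics


def fit_marginals(rows: list[dict[str, float]]) -> dict[str, tuple[float, float]]:
    """Learn each column's mean and standard deviation from the real records."""
    params = {}
    for column in rows[0]:
        values = [row[column] for row in rows]
        params[column] = (statistics.mean(values), statistics.stdev(values))
    return params


def sample_synthetic(params: dict[str, tuple[float, float]], n: int, seed: int = 0) -> list[dict[str, float]]:
    """Draw n synthetic rows, sampling every column independently from a normal distribution."""
    rng = random.Random(seed)
    return [
        {column: rng.gauss(mu, sigma) for column, (mu, sigma) in params.items()}
        for _ in range(n)
    ]


if __name__ == "__main__":
    # Hypothetical real invoice records: order value (USD) and days until payment.
    real = [
        {"order_value": 120.0, "days_to_pay": 30.0},
        {"order_value": 95.0, "days_to_pay": 45.0},
        {"order_value": 130.0, "days_to_pay": 28.0},
        {"order_value": 110.0, "days_to_pay": 60.0},
        {"order_value": 145.0, "days_to_pay": 35.0},
    ]
    synthetic = sample_synthetic(fit_marginals(real), n=1000)
    print(len(synthetic), round(statistics.mean(r["order_value"] for r in synthetic), 1))
```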

Federated Learning Approaches: Where possible, keep sensitive data on-premises and only share model updates or aggregated insights rather than raw operational data.
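The federated pattern can be sketched with federated averaging (FedAvg): each site trains on its own data and shares only model weights, which a coordinator averages by sample count. The toy linear model, learning rate, and round counts below are assumptions chosen for illustration. Shared weights can still leak information about the underlying data, so real deployments typically add safeguards such as secure aggregation or differential privacy.

```python
from __future__ import annotations


def local_update(
    weights: tuple[float, float],
    data: list[tuple[float, float]],
    lr: float = 0.05,
    epochs: int = 50,
) -> tuple[tuple[float, float], int]:
    """Run gradient descent on one site's private (x, y) data; only the new weights leave the site."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        errors = [(w * x + b) - y for x, y in data]
        grad_w = 2 * sum(e * x for e, (x, _y) in zip(errors, data)) / n  # d(MSE)/dw
        grad_b = 2 * sum(errors) / n                                     # d(MSE)/db
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b), n


def federated_average(updates: list[tuple[tuple[float, float], int]]) -> tuple[float, float]:
    """Combine site weights, weighting each site by how many examples it trained on."""
    total = sum(n for _, n in updates)
    w = sum(weights[0] * n for weights, n in updates) / total
    b = sum(weights[1] * n for weights, n in updates) / total
    return (w, b)


def train(sites: list[list[tuple[float, float]]], rounds: int = 20) -> tuple[float, float]:
    """Coordinator loop: broadcast global weights, collect local updates, average them."""
    weights = (0.0, 0.0)
    for _ in range(rounds):
        updates = [local_update(weights, data) for data in sites]
        weights = federated_average(updates)
    return weights
```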

Multi-Provider Strategies: Avoid single points of dependency by architecting solutions that can work across multiple model providers, reducing platform-specific lock-in.
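A multi-provider architecture usually starts with a thin interface that application code depends on, with one adapter per provider behind it. The sketch below assumes a hypothetical `ModelProvider` protocol and uses stub adapters in place of real vendor SDKs, each of which has its own client library and request format.

```python
from __future__ import annotations

from typing import Protocol


class ProviderError(Exception):
    """Raised by an adapter when its backend is unavailable or rejects a request."""


class ModelProvider(Protocol):
    """The only surface application code depends on; each vendor gets its own adapter."""

    name: str

    def complete(self, prompt: str) -> str: ...


class ProviderRouter:
    """Try providers in priority order and fall back when one fails."""

    def __init__(self, providers: list[ModelProvider]) -> None:
        if not providers:
            raise ValueError("at least one provider is required")
        self.providers = providers

    def complete(self, prompt: str) -> tuple[str, str]:
        failures = []
        for provider in self.providers:
            try:
                return provider.name, provider.complete(prompt)
            except ProviderError as exc:
                failures.append(f"{provider.name}: {exc}")
        raise ProviderError("all providers failed: " + "; ".join(failures))


class StubProvider:
    """Hypothetical stand-in for a real vendor SDK adapter or a self-hosted model."""

    def __init__(self, name: str, available: bool = True) -> None:
        self.name = name
        self.available = available

    def complete(self, prompt: str) -> str:
        if not self.available:
            raise ProviderError("unavailable")
        return f"[{self.name}] {prompt}"


if __name__ == "__main__":
    router = ProviderRouter([StubProvider("vendor-a", available=False), StubProvider("self-hosted")])
    print(router.complete("Summarize Q3 churn drivers"))
    # -> ('self-hosted', '[self-hosted] Summarize Q3 churn drivers')
```

Because callers only see `ProviderRouter.complete`, swapping a vendor or adding a self-hosted model becomes a configuration change rather than a rewrite.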

Organizational Safeguards

AI Governance Frameworks: Establish clear policies about what data can be shared with external AI services and under what circumstances.
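A governance policy becomes enforceable when it is encoded as a check in the path to every external AI service. Assuming a hypothetical four-level data classification and illustrative destination names, a deny-by-default gate might look like this:

```python
from __future__ import annotations

from enum import IntEnum


class DataClass(IntEnum):
    """Hypothetical four-level data classification scheme, ordered by sensitivity."""

    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


# Hypothetical policy table: the most sensitive class each destination may receive.
POLICY: dict[str, DataClass] = {
    "external_api_standard_terms": DataClass.PUBLIC,
    "external_api_negotiated_terms": DataClass.INTERNAL,
    "self_hosted_model": DataClass.RESTRICTED,
}


def is_allowed(destination: str, classification: DataClass) -> bool:
    """Return True only if policy allows this data class to reach the destination."""
    ceiling = POLICY.get(destination)
    if ceiling is None:
        return False  # deny by default: unlisted destinations are not approved
    return classification <= ceiling
```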

Regular Risk Assessments: Continuously evaluate the competitive risks associated with your AI provider relationships as their product offerings evolve.

Internal AI Capabilities: Develop internal AI expertise to reduce dependence on external providers for critical applications and to better evaluate alternative solutions.

Engagement Model Design

Phased Integration: Start with non-critical applications and gradually expand AI integration as you better understand the competitive implications.

Hybrid Architectures: Design systems that combine external AI capabilities with proprietary logic, keeping your unique competitive advantages in-house.
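One way to picture a hybrid architecture: the proprietary decision logic runs in-house, and the external model only receives the outcome it needs to phrase a response. The discount rules, the `Account` fields, and the `generate` callback (standing in for whichever provider client is used) are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Account:
    tenure_years: int
    annual_spend: float
    open_escalations: int


def retention_discount(account: Account) -> float:
    """Proprietary decision logic (hypothetical rules) that stays in-house."""
    discount = 0.0
    if account.tenure_years >= 3:
        discount += 0.05
    if account.annual_spend >= 100_000:
        discount += 0.05
    if account.open_escalations > 0:
        discount += 0.02
    return min(discount, 0.10)  # cap the total discount at 10%


def draft_offer(account: Account, generate: Callable[[str], str]) -> str:
    """Send only the decided outcome to the external model, never the rules or raw account data."""
    percent = round(retention_discount(account) * 100)
    prompt = f"Write a short, friendly note offering a {percent}% renewal discount."
    return generate(prompt)
```

The external model sees a generic writing task; the judgment about who gets what offer, which is the competitive asset, never crosses the boundary.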

Exit Strategy Planning: Always maintain viable paths to migrate away from provider platforms if competitive conflicts emerge.

Figure 2 offers a simplified view of how an enterprise can safeguard its interests. Your data is your IP. Your workflows are your IP. How do you protect your core?

Figure 2: How Enterprises Can Protect Their IP When Working with Model Providers

The Build vs. Buy Strategic Advantage: How NStarX Enables Enterprise Independence

In this environment, companies like NStarX represent a crucial alternative approach that prioritizes enterprise independence and IP protection. Rather than relying heavily on external model providers, NStarX advocates for a “build over buy” strategy that keeps critical AI capabilities under enterprise control.

Sovereign AI Architecture: NStarX’s approach emphasizes building AI solutions that enterprises fully own and control, eliminating the data exposure risks inherent in cloud-based model services. This architectural choice ensures that your operational intelligence remains proprietary.

Domain-Specific Optimization: Instead of using general-purpose models that may later compete with your applications, NStarX helps enterprises develop AI capabilities specifically tailored to their unique requirements and business contexts.

Competitive Moat Preservation: By keeping AI development in-house, enterprises maintain their competitive advantages rather than contributing to the training data that might eventually power competing solutions.

Long-term Cost Control: While the initial investment in building internal AI capabilities may be higher, NStarX’s approach can provide better long-term cost predictability compared to increasing dependency on external platforms with growing pricing power.

Innovation Freedom: Internal AI capabilities provide enterprises with the freedom to pursue innovative applications without worrying about conflicts with provider interests or roadmaps.

The NStarX documentation emphasizes that enterprises need to think strategically about where they source their AI capabilities, particularly for applications that touch their core competitive advantages. Their framework helps organizations identify which AI capabilities should be built internally versus procured externally.

Charting the Future: Coexistence Strategies for the AI Ecosystem

The future relationship between enterprises and model providers doesn’t have to be zero-sum, but it will require new frameworks for coexistence:

For Model Providers

Clear Boundaries: Model providers should establish and communicate clear boundaries about which markets they will and won’t enter, providing enterprises with confidence to invest in complementary solutions.

Partnership Models: Instead of competing directly with enterprise customers, model providers could focus on enabling their success through improved infrastructure and tools rather than replacement applications.

Data Governance Transparency: Providers should offer greater transparency about how customer data influences their product development and provide stronger guarantees against competitive use.

For Enterprises

Strategic AI Portfolio Management: Enterprises should develop sophisticated frameworks for deciding which AI capabilities to build internally versus source externally, based on competitive sensitivity and strategic importance.

Hybrid Approaches: The most resilient strategy likely involves combining internal AI capabilities for competitively sensitive applications with external providers for commodity AI functions.

Industry Collaboration: Enterprises should consider collaborating within industries to develop shared AI capabilities that reduce collective dependence on individual providers while maintaining competitive differentiation.

Regulatory Engagement: Enterprises should engage with regulators to establish frameworks that prevent anti-competitive behavior in AI markets while preserving innovation incentives.

Future Enterprise Strategy Framework

The most successful enterprises will likely adopt a “concentric circles” approach to AI strategy:

Core Circle: Critical competitive advantages remain in-house using solutions like those provided by NStarX, ensuring complete control over the most valuable IP and processes.

Collaborative Circle: Industry-standard processes leverage shared AI capabilities developed through industry consortiums or specialized providers that don’t compete directly with enterprise customers.

Commodity Circle: Generic AI tasks use the most cost-effective providers available, with minimal competitive sensitivity and strong contractual protections.
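The three circles can be expressed as a simple triage rule that a governance team applies to each AI use case. The 1-5 sensitivity score, the threshold of 4, and the rule that keeps uncertain cases in the core are illustrative assumptions, not a prescribed NStarX method.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class Circle(Enum):
    CORE = "core"                    # build and operate in-house
    COLLABORATIVE = "collaborative"  # consortium or non-competing specialist provider
    COMMODITY = "commodity"          # most cost-effective provider, strong contract terms


@dataclass(frozen=True)
class Capability:
    name: str
    sensitivity: int          # hypothetical 1-5 competitive-sensitivity score
    industry_standard: bool   # does the process work much the same way across the industry?


def assign_circle(cap: Capability, core_threshold: int = 4) -> Circle:
    """Map a capability to a circle; higher sensitivity pulls it toward in-house control."""
    if cap.sensitivity >= core_threshold:
        return Circle.CORE
    if cap.sensitivity <= 1:
        return Circle.COMMODITY
    if cap.industry_standard:
        return Circle.COLLABORATIVE
    # Mid-sensitivity work unique to the business defaults inward: when in doubt, keep it in-house.
    return Circle.CORE
```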

This framework allows enterprises to benefit from AI advances while protecting their most valuable intellectual property and maintaining strategic independence.

Conclusion

The AI revolution has created unprecedented opportunities for enterprise innovation, but it has also introduced new forms of competitive risk. As model providers expand beyond infrastructure into applications and services, enterprises must become more strategic about protecting their intellectual property and competitive advantages.

The key is not to avoid AI adoption—that would be competitively suicidal in today’s market—but rather to approach it with sophisticated risk management and strategic planning. This means understanding what constitutes your most valuable IP, implementing technical and contractual safeguards, and making thoughtful decisions about which AI capabilities to build internally versus source externally.

Companies like NStarX offer valuable alternatives that prioritize enterprise independence and IP protection. Their build-over-buy approach provides a pathway for organizations to capture AI benefits while maintaining control over their competitive advantages.

The enterprises that thrive in the AI age will be those that can skillfully navigate between leveraging external AI capabilities and protecting their proprietary advantages. This requires new forms of strategic thinking, careful contract negotiation, technical architecture planning, and organizational governance.

The future of enterprise AI isn’t about choosing sides between internal development and external providers—it’s about making sophisticated decisions about where to draw the boundaries and how to manage the relationships in ways that preserve competitive advantage while enabling innovation.

As the AI landscape continues to evolve, enterprises that invest in understanding these dynamics and building appropriate safeguards will be best positioned to capture the benefits of AI while avoiding the pitfalls of platform dependence and IP erosion.

References

- Gupta, J., & Garg, A. (2025). When model providers eat everything: A survival guide for Service-as-Software startups. Foundation Capital. Retrieved from https://foundationcapital.com/when-model-providers-eat-everything-a-survival-guide-for-service-as-software-startups/

- Zuboff, S. (2019). The Age of Surveillance Capitalism: The Fight for a Human Future at the New Frontier of Power. PublicAffairs.

- Parker, G. G., Van Alstyne, M. W., & Choudary, S. P. (2016). Platform Revolution: How Networked Markets Are Transforming the Economy and How to Make Them Work for You. W. W. Norton & Company.

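- European Parliament & Council of the European Union. (2024). Regulation (EU) 2024/1689 laying down harmonised rules on artificial intelligence (Artificial Intelligence Act). Official Journal of the European Union.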

- Federal Trade Commission. (2024). Competition and Consumer Protection in the 21st Century: AI and Algorithms. FTC Technology Report.

- Brynjolfsson, E., & McAfee, A. (2014). The Second Machine Age: Work, Progress, and Prosperity in a Time of Brilliant Technologies. W. W. Norton & Company.

- Acemoglu, D., & Restrepo, P. (2018). The Race between Man and Machine: Implications of Technology for Growth, Factor Shares, and Employment. American Economic Review, 108(6), 1488-1542.

- Harvard Business Review. (2024). The Strategic Risks of AI Platform Dependence. Harvard Business Review Press.

- MIT Technology Review. (2024). Enterprise AI: Building vs. Buying in the Age of Foundation Models. MIT Technology Review Insights.