AUTHOR: NSTARX team that has been shipping high velocity GenAI applications into production. The blog below is captured through the lens of NStarX Engineering working with enterprises in their GenAI journey.

The Promise Versus the Reality: Where GenAI Stands Today

The generative AI revolution has captivated boardrooms worldwide, yet a troubling gap persists between pilot programs and production deployments. While adoption has surged dramatically—with GenAI usage doubling from 33% to 65% between 2023 and 2024—the journey from proof of concept to production remains fraught with challenges.

The statistics paint a sobering picture. Gartner predicts that at least 30% of generative AI projects will be abandoned after proof of concept by the end of 2025, citing poor data quality, inadequate risk controls, escalating costs, and unclear business value. Despite organizations investing an average of $110 million in GenAI initiatives in 2024 and global spending projected to reach $644 billion in 2025, only 10% of companies with revenues between $1-5 billion have fully integrated GenAI into their operations.

The POC-to-production chasm reveals a fundamental truth: technical capability alone doesn’t guarantee successful deployment. While 92% of Fortune 500 firms have adopted AI technology, most organizations are pursuing 20 or fewer experiments, and over two-thirds indicate that 30% or fewer of their experiments will be fully scaled in the next three to six months. The bottleneck isn’t innovation—it’s governance that can move at the speed of AI development.

When Governance Becomes a Cautionary Tale: The Deloitte Australia Case

Recent headlines delivered a stark reminder of what happens when governance fails. In October 2025, Deloitte Australia was forced to partially refund the $290,000 paid by the Australian government for a 237-page report riddled with AI hallucinations. The report, commissioned to review the Department of Employment and Workplace Relations’ welfare penalty automation system, contained fabricated academic references, non-existent book citations, and a completely invented quote attributed to a federal court judge.

University of Sydney researcher Dr. Christopher Rudge identified up to 20 errors in the original report, including references to imaginary works by real academics and misquoted legal judgments. The revised version eventually disclosed that Azure OpenAI’s GPT-4o had been used in the report’s creation—a detail conspicuously absent from the initial submission.

Why did this failure occur? The incident reveals several critical governance gaps:

- No human verification process for AI-generated content before client delivery

- Absence of mandatory disclosure requirements for GenAI usage

- Lack of output validation mechanisms to catch hallucinations

- Missing quality control checkpoints between AI generation and final submission

- Inadequate training on responsible AI use for consultants

Senator Barbara Pocock captured the severity perfectly: “Deloitte misused AI and used it very inappropriately: misquoted a judge, used references that are non-existent. I mean, the kinds of things that a first-year university student would be in deep trouble for.”

Success Stories: When Governance Enab-les Rather Than Inhibits

Contrast Deloitte’s failure with organizations that have successfully deployed GenAI at scale by implementing robust governance frameworks:

Whataburger’s Customer Intelligence Revolution: The restaurant chain with over 1,000 locations processes more than 10,000 online reviews weekly. By implementing GenAI through Dataiku with appropriate governance controls, they nearly doubled their topic discovery—uncovering 280,000 review topics compared to the previous 144,000—while maintaining data quality and accuracy standards.

Renault Group’s Ampere: This EV subsidiary deployed an enterprise version of Gemini Code Assist with governance frameworks that understand the company’s code base, standards, and conventions. The implementation included version control, code review processes, and human oversight mechanisms that ensured both innovation velocity and code quality.

Thomson Reuters: By adding Gemini Pro to their suite of approved large language models with comprehensive governance, they achieved 10x faster processing for certain tasks while maintaining their stringent legal and compliance requirements. Their success stemmed from clearly defined approval workflows and usage policies.

The common thread among successful implementations:

- Clear approval processes that don’t create bottlenecks

- Comprehensive but streamlined documentation requirements

- Real-time monitoring with human oversight

- Phased rollouts with continuous evaluation

- Cross-functional governance teams empowered to make decisions

The High Cost of Governance Gaps Across Development Stages

When governance is absent or inadequate across development, staging, and production environments, enterprises face cascading risks that compound with each deployment stage:

Development Environment Risks

Shadow AI proliferation: Without governance, developers use unapproved models and APIs, creating security vulnerabilities and compliance blind spots. Organizations report that 53% of employees don’t know how to get the most value from GenAI, leading to improvised solutions that bypass controls.

Training data contamination: Absence of data governance allows sensitive information to leak into training datasets. The financial impact can be severe—one study found that just five poisoned documents in a RAG system could steer model responses 90% of the time.

Bias embedding: Without diverse review processes during development, models inherit and amplify biases from training data. Research shows foundational LLMs frequently associate “engineer” or “CEO” with men and “nurse” or “teacher” with women, perpetuating harmful stereotypes.

Staging Environment Challenges

Inadequate testing protocols: Without structured red teaming and adversarial testing, vulnerabilities remain undiscovered until production. Microsoft’s analysis of 100+ GenAI products revealed that application security risks often stem from outdated dependencies and improper security engineering.

Version control chaos: Lack of model registries and versioning systems makes it impossible to track which model version produced specific outputs, eliminating accountability and reproducibility.

Configuration drift: Staging environments diverge from production settings without proper governance, creating the illusion of readiness while masking critical issues.

Production Deployment Catastrophes

Reputational damage: Public failures like Deloitte’s erode customer trust and brand value. The $290,000 direct cost pales compared to the reputational impact across their entire client base.

Regulatory penalties: The EU AI Act, effective August 2024, mandates risk management, human oversight, and transparency for high-risk AI systems. Non-compliance can result in fines up to 6% of global annual revenue.

Operational failures: Without monitoring and audit trails, models drift undetected, producing increasingly erroneous outputs. Organizations report that 47% have experienced at least one negative consequence from GenAI use.

Legal liability: Fabricated citations and erroneous outputs create legal exposure, particularly in regulated industries like finance, healthcare, and legal services.

Financial losses: Failed implementations waste investment—with average enterprise spending exceeding $110 million annually on GenAI initiatives, governance failures represent massive capital destruction.

Best Practices: The Essential Pillars of GenAI Governance

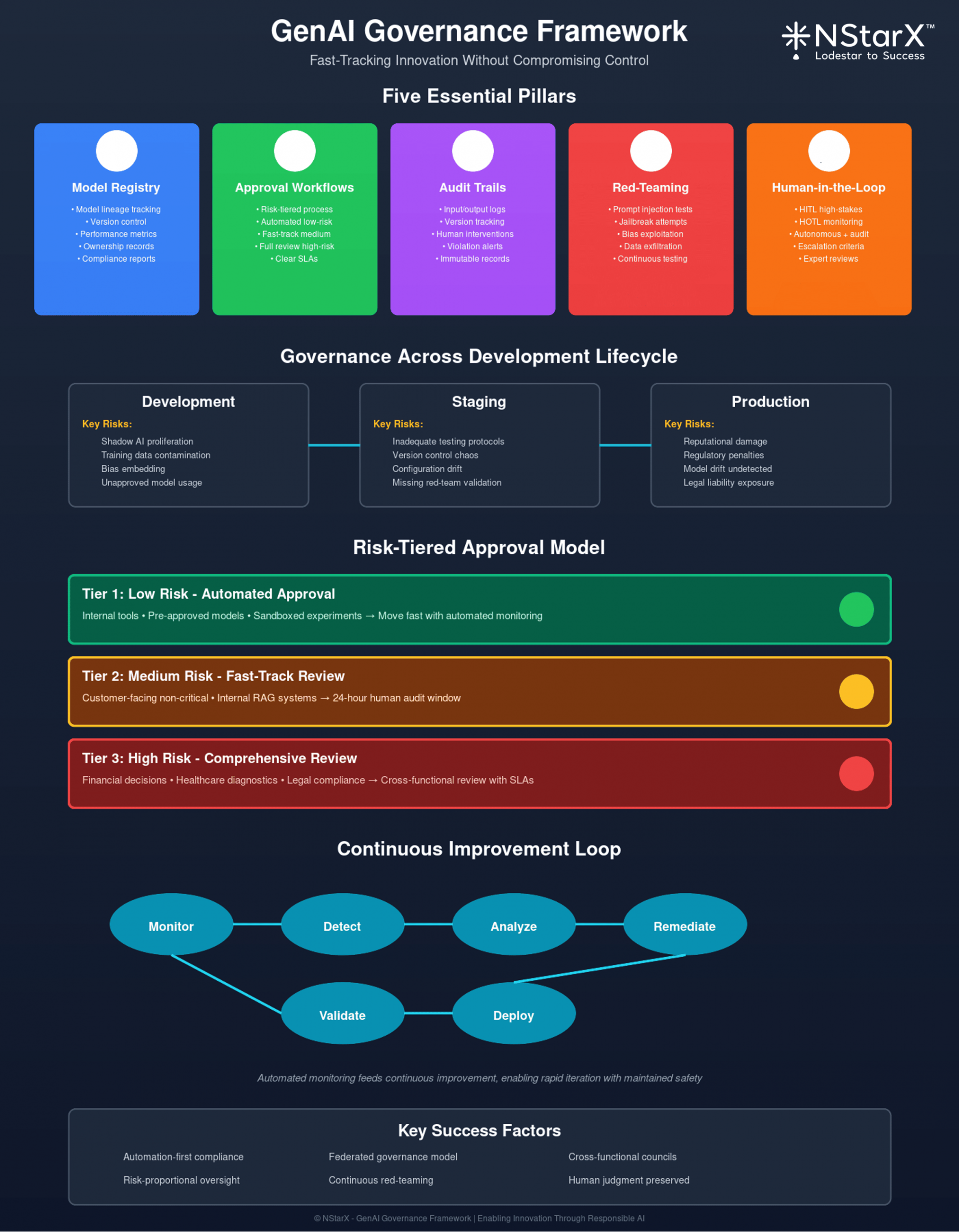

Let us visually represent the NStarX pillars of AI governance:

FIGURE 1: PILLARS OF NSTARX GENAI GOVERNANCE

Building governance that enables rather than inhibits requires implementing five interconnected pillars:

1. Model Registries: Your Source of Truth

A comprehensive model registry serves as the central catalog for all AI models across the organization, tracking:

- Model lineage: Training data sources, preprocessing steps, and feature engineering

- Performance metrics: Accuracy, latency, resource consumption, and business KPIs

- Version history: Complete audit trail of changes, with rollback capabilities

- Deployment status: Which environments host which versions

- Ownership and accountability: Clear designation of responsible parties

Implementation best practices:

- Mandate registry entry before any model moves to staging or production

- Automate metadata capture during training and deployment

- Integrate with CI/CD pipelines for seamless workflow

- Implement role-based access controls

- Generate automated compliance reports from registry data

Example architecture: Credo AI’s GenAI Vendor Registry launched with pre-populated data for OpenAI, Microsoft, IBM, Anthropic, and others, enabling enterprises to consolidate risk and compliance data while providing Responsible AI benchmarks for specific vendors.

2. Approval Workflows: Speed Without Sacrifice

Traditional approval processes create bottlenecks that stifle innovation. Modern GenAI governance requires risk-tiered approval workflows:

Tier 1: Low Risk (Automated Approval)

- Internal productivity tools

- Non-customer-facing applications

- Sandboxed experimentation environments

- Pre-approved model/vendor combinations

Tier 2: Medium Risk (Fast-Track Review)

- Customer-facing but non-critical applications

- RAG systems with internal knowledge bases

- Automated approval with 24-hour human audit window

- Standardized risk assessment checklists

Tier 3: High Risk (Comprehensive Review)

- Financial decision systems

- Healthcare diagnostics

- Legal compliance tools

- Regulatory filings

- Cross-functional review board with defined SLAs

Key principles:

- Default to approval with monitoring rather than blanket restrictions

- Create clear escalation paths with maximum response times

- Empower working-level governance teams to make decisions

- Implement exception processes for innovation sprints

- Document decisions with rationale for audit purposes

3. Audit Trails: Accountability Through Transparency

Comprehensive audit trails provide the foundation for trust, compliance, and continuous improvement:

What to log:

- Model version, parameters, and configuration

- Timestamp and user identity

- Retrieval sources for RAG systems

- Human interventions and overrides

- Policy violations and remediation actions

- Performance metrics and drift indicators

Architecture considerations:

- Immutable, append-only logging systems

- Encrypted storage with access controls

- Automated retention policies aligned with regulations

- Real-time alerting for anomalies

- Queryable interfaces for investigations

- Integration with broader security information and event management (SIEM) systems

Critical for compliance: GDPR, HIPAA, and the EU AI Act all require explainability and documentation of AI decision-making processes. Organizations without robust audit trails face both regulatory penalties and inability to defend against challenges.

4. Red-Teaming: Adversarial Testing for Resilience

Red-teaming applies offensive security methodologies to GenAI systems, proactively identifying vulnerabilities before attackers exploit them:

Core vulnerability categories:

- Prompt injection: Crafting inputs that manipulate system behavior

- Jailbreaking: Bypassing safety guardrails through multi-turn dialogue

- Data exfiltration: Tricking models into revealing training data

- Bias exploitation: Triggering discriminatory outputs

- Hallucination amplification: Forcing fabrication of authoritative-sounding falsehoods

Red-teaming workflow:

- Scoping: Define risk surface, critical use cases, and success criteria

- Reconnaissance: Map system architecture, data flows, and integration points

- Exploitation: Execute attacks across vulnerability categories

- Documentation: Record successful attacks with reproduction steps

- Remediation: Develop and implement fixes

- Retesting: Validate that mitigations work without breaking functionality

Best practices:

- Combine automated tools (PyRIT, Garak, LLMFuzzer) with human red teamers

- Include domain experts who understand business context

- Rotate red team assignments to maintain fresh perspectives

- Test throughout lifecycle, not just pre-production

- Share findings across organization to prevent repeated vulnerabilities

Microsoft’s AI Red Team has tested over 100 generative AI products, discovering that even with comprehensive testing, novel attack vectors constantly emerge. Organizations must treat red-teaming as continuous rather than one-time validation.

5. Human-in-the-Loop (HITL): The Judgment Layer

HITL ensures that human expertise, ethics, and accountability remain central to AI-driven decisions:

Implementation models:

Human-in-the-Loop (HITL): For high-stakes, irreversible decisions

- Clinical diagnoses and treatment recommendations

- Credit approvals and loan decisions

- Legal document review

- Strategic business decisions

- Humans review and approve before execution

Human-on-the-Loop (HOTL): For medium-risk, high-volume automation

- Customer service routing

- Document triage and classification

- Marketing campaign management

- Humans monitor dashboards with defined intervention triggers

Autonomous with Audit: For low-risk, reversible tasks

- Spell-check and grammar correction

- Data deduplication

- Draft content generation

- Post-hoc human review of samples

Design principles:

- Clear escalation criteria defining when human review is mandatory

- Intuitive interfaces that surface rationale for AI recommendations

- Audit trails capturing all human interventions

- Training programs for effective human-AI collaboration

- Performance metrics balancing speed with accuracy

Example: In regulated industries like finance, organizations designate compliance officers who review outputs before deployment, maintain documentation for audits, and embed policy-based checks that automatically flag rule violations for human review.

Additional Essential Practices

Continuous monitoring: Implement automated systems tracking model drift, performance degradation, and anomalous outputs with real-time alerting.

Bias mitigation: Regular audits using diverse review panels, feedback mechanisms for users to report issues, and corrective action workflows.

Vendor management: Contracts requiring transparency, audit rights, and governance commitments from third-party AI providers.

Cross-functional governance councils: Representatives from legal, security, compliance, engineering, and business units sharing decision-making authority.

Balancing Velocity and Control: The NStarX Approach

At NStarX, we’ve developed a framework that treats governance not as a speed bump but as an accelerant for responsible AI innovation. Our approach rests on three foundational principles:

Principle 1: Risk-Proportional Governance

Not all GenAI applications carry equal risk. A code completion tool used by developers differs fundamentally from a system generating regulatory filings. Our tiered governance model matches oversight intensity to potential impact:

Acceleration tracks:

- Pre-approved model/use case combinations move directly to production with automated monitoring

- Standardized risk assessments replace lengthy reviews for common scenarios

- Governance as code embeds controls in development workflows, eliminating manual bottlenecks

Example workflow: When a team wants to deploy a customer service chatbot using Claude 4 with RAG from internal documentation, they complete a 15-minute risk assessment. If the use case matches pre-approved patterns, automated validation checks security, privacy, and bias controls, provisioning production access within hours while logging everything for audit.

Principle 2: Federated Governance with Centralized Standards

Innovation happens at the edges, but standards must remain consistent. We advocate for a federated model where:

Central team sets:

- Core policies and risk frameworks

- Approved model/vendor lists with security assessments

- Standard monitoring and audit trail requirements

- Escalation paths for novel use cases

- Training and certification programs

Business units own:

- Implementation decisions within guardrails

- Use case prioritization and resource allocation

- Day-to-day operational governance

- Domain-specific risk assessments

- Innovation experimentation within sandbox environments

This structure empowers teams while ensuring enterprise-wide consistency. Domain experts make context-aware decisions without waiting for centralized approval on every detail.

Principle 3: Automation-First Compliance

Manual governance doesn’t scale with AI velocity. We embed controls directly into development and deployment pipelines:

Automated guardrails:

- Model registry integration enforced at deployment

- Continuous security scanning of dependencies

- Automated bias testing on representative data slices

- Real-time content filtering for prohibited outputs

- Performance monitoring with automatic alerting

Human review reserved for:

- Novel use cases outside approved patterns

- High-risk applications with significant business impact

- Escalated issues flagged by automated systems

- Quarterly governance audits and framework updates

Implementation approach: Organizations should invest in platforms that treat AI governance as a first-class product feature, not an afterthought. Tools like watsonx.governance, Azure AI Foundry’s responsible AI dashboard, and specialized solutions from Credo AI integrate governance into existing workflows rather than creating parallel approval processes.

Making It Work: The Five-Phase Rollout

Phase 1: Foundation (Months 1-2)

- Establish governance council with clear mandate

- Define risk tiers and corresponding approval paths

- Deploy model registry and audit logging infrastructure

- Create initial approved model/vendor list

Phase 2: Enablement (Months 2-4)

- Train teams on governance frameworks and tools

- Launch pilot programs with intensive monitoring

- Develop use case templates for common scenarios

- Build feedback loops for framework refinement

Phase 3: Scale (Months 4-8)

- Expand approved patterns based on pilot learnings

- Automate risk assessments for standard use cases

- Deploy monitoring dashboards for all stakeholders

- Implement continuous improvement processes

Phase 4: Optimization (Months 8-12)

- Analyze bottlenecks and streamline workflows

- Expand pre-approved combinations

- Enhance automation of compliance verification

- Develop advanced red-teaming capabilities

Phase 5: Innovation (Ongoing)

- Regular framework updates for emerging risks

- Investment in cutting-edge governance technologies

- Industry collaboration on best practices

- Continuous adaptation to regulatory evolution

The Future State: Adaptive Governance for Evolving AI

As foundational models advance and agentic AI emerges, governance frameworks must evolve from static policies to adaptive systems that learn and adjust alongside the technology they regulate.

Emerging Trends Reshaping Governance

Agentic AI complexity: By 2027, Deloitte forecasts that 50% of GenAI-using companies will deploy intelligent agents capable of autonomous action. These systems will chain multiple tool calls, make decisions, and interact with external services—exponentially expanding the governance surface area.

Traditional checkpoint-based approval won’t scale. Future governance will require:

- Real-time policy enforcement at the agent level

- Behavioral boundaries rather than explicit rules

- Continuous learning from agent interactions

- Dynamic risk assessment based on action patterns

- Automated intervention when agents approach boundary conditions

Model multiplication: Organizations already mix and match multiple models to optimize performance and cost. The average enterprise uses 3-5 different LLMs for various tasks, and this number will grow. Governance must evolve from model-specific policies to platform-level controls that work across any model.

Regulatory harmonization: The EU AI Act, NIST AI RMF, and emerging regulations globally will converge toward common frameworks. Organizations building governance now should design for regulatory interoperability, documenting processes in ways that satisfy multiple jurisdictions simultaneously.

From Reactive to Predictive Governance

The next generation of AI governance will anticipate issues before they manifest:

Predictive risk modeling: AI systems will analyze patterns across deployments to predict which use cases carry elevated risk of bias, hallucination, or security vulnerabilities. Governance frameworks will proactively require enhanced controls for high-risk predictions.

Automated remediation: When monitoring detects drift or performance degradation, systems will automatically trigger corrective actions—rolling back to previous versions, adjusting parameters, or engaging human reviewers—without waiting for manual intervention.

Continuous red-teaming: Rather than periodic security assessments, AI-powered red-teaming tools will constantly probe production systems, generating adversarial inputs and measuring resilience. Successful attacks trigger immediate patching workflows.

Explainability evolution: As models become more complex, governance will increasingly rely on AI-powered explainability tools that can interpret black-box decisions and generate human-readable justifications that satisfy regulatory requirements.

The Human Element Remains Central

Despite technological advancement, human judgment and accountability will become more—not less—critical:

Governance professionals will evolve from gatekeepers to enablers: Rather than saying “no” to AI initiatives, governance teams will partner with developers to find “yes, if” pathways that manage risk while enabling innovation.

Domain expertise becomes paramount: Understanding AI technology alone isn’t sufficient. Effective governance requires deep knowledge of business context, regulatory requirements, ethical considerations, and stakeholder concerns. Organizations will invest heavily in hybrid roles that combine technical AI knowledge with domain specialization.

Trust becomes the competitive advantage: As AI capabilities commoditize, the organizations that win will be those that customers, regulators, and employees trust to use AI responsibly. Governance becomes not a cost center but a source of differentiation and competitive moat.

The NStarX Vision for 2030

By 2030, we envision governance frameworks that are:

Invisible to users: Controls embedded so seamlessly that developers and business users don’t experience governance as friction—it simply ensures their AI systems work as intended.

Adaptive by design: Frameworks that automatically adjust control intensity based on observed risk patterns, reducing oversight for proven low-risk applications while tightening scrutiny for emerging high-risk scenarios.

Globally interoperable: Governance architectures that satisfy regulatory requirements across all major jurisdictions through unified documentation and control frameworks.

Value-generating: Rather than pure risk mitigation, governance systems actively improve AI performance by identifying training data quality issues, detecting drift before user impact, and suggesting optimization opportunities.

Organizations that treat governance as a strategic capability—investing in platforms, processes, and people—will move faster and more confidently than competitors who view it as compliance overhead. The governance gap will separate AI leaders from laggards.

Conclusion: Governance as Innovation Catalyst

The Deloitte Australia failure demonstrates that GenAI without governance creates existential risk. But heavy-handed, bureaucratic control structures stifle the innovation that makes AI valuable in the first place.

The path forward requires reimagining governance not as a barrier but as infrastructure that enables responsible velocity. Model registries provide visibility and control. Risk-tiered approvals match oversight to impact. Comprehensive audit trails create accountability. Red-teaming builds resilience. Human-in-the-loop preserves judgment and ethics.

Organizations that implement these practices thoughtfully—automating what can be automated, streamlining what remains manual, and always optimizing for both speed and safety—will capture GenAI’s transformative potential while avoiding costly failures.

The question isn’t whether to govern AI, but how to govern it in ways that accelerate rather than impede progress. At NStarX, we believe governance done right is the ultimate competitive advantage in the AI era—the foundation upon which trust, innovation, and sustainable value creation rest.

The organizations that will thrive in the AI-driven future are those that recognize this truth today and act on it with urgency. The technology is ready. The question is: Is your governance?

References

- Gartner. (2024). Gartner Predicts 30% of GenAI Projects Will Be Abandoned By 2025. https://www.gartner.com/en/newsroom/press-releases/2024-07-29-gartner-predicts-30-percent-of-generative-ai-projects-will-be-abandoned-after-proof-of-concept-by-end-of-2025

- Gartner. (2025). Gartner Forecasts Worldwide GenAI Spending to Reach $644 Billion in 2025. https://www.gartner.com/en/newsroom/press-releases/2025-03-31-gartner-forecasts-worldwide-genai-spending-to-reach-644-billion-in-2025

- McKinsey. (2025). The state of AI: How organizations are rewiring to capture value. https://www.mckinsey.com/capabilities/quantumblack/our-insights/the-state-of-ai

- McKinsey. (2025). AI in the workplace: A report for 2025. https://www.mckinsey.com/capabilities/mckinsey-digital/our-insights/superagency-in-the-workplace-empowering-people-to-unlock-ais-full-potential-at-work

- Deloitte. (2024). State of Generative AI in the Enterprise 2024. https://www.deloitte.com/us/en/what-we-do/capabilities/applied-artificial-intelligence/content/state-of-generative-ai-in-enterprise.html

- ABC News. (2025). Deloitte to partially refund Australia for report with apparent AI-generated errors. https://abcnews.go.com/Technology/wireStory/deloitte-partially-refund-australian-government-report-apparent-ai-126281611

- Fortune. (2025). Deloitte was caught using AI in $290,000 report to help the Australian government. https://fortune.com/2025/10/07/deloitte-ai-australia-government-report-hallucinations-technology-290000-refund/

- TechCrunch. (2025). Deloitte goes all in on AI — despite having to issue a hefty refund for use of AI. https://techcrunch.com/2025/10/06/deloitte-goes-all-in-on-ai-despite-having-to-issue-a-hefty-refund-for-use-of-ai/

- Google Cloud. (2025). Real-world gen AI use cases from the world’s leading organizations. https://cloud.google.com/transform/101-real-world-generative-ai-use-cases-from-industry-leaders

- Menlo Ventures. (2025). 2024: The State of Generative AI in the Enterprise. https://menlovc.com/2024-the-state-of-generative-ai-in-the-enterprise/

- Andreessen Horowitz. (2025). How 100 Enterprise CIOs Are Building and Buying Gen AI in 2025. https://a16z.com/ai-enterprise-2025/

- AI Verify Foundation. (2024). Model AI Governance Framework for Generative AI. https://aiverifyfoundation.sg/wp-content/uploads/2024/05/Model-AI-Governance-Framework-for-Generative-AI-May-2024-1-1.pdf

- IMDA Singapore. (2024). Model AI Governance Framework 2024 – Press Release. https://www.imda.gov.sg/resources/press-releases-factsheets-and-speeches/press-releases/2024/public-consult-model-ai-governance-framework-genai

- Teqfocus. (2025). GenAI Governance Checklist 2024: Best Practices to Start. https://www.teqfocus.com/blog/the-genai-governance-checklist/

- PwC UK. GenAI: Creating value through governance. https://www.pwc.co.uk/insights/generative-artificial-intelligence-creating-value-through-governance.html

- Datanami. (2024). Credo AI Enhances AI Governance with GenAI Vendor Registry for Scalable Adoption. https://www.datanami.com/this-just-in/credo-ai-enhances-ai-governance-with-genai-vendor-registry-for-scalable-adoption/

- Microsoft Learn. Planning red teaming for large language models (LLMs) and their applications. https://learn.microsoft.com/en-us/azure/ai-foundry/openai/concepts/red-teaming

- Microsoft Security Blog. (2025). 3 takeaways from red teaming 100 generative AI products. https://www.microsoft.com/en-us/security/blog/2025/01/13/3-takeaways-from-red-teaming-100-generative-ai-products/

- Security Magazine. (2024). Red teaming large language models: Enterprise security in the AI era. https://www.securitymagazine.com/articles/101172-red-teaming-large-language-models-enterprise-security-in-the-ai-era

- HiddenLayer. (2025). A Guide to AI Red Teaming. https://hiddenlayer.com/innovation-hub/a-guide-to-ai-red-teaming/

- Knostic. (2025). Red Team, Go! Preventing Oversharing in Enterprise AI. https://www.knostic.ai/blog/ai-red-teaming

- Guidepost Solutions. (2025). AI Governance – The Ultimate Human-in-the-Loop. https://guidepostsolutions.com/insights/blog/ai-governance-the-ultimate-human-in-the-loop/

- IBM. (2025). What is AI Governance?. https://www.ibm.com/think/topics/ai-governance

- Solutions Review. (2025). Human in the Loop Meaning: Approaches & Oversight. https://solutionsreview.com/human-in-the-loop-meaning-approach-oversight/

- RadarFirst. (2025). Human in the Loop is Essential for AI-Driven Compliance. https://www.radarfirst.com/blog/why-a-human-in-the-loop-is-essential-for-ai-driven-privacy-compliance/

- Holistic AI. Human in the Loop AI: Keeping AI Aligned with Human Values. https://www.holisticai.com/blog/human-in-the-loop-ai

- FlowHunt. (2025). Human-in-the-Loop – A Business Leaders Guide to Responsible AI. https://www.flowhunt.io/blog/human-in-the-loop-a-business-leaders-guide-to-responsible-ai/

- Confident AI. (2025). LLM Red Teaming: The Complete Step-By-Step Guide To LLM Safety. https://www.confident-ai.com/blog/red-teaming-llms-a-step-by-step-guide

- Hatchworks. (2025). Generative AI Statistics: Insights and Emerging Trends for 2025. https://hatchworks.com/blog/gen-ai/generative-ai-statistics/

- AmplifAI. (2025). 60+ Generative AI Statistics You Need to Know in 2025. https://www.amplifai.com/blog/generative-ai-statistics