Introduction: Zero Trust and AI Pipeline Security

The convergence of artificial intelligence and enterprise data has created unprecedented opportunities for innovation. Yet, this same convergence has opened new attack vectors that traditional security models cannot adequately address. As organizations deploy increasingly sophisticated AI pipelines that process sensitive information across distributed environments, the castle-and-moat security paradigm has become dangerously obsolete.

Zero Trust Architecture represents a fundamental shift in security philosophy: never trust, always verify. Rather than assuming anything inside the corporate perimeter is safe, Zero Trust treats every user, device, application, and data flow as potentially compromised until continuously validated. This principle becomes critical when applied to AI and data pipelines, where information moves through complex workflows involving data ingestion, transformation, model training, inference, and deployment.

In today’s landscape, AI pipelines handle massive volumes of sensitive data including personally identifiable information, protected health information, financial records, and proprietary business intelligence. Without Zero Trust principles embedded into these workflows, organizations face not only sophisticated cyberattacks but also regulatory penalties that can reach millions of dollars. The stakes have never been higher, and the margin for error has never been smaller.

Real-World Impact: When Zero Trust Fails and When It Succeeds

The Cost of Failure

The 2023 MOVEit data breach serves as a stark reminder of what happens when Zero Trust principles are absent from data pipelines. Exploiting a vulnerability in Progress Software’s file transfer solution, the Russian cybergang Cl0p compromised over 2,700 organizations and exposed the personal data of approximately 93.3 million individuals. The breach affected major entities including the BBC, British Airways, multiple U.S. federal agencies, and countless financial institutions. Financial estimates suggest the total cost could reach $12.15 billion, with individual organizations reporting remediation costs in the tens of millions.

What made this breach particularly devastating was the cascade effect through supply chains. Organizations that believed their data was secure discovered that third-party vendors using MOVEit had become the weak link. Forensic reporting later indicated that the attackers had been experimenting with the exploit as early as 2021, yet the activity went unnoticed until mass exploitation began in 2023, a gap that continuous monitoring and verification practices are meant to close.

The 2024 IBM Cost of a Data Breach Report put the global average cost of a data breach at $4.88 million, a 10% increase from the previous year. IBM’s 2025 edition added a more alarming finding: 60% of AI-related security incidents led to compromised data, and organizations with high levels of “Shadow AI” suffered even higher costs. The most common attack vector was supply chain intrusion through compromised applications, APIs, or plugins, with weak or missing access controls on AI systems being the primary enabler.

T-Mobile’s repeated breaches, including its exposure to the 2024 Salt Typhoon campaign linked to Chinese state-sponsored actors, demonstrated how telecommunications companies with vast customer data repositories become high-value targets. Across the affected carriers, the campaign potentially exposed call logs, text messages, and surveillance request data, and T-Mobile’s history of security incidents illustrates how inadequate controls contribute to a recurring pattern of compromises.

Success Through Zero Trust Implementation

While failures dominate headlines, organizations implementing robust Zero Trust architectures for their AI pipelines tell a different story. Financial institutions adopting comprehensive Zero Trust frameworks have reported significant reductions in breach attempts and successful attacks. These organizations implement continuous authentication at every stage of their data pipelines, employ micro-segmentation to isolate AI workloads, and enforce least-privilege access across all system components.

Organizations using Zero Trust principles for their AI systems have demonstrated measurable improvements in threat detection and response times. By implementing behavioral analytics and continuous monitoring, they identify anomalies in real-time, preventing potential breaches before sensitive data is compromised. These systems automatically quarantine suspicious activities, revoke access credentials, and trigger incident response protocols without human intervention.

Companies that have embedded Zero Trust into their AI development lifecycle from the start report not only enhanced security but also improved regulatory compliance. By implementing policy-as-code and automated compliance checking, they maintain audit trails that satisfy regulators while reducing the burden on security teams. These organizations can demonstrate to auditors exactly who accessed what data, when, and for what purpose, significantly reducing regulatory risk.

Enterprise Blind Spots: Where Zero Trust Implementation Falls Short

Common Pitfalls in AI Pipeline Security

Despite growing awareness of Zero Trust principles, most enterprises exhibit critical blind spots in their AI pipeline implementations. The rush to deploy AI capabilities often outpaces security considerations, creating vulnerabilities that attackers eagerly exploit.

Inadequate Data Governance: Many organizations lack comprehensive visibility into where sensitive data exists within their AI pipelines. Data scientists frequently copy production datasets to development environments without proper de-identification, creating shadow repositories that bypass security controls. Without centralized data cataloging and classification, organizations cannot enforce appropriate protections.

Weak Secrets Management: AI pipelines require numerous credentials, API keys, and access tokens to function. Organizations often hardcode these secrets into configuration files, store them in version control systems, or share them across teams through insecure channels. When credentials are compromised, attackers gain unfettered access to data stores, model repositories, and production systems.

Insufficient Access Controls: The principle of least privilege remains more aspirational than operational in most AI environments. Data scientists and ML engineers typically receive broad access permissions that far exceed their actual needs. This over-privileged access creates multiple paths for insider threats and compromised accounts to move laterally through systems.

Neglected Data Lineage: Without rigorous tracking of data movement through pipelines, organizations cannot verify data integrity or detect poisoning attempts. Attackers can inject malicious data at various stages, corrupting model training or manipulating inference results, with organizations remaining unaware until damage is done.

Unmonitored Third-Party Components: Modern AI pipelines depend heavily on open-source libraries, pre-trained models, and external APIs. Organizations rarely scrutinize these dependencies for vulnerabilities or malicious code, creating supply chain risks that bypass perimeter defenses.

Production Consequences Without Zero Trust

In production environments, the absence of Zero Trust architecture manifests in several critical ways:

Data Exfiltration: Without continuous verification and monitoring, attackers who compromise any component of the AI pipeline can extract sensitive training data, model parameters, or inference results. These exfiltration attempts often go undetected for months, allowing adversaries to build comprehensive profiles of the organization’s data and capabilities.

Model Manipulation: Attackers can poison training data, corrupt model weights, or inject backdoors that activate under specific conditions. Without Zero Trust validation at each pipeline stage, these compromised models reach production, generating biased or malicious outputs that damage customer trust and regulatory compliance.

Lateral Movement: Once attackers gain initial access through vulnerable AI components, the lack of network segmentation and continuous authentication allows them to pivot to other systems. What begins as a compromise of a development notebook can escalate to production database breaches.

Compliance Failures: Regulatory frameworks like GDPR, CCPA, and emerging AI-specific regulations require organizations to demonstrate data protection measures. Without Zero Trust controls and audit trails, organizations cannot prove compliance, resulting in substantial fines and legal consequences.

Shadow AI Proliferation: Employees frustrated by security restrictions often deploy unsanctioned AI tools, creating ungoverned pathways for sensitive data to flow to third-party services. These Shadow AI instances operate outside security visibility, multiplying risk exposure.

The Imperative for Zero Trust in AI Pipelines

Why Zero Trust Is Non-Negotiable

The fundamental characteristics of AI workloads make Zero Trust not merely beneficial but essential. AI pipelines process vast quantities of sensitive data across distributed, cloud-based infrastructures. They involve numerous participants including data engineers, scientists, platform engineers, and automated processes. The dynamic nature of AI development means models constantly evolve, data sources change, and new vulnerabilities emerge.

Traditional security models that establish trust boundaries cannot accommodate this fluidity. A user who legitimately accesses data for model training today might be a compromised account tomorrow. An API that safely retrieves information this week might become a vector for adversarial attacks next week. Only continuous verification and adaptive security policies can address this reality.

Best Practices for Zero Trust AI Pipelines

Implement Policy-as-Code: Security policies must be codified, version-controlled, and automatically enforced across the entire AI lifecycle. Define who can access what data, under what conditions, and for what purposes using declarative policy languages. Tools like Open Policy Agent enable organizations to express complex authorization rules that the system enforces consistently. Policy-as-code ensures security keeps pace with rapid AI development cycles while maintaining auditability.
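To make the idea concrete, here is a minimal sketch of policy-as-code in plain Python rather than a real policy engine such as Open Policy Agent. The rule fields, role names, and dataset names are hypothetical and exist only for illustration; the point is that the policy is data that can be reviewed and version-controlled, and that evaluation defaults to deny.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    role: str                # who may act
    dataset: str             # what data is covered
    purpose: str             # for what purpose
    environments: frozenset  # under what conditions (where the request runs)


# Hypothetical policy set; in practice this would live in version control.
POLICY = (
    Rule("data_scientist", "claims_tokenized", "model_training",
         frozenset({"training"})),
    Rule("inference_service", "feature_store", "inference",
         frozenset({"production"})),
)


def is_allowed(role, dataset, purpose, environment, policy=POLICY):
    """Default deny: allow only when one rule matches every attribute."""
    return any(
        rule.role == role
        and rule.dataset == dataset
        and rule.purpose == purpose
        and environment in rule.environments
        for rule in policy
    )


# A data scientist may train on tokenized claims, but only in the training environment.
print(is_allowed("data_scientist", "claims_tokenized", "model_training", "training"))
print(is_allowed("data_scientist", "claims_tokenized", "model_training", "production"))
```

Because the rules are ordinary data, a change to who may access what shows up as a reviewable diff, which is where the auditability benefit comes from.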

Establish Data Contracts: Every data source and consumer in the pipeline should operate under explicit contracts defining data schema, quality requirements, access permissions, and usage constraints. Data contracts act as formal agreements that both document expectations and enable automated validation. When data fails to meet contractual obligations, the system automatically rejects it, preventing corrupted or unauthorized information from propagating through the pipeline.
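As a rough illustration of automated contract validation, the sketch below checks a record against a small, hypothetical contract. The field names and limits are assumptions made for the example, not part of any standard; a production pipeline would more likely use a schema or data-quality framework.

```python
# Hypothetical contract for records entering a training pipeline.
CONTRACT = {
    "patient_token": {"type": str, "required": True},
    "age": {"type": int, "required": True, "min": 0, "max": 120},
    "diagnosis_code": {"type": str, "required": False},
}


def validate(record, contract=CONTRACT):
    """Return a list of violations; an empty list means the record conforms."""
    errors = []
    # Fields the contract does not declare are rejected, not silently passed on.
    for name in record:
        if name not in contract:
            errors.append(f"unexpected field: {name}")
    for name, spec in contract.items():
        if name not in record:
            if spec["required"]:
                errors.append(f"missing field: {name}")
            continue
        value = record[name]
        if type(value) is not spec["type"]:
            errors.append(f"wrong type for {name}")
            continue
        if "min" in spec and value < spec["min"]:
            errors.append(f"{name} below minimum")
        if "max" in spec and value > spec["max"]:
            errors.append(f"{name} above maximum")
    return errors


def accept(record):
    """The pipeline admits a record only when it has no violations."""
    return not validate(record)
```

Rejecting undeclared fields is a deliberate choice here: it keeps raw identifiers from slipping into the pipeline under a column name nobody reviewed.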

Deploy Comprehensive Tokenization: Replace sensitive data elements with tokens throughout the AI pipeline. Tokenization ensures that even if attackers compromise a pipeline component, they cannot extract meaningful sensitive information. Unlike conventional encryption, a vault-based token has no mathematical relationship to the value it replaces, so there is no key that can reverse it, and tokens can be generated to preserve the original format, which maintains data utility for analytics while eliminating intrinsic value for attackers. Modern tokenization solutions support AI workloads by enabling model training on tokenized data, typically with little loss of accuracy when the tokenized fields are identifiers rather than predictive features.
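The following is a minimal sketch of vault-style tokenization, assuming an in-memory mapping in place of a hardened tokenization service. The class name and the `tok_` prefix are hypothetical. Tokens are random, so nothing about the original value can be derived from them, and the same value always maps to the same token so that joins and group-bys still work on tokenized data.

```python
import secrets


class TokenVault:
    """In-memory stand-in for a tokenization service (illustration only)."""

    def __init__(self):
        self._value_to_token = {}
        self._token_to_value = {}

    def tokenize(self, value: str) -> str:
        # Reuse the existing token so equal values stay equal after tokenization.
        if value in self._value_to_token:
            return self._value_to_token[value]
        token = "tok_" + secrets.token_hex(8)
        while token in self._token_to_value:  # extremely unlikely collision
            token = "tok_" + secrets.token_hex(8)
        self._value_to_token[value] = token
        self._token_to_value[token] = value
        return token

    def detokenize(self, token: str) -> str:
        # In a real deployment this call would sit behind strict access control.
        return self._token_to_value[token]


vault = TokenVault()
record = {"ssn": "123-45-6789", "balance": 1050}
safe_record = {**record, "ssn": vault.tokenize(record["ssn"])}
```

Only `safe_record` would flow into training or analytics; the vault itself stays in a separate, tightly controlled segment.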

Implement Robust Secrets Lifecycle Management: Credentials, API keys, and encryption keys require rigorous lifecycle management. Use dedicated secrets management platforms that automatically rotate credentials, enforce least-privilege access, and audit all usage. Never store secrets in code repositories or configuration files. Implement just-in-time access provisioning where credentials are dynamically generated for specific tasks and automatically revoked upon completion.
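Just-in-time provisioning can be sketched as a short-lived, scoped credential that is signed when issued and checked on every use. This is an illustration built on the standard library only; the credential format, scope strings, and `SIGNING_KEY` handling are assumptions, and a real deployment would rely on a dedicated secrets manager or identity provider.

```python
import hashlib
import hmac
import secrets
import time

# Assumption: in practice this key is held by the secrets manager, never in code.
SIGNING_KEY = secrets.token_bytes(32)


def _sign(payload: str) -> str:
    return hmac.new(SIGNING_KEY, payload.encode(), hashlib.sha256).hexdigest()


def issue(subject: str, scope: str, ttl_seconds: int, now=None) -> str:
    """Issue a credential for one subject and one scope that expires after ttl_seconds."""
    now = time.time() if now is None else now
    expires = int(now + ttl_seconds)
    payload = f"{subject}|{scope}|{expires}"
    return f"{payload}|{_sign(payload)}"


def verify(credential: str, required_scope: str, now=None) -> bool:
    """Accept only an untampered, unexpired credential with exactly the required scope."""
    now = time.time() if now is None else now
    try:
        subject, scope, expires, signature = credential.split("|")
    except ValueError:
        return False  # malformed credential
    expected = _sign(f"{subject}|{scope}|{expires}")
    if not hmac.compare_digest(signature.encode(), expected.encode()):
        return False
    return scope == required_scope and now < int(expires)
```

A training job would request a credential for, say, `read:claims_tokenized` with a lifetime of a few minutes; once the job finishes, the credential simply stops verifying, so there is nothing long-lived to steal.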

Enforce Continuous Authentication and Authorization: Verify identity and authorization at every interaction point in the pipeline. Require multi-factor authentication for every human user, and give machine identities and service accounts strong, short-lived credentials such as mutual TLS certificates or workload identity tokens, since a second factor has no direct equivalent for non-interactive workloads. Use context-aware policies that consider user behavior, device posture, location, and data sensitivity when making access decisions. Continuously reassess authorization throughout sessions rather than granting long-lived permissions.
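A context-aware decision can be sketched as a risk score that is recomputed on every request and compared against a tolerance tied to data sensitivity. The signals, weights, and thresholds below are hypothetical values chosen for illustration; real systems tune them from observed behavior.

```python
# Hypothetical maximum tolerated risk score per data sensitivity level.
MAX_RISK = {"public": 70, "internal": 40, "restricted": 10}


def risk_score(ctx: dict) -> int:
    """Add up simple risk signals taken from the request context."""
    score = 0
    if not ctx["device_compliant"]:
        score += 40
    if ctx["location"] not in ctx["usual_locations"]:
        score += 30
    if ctx["requests_last_minute"] > 5 * ctx["typical_requests_per_minute"]:
        score += 30
    return score


def decide(ctx: dict, sensitivity: str) -> str:
    """Return 'allow', 'step_up' (re-authenticate), or 'deny' for this single request."""
    score = risk_score(ctx)
    limit = MAX_RISK[sensitivity]
    if score <= limit:
        return "allow"
    if score <= limit + 30:
        return "step_up"
    return "deny"
```

Because `decide` runs per request rather than once per session, a session that starts on a compliant device in a usual location can still be challenged or cut off when its context changes.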

Apply Micro-Segmentation: Divide the AI infrastructure into isolated segments with explicit, minimal communication pathways between them. Training environments should be strictly separated from production inference systems. Development notebooks should operate in isolated enclaves with no direct production access. Each segment enforces its own security policies and monitoring.
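At its core, micro-segmentation is an explicit allowlist of flows between segments with everything else denied. The sketch below models that in a few lines; the segment names and port are hypothetical, and in practice the same rules would be expressed as network policies or firewall rules rather than application code.

```python
# Hypothetical allowlist of (source segment, destination segment, port).
ALLOWED_FLOWS = {
    ("ingestion", "feature_store", 443),
    ("training", "feature_store", 443),
    ("training", "model_registry", 443),
    ("inference", "model_registry", 443),
}


def flow_permitted(source: str, destination: str, port: int) -> bool:
    """Default deny: a flow is permitted only if it is listed explicitly."""
    return (source, destination, port) in ALLOWED_FLOWS


# A development notebook has no listed path to production inference.
print(flow_permitted("dev_notebook", "inference", 443))
```

Note that the rules are directional: training may reach the model registry, but nothing here lets the registry initiate a connection back into training.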

Implement Comprehensive Logging and Monitoring: Maintain detailed audit trails of all data access, model training activities, inference requests, and policy decisions. Use AI-powered security information and event management systems to analyze logs in real-time, detecting anomalies and potential threats. Ensure logs themselves are protected through encryption and immutable storage to prevent tampering.
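One common way to make logs tamper-evident is a hash chain, where each entry's hash covers the previous entry's hash. The sketch below illustrates the idea with the standard library; the entry layout is an assumption, and it complements rather than replaces immutable storage.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(previous_hash: str, event: dict) -> str:
    body = json.dumps(event, sort_keys=True)
    return hashlib.sha256((previous_hash + body).encode()).hexdigest()


def append_entry(log: list, event: dict) -> None:
    """Append an event whose hash depends on everything logged before it."""
    previous_hash = log[-1]["hash"] if log else GENESIS
    log.append({
        "event": event,
        "prev": previous_hash,
        "hash": _entry_hash(previous_hash, event),
    })


def verify_chain(log: list) -> bool:
    """Recompute every hash; any edited, removed, or reordered entry breaks the chain."""
    previous_hash = GENESIS
    for entry in log:
        if entry["prev"] != previous_hash:
            return False
        if entry["hash"] != _entry_hash(previous_hash, entry["event"]):
            return False
        previous_hash = entry["hash"]
    return True
```

An attacker who alters one record must recompute every later hash, and periodically anchoring the latest hash somewhere they cannot write makes that rewrite detectable as well.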

Secure the Data Supply Chain: Validate all external data sources, libraries, and models before integration into pipelines. Implement software composition analysis to identify vulnerabilities in dependencies. Use cryptographic signatures to verify model provenance. Quarantine and scan all external inputs for malicious content before allowing them into production systems.
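Provenance checks can be sketched as verifying an artifact against a pinned digest in an authenticated manifest before it is loaded. To stay within the standard library, this example uses an HMAC as a stand-in for a digital signature; a real pipeline would use asymmetric signatures so that verifiers never hold the signing key. The manifest layout and names are hypothetical.

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def _tag(name: str, digest: str, key: bytes) -> str:
    # HMAC stand-in for a signature over "artifact name + expected digest".
    return hmac.new(key, f"{name}:{digest}".encode(), hashlib.sha256).hexdigest()


def add_to_manifest(manifest: dict, name: str, data: bytes, key: bytes) -> None:
    """Record the artifact's digest together with an authentication tag."""
    digest = sha256_hex(data)
    manifest[name] = {"sha256": digest, "tag": _tag(name, digest, key)}


def verify_artifact(data: bytes, name: str, manifest: dict, key: bytes) -> bool:
    """Accept the artifact only if the manifest entry is authentic and the bytes match it."""
    entry = manifest.get(name)
    if entry is None:
        return False  # unknown artifacts are rejected by default
    expected_tag = _tag(name, entry["sha256"], key)
    if not hmac.compare_digest(entry["tag"].encode(), expected_tag.encode()):
        return False  # the manifest entry itself was altered
    return hmac.compare_digest(sha256_hex(data).encode(), entry["sha256"].encode())
```

The check runs before deserialization, which matters for model formats that can execute code on load.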

Enable Data Encryption Everywhere: Encrypt data at rest, in transit, and increasingly, in use through confidential computing technologies. Use end-to-end encryption that protects data from the point of collection through processing to final storage, ensuring that even infrastructure providers cannot access sensitive information.

Building a Culture of Zero Trust

Technical controls alone cannot secure AI pipelines. Organizations must cultivate a security-first culture across AI and engineering teams. This cultural transformation includes:

Training: Regular education programs that help data scientists and engineers understand threat vectors, recognize suspicious activities, and implement secure coding practices. Training should be hands-on and relevant to AI-specific risks rather than generic security awareness.

Secure by Design: Integrate security considerations from the earliest stages of AI project planning. Security teams should participate in architecture reviews, helping design systems that embed Zero Trust principles rather than retrofitting them later.

Shared Responsibility: Foster understanding that security is everyone’s responsibility, not solely the domain of security teams. Developers, data scientists, and platform engineers must all contribute to maintaining the security posture.

Continuous Improvement: Regularly conduct red team exercises, penetration testing, and security assessments specifically focused on AI pipelines. Use findings to iteratively strengthen defenses and update policies.

Transparent Communication: Create channels for teams to report security concerns without fear of blame. Foster open dialogue about trade-offs between velocity and security, finding pragmatic solutions that maintain both productivity and protection.

Fortifying Against Attacks: How Best Practices Deliver Results

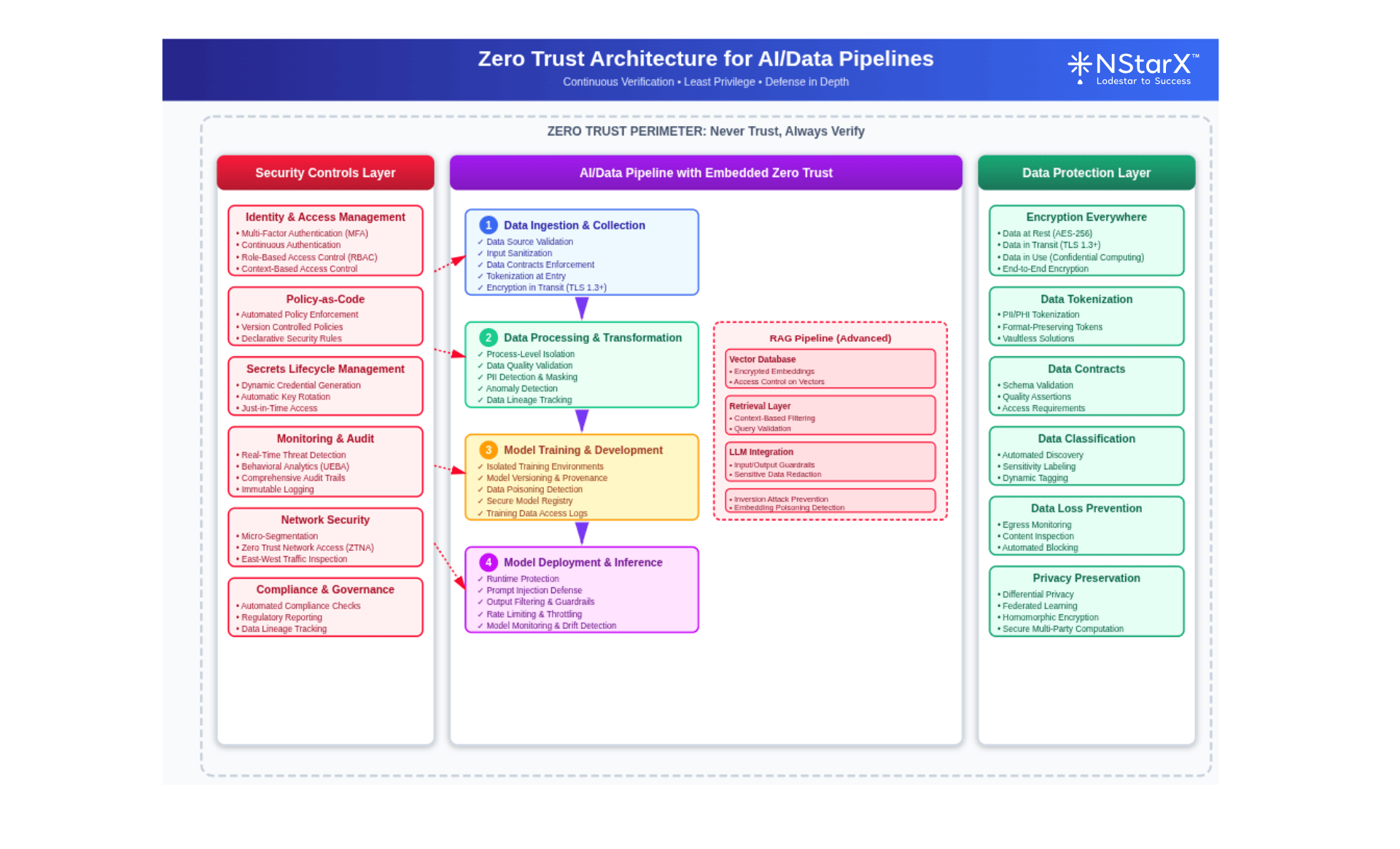

When organizations implement Zero Trust best practices comprehensively, they achieve demonstrable improvements in security posture and resilience against sophisticated attacks. Figure 1 shows a simple, high-level reference architecture for Zero Trust security in an AI pipeline.

Figure 1: Zero Trust Reference Architecture for an AI Pipeline

Preventing Data Breaches

Organizations that tokenize sensitive data throughout their AI pipelines dramatically reduce breach impact. Even when attackers compromise components of the system, they cannot extract valuable information. A financial services company implementing comprehensive tokenization reported that an attempted breach of their AI training environment resulted in the exfiltration of only tokens, which provided zero value to the attackers and required no customer notification.

Detecting Insider Threats

Continuous behavioral monitoring enables rapid detection of insider threats or compromised accounts. One healthcare organization identified an employee attempting to export patient data for unauthorized model training within minutes of the attempt. The Zero Trust system automatically revoked access, quarantined the user session, and alerted security teams. The incident was contained before any data left the environment.

Blocking Supply Chain Attacks

Organizations implementing rigorous validation of third-party components have successfully blocked numerous supply chain attacks. By scanning open-source libraries for vulnerabilities and malicious code before integration, they prevent compromised dependencies from entering their environments. Software composition analysis identified a backdoored version of a popular ML library that had been downloaded thousands of times before discovery, protecting organizations from potentially devastating compromise.

Achieving Regulatory Compliance

Companies with comprehensive Zero Trust implementations consistently pass regulatory audits with minimal findings. The detailed audit trails, policy-as-code documentation, and automated compliance checking demonstrate to regulators that appropriate safeguards exist and operate effectively. This reduces regulatory risk and avoids the substantial fines that have become increasingly common for AI-related compliance failures.

Real-World Success: Financial Services

A major financial institution redesigned its AI pipeline architecture around Zero Trust principles after experiencing a near-miss security incident. They implemented micro-segmentation, separating customer-facing AI applications from backend training systems. They deployed comprehensive tokenization of personally identifiable information and financial data. They established data contracts governing all data movement and enforced them through automated validation.

The results were impressive. Attempted lateral movement by an attacker who compromised a development system was immediately blocked by micro-segmentation controls. The attacker gained access to tokenized data that provided no value. Behavioral analytics detected the anomalous access patterns within minutes, triggering automated response protocols that contained the incident before escalation. The entire incident was resolved without customer impact or regulatory notification requirements.

Real-World Success: Healthcare

A healthcare AI company processing protected health information implemented Zero Trust as they scaled from startup to enterprise. They used policy-as-code to enforce HIPAA requirements automatically across all environments. They implemented just-in-time access provisioning, where researchers received temporary credentials for specific datasets only when needed for approved projects. They deployed comprehensive logging that captured every data access for audit purposes.

When audited by regulators, they demonstrated complete data lineage and access control histories. They could show precisely which de-identified datasets were used for which models, proving compliance with data minimization requirements. They passed the audit without findings, while competitors in the same examination cycle faced substantial penalties for inadequate controls.

The Future: Zero Trust Evolution in the Age of LLMs and Sophisticated AI

As AI capabilities advance, particularly with the proliferation of large language models, retrieval-augmented generation systems, and autonomous agents, Zero Trust architectures must evolve to address emerging challenges.

Securing RAG Pipelines and Vector Databases

Retrieval-augmented generation represents one of the most significant architectural trends in AI, but it introduces novel security concerns. RAG systems combine large language models with external knowledge retrieval, typically using vector databases to store embeddings of organizational knowledge. These vector databases present new attack surfaces.

Vector and embedding weaknesses now rank among the top security concerns in LLM applications, appearing as a dedicated entry in the OWASP Top 10 for LLM Applications (2025). Attackers can perform inversion attacks to reconstruct original sensitive data from embeddings. They can poison vector databases by injecting malicious documents that manipulate retrieval results. They can exploit prompt injection vulnerabilities to extract information the system should protect.

Zero Trust architectures for RAG systems must implement several advanced controls. Application-layer encryption that allows vector similarity searches on encrypted embeddings protects data even if the vector database is compromised. Context-based access control evaluates not just who requests information but the context of the request and response, providing granular filtering that prevents unauthorized data exposure. Comprehensive guardrails monitor both inputs to and outputs from LLMs, detecting and blocking prompt injection attempts, data leakage, and policy violations.
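One piece of this, access control at retrieval time, can be sketched as filtering retrieved chunks by the requester's entitlements before anything reaches the prompt. The `Chunk` fields and label names below are hypothetical, and the similarity scores are assumed to come from a vector search that has already run.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    text: str
    score: float       # similarity score from the vector search (assumed given)
    labels: frozenset  # access labels attached when the document was ingested


def authorized_context(chunks, entitlements: frozenset, top_k: int = 3):
    """Keep only chunks whose labels are all held by the user, then rank by score."""
    visible = [c for c in chunks if c.labels <= entitlements]
    visible.sort(key=lambda c: c.score, reverse=True)
    return visible[:top_k]


retrieved = [
    Chunk("Q3 revenue forecast", 0.92, frozenset({"finance"})),
    Chunk("Public product FAQ", 0.80, frozenset()),
    Chunk("Patient cohort summary", 0.75, frozenset({"phi", "research"})),
]
context = authorized_context(retrieved, frozenset({"research"}))
```

Filtering before prompt construction means the model never sees text the user is not entitled to, which is a stronger guarantee than asking the model to withhold it.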

Autonomous Agent Security

As AI systems evolve from tools that humans operate to autonomous agents that make decisions and take actions independently, Zero Trust principles become even more critical. These agents can interact with APIs, access databases, and execute code based on their understanding of objectives. Without proper controls, compromised or misbehaving agents could cause significant harm.

Zero Trust for autonomous agents requires treating agents as distinct identities with their own authentication, authorization, and audit trails. Each agent should have minimal permissions necessary for its specific function. Agents must operate in sandboxed environments with explicit limitations on resources they can access and actions they can perform. All agent activities require comprehensive logging and monitoring, with anomaly detection systems watching for unexpected behaviors.
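These controls can be sketched as a gateway that every tool call from an agent must pass through. The class, tool names, and call budget are hypothetical; the sketch shows per-agent identity, a minimal allowlist, a resource limit, and an audit record for every attempt, allowed or not.

```python
class AgentGateway:
    """Mediates every tool call made by one agent identity (illustration only)."""

    def __init__(self, agent_id: str, allowed_tools: set, max_calls: int):
        self.agent_id = agent_id
        self.allowed_tools = frozenset(allowed_tools)
        self.max_calls = max_calls
        self.calls_made = 0
        self.audit_log = []

    def call(self, tool_name: str, tools: dict, *args):
        allowed = (
            tool_name in self.allowed_tools and self.calls_made < self.max_calls
        )
        # Record the attempt before acting, so denied calls are audited too.
        self.audit_log.append(
            (self.agent_id, tool_name, "allow" if allowed else "deny")
        )
        if not allowed:
            raise PermissionError(f"{self.agent_id} may not call {tool_name}")
        self.calls_made += 1
        return tools[tool_name](*args)


# Hypothetical tools: the agent is allowed to search, never to delete.
TOOLS = {
    "search_docs": lambda query: f"results for {query}",
    "delete_table": lambda table: f"deleted {table}",
}
gateway = AgentGateway("support-agent-7", {"search_docs"}, max_calls=2)
```

A denied call raises instead of failing silently, which gives a supervising process a clear signal to suspend or terminate the agent.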

Organizations must implement robust testing frameworks for agents, including red teaming exercises where adversarial agents attempt to manipulate production agents. Agent behaviors should be continuously validated against defined policies, with automatic termination of agents that violate constraints.

Privacy-Preserving AI Technologies

The future of Zero Trust AI includes broader adoption of privacy-enhancing technologies that enable computation on sensitive data without exposing it. Homomorphic encryption allows mathematical operations on encrypted data, producing encrypted results that can be decrypted to reveal the output without ever exposing input data. Federated learning distributes model training across multiple parties without centralizing sensitive data. Differential privacy adds carefully calibrated noise to data or model outputs to prevent identification of individuals while preserving statistical utility.
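As a small worked example of differential privacy, the sketch below applies the Laplace mechanism to a counting query. A count changes by at most 1 when one person is added or removed, so its sensitivity is 1 and the noise scale is 1/epsilon. The function names and the sample data are illustrative assumptions.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    # expovariate takes a rate, so rate 1/scale gives each draw a mean of `scale`.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(values, predicate, epsilon: float, rng=None) -> float:
    """Return a noisy count; smaller epsilon means more noise and more privacy."""
    rng = rng or random.Random()
    true_count = sum(1 for v in values if predicate(v))
    sensitivity = 1.0  # one individual changes a count by at most 1
    return true_count + laplace_noise(sensitivity / epsilon, rng)


ages = [34, 71, 68, 45, 80, 29]
noisy = private_count(ages, lambda age: age >= 65, epsilon=0.5)
```

The released value is the noisy count rather than the true count of 3, and each additional query against the same data spends more of the overall privacy budget.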

These technologies align perfectly with Zero Trust principles by eliminating the need to trust any single party with unencrypted sensitive data. As these approaches mature and become more practical for production use, they will become standard components of Zero Trust AI architectures.

Regulatory Evolution and AI Governance

Regulatory frameworks for AI are rapidly evolving. The EU AI Act establishes comprehensive requirements for high-risk AI systems, including mandatory risk assessments, transparency requirements, and human oversight. The U.S. National Security Memorandum on AI and related guidance from agencies like NIST emphasize the importance of secure AI development practices. Individual states are enacting their own AI regulations, creating a complex patchwork of compliance requirements.

Zero Trust architectures that implement policy-as-code and comprehensive audit trails position organizations to adapt to these evolving requirements more easily. As new regulations emerge, organizations can update their policy definitions and enforcement mechanisms without redesigning entire systems. The detailed provenance tracking and audit trails that Zero Trust systems maintain provide the documentation regulators increasingly demand.

Quantum Computing and Post-Quantum Cryptography

The advent of quantum computing poses a serious threat to today’s public-key encryption. A sufficiently large quantum computer could efficiently solve the mathematical problems that underpin RSA and elliptic curve cryptography, potentially exposing all data protected with these methods, including traffic that attackers record today in order to decrypt later. Organizations must begin transitioning to post-quantum cryptography standards now, before quantum computers become practical threats.

Zero Trust architectures must incorporate quantum-resistant encryption algorithms for protecting data in AI pipelines. In August 2024, NIST finalized its first post-quantum standards: FIPS 203 (ML-KEM) for key establishment, and FIPS 204 (ML-DSA) and FIPS 205 (SLH-DSA) for digital signatures. Organizations should begin integrating these into their systems. This transition will be complex and time-consuming, making early action essential.

AI-Powered Security

Ironically, AI itself will play an increasingly important role in securing AI pipelines. Machine learning algorithms excel at pattern recognition and anomaly detection, making them ideal for identifying sophisticated attacks that might evade rule-based systems. AI-powered security tools can analyze vast volumes of logs and telemetry data, identifying subtle indicators of compromise that human analysts would miss.

However, this creates a recursive challenge: how do we secure the AI systems that secure our AI systems? This requires establishing trusted baselines, implementing rigorous validation of security AI models, and maintaining human oversight of automated security decisions. Zero Trust principles apply to security AI just as they do to other AI applications.

Collaborative Threat Intelligence

The future of AI security will increasingly depend on collaborative threat intelligence sharing across organizations and industries. Attackers share techniques, tools, and exploits. Defenders must similarly share indicators of compromise, vulnerability information, and attack patterns. Industry consortia focused on AI security are emerging, providing forums for collective defense.

Zero Trust architectures should integrate with threat intelligence platforms, automatically updating policies and detection rules based on the latest intelligence. When one organization identifies a new attack technique targeting AI pipelines, that knowledge should rapidly propagate to protect others.

Conclusion: The Path Forward

Zero Trust Architecture for AI and data pipelines represents a fundamental shift from perimeter-based security to continuous verification and least-privilege access. As organizations increasingly depend on AI for critical functions and handle ever-larger volumes of sensitive data, the risks of inadequate security grow proportionally. The billion-dollar breaches, regulatory penalties, and reputational damage resulting from security failures demonstrate that traditional approaches no longer suffice.

Implementing Zero Trust requires commitment across the organization. It demands technical investments in policy-as-code frameworks, tokenization platforms, secrets management systems, and monitoring infrastructure. It requires cultural transformation, fostering security awareness and shared responsibility among AI practitioners and engineers. It necessitates continuous evolution as threats, technologies, and regulations change.

Yet the investment delivers substantial returns. Organizations with robust Zero Trust implementations experience fewer security incidents, detect and respond to threats more rapidly, and maintain better regulatory compliance. They build trust with customers, partners, and regulators by demonstrating tangible commitment to protecting sensitive information. They create competitive advantages through secure AI capabilities that others cannot match.

As AI systems become more sophisticated, autonomous, and pervasive, the security challenges will only intensify. Retrieval-augmented generation, autonomous agents, and domain-specific models introduce new attack surfaces and vulnerabilities. Quantum computing threatens existing cryptographic protections. Regulatory requirements grow more stringent and complex.

Zero Trust provides a framework for navigating this complexity. By never trusting and always verifying, by enforcing least privilege and continuous authentication, by implementing defense in depth across the entire AI lifecycle, organizations can harness AI’s transformative potential while managing its risks. The question is no longer whether to implement Zero Trust for AI pipelines, but how quickly organizations can complete the transformation before the next breach forces their hand.

The future belongs to organizations that treat security not as an afterthought but as a fundamental architecture principle, embedded from the earliest stages of AI development through production deployment and beyond. For those willing to make the commitment, Zero Trust offers a path to secure, compliant, and trustworthy AI that delivers business value without catastrophic risk.


- Portal26. (2024, September 23). “Data Tokenization Best Practices: Your Ultimate Guide to Secure Data.” https://portal26.ai/best-practices-in-data-tokenization/

- SecuPi. (2023, December 21). “2024 Data Security Insights, Predictions, and Key Pitfalls to Avoid.” https://secupi.com/2024-data-security-insights-predictions-and-key-pitfalls-to-avoid/

- Airbyte. (2025). “7 Best Data Tokenization Tools Worth Consideration.” https://airbyte.com/top-etl-tools-for-sources/data-tokenization-tools

- Cloud Security Alliance. (2023, November 22). “Mitigating Security Risks in RAG LLM Applications.” https://cloudsecurityalliance.org/blog/2023/11/22/mitigating-security-risks-in-retrieval-augmented-generation-rag-llm-applications

- Lasso Security. (2025). “LLM Security Predictions: What’s Ahead in 2025.” https://www.lasso.security/blog/llm-security-predictions-whats-coming-over-the-horizon-in-2025

- Krishna Gupta. (2025, June 19). “LLM08:2025 – Vector and Embedding Weaknesses.” https://krishnag.ceo/blog/llm082025-vector-and-embedding-weaknesses-a-hidden-threat-to-retrieval-augmented-generation-rag-systems/

- Medium/DataDrivenInvestor. (2025, October). “Protecting RAG Applications with AI Security and Layered Defenses.” https://medium.datadriveninvestor.com/protecting-rag-applications-with-ai-security-and-layered-defenses-5a161b89d2d1

- arXiv. (2025, May 13). “Securing RAG: A Risk Assessment and Mitigation Framework.” https://arxiv.org/html/2505.08728v1

- DHiWise. (2025, May 14). “Complete Guide to Building a Robust RAG Pipeline 2025.” https://www.dhiwise.com/post/build-rag-pipeline-guide

- IronCore Labs. (2024). “Security Risks with RAG Architectures.” https://ironcorelabs.com/security-risks-rag/

- arXiv. (2025, June 3). “RAGOps: Operating and Managing Retrieval-Augmented Generation Pipelines.” https://arxiv.org/html/2506.03401v1

- Medium/Customertimes. (2025, June 13). “2025 Trends: Agentic RAG & SLM.” https://medium.com/customertimes/2025-trands-agentic-rag-slm-1a3393e0c3c9