1. The Rise of LLMOps: Transforming Enterprise AI Operations

The emergence of Large Language Models (LLMs) has fundamentally transformed how enterprises approach artificial intelligence. However, the gap between experimental AI prototypes and production-ready enterprise systems has revealed a critical need for a new operational discipline: LLMOps (Large Language Model Operations).

What is LLMOps?

LLMOps represents the evolution of MLOps (Machine Learning Operations) specifically tailored to address the unique challenges of operating large language models at enterprise scale. While MLOps focuses on traditional machine learning workflows, LLMOps encompasses specialized capabilities required for generative AI systems.

Key components of LLMOps include: prompt engineering and version management, context window orchestration, retrieval-augmented generation pipelines, hallucination detection and mitigation, citation and provenance tracking, and enterprise-grade security and governance.

Why LLMOps is Taking Critical Shape in Enterprises

Organizations are rapidly discovering that deploying LLMs in production environments demands far more than simply accessing a model through an API. Enterprise LLMOps has become essential due to: regulatory and compliance requirements in regulated industries; data security and privacy concerns with proprietary information; quality and reliability assurance for business-critical applications; cost management and ROI optimization at scale; knowledge integration and accuracy with current enterprise data; and scalability and performance requirements for thousands of users.

The convergence of these factors has elevated LLMOps from a nice-to-have technical practice to a strategic imperative for enterprises serious about deploying generative AI.

2. Real-World Enterprise LLMOps Implementations

LLMOps has transitioned from theoretical framework to critical operational capability across diverse industries. The following examples demonstrate how enterprises are implementing LLMOps to solve real business challenges.

Healthcare: Clinical Knowledge Assistant

A large healthcare system deployed a private LLM within its VPC-isolated environment, integrated with a RAG system connected to curated medical knowledge bases. The system provides physicians with instant access to clinical guidelines, drug interaction databases, and treatment protocols while maintaining HIPAA compliance. Critical LLMOps functions include strict data governance ensuring patient data never leaves the private cloud, hallucination mitigation by grounding responses in verified medical literature, version control of medical guidelines, and clinical review board validation. Business impact: Physician research time fell by 40%, treatment protocol adherence improved by 28%, and the system processed over 50,000 queries monthly with 99.2% accuracy.

Financial Services: Contract Analysis

A multinational investment bank deployed an on-premises LLM with specialized fine-tuning for legal language, integrated with a vector database containing 15 years of contract history. The system analyzes contracts, highlights risk clauses, and provides recommendations with legal citations. LLMOps functions include zero-trust security architecture, provenance tracking for regulatory audits, model versioning with approved prompts, and real-time performance monitoring. Business impact: Contract review time decreased from 5 days to 4 hours, legal team capacity increased 3.5x, and the system identified $47M in unfavorable terms.

Technology: Customer Support Assistant

A global software company deployed a RAG-enhanced LLM connected to technical documentation, support ticket history, and product knowledge bases. LLMOps functions include continuous learning from support engineer feedback, multi-source RAG querying across documentation and past tickets, safety guardrails preventing harmful suggestions, and performance optimization through caching. Business impact: First-contact resolution improved from 52% to 78%, average resolution time decreased by 45%, customer satisfaction increased by 23 points, and support costs fell by $4.2M annually.

These implementations share common patterns demonstrating why LLMOps evolved from optional to essential: reliability requirements for production systems, compliance obligations for regulated industries, security imperatives for sensitive data, quality assurance for business-critical applications, cost management at enterprise scale, and knowledge integration connecting LLMs to proprietary data.

3. The Relationship Between LLMs, RAG, Knowledge Graphs, and LLMOps

Understanding how these technologies interconnect is fundamental to designing effective enterprise AI systems. LLMs serve as the generative intelligence layer but have critical limitations: static knowledge cutoffs, hallucination tendencies, lack of provenance, and domain limitations. RAG addresses these by retrieving relevant external information at query time and injecting it into the model’s context window. Knowledge Graphs provide structured representations of entities and their relationships, enabling semantic reasoning. LLMOps provides the operational framework binding these components together through data pipeline management, retrieval orchestration, prompt engineering, quality assurance, and security enforcement.

The true power emerges from their integration. Consider an enterprise query requiring: Knowledge Graph identification of relevant entities, RAG retrieval of associated documents, LLMOps validation of user permissions, LLMOps assembly of context from multiple sources, LLM generation of grounded analysis, and LLMOps validation of citations and output quality. Without LLMOps, this workflow would remain a set of fragmented research components rather than a reliable enterprise capability.
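The query workflow described above can be sketched as a small pipeline. This is a minimal illustration under stated assumptions, not a reference implementation: the `ENTITY_INDEX` mapping (standing in for a knowledge graph), the in-memory `DOCS` store, the role names, and the `generate` stub (standing in for an LLM call) are all hypothetical.

```python
import re
from dataclasses import dataclass

@dataclass(frozen=True)
class Doc:
    doc_id: str
    text: str
    allowed_roles: frozenset

# Hypothetical stand-ins for a knowledge graph and a document store.
ENTITY_INDEX = {"acme": ["d1", "d2"], "globex": ["d3"]}
DOCS = {
    "d1": Doc("d1", "Acme master services agreement.", frozenset({"legal", "sales"})),
    "d2": Doc("d2", "Acme litigation memo.", frozenset({"legal"})),
    "d3": Doc("d3", "Globex pricing sheet.", frozenset({"sales"})),
}

def identify_entities(query):
    """Step 1: entity identification (a knowledge graph lookup in a real system)."""
    words = query.lower().replace("?", " ").split()
    return [w for w in words if w in ENTITY_INDEX]

def retrieve(entities):
    """Step 2: fetch the documents linked to the identified entities."""
    return [DOCS[doc_id] for e in entities for doc_id in ENTITY_INDEX[e]]

def filter_by_permission(docs, role):
    """Step 3: drop documents the caller's role may not read."""
    return [d for d in docs if role in d.allowed_roles]

def assemble_context(docs):
    """Step 4: build a context block in which every passage carries its id."""
    return "\n".join(f"[{d.doc_id}] {d.text}" for d in docs)

def generate(query, context):
    """Step 5: placeholder for the LLM call; it only echoes the first source id."""
    first = context.split("]")[0] + "]" if context else ""
    return f"Answer to '{query}' based on {first}".strip()

def validate_citations(answer, docs):
    """Step 6: the answer must cite something, and only permitted documents."""
    cited = set(re.findall(r"\[(\w+)\]", answer))
    return bool(cited) and cited <= {d.doc_id for d in docs}

def answer_query(query, role):
    docs = filter_by_permission(retrieve(identify_entities(query)), role)
    answer = generate(query, assemble_context(docs))
    return answer, validate_citations(answer, docs)
```

Calling `answer_query("What are the Acme terms?", "sales")` only ever exposes `d1` to the generation step, because the permission filter runs before context assembly rather than after generation.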

4. Why Enterprise RAG Implementation at Scale Fails Without LLMOps

While RAG architectures appear straightforward in prototypes, scaling to production reveals critical operational complexities. Enterprise RAG differs from prototypes in: document volume scaling from hundreds to millions, user base expanding from pilots to thousands of concurrent users, query diversity becoming unpredictable and complex, data sources multiplying across systems, and performance requirements demanding SLAs. Without systematic LLMOps practices, organizations encounter predictable failure patterns:

- Data Quality Degradation: Vector databases become stale, duplicated, or corrupted without systematic pipeline management.

- Retrieval Accuracy Deterioration: Generic strategies fail as collections grow and diversify.

- Context Window Management Crisis: Naive implementations retrieve too much irrelevant content or truncate critical information.

- Security and Access Control Failures: Users receive information they shouldn’t access, and PII leaks into responses.

- Performance and Cost Unpredictability: Costs become unsustainable, and response times degrade during peak usage.

- Hallucination and Quality Degradation: LLMs misinterpret context or introduce unsourced information.

- Operational Blind Spots: Debugging becomes an archeological investigation without instrumentation.

These failure modes compound and interact, creating cascading system degradation. Organizations attempting to scale RAG without LLMOps eventually face a stark choice: invest in operational excellence or abandon the deployment.
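As one concrete illustration of the context window failure mode listed above, the following sketch keeps the highest-scoring retrieved chunks that fit a fixed token budget instead of truncating blindly. It rests on assumptions: `approx_tokens` uses a whitespace word count as a rough proxy for model tokens, and the `(score, text)` input shape is hypothetical.

```python
def approx_tokens(text):
    # Assumption: whitespace word count as a rough proxy for model tokens.
    return len(text.split())

def pack_context(chunks, budget):
    """Greedily keep the highest-scoring chunks that fit within the budget.

    chunks: list of (score, text) pairs.
    Returns the kept texts, highest score first.
    """
    kept, used = [], 0
    for score, text in sorted(chunks, key=lambda c: c[0], reverse=True):
        cost = approx_tokens(text)
        if used + cost <= budget:
            kept.append(text)
            used += cost
    return kept
```

A real deployment would count tokens with the serving model's own tokenizer and would likely reserve part of the budget for instructions and the generated answer.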

5. Structural Components of LLMOps

Implementing enterprise LLMOps requires a comprehensive framework spanning four dimensions:

People: Organizational Structure and Roles

Core roles include: LLM Operations Engineers deploying infrastructure and managing pipelines; Prompt Engineers optimizing prompts and implementing RAG workflows; Data Engineers building ingestion pipelines and managing vector databases; ML/AI Security Specialists implementing controls and ensuring compliance; Quality Assurance Engineers creating test suites and validating outputs; Site Reliability Engineers ensuring system reliability and managing incidents. Supporting roles include AI Governance Leads, Product Owners, Domain Experts, and Legal/Compliance teams.

Process: Operational Workflows

Systematic processes include: Model Lifecycle Management covering selection, deployment, versioning, and deprecation; Data Pipeline Processes for ingestion, quality control, indexing, and updates; Prompt Engineering Workflow for development, testing, review, and deployment; Quality Assurance Processes including automated testing, human evaluation, hallucination detection, and regression monitoring; Security and Compliance Processes for access review, data classification, audit trails, and risk assessment; and Incident Response Procedures for alert triage, investigation, remediation, and post-mortems.

Tooling: Operational Platforms

Specialized tools enable LLMOps practices: Orchestration frameworks like LangChain and LlamaIndex; Model and Prompt Management tools like MLflow and LangSmith; Observability platforms like Arize AI and LangFuse; Evaluation frameworks like RAGAS and DeepEval; Security tools like Guardrails AI and Presidio; and Cost Management solutions like OpenMeter and LiteLLM.

Technology: Infrastructure Components

Technical infrastructure includes: Compute infrastructure across private cloud, public cloud, and hybrid deployments; Model Serving Infrastructure with vLLM, load balancing, and caching; Vector Databases like Pinecone, Weaviate, Milvus, and Qdrant; Data Storage including document stores, metadata databases, and processing engines; Embedding Generation with various models and optimization techniques; API Gateway and Authentication systems; Observability Stack for logging, metrics, and tracing; and Security Infrastructure for network isolation, secrets management, and encryption.

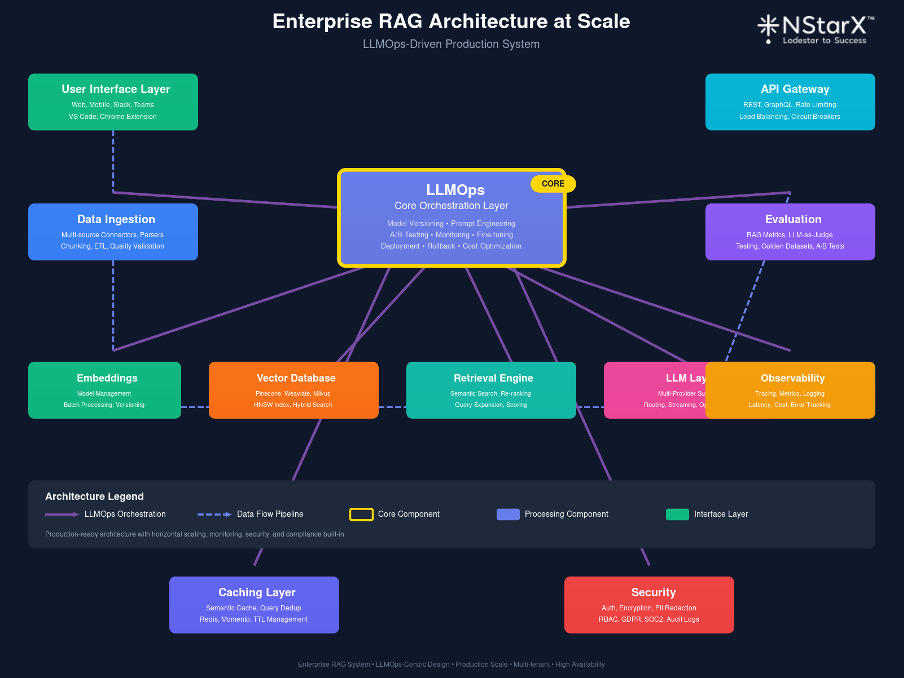

A typical Enterprise RAG reference architecture with LLMOps is shown in the Figure 1 below:

Figure 1: Enterprise RAG Architecture at Production Scale

6. Best Practices for Enterprise LLMOps Implementation

Implementing effective LLMOps requires adherence to proven practices across multiple dimensions:

Data Management

Implement rigorous data quality standards with validation gates and duplicate detection. Design thoughtful chunking strategies adapting to document types while preserving semantic coherence. Maintain temporal awareness through timestamps and version control. Secure and control access from the start with document-level permissions, PII detection, classification tags, and retrieval-time filtering.
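Two of the data management practices above, chunking and duplicate detection, can be sketched as follows. This is a simplified illustration: it uses fixed-size word windows with overlap rather than document-aware semantic chunking, and it detects only exact duplicates after normalization. The function names and default sizes are assumptions.

```python
import hashlib

def chunk_words(text, size=50, overlap=10):
    """Split text into windows of `size` words, each sharing `overlap` words with the previous one."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be non-negative and smaller than size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # the last window already reached the end of the text
    return chunks

def dedupe(chunks):
    """Drop exact duplicates after normalizing case and whitespace."""
    seen, unique = set(), []
    for chunk in chunks:
        normalized = " ".join(chunk.lower().split())
        digest = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(chunk)
    return unique
```

The overlap keeps a sentence that straddles a boundary retrievable from at least one chunk; near-duplicate detection would need a similarity measure rather than a hash.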

Retrieval Architecture

Implement hybrid retrieval combining dense and sparse vectors with metadata filtering. Optimize performance through multi-level caching, approximate nearest neighbor indexes, and query understanding. Continuously evaluate and improve using golden datasets, precision/recall metrics, feedback collection, and negative sampling.
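A common way to combine dense and sparse result lists is reciprocal rank fusion, and a golden dataset can then be scored with precision and recall at k. The sketch below assumes each retriever returns a ranked list of document ids; the constant `k=60` is a conventional default, not a value taken from this document.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Fuse ranked lists of doc ids; each doc scores the sum of 1 / (k + rank)."""
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest fused score first; ties broken by doc id for determinism.
    return sorted(scores, key=lambda d: (-scores[d], d))

def precision_recall_at_k(retrieved, relevant, k):
    """Score one query against the set of doc ids a golden dataset marks as relevant."""
    hits = sum(1 for doc_id in retrieved[:k] if doc_id in relevant)
    precision = hits / k if k else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall
```

Because the fusion uses ranks rather than raw scores, the dense and sparse retrievers do not need comparable score scales.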

Prompt Engineering

Treat prompts as production code with version control and code review. Design RAG-specific structures clearly separating instructions, context, and queries. Include explicit citation formatting and safety instructions. Systematically test variations using evaluation suites, A/B testing, and quality metrics.
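A minimal sketch of a prompt treated as versioned code might look like the following. The template wording, the section markers, and the 12-character hash length are illustrative assumptions; the point is that instructions, context, and question are kept separate and that any wording change produces a new version identifier that can be logged with each response.

```python
import hashlib
from string import Template

# Hypothetical RAG prompt with explicit instruction, context, and question sections.
RAG_PROMPT = Template(
    "### Instructions\n"
    "Answer using only the context. Cite sources as [doc_id]. "
    "If the context is insufficient, say so.\n"
    "### Context\n$context\n"
    "### Question\n$question\n"
)

def prompt_version(template):
    """Short content hash so any wording change yields a new version id."""
    return hashlib.sha256(template.template.encode("utf-8")).hexdigest()[:12]

def render(template, context, question):
    # substitute() raises KeyError if a placeholder is left unfilled,
    # which surfaces template drift during testing rather than in production.
    return template.substitute(context=context, question=question)
```

Storing the template in version control and recording `prompt_version(RAG_PROMPT)` alongside each logged response makes A/B comparisons and regressions traceable to an exact prompt.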

Quality Assurance

Establish multi-layered quality gates spanning pre-deployment testing, production monitoring, and human review. Implement rigorous hallucination detection using automated fact-checking and entailment models. Validate citation accuracy by checking that each claim corresponds to its cited source and by detecting fabricated citations.
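Citation validation can be approximated with a simple automated check. The sketch below is an assumption-laden stand-in: it expects citations in a `[doc_id]` format placed before the sentence's closing punctuation, and it uses word overlap as a crude substitute for an entailment model. The `min_overlap` threshold is hypothetical and would need tuning.

```python
import re

WORD = re.compile(r"[a-z0-9]+")
CITATION = re.compile(r"\[(\w+)\]")

def check_citations(answer, sources, min_overlap=0.5):
    """Return a list of (problem, detail) tuples found in the answer.

    sources: dict mapping doc_id -> source text.
    A sentence counts as supported when at least `min_overlap` of its words
    appear in the cited source (a lexical stand-in for an entailment model).
    """
    problems = []
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        if not sentence:
            continue
        cited = CITATION.findall(sentence)
        if not cited:
            problems.append(("uncited", sentence))
            continue
        claim = set(WORD.findall(CITATION.sub("", sentence).lower()))
        for doc_id in cited:
            if doc_id not in sources:
                # The model cited a source that was never retrieved.
                problems.append(("unknown_source", doc_id))
                continue
            source_words = set(WORD.findall(sources[doc_id].lower()))
            if claim and len(claim & source_words) / len(claim) < min_overlap:
                problems.append(("weak_support", doc_id))
    return problems
```

An empty result means every sentence carried a citation to a known source with sufficient overlap; it does not prove the claim is correct, so human review remains part of the gate.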

Security and Compliance

Implement defense-in-depth security with network isolation, encryption, authentication, and authorization. Protect against adversarial inputs through prompt injection detection and content moderation. Maintain comprehensive audit trails logging all operations. Establish privacy protection with PII detection, dynamic redaction, retention policies, and data subject access support.
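As a small illustration of PII detection and redaction, the following sketch replaces matches with typed placeholders before text is indexed or returned. The three regular expressions are illustrative assumptions covering only simple US-style formats; they are not the API of any tool named in this document, and production systems need broader, locale-aware detection.

```python
import re

# Illustrative patterns only; real deployments need far wider coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}

def redact(text):
    """Replace each match with a typed placeholder and report counts per type."""
    counts = {}
    for label, pattern in PII_PATTERNS.items():
        text, n = pattern.subn(f"<{label}>", text)
        if n:
            counts[label] = n
    return text, counts
```

The returned counts can be written to the audit trail so that redaction activity is itself observable.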

Monitoring and Observability

Implement comprehensive monitoring of system health, performance, quality, and business metrics. Enable end-to-end tracing capturing the complete query journey. Establish incident response procedures with severity levels, runbooks, automated alerting, and post-incident reviews.
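End-to-end tracing can be sketched with a small span recorder that times each stage of a query and tags it with a shared trace id. The `Trace` class and its field names are hypothetical; a production system would more likely export spans to an established tracing backend.

```python
import time
import uuid
from contextlib import contextmanager

class Trace:
    """Collects timed spans for one query so each stage can be inspected later."""

    def __init__(self):
        self.trace_id = uuid.uuid4().hex
        self.spans = []

    @contextmanager
    def span(self, name, **attrs):
        start = time.perf_counter()
        status = "ok"
        try:
            # The caller may add attributes (for example, hit counts) to this dict.
            yield attrs
        except Exception:
            status = "error"
            raise
        finally:
            self.spans.append({
                "trace_id": self.trace_id,
                "name": name,
                "status": status,
                "duration_ms": (time.perf_counter() - start) * 1000.0,
                **attrs,
            })
```

Wrapping retrieval, context assembly, and generation in `with trace.span("retrieve", top_k=5) as s:` blocks yields one record per stage, including failed stages, which is the raw material for latency dashboards and incident investigation.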

Cost Management

Implement multi-layer cost control through caching, batching, right-sizing, and tiering strategies. Monitor and attribute costs per business unit with token consumption tracking, quotas, and chargeback mechanisms.
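Per-unit cost attribution with quotas can be sketched as a simple ledger. The class name, the single blended price per 1,000 tokens, and the quota figures are assumptions for illustration; real pricing typically distinguishes input and output tokens and varies by model.

```python
class TokenLedger:
    """Tracks token consumption per business unit and enforces a token quota."""

    def __init__(self, price_per_1k_tokens, quotas):
        self.price_per_1k = price_per_1k_tokens  # assumed blended price
        self.quotas = dict(quotas)               # unit -> maximum tokens
        self.used = {}                           # unit -> tokens consumed

    def record(self, unit, prompt_tokens, completion_tokens):
        """Add usage for a unit; return its remaining quota or raise if exceeded."""
        total = self.used.get(unit, 0) + prompt_tokens + completion_tokens
        quota = self.quotas.get(unit, 0)  # units without a quota get none
        if total > quota:
            raise PermissionError(f"token quota exceeded for {unit}")
        self.used[unit] = total
        return quota - total

    def chargeback(self):
        """Cost per business unit, suitable for a periodic chargeback report."""
        return {
            unit: round(tokens / 1000 * self.price_per_1k, 4)
            for unit, tokens in self.used.items()
        }
```

Rejecting a request before the model is called is the cheapest point at which to enforce a quota; softer policies such as routing to a smaller model could replace the exception.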

Continuous Improvement

Build feedback loops that capture explicit ratings and implicit signals. Iterate based on data by analyzing query logs, using low-rated responses to build evaluation datasets, conducting optimization experiments, and refining strategies.
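Turning feedback into an evaluation dataset can start with something as simple as the sketch below, which surfaces queries whose average rating is low. The 1 to 5 rating scale, the threshold, and the minimum vote count are assumptions chosen for illustration.

```python
from collections import defaultdict
from statistics import mean

def low_rated_queries(feedback, threshold=2.5, min_votes=2):
    """Return queries whose mean rating falls below `threshold`, worst first.

    feedback: iterable of (query, rating) pairs, ratings assumed on a 1-5 scale.
    Queries with fewer than `min_votes` ratings are ignored as too noisy.
    """
    ratings = defaultdict(list)
    for query, rating in feedback:
        # Normalize so trivially different spellings pool their ratings.
        ratings[query.strip().lower()].append(rating)
    scored = [(mean(r), q) for q, r in ratings.items() if len(r) >= min_votes]
    return [q for avg, q in sorted(scored) if avg < threshold]
```

The resulting queries are candidates for human review and for inclusion in a regression suite, so that a fix for one of them is checked on every subsequent release.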

7. The Future of LLMOps in Enterprise RAG at Production Scale

As enterprise RAG implementations mature, LLMOps is evolving from a reactive practice into a proactive strategic capability, shaped by the following developments:

Emerging Technological Trends

Agentic RAG and Multi-Step Reasoning will enable autonomous agents that decompose queries, reason about gaps, synthesize across sources, and validate consistency. Hybrid Knowledge Architectures will integrate vector databases, knowledge graphs, traditional databases, and code interpreters. Continuous Learning and Adaptation will enable real-time feedback integration, adaptive indexing, personalized retrieval, and automated model selection. Multimodal Knowledge Integration will process multimedia content, enable cross-modal retrieval, and generate multimodal responses.

Architectural Evolution

Enterprises are moving from monolithic to federated RAG architectures in which business units maintain domain-specific systems and a meta-orchestration layer routes each query to the appropriate one. Edge and Hybrid Deployments will combine lightweight edge models for low-latency queries with cloud-based systems for complex reasoning.

Operational Maturity

LLMOps will shift from reactive to proactive operations with anomaly prediction, automated remediation, capacity planning, and drift detection. Democratization will make capabilities more accessible through low-code platforms, automated best practices, self-service observability, and standardized governance templates.

Regulatory Landscape

Emerging AI regulations will require mandatory impact assessments, explainability documentation, bias testing, and human review rights. Industry-specific standards will develop for healthcare clinical validation, financial model risk management, legal certification, and government security clearances.

Strategic imperatives for organizations include: investing in foundational capabilities, balancing innovation and stability, fostering collaboration, maintaining flexibility, prioritizing governance, and measuring business value. The future lies in disciplined integration of advancing capabilities with mature operational practices.

8. Conclusion

The journey from experimental LLM prototypes to production-scale enterprise AI systems has revealed that technological capability alone is insufficient. LLMOps has emerged as the critical discipline bridging the gap between the promise of large language models and the reality of reliable, secure, compliant enterprise operations.

Real-world implementations demonstrate that LLMOps delivers measurable business value: contract review time reduced from 5 days to 4 hours, first-contact resolution in customer support raised from 52% to 78%, and physician research time cut by 40%, all while maintaining accuracy and auditability.

The relationship between LLMs, RAG, Knowledge Graphs, and LLMOps forms a sophisticated ecosystem where LLMOps provides the operational framework binding components together, enabling enterprises to overcome inherent LLM limitations through systematic practices rather than algorithmic silver bullets.

Successfully structuring LLMOps requires balanced investment across people, process, tooling, and technology. The comprehensive best practices outlined represent battle-tested approaches derived from real production deployments. Looking forward, LLMOps will evolve with emerging technologies, architectural innovations, and regulatory developments while maintaining focus on disciplined integration.

Success in enterprise AI requires not just powerful models but the operational discipline to deploy them reliably, securely, and at scale. That discipline is LLMOps, and the organizations that thrive will be those that systematically deploy, monitor, secure, and continuously improve their AI systems.
