Building Smarter AI Through Dynamic Context Management

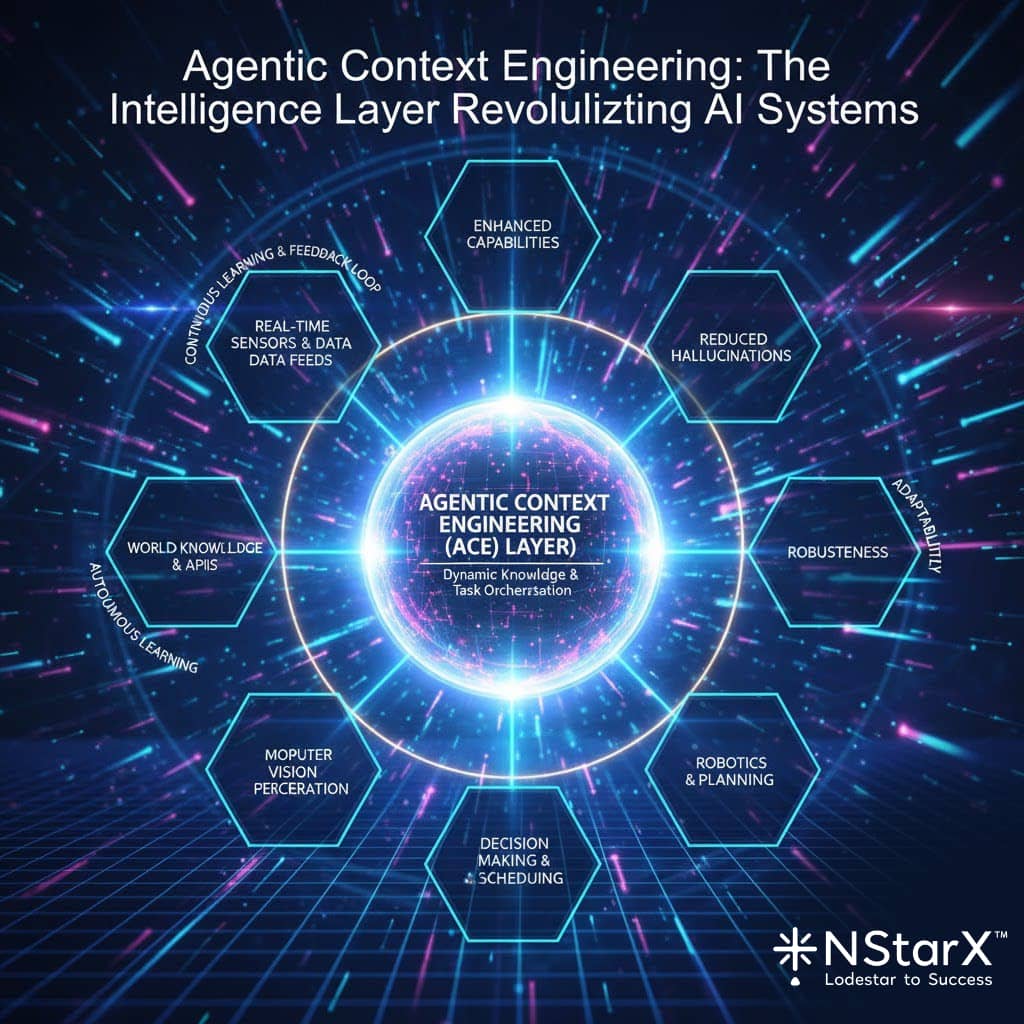

In the evolving world of artificial intelligence, one breakthrough is transforming how organizations deploy intelligent systems: Agentic Context Engineering (ACE). This sophisticated approach doesn’t just improve AI performance—it fundamentally reimagines how AI systems learn, adapt, and deliver accurate, reliable results without the traditional limitations of static models.

Welcome to the future of AI architecture, where intelligence meets adaptability and hallucinations become far less common.

Understanding Agentic Context Engineering: The Foundation

Agentic Context Engineering is an advanced AI methodology that empowers large language models (LLMs) to access, process, and reason with dynamic information in real time. Rather than relying solely on static training data, ACE creates an intelligent ecosystem where AI agents actively retrieve, verify, and synthesize information to deliver contextually accurate responses.

Think of it as the difference between giving someone a textbook from five years ago versus giving them access to a constantly updated library with a team of expert researchers. The second person doesn’t just have more information; they have the right information at the right time.

The Architectural Pillars of ACE

1. Intelligent Retrieval Systems

ACE leverages sophisticated vector databases and semantic search engines to access relevant information from massive knowledge repositories in milliseconds. Using embedding models, the system understands the meaning behind queries, not just keywords.

2. Multi-Agent Orchestration

Instead of a single AI handling everything, ACE deploys specialized agents working in concert—research agents, verification agents, synthesis agents—each optimized for specific cognitive tasks.

3. Dynamic Prompt Engineering

Context-aware prompt templates automatically adapt based on user intent, task complexity, and available information, ensuring optimal model performance for each unique situation.

4. Grounding Mechanisms

Every response is anchored to retrievable sources, creating a verifiable chain of reasoning that dramatically reduces hallucinations and increases trustworthiness.

How Agentic Context Engineering “Learns”

One of the most compelling aspects of ACE is its learning paradigm—fundamentally different from traditional machine learning approaches.

Real-Time Knowledge Acquisition

Unlike conventional models that learn during training phases and remain static until retrained, ACE systems demonstrate continuous learning through their retrieval mechanisms:

Knowledge Base Evolution

As your organization’s documentation, databases, and information repositories grow, the ACE system automatically gains access to this new knowledge. No retraining required—the learning happens through retrieval.

Contextual Pattern Recognition

Advanced ACE implementations use feedback loops where successful interaction patterns are identified, codified into prompt strategies, and reused. The system learns which retrieval strategies work best for different query types.

Session Memory and Personalization

Within conversations, ACE maintains sophisticated context windows that remember previous exchanges, building a coherent understanding of user intent that deepens throughout the interaction.
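To make session memory concrete, here is a minimal sketch of a sliding-window memory that keeps recent turns within a word budget. The class name `SessionMemory`, the `max_words` parameter, and the use of word counts in place of real token counts are all assumptions for illustration, not part of any particular ACE implementation.

```python
from collections import deque


class SessionMemory:
    """Keeps the most recent conversation turns within a word budget (hypothetical helper)."""

    def __init__(self, max_words: int = 50) -> None:
        self.max_words = max_words
        self.turns = deque()  # each item is a (role, text) pair

    def _word_count(self) -> int:
        return sum(len(text.split()) for _, text in self.turns)

    def add(self, role: str, text: str) -> None:
        self.turns.append((role, text))
        # Evict the oldest turns until the budget is met, but always keep the newest turn.
        while self._word_count() > self.max_words and len(self.turns) > 1:
            self.turns.popleft()

    def as_context(self) -> str:
        return "\n".join(f"{role}: {text}" for role, text in self.turns)


memory = SessionMemory(max_words=6)
memory.add("user", "what is our refund policy")
memory.add("assistant", "thirty days with receipt")
```

A production system would more likely summarize evicted turns than drop them outright; the eviction rule here is only the simplest option.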

The Three-Phase Learning Cycle

Phase 1: Intent Understanding

The system analyzes the query using natural language understanding techniques, identifying entities, relationships, and the underlying information need. This isn’t pattern matching—it’s semantic comprehension.

Phase 2: Retrieval and Verification

Multiple retrieval strategies run in parallel:

- Semantic vector search for conceptual matches

- Keyword searches for precise terms

- Graph traversal for relational information

- Temporal filtering for time-sensitive data
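When several strategies each return their own ranked list, the results have to be merged somehow. The article does not name a merging method, so the sketch below assumes reciprocal rank fusion (RRF), a common choice; the document IDs and the constant `k = 60` are illustrative.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge ranked lists: each document scores 1 / (k + rank) per list it appears in."""
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties are broken alphabetically for determinism.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


semantic_hits = ["doc_a", "doc_b", "doc_c"]  # hypothetical vector-search results
keyword_hits = ["doc_b", "doc_d"]            # hypothetical keyword-search results
graph_hits = ["doc_b", "doc_a"]              # hypothetical graph-traversal results
fused = reciprocal_rank_fusion([semantic_hits, keyword_hits, graph_hits])
```

Documents that several strategies agree on (here `doc_b`) rise to the top even if no single strategy ranked them first.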

Phase 3: Synthesis and Reasoning

Retrieved information is synthesized through chain-of-thought reasoning processes. The system doesn’t just concatenate facts—it builds logical arguments, identifies contradictions, and constructs coherent narratives.

Adaptive Retrieval Strategies

Modern ACE systems employ meta-learning at the retrieval level. They learn:

- Which knowledge bases to prioritize for different domains

- How to decompose complex queries into sub-queries

- When to broaden or narrow search parameters

- How to balance precision and recall based on task requirements
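One simple way to picture this kind of retrieval-level learning is a success tracker that picks the historically best strategy per query type. The sketch below is an assumption about how such a selector might look; `StrategySelector`, the query-type labels, and the smoothing rule are all hypothetical.

```python
from collections import defaultdict


class StrategySelector:
    """Tracks per-query-type success and picks the best-performing strategy (illustrative)."""

    def __init__(self, strategies: list[str]) -> None:
        self.strategies = strategies
        # (query_type, strategy) -> [successes, attempts]
        self.stats = defaultdict(lambda: [0, 0])

    def record(self, query_type: str, strategy: str, success: bool) -> None:
        entry = self.stats[(query_type, strategy)]
        entry[0] += int(success)
        entry[1] += 1

    def success_rate(self, query_type: str, strategy: str) -> float:
        successes, attempts = self.stats[(query_type, strategy)]
        # Laplace smoothing: an untried strategy starts at 0.5 instead of 0.
        return (successes + 1) / (attempts + 2)

    def choose(self, query_type: str) -> str:
        # On ties, max() keeps the first strategy in the configured order.
        return max(self.strategies, key=lambda s: self.success_rate(query_type, s))


selector = StrategySelector(["vector", "keyword"])
selector.record("lookup", "keyword", True)
selector.record("lookup", "keyword", True)
selector.record("lookup", "vector", False)
```

Note that nothing in the language model changes here: only the bookkeeping around retrieval is updated.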

This creates a system that becomes more efficient over time, even though the underlying language model remains unchanged.

Conquering the Hallucination Challenge

AI hallucination—when models generate plausible but factually incorrect information—is one of the most significant challenges in enterprise AI deployment. Agentic Context Engineering provides multiple sophisticated mechanisms to address this critical issue.

The Hallucination Problem Explained

Traditional LLMs hallucinate because they:

- Generate text based on statistical patterns in training data

- Lack access to external truth verification

- Cannot distinguish between confident errors and actual knowledge

- Fill gaps with plausible-sounding fabrications

ACE transforms this dynamic fundamentally.

Multi-Layered Hallucination Prevention

1. Source Grounding

Every claim in an ACE-generated response is traceable to specific retrieved documents. If information isn’t found in the knowledge base, the system explicitly states uncertainty rather than fabricating details.

Traditional AI: “The FluxCore 3000 was released in March 2023.” (Hallucination: this product might not exist.)

ACE System: “I couldn’t find information about the FluxCore 3000 release date in our documentation. Could you provide more context?”
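The behavior in this example can be sketched as a simple "answer only from retrieved text, otherwise say so" rule. The functions `retrieve` and `answer`, the substring-matching retrieval, and the sample knowledge base are hypothetical stand-ins for a real retrieval pipeline.

```python
def retrieve(query: str, knowledge_base: dict[str, str]) -> list[tuple[str, str]]:
    """Toy retrieval: return (doc_id, text) pairs whose text mentions the query phrase."""
    needle = query.lower()
    return [(doc_id, text) for doc_id, text in knowledge_base.items() if needle in text.lower()]


def answer(product: str, knowledge_base: dict[str, str]) -> str:
    hits = retrieve(product, knowledge_base)
    if not hits:
        # Nothing to ground on: state uncertainty instead of generating a guess.
        return (
            f"I couldn't find information about {product} in our documentation. "
            "Could you provide more context?"
        )
    doc_id, text = hits[0]
    return f"{text} [source: {doc_id}]"


# Hypothetical knowledge base with a single document.
kb = {"release-notes.md": "The Atlas 2 gateway shipped in the spring release."}
```

Calling `answer("FluxCore 3000", kb)` returns the uncertainty message, while `answer("Atlas 2", kb)` returns the matching text with its source appended.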

2. Cross-Source Verification

Advanced ACE implementations retrieve information from multiple sources and check for consistency. Contradictions trigger additional scrutiny or explicit acknowledgment of uncertainty.
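As a rough sketch of consistency checking, the function below compares the value that each source reports for a single fact and labels the outcome. The `min_agreement` threshold, the status labels, and the exact-match comparison are assumptions; real systems would need fuzzier matching.

```python
from collections import Counter


def verify_across_sources(claims: dict[str, str], min_agreement: float = 0.6) -> dict:
    """claims maps source_id -> the value that source reports for one fact."""
    if not claims:
        return {"status": "unknown", "value": None, "agreement": 0.0}
    counts = Counter(value.strip().lower() for value in claims.values())
    value, votes = counts.most_common(1)[0]
    agreement = votes / len(claims)
    if agreement == 1.0:
        status = "consistent"
    elif agreement >= min_agreement:
        status = "majority"   # usable, but worth flagging the dissenting source
    else:
        status = "conflict"   # acknowledge uncertainty rather than pick a winner
        value = None
    return {"status": status, "value": value, "agreement": agreement}


# Hypothetical sources disagreeing about a release month.
result = verify_across_sources({"wiki": "2023-03", "changelog": "2023-03", "email": "2023-04"})
```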

3. Confidence Scoring

Each retrieved piece of information receives a relevance and confidence score. Responses are weighted accordingly, with low-confidence information flagged or excluded.
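A minimal sketch of this idea follows. It assumes confidence is the product of a retriever relevance score and a per-source trust score, and it uses two illustrative thresholds; neither the formula nor the threshold values come from a specific system.

```python
from dataclasses import dataclass


@dataclass
class Evidence:
    text: str
    relevance: float     # 0..1, assumed to come from the retriever
    source_trust: float  # 0..1, assumed to be assigned per source

    @property
    def confidence(self) -> float:
        # Assumption: combine the two signals by simple multiplication.
        return self.relevance * self.source_trust


def partition_evidence(items, include_at: float = 0.6, flag_at: float = 0.3):
    """Split evidence into included, flagged (low confidence), and excluded groups."""
    included, flagged, excluded = [], [], []
    for item in items:
        if item.confidence >= include_at:
            included.append(item)
        elif item.confidence >= flag_at:
            flagged.append(item)
        else:
            excluded.append(item)
    return included, flagged, excluded


evidence = [
    Evidence("official spec", 0.9, 0.9),
    Evidence("forum post", 0.8, 0.5),
    Evidence("stale draft", 0.5, 0.4),
]
included, flagged, excluded = partition_evidence(evidence)
```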

4. Retrieval Quality Monitoring

The system continuously evaluates whether retrieved context actually supports the generated response. If the semantic similarity drops below a set threshold, the system reformulates or acknowledges limitations.

The Attribution Chain

One of ACE’s most powerful anti-hallucination features is the attribution chain—the ability to cite specific sources for every substantive claim:

- Document-level attribution: Which file or database entry?

- Section-level precision: Which paragraph or data row?

- Timestamp awareness: When was this information current?

- Confidence indicators: How certain is this information?

This creates transparency that makes hallucinations immediately obvious and correctable.
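The four attribution levels map naturally onto a small record type. The sketch below is one possible shape; the class names, field names, rendering format, and sample values are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Attribution:
    document: str      # document-level attribution
    section: str       # section-level precision
    as_of: date        # timestamp awareness
    confidence: float  # confidence indicator, 0..1

    def render(self) -> str:
        return (
            f"[{self.document}, {self.section}, "
            f"as of {self.as_of.isoformat()}, confidence {self.confidence:.2f}]"
        )


@dataclass(frozen=True)
class Claim:
    text: str
    attributions: tuple = ()

    def render(self) -> str:
        if not self.attributions:
            # A claim with no source is surfaced, not hidden.
            return f"{self.text} [UNSUPPORTED]"
        return f"{self.text} " + " ".join(a.render() for a in self.attributions)


claim = Claim(
    "Refunds are accepted within 30 days.",
    (Attribution("policies/refunds.md", "section 2", date(2024, 1, 15), 0.92),),
)
```

Rendering unsupported claims with an explicit marker is what makes a missing source visible to a reviewer.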

Prompt-Level Hallucination Safeguards

ACE systems use carefully engineered prompts that include explicit instructions:

- “Only use information from the provided context”

- “If uncertain, say so explicitly”

- “Distinguish between direct information and inferences”

- “Never invent details not present in sources”

Combined with retrieval grounding, this creates multiple defensive layers.
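As an illustration, the instructions above can be assembled into a prompt template along these lines. The layout of the prompt and the function name `build_prompt` are assumptions; only the four rule strings come from the list above.

```python
GROUNDING_RULES = [
    "Only use information from the provided context",
    "If uncertain, say so explicitly",
    "Distinguish between direct information and inferences",
    "Never invent details not present in sources",
]


def build_prompt(question: str, context_chunks: list[str]) -> str:
    """Assemble a grounded prompt: rules first, then numbered context, then the question."""
    rules = "\n".join(f"- {rule}" for rule in GROUNDING_RULES)
    context = "\n".join(
        f"[{index}] {chunk}" for index, chunk in enumerate(context_chunks, start=1)
    ) or "(no context retrieved)"
    return (
        f"Instructions:\n{rules}\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


prompt = build_prompt("How long is the refund window?", ["Refunds are accepted within 30 days."])
```

Numbering the context chunks gives the model something concrete to cite in its answer.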

The RAG Advantage in Hallucination Reduction

Retrieval-Augmented Generation (RAG), a core component of ACE, is widely used to reduce hallucinations. The figures below are illustrative; measured rates vary considerably by model, domain, and evaluation method:

Before RAG: Model relies on parametric memory (training data)

- Hallucination rate: 15-30% for specific facts

- No way to verify claims

- Outdated information treated as current

With RAG: Model retrieves and grounds in external sources

- Hallucination rate: 3-8% (60-80% reduction)

- Every claim traceable to source

- Information currency maintained

Handling Edge Cases and Ambiguity

ACE systems implement sophisticated strategies for unclear situations:

Ambiguous Queries

Rather than guessing intent, the system retrieves multiple interpretations and either asks for clarification or presents multiple contextualized answers.

Knowledge Gaps

When information isn’t available, ACE systems explicitly state this rather than attempting to fill the gap with generated content.

Conflicting Information

When sources disagree, advanced implementations present both perspectives with appropriate context rather than arbitrarily choosing one.

The Technical Architecture Deep Dive

Vector Embeddings and Semantic Search

At the heart of ACE lies the embedding model—a neural network that transforms text into high-dimensional vectors capturing semantic meaning.

How It Works:

- Documents are chunked into logical segments

- Each chunk is converted to a dense vector (often 768 or 1,536 dimensions)

- Query text is embedded using the same model

- Similarity calculations identify the most relevant chunks

- Top results are retrieved and passed to the language model

This semantic approach means the system understands that “quarterly earnings report” and “fiscal quarter financial results” are conceptually similar, even with different wording.
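The retrieval steps above can be sketched end to end in a few lines. To stay self-contained, this example swaps the neural embedding model for a toy bag-of-words vector, so it only captures word overlap; matching paraphrases such as the two phrasings above would require a real embedding model. All function names and sample texts are illustrative.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a sparse bag-of-words vector."""
    return Counter(text.lower().split())


def cosine_similarity(a: Counter, b: Counter) -> float:
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def chunk(document: str, max_words: int = 8) -> list[str]:
    """Split a document into fixed-size word windows (real systems chunk more carefully)."""
    words = document.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def top_k(query: str, chunks: list[str], k: int = 2) -> list[tuple[float, str]]:
    """Embed the query with the same model, score every chunk, return the k best."""
    query_vector = embed(query)
    scored = [(cosine_similarity(query_vector, embed(c)), c) for c in chunks]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)[:k]


chunks = [
    "quarterly earnings report for the retail division",
    "office parking policy and badge access",
    "earnings call transcript and investor questions",
]
results = top_k("quarterly earnings", chunks, k=2)
```

In practice the linear scan in `top_k` is replaced by an approximate nearest-neighbor index in a vector database.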

The Knowledge Base Architecture

Modern ACE systems typically employ a hybrid knowledge architecture:

Structured Data Layer

- SQL databases for transactional information

- Graph databases for relationship mapping

- Time-series databases for temporal data

Unstructured Data Layer

- Vector databases for document embeddings

- Document stores for original source material

- Metadata indexes for filtering and routing

Integration Layer

- APIs connecting to live systems

- Data pipelines for continuous updates

- Transformation logic for format standardization

Agent Coordination Patterns

In multi-agent ACE systems, coordination happens through several patterns:

Sequential Processing

Agent A (Researcher) → Agent B (Analyst) → Agent C (Writer)

Each agent completes its specialized task before passing results forward.
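In code, a sequential pattern is just function composition over a shared state. The three agents below are trivial placeholders standing in for LLM-backed agents, and the state keys are hypothetical.

```python
from functools import reduce


def researcher(state: dict) -> dict:
    # Placeholder: a real agent would retrieve documents here.
    return {**state, "notes": [f"fact about {state['topic']}"]}


def analyst(state: dict) -> dict:
    return {**state, "summary": f"{len(state['notes'])} finding(s) on {state['topic']}"}


def writer(state: dict) -> dict:
    return {**state, "report": f"Report: {state['summary']}."}


def run_pipeline(agents, state: dict) -> dict:
    """Pass the state through each agent in order; each sees the previous agent's output."""
    return reduce(lambda current, agent: agent(current), agents, state)


final_state = run_pipeline([researcher, analyst, writer], {"topic": "latency"})
```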

Parallel Execution

Multiple agents query different knowledge bases simultaneously, with results merged by a coordinator agent.
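A minimal sketch of the parallel pattern, using threads from the standard library: the two query functions are hypothetical stand-ins for agents that would call real knowledge bases, and the merge rule (concatenate in agent-name order) is an assumption.

```python
from concurrent.futures import ThreadPoolExecutor


def query_wiki(query: str) -> list[str]:
    return [f"wiki:{query}"]      # placeholder for a real wiki search


def query_tickets(query: str) -> list[str]:
    return [f"tickets:{query}"]   # placeholder for a real ticket search


def coordinator(query: str, agents: dict) -> list[str]:
    """Run every agent concurrently, then merge results in a deterministic order."""
    with ThreadPoolExecutor(max_workers=max(1, len(agents))) as pool:
        futures = {name: pool.submit(fn, query) for name, fn in agents.items()}
        results = {name: future.result() for name, future in futures.items()}
    return [hit for name in sorted(results) for hit in results[name]]


merged = coordinator("vpn", {"wiki": query_wiki, "tickets": query_tickets})
```

Threads suit this case because the agents spend most of their time waiting on I/O rather than computing.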

Recursive Refinement

Agents review each other’s outputs, challenging assumptions and requesting additional evidence until consensus is reached.
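The refinement loop needs a stopping rule, or agents can argue forever. The sketch below assumes a fixed round limit and treats "no objections from any reviewer" as consensus; the reviewer and reviser functions are hypothetical placeholders.

```python
def refine_until_consensus(draft: str, reviewers, revise, max_rounds: int = 3):
    """Review and revise until no reviewer objects, or the round limit is hit.

    Returns (draft, reached_consensus).
    """
    for _ in range(max_rounds):
        objections = [o for review in reviewers for o in review(draft)]
        if not objections:
            return draft, True
        draft = revise(draft, objections)
    # Round limit reached; the last revision was never confirmed by reviewers.
    return draft, False


def needs_citation(draft: str) -> list[str]:
    return [] if "[source" in draft else ["add a citation"]


def add_citation(draft: str, objections: list[str]) -> str:
    return draft + " [source: handbook]"


final_draft, agreed = refine_until_consensus(
    "Refunds take 5 days.", [needs_citation], add_citation
)
```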

Hierarchical Decision Trees

A supervisor agent routes queries to specialized sub-agents based on domain, complexity, and required expertise.
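A supervisor can be as simple as a keyword-based router. The sketch below is deliberately naive; the route table, agent names, and keyword lists are hypothetical, and a real supervisor would more likely use a classifier or an LLM call.

```python
ROUTES = {
    "billing": ("invoice", "refund", "charge"),
    "technical": ("error", "crash", "timeout"),
}


def route(query: str, default: str = "general") -> str:
    """Send the query to the sub-agent whose keywords match most often."""
    words = set(query.lower().split())
    scores = {agent: len(words.intersection(keywords)) for agent, keywords in ROUTES.items()}
    best = max(scores, key=scores.get)
    # Fall back to a generalist agent when nothing matches.
    return best if scores[best] > 0 else default
```

For example, `route("app crash on login")` selects the `technical` agent, while a query with no matching keywords falls through to `general`.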

Continuous Improvement and Feedback Loops

While ACE systems don’t learn in the traditional neural network sense, they implement sophisticated improvement mechanisms:

User Feedback Integration

Explicit Feedback

- Thumbs up/down on responses

- Corrections to generated content

- Flagged hallucinations or errors

Implicit Feedback

- Which retrieved documents users click

- How users rephrase queries

- Conversation abandonment patterns

This feedback informs:

- Retrieval strategy refinement

- Prompt template optimization

- Knowledge base curation priorities

Retrieval Performance Monitoring

ACE systems track:

- Query-to-document relevance: Are the right sources being retrieved?

- Coverage metrics: What percentage of queries find relevant information?

- Latency analysis: Which retrieval strategies are most efficient?

- Hallucination incidents: When do groundless statements occur?
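Two of these metrics are easy to compute once queries have labeled relevant documents. The sketch below assumes precision@k as the relevance measure and defines coverage as the share of queries with at least one relevant hit; both definitions and the log format are assumptions.

```python
def precision_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Fraction of the top-k retrieved documents that are actually relevant."""
    top = retrieved[:k]
    if not top:
        return 0.0
    return sum(1 for doc_id in top if doc_id in relevant) / len(top)


def coverage(query_log: list[tuple[list[str], set[str]]]) -> float:
    """Fraction of queries for which at least one relevant document was retrieved."""
    if not query_log:
        return 0.0
    answered = sum(1 for retrieved, relevant in query_log if relevant.intersection(retrieved))
    return answered / len(query_log)


# Hypothetical log: (retrieved document IDs, labeled relevant IDs) per query.
log = [(["a", "b", "c"], {"a", "c"}), (["d"], {"z"})]
```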

A/B Testing and Optimization

Leading implementations continuously test:

- Different embedding models

- Alternative chunk sizes for documents

- Various prompt engineering approaches

- Multiple retrieval algorithms

The best-performing strategies are promoted to production, creating systematic improvement.
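Before promoting a variant, it helps to check that the difference is not just noise. The article does not specify a test, so the sketch below assumes a two-proportion z-test on "helpful response" counts; the counts are made up for illustration.

```python
import math


def two_proportion_z(successes_a: int, trials_a: int, successes_b: int, trials_b: int) -> float:
    """z-statistic for the difference in success rates between variants B and A."""
    rate_a = successes_a / trials_a
    rate_b = successes_b / trials_b
    pooled = (successes_a + successes_b) / (trials_a + trials_b)
    standard_error = math.sqrt(pooled * (1 - pooled) * (1 / trials_a + 1 / trials_b))
    if standard_error == 0:
        return 0.0
    return (rate_b - rate_a) / standard_error


# Hypothetical results: variant A (current chunk size) vs. variant B (smaller chunks).
z = two_proportion_z(420, 1000, 465, 1000)
promote_b = z > 1.96  # roughly the 5% two-sided significance level
```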

Real-World Applications and Use Cases

Enterprise Knowledge Management

Challenge: Organizations have knowledge scattered across wikis, documents, emails, and tribal knowledge.

ACE Solution: Unified retrieval across all sources with intelligent routing to subject matter experts when information gaps exist.

Outcome: Employees get accurate answers instantly, with full source attribution for verification.

Customer Support Intelligence

Challenge: Support agents need instant access to product documentation, past tickets, and troubleshooting guides.

ACE Solution: Context-aware retrieval that understands customer issue patterns and surfaces relevant solutions with confidence scores.

Outcome: Faster resolution times, reduced escalations, and consistent answer quality.

Regulatory Compliance and Legal Research

Challenge: Ensuring AI-generated advice complies with current regulations and legal precedents.

ACE Solution: Grounded retrieval from curated legal databases with explicit citations and version tracking.

Outcome: Auditable, compliant responses with full transparency into reasoning and sources.

Technical Documentation and Developer Tools

Challenge: Developers need context-specific code examples and API documentation.

ACE Solution: Code-aware retrieval that understands programming concepts and retrieves relevant examples with working implementations.

Outcome: Accurate technical guidance that developers can trust and implement directly.

Building Robust ACE Systems: Best Practices

1. Invest in Knowledge Base Quality

Your ACE system is only as good as your knowledge base. Prioritize:

- Accuracy: Regular audits and corrections

- Currency: Automated update pipelines

- Coverage: Comprehensive domain documentation

- Structure: Consistent formatting and metadata

2. Design Multi-Strategy Retrieval

Don’t rely on a single retrieval strategy:

- Semantic vector search for conceptual queries

- Full-text search for precise term matching

- Graph traversal for relationship exploration

- Metadata filtering for context-specific scoping

3. Implement Robust Monitoring

Continuously track and alert on:

- Retrieval quality metrics

- Response latency distributions

- Hallucination incidents

- User satisfaction scores

4. Engineer Defensive Prompts

Create prompt templates with built-in safety mechanisms:

- Explicit grounding requirements

- Uncertainty expression guidelines

- Citation formatting standards

- Scope limitation boundaries

5. Establish Human-in-the-Loop Workflows

For high-stakes applications, implement:

- Expert review queues for uncertain responses

- Feedback collection mechanisms

- Escalation paths for edge cases

- Continuous quality assessment

The Future of Agentic Context Engineering

The ACE landscape is evolving rapidly with several exciting frontiers:

Adaptive Context Windows

Next-generation systems will dynamically adjust context utilization based on query complexity, automatically compressing less relevant information while preserving critical details.

Multi-Modal Retrieval

Emerging ACE implementations retrieve and reason across text, images, video, audio, and structured data simultaneously, creating richer, more comprehensive responses.

Federated Knowledge Systems

Future ACE architectures will seamlessly query across organizational boundaries while respecting privacy and access controls, creating collaborative intelligence networks.

Autonomous Knowledge Curation

AI agents will proactively identify knowledge gaps, flag outdated information, and even generate initial documentation drafts for human review—turning ACE from passive retrieval to active knowledge management.

Causal Reasoning Integration

Advanced systems are beginning to incorporate causal models that understand not just correlation but causation, enabling more sophisticated reasoning and prediction.

Conclusion: Intelligence Through Context

Agentic Context Engineering represents a paradigm shift in how we build and deploy AI systems. By grounding language models in dynamic, verifiable contexts, ACE substantially reduces hallucinations while creating systems that adapt to changing information without expensive retraining cycles.

The learning happens not through gradient descent and backpropagation, but through intelligent retrieval, verification, and synthesis. The result is AI that’s transparent, trustworthy, and truly intelligent—systems that know what they know, admit what they don’t, and always provide the receipts.

As organizations seek to deploy AI responsibly and effectively, Agentic Context Engineering offers a path forward that balances capability with accountability, innovation with reliability, and automation with human oversight.

The future of AI isn’t just about bigger models—it’s about smarter architectures. ACE shows us that intelligence emerges not just from training data, but from how we orchestrate access to knowledge, verify information, and reason about the world.

Essential Resources and References

- Agentic Context Engineering: Evolving Contexts for Self-Improving Language Models. https://arxiv.org/abs/2510.04618

- Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks. https://arxiv.org/abs/2005.11401

- REALM: Retrieval-Augmented Language Model Pre-Training. https://arxiv.org/abs/2002.08909

- Chain-of-Thought Prompting Elicits Reasoning in Large Language Models. https://arxiv.org/abs/2201.11903

- ReAct: Synergizing Reasoning and Acting in Language Models. https://arxiv.org/abs/2210.03629

- Self-RAG: Learning to Retrieve, Generate, and Critique through Self-Reflection. https://arxiv.org/abs/2310.11511

- LangChain Documentation – RAG Architectures. https://python.langchain.com/docs/use_cases/question_answering/

- LlamaIndex – Data Framework for LLM Applications. https://docs.llamaindex.ai/en/stable/

- Haystack by deepset – NLP Framework. https://haystack.deepset.ai/

- Microsoft Semantic Kernel. https://learn.microsoft.com/en-us/semantic-kernel/

- AutoGPT – Autonomous AI Agents. https://github.com/Significant-Gravitas/AutoGPT

- Pinecone – Vector Database Documentation. https://docs.pinecone.io/

- Weaviate – Vector Search Engine. https://weaviate.io/developers/weaviate

- Chroma – Open-Source Embedding Database. https://www.trychroma.com/

- Milvus – Open-Source Vector Database. https://milvus.io/docs

- Qdrant – Vector Search Engine. https://qdrant.tech/documentation/

- Sentence Transformers Documentation. https://www.sbert.net/

- OpenAI Embeddings Guide. https://platform.openai.com/docs/guides/embeddings

- Cohere Embed Models. https://docs.cohere.com/docs/embeddings

- FAISS by Meta AI – Similarity Search. https://github.com/facebookresearch/faiss