The Executive AI Crisis: Bridging Expectation and Reality

The artificial intelligence revolution has created an unprecedented challenge for technology leaders. For the fourth consecutive year, CEOs have ranked AI as the most impactful technology, and 68% of CEOs are developing strategies that integrate human employees and machines, including AI agents and robots. Yet beneath this enthusiasm lies a sobering reality: only 11% of CIOs have fully implemented AI technology, GenAI cost overruns could consume 35% of the entire annual budget, and cost estimates are often off by 500% to 1,000%.

This isn’t merely a cost problem; it’s a fundamental trust crisis. CIOs are under increasing pressure to generate business value from generative AI, a position industry experts describe as being “between a rock and a hard place.” The enterprise landscape is littered with first-generation generative AI proofs of concept that never delivered business value and were abandoned. The challenge extends far beyond technical implementation to the core question: How do you build AI-native software products and platforms that stakeholders can trust?

The Trust Imperative: Why Traditional Approaches Fail for AI-Native Development

Building AI-native software products requires a fundamentally different approach from traditional software development. Unlike conventional applications, where costs and performance are relatively predictable, AI-native solutions introduce variables that can destabilize entire business models:

- Unpredictable Resource Consumption: AI workload costs can quickly climb into the millions of dollars, catching organizations completely off guard

- Data Quality Dependencies: AI performance is directly tied to data quality, making operational excellence critical

- Dynamic Scaling Requirements: AI applications may require 10x more resources during training phases, then scale down for inference

- Multi-Model Complexity: Modern AI products often combine multiple models, each with different cost and performance characteristics

Traditional software development and operations frameworks simply weren’t designed for these realities. This mismatch underlies the forecast that 90% of enterprise GenAI deployments will slow by 2025 as costs exceed value.

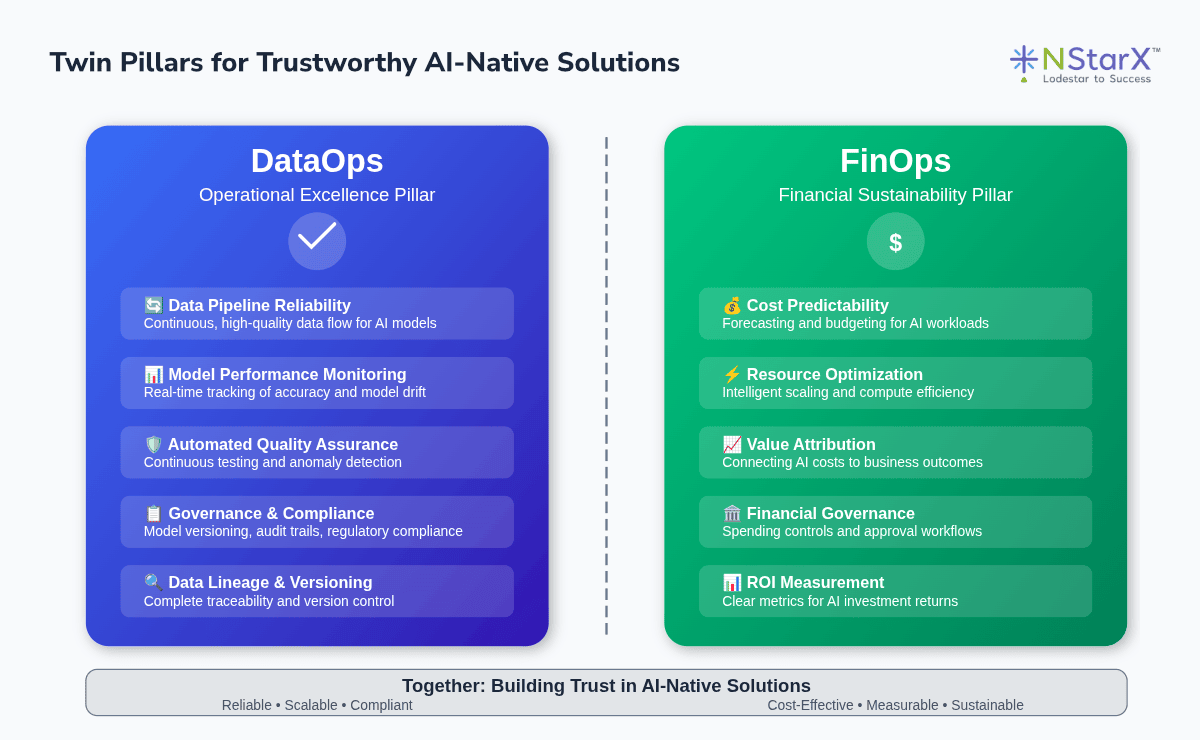

The Twin Pillars of Trust: DataOps and FinOps as Foundation for AI-Native Success

Trust in AI-native solutions rests on two foundational pillars: DataOps and FinOps. Neither alone is sufficient; both must be integrated from the earliest stages of product conception through deployment and scaling.

DataOps: The Operational Excellence Foundation

DataOps ensures that AI-native products deliver consistent, reliable, and accurate results. For AI applications, this means:

- Data Pipeline Reliability: Ensuring continuous, high-quality data flow that AI models depend on for accurate predictions and decisions. Unlike traditional software where a bug might cause a feature to break, poor data quality in AI systems can lead to systematically wrong decisions across an entire organization.

- Model Performance Monitoring: Real-time tracking of model accuracy, drift, and performance degradation. AI models can silently degrade over time as real-world data patterns change, making continuous monitoring essential for trustworthy AI products.

- Automated Quality Assurance: Implementing automated testing and validation frameworks that can detect anomalies, biases, and performance issues before they impact end users.

- Governance and Compliance: Establishing frameworks for model versioning, audit trails, and regulatory compliance that are essential for enterprise AI deployment.
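
To make the drift-monitoring idea above concrete, here is a minimal sketch of one common drift check, the Population Stability Index (PSI), which compares a baseline sample of a feature or model score against a recent sample. This is an illustration only, not the NStarX implementation; the function name, bin count, and smoothing constant are assumptions, and both samples are assumed to be non-empty.

```python
import math
from typing import Sequence


def population_stability_index(
    baseline: Sequence[float],
    current: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """Compare two non-empty samples of a numeric feature or score using PSI."""
    lo, hi = min(baseline), max(baseline)
    # Equal-width buckets over the baseline range; fall back to a width of 1.0
    # when the baseline is constant so we never divide by zero.
    width = (hi - lo) / bins or 1.0

    def bucket_shares(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Values outside the baseline range are clamped into the edge buckets.
            idx = int((v - lo) / width)
            counts[max(0, min(bins - 1, idx))] += 1
        # Floor each share at eps so empty buckets do not produce log(0).
        return [max(c / len(values), eps) for c in counts]

    expected = bucket_shares(baseline)
    actual = bucket_shares(current)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))
```

A frequently quoted rule of thumb treats a PSI below roughly 0.1 as stable and above roughly 0.25 as a significant shift, but those cut-offs are conventions rather than guarantees, and a team would likely tune them per feature.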

FinOps: The Financial Sustainability Framework

FinOps ensures that AI-native products remain financially viable and can scale sustainably. This encompasses:

- Cost Predictability: Infrastructure costs for traditional software are relatively stable, but AI workloads require forecasting and budgeting frameworks that can handle the steep, nonlinear cost curves of model training and the variable costs of inference.

- Resource Optimization: AI-specific cost optimization that goes beyond traditional cloud optimization to include model efficiency, training optimization, and inference acceleration.

- Value Attribution: Connecting AI costs directly to business outcomes, enabling organizations to understand the ROI of different AI features and capabilities.

- Financial Governance: Establishing spending controls, approval workflows, and cost allocation models that prevent runaway AI expenses while enabling innovation.
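
As a rough illustration of the spending controls and forecasting described above, the sketch below projects month-end spend from the run rate so far and maps it to a governance action. It is a simplified example under stated assumptions: the `BudgetPolicy` fields, the 80% and 100% thresholds, and the action names are all hypothetical, and a real forecast would need to account for bursty training jobs rather than a flat daily average.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BudgetPolicy:
    monthly_budget: float      # approved AI spend for the month
    warn_ratio: float = 0.8    # warn when the forecast reaches 80% of budget
    block_ratio: float = 1.0   # require approval when it reaches 100%


def forecast_month_end(spend_to_date: float, day_of_month: int, days_in_month: int) -> float:
    """Naive run-rate forecast: assume the average daily spend so far continues."""
    return spend_to_date / day_of_month * days_in_month


def evaluate(policy: BudgetPolicy, spend_to_date: float,
             day_of_month: int, days_in_month: int = 30) -> str:
    """Return 'ok', 'warn', or 'require_approval' for the current spend level."""
    forecast = forecast_month_end(spend_to_date, day_of_month, days_in_month)
    ratio = forecast / policy.monthly_budget
    if ratio >= policy.block_ratio:
        return "require_approval"
    if ratio >= policy.warn_ratio:
        return "warn"
    return "ok"
```

For example, with a 10,000 monthly budget, 3,000 spent by day 10 of a 30-day month projects to 9,000, which crosses the warning threshold but not the approval threshold.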

The Synergy: Why Both Pillars Must Work Together

The magic happens when DataOps and FinOps work in unison to create AI-native products that are both operationally excellent and financially sustainable:

- Cost-Quality Trade-offs: DataOps provides the metrics to understand when spending more on higher-quality data or more sophisticated models delivers proportional business value

- Predictive Resource Planning: Combining operational metrics with cost data enables accurate forecasting of resource needs for scaling AI products

- Automated Optimization: AI-powered systems can make real-time decisions about resource allocation based on both performance requirements and cost constraints

- Trust Through Transparency: Stakeholders gain confidence when they can see both the operational health and financial efficiency of AI systems in real time

Figure 1 captures the two essential pillars of a trust platform for AI-native platforms:

Figure 1: DataOps and FinOps for AI-Native Platforms

How NStarX Platform Solves the CIO’s Massive Challenge

The NStarX Data and Learning Platform (DLNP) represents a paradigm shift in how organizations approach AI-native development. Rather than treating DataOps and FinOps as separate concerns to be bolted on later, DLNP integrates both as core platform capabilities from day one.

Unified AI Asset Management: Where Trust Begins

Our AI Catalog serves as the foundation for both operational and financial governance:

- Centralized Model Registry: Every AI model, dataset, and algorithm is cataloged with complete lineage tracking, performance metrics, and cost attribution. This creates the transparency needed for both operational monitoring and financial accountability.

- Automated Cost Attribution: Real-time tracking of resource consumption tied directly to specific models, datasets, and business outcomes, eliminating the black box problem that plagued traditional AI deployments.

- Performance-Cost Analytics: Integrated dashboards that show the relationship between model performance and resource consumption, enabling data-driven decisions about optimization trade-offs.
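
The cost attribution idea above can be sketched in a few lines: roll up usage records per model, price them, and divide by the business outcomes each model produced. This is a hypothetical example rather than the AI Catalog's actual schema; the `UsageRecord` fields, the two-part pricing (GPU hours plus tokens), and the notion of a counted "outcome" are assumptions made for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class UsageRecord:
    model: str        # model the usage is tagged to
    gpu_hours: float  # compute consumed
    tokens: int       # LLM tokens consumed
    outcomes: int     # business outcomes credited to this usage (e.g. resolved tickets)


def attribute_costs(records: Iterable[UsageRecord],
                    gpu_hour_rate: float,
                    rate_per_1k_tokens: float) -> dict:
    """Aggregate cost per model and compute cost per business outcome."""
    totals = defaultdict(lambda: {"cost": 0.0, "outcomes": 0})
    for r in records:
        cost = r.gpu_hours * gpu_hour_rate + r.tokens / 1000 * rate_per_1k_tokens
        totals[r.model]["cost"] += cost
        totals[r.model]["outcomes"] += r.outcomes

    report = {}
    for model, t in totals.items():
        # Cost per outcome is undefined when no outcomes were credited.
        per_outcome = t["cost"] / t["outcomes"] if t["outcomes"] else None
        report[model] = {"cost": t["cost"], "cost_per_outcome": per_outcome}
    return report
```

A model that shows cost but no credited outcomes (a `cost_per_outcome` of `None`) is exactly the kind of black box spend this view is meant to surface.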

Intelligent Orchestration: DataOps and FinOps at Scale

The platform’s Agentic AI capabilities provide autonomous management of both operational and financial aspects:

- Self-Optimizing Pipelines: AI-driven orchestration that automatically adjusts resource allocation based on performance requirements, cost constraints, and business priorities.

- Predictive Scaling: Advanced algorithms that forecast resource needs based on historical patterns, enabling proactive capacity planning that balances performance with cost efficiency.

- Automated Remediation: When models begin to drift or costs spike unexpectedly, the platform can automatically trigger retraining, resource reallocation, or alert escalation based on predefined policies.
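
Policy-driven remediation of the kind described above can be pictured as a small rule table that turns monitoring signals into actions. The sketch below is illustrative only: the signal names, thresholds, and action strings are hypothetical and do not describe the platform's actual policy engine.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Signals:
    drift_score: float  # e.g. a PSI-style drift metric for the model
    cost_ratio: float   # actual spend divided by expected spend


@dataclass(frozen=True)
class Policy:
    drift_retrain: float = 0.25    # retrain at or above this drift score
    cost_reallocate: float = 1.5   # reallocate resources at 1.5x expected spend
    cost_escalate: float = 3.0     # escalate to a human at 3x expected spend


def plan_actions(signals: Signals, policy: Policy = Policy()) -> list[str]:
    """Map the current signals to an ordered list of remediation actions."""
    actions = []
    if signals.drift_score >= policy.drift_retrain:
        actions.append("trigger_retraining")
    # Cost handling is tiered: severe spikes go to a human, moderate ones are automated.
    if signals.cost_ratio >= policy.cost_escalate:
        actions.append("escalate_alert")
    elif signals.cost_ratio >= policy.cost_reallocate:
        actions.append("reallocate_resources")
    return actions
```

Keeping the thresholds in a separate `Policy` object means the rules can be reviewed and versioned like any other governance artifact, which is the point of "predefined policies."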

Privacy-Preserving Cost Optimization

Our Federated Learning capabilities address one of the most challenging aspects of AI-native development: balancing data requirements with operational efficiency:

- Distributed Training Economics: By enabling model training across distributed data sources without centralized data movement, organizations can dramatically reduce data transfer costs while maintaining model quality.

- Edge-Cloud Optimization: Intelligent workload placement that balances edge processing costs against cloud compute costs based on latency requirements and cost constraints.

- Collaborative Model Development: Organizations can participate in federated learning consortiums, sharing the costs of model development while maintaining data privacy and competitive advantage.

Visual Pipeline Intelligence: Making the Complex Manageable

The platform’s Visual AI Pipeline Orchestration transforms complex AI workflows into manageable, auditable processes:

- End-to-End Visibility: Visual representation of entire AI pipelines with real-time performance and cost metrics at every stage.

- Bottleneck Identification: Automated identification of pipeline bottlenecks that impact both performance and cost efficiency.

- Scenario Modeling: What-if analysis capabilities that help teams understand the operational and financial impact of different architectural decisions before implementation.

RAG and LLM Integration: Responsible AI at Scale

Our RAG Chat and LLM Models management capabilities ensure that organizations can leverage large language models responsibly:

- Cost-Controlled Inference: Intelligent routing of queries to different models based on complexity requirements and cost constraints.

- Knowledge Base Optimization: RAG systems that minimize expensive LLM calls by leveraging optimized knowledge retrieval.

- Multi-Model Strategies: Capability to deploy multiple models with different cost-performance profiles and automatically route requests based on business rules.
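
One way to picture cost-controlled, multi-model routing is a router that picks the cheapest model tier able to handle a query within a per-request budget. The sketch below is a toy example under loud assumptions: the tier names, prices, complexity scores, and keyword heuristic are all invented for illustration, and a production router would likely use a learned classifier rather than keyword matching.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelTier:
    name: str
    cost_per_1k_tokens: float
    max_complexity: int  # highest complexity score this tier is trusted with


# Hypothetical tiers, ordered from cheapest to most capable.
TIERS = [
    ModelTier("small", 0.1, 3),
    ModelTier("medium", 1.0, 6),
    ModelTier("large", 10.0, 10),
]


def estimate_complexity(query: str) -> int:
    """Crude heuristic: long queries and reasoning keywords score higher (1 to 10)."""
    score = 1 + len(query.split()) // 20
    if any(k in query.lower() for k in ("why", "compare", "explain", "analyze")):
        score += 3
    return min(score, 10)


def route(query: str, est_tokens: int, budget: float) -> ModelTier:
    """Pick the cheapest capable tier that fits the per-request budget."""
    needed = estimate_complexity(query)
    capable = [t for t in TIERS if t.max_complexity >= needed]
    affordable = [t for t in capable
                  if t.cost_per_1k_tokens * est_tokens / 1000 <= budget]
    if affordable:
        return min(affordable, key=lambda t: t.cost_per_1k_tokens)
    # No capable tier fits the budget: degrade to the cheapest tier overall.
    return min(TIERS, key=lambda t: t.cost_per_1k_tokens)
```

The fallback branch encodes a business rule (prefer a cheaper, weaker answer over exceeding budget); an organization with different priorities might instead reject the request or ask for approval.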

Positioning NStarX as the CIO’s Strategic Partner

The NStarX platform directly addresses the massive challenge facing CIOs: how to build and deploy AI-native solutions that stakeholders can trust. Here’s how we’re uniquely positioned:

Eliminating the Integration Burden

Traditional approaches require CIOs to cobble together separate tools for data management, model development, deployment orchestration, cost monitoring, and financial governance. This creates integration challenges, security vulnerabilities, and operational complexity that often derail AI initiatives.

NStarX provides a unified platform where DataOps and FinOps are native capabilities, not afterthoughts. This dramatically reduces the time to value and eliminates the risk of integration failures.

De-Risking AI Investments

By providing real-time visibility into both operational performance and financial efficiency, the platform enables CIOs to:

- Demonstrate ROI: Clear attribution of AI costs to business outcomes

- Prevent Cost Overruns: Automated guardrails and alerts that prevent runaway spending

- Ensure Reliability: Continuous monitoring and automated remediation that maintains service quality

- Enable Scaling: Predictive analytics that support confident scaling decisions

Building Stakeholder Confidence

The platform’s transparency and automation capabilities help CIOs build trust with key stakeholders:

- For CEOs: Clear demonstration of AI business value and ROI

- For CFOs: Predictable costs and efficient resource utilization

- For Engineering Teams: Simplified workflows and automated optimization

- For Compliance: Complete audit trails and governance frameworks

The Future of AI-Native Development: Sustainable Innovation Through Integrated Operations

The organizations that will succeed in the AI era are those that recognize that building trustworthy AI-native products requires fundamentally different approaches to both operations and financial management. The future belongs to platforms that integrate these capabilities from the ground up.

Emerging Paradigms

- AI-First Architecture: Future AI-native products will be built on platforms that assume AI workloads as the primary use case, not an add-on to traditional infrastructure.

- Autonomous Operations: Advanced AI systems will increasingly manage their own operational and financial optimization, requiring platforms sophisticated enough to support this level of automation.

- Collaborative Intelligence: Organizations will participate in AI ecosystems where models, data, and costs are shared across multiple parties, requiring new frameworks for governance and financial management.

The Platform Advantage

The NStarX platform positions organizations to thrive in this evolving landscape by:

- Future-Proofing Investments: Platform architecture that evolves with advancing AI capabilities while maintaining operational and financial discipline.

- Enabling Innovation: By solving the operational and financial challenges, teams can focus on building innovative AI-native products rather than managing infrastructure complexity.

- Supporting Ecosystem Participation: Federated learning and collaborative capabilities that enable organizations to participate in AI consortiums and partnerships.

Conclusion: Transforming the CIO Challenge into Competitive Advantage

The pressure facing today’s CIOs is unprecedented, but so is the opportunity. Organizations that master the integration of DataOps and FinOps for AI-native development will gain sustainable competitive advantages that are difficult to replicate.

The NStarX platform transforms the CIO’s massive challenge of building trustworthy, cost-effective AI-native solutions into a competitive advantage. By providing integrated DataOps and FinOps capabilities as platform-native features, we enable organizations to:

- Build with Confidence: Deploy AI-native products with predictable costs and reliable performance

- Scale Sustainably: Grow AI capabilities without sacrificing operational excellence or financial discipline

- Innovate Responsibly: Focus on creating business value rather than managing infrastructure complexity

- Demonstrate Value: Provide stakeholders with clear visibility into both operational performance and financial efficiency

The question facing CIOs today isn’t whether to invest in AI. It’s whether to build AI-native solutions on a foundation of trust through integrated DataOps and FinOps capabilities.

With NStarX, the answer becomes clear: transform the challenge into your competitive advantage.

References

- Gartner CEO and Senior Business Executive Survey, 2025

- CIO.com State of the CIO Survey, 2025

- Salesforce CIO AI Trends Study, November 2024

- Computerworld – “Rushing into genAI? Prepare for budget blowouts and broken promises,” 2025

- IBM 2025 CEO Study: 5 mindshifts to supercharge business growth

- IDC Future Enterprise Resiliency and Spending Survey, Wave 10, October 2024

- FinOps Foundation – “FinOps for AI Overview,” January 2025

- EY Consulting 2024 CIO Sentiment Study

- Flexera 2024 State of Cloud Report

- McKinsey Global Institute AI Research, 2024

- FinOps Foundation – “How to Forecast AI Services Costs in Cloud”

- Gartner – “Measuring the ROI of GenAI: Assessing Value and Cost”