How C-Suite Leaders Can Transform AI Experiments into Measurable Business Value

This blog is based on NStarX engagements with various enterprises throughout their AI journeys.

The boardroom conversations have shifted. What began as excited discussions about AI’s transformative potential in 2024 has evolved into more sobering questions about actual returns. As we enter 2026, C-suite leaders face a critical juncture: How do we move beyond the pilot phase and create systematic, measurable value from our AI investments?

The numbers tell a compelling story. While 58% of data and AI leaders claim their organizations have achieved “exponential productivity gains” from AI, the gap between aspiration and measurement reality has become impossible to ignore. It’s time for a more disciplined approach.

The Strategic Investment Priorities That Define 2026

The Top 3 Enterprise Investment Imperatives

The convergence of market research and executive priorities has crystallized around three critical investment areas that will define competitive advantage in 2026:

- AI/ML Infrastructure and Capabilities (The Clear Leader): The data is unambiguous: 42% of CIOs identify AI/ML as their primary technology investment focus, with 45% deeming it most strategically important. But this isn’t the undirected AI enthusiasm of previous years. Today’s investments focus on building systematic capabilities for AI integration across business processes, not just deploying cool technology.

- Data Infrastructure Modernization (The Foundation): The renaissance of unstructured data has arrived. With 94% of data and AI leaders reporting increased focus on data due to AI initiatives, enterprises are finally investing in the infrastructure needed to manage, process, and derive insights from the text, images, and documents where real business value lives. One insurance executive recently shared that 97% of their company’s data was unstructured, a wake-up call that resonates across industries.

- Talent and Change Management Systems (The Multiplier): The human element has proven more challenging than the technology. With 38% of organizations struggling to find AI/ML talent and 54% of CIOs citing staffing challenges as barriers to strategic objectives, human capital investment has become as crucial as technological infrastructure. As one CIO put it, “We need to preserve our people who are bilingual—who understand both the business and technology.”

Where Enterprises Are Focusing Their AI Investments

Current enterprise AI initiatives cluster around four strategic areas, each with distinct ROI characteristics:

Process Automation (69% of IT leaders): The efficiency play, focusing on internal operations, cost reduction, and operational excellence. Early wins are measurable but often limited in scope.

Customer Experience Enhancement (62% implementation rate): The growth play, applying AI to customer-facing applications to drive revenue and competitive differentiation. Higher complexity but potentially higher returns.

Data Analytics and Insights: The intelligence play, transforming decision-making processes and unlocking hidden data value. Often the bridge between operational efficiency and strategic advantage.

Agentic AI Exploration: The future play, beginning to explore autonomous AI systems for complex task orchestration. Still early but potentially transformative for enterprise operations.

The Hard-Won Lessons from AI Pilots: What Worked and What Didn’t

The Success Patterns

Across hundreds of AI implementations, clear success patterns have emerged:

Focused Problem Definition: Winners started with specific, measurable business challenges rather than broad exploratory initiatives. They asked “What business problem are we solving?” before “What can AI do?”

Strong Business-IT Collaboration: The 75% of implementations that succeeded involved close collaboration between CIOs and line-of-business leaders from day one. Technology and business strategy moved in lockstep.

Data Readiness Investment: Organizations that invested in data quality and structure before AI implementation saw dramatically better outcomes. The “garbage in, garbage out” principle has never been more relevant.

The Common Failure Patterns

The failures were equally instructive:

Technology-First Approach: Many pilots failed by starting with AI capabilities rather than business problems. The fascination with the technology overshadowed practical business needs.

Insufficient Change Management: Technical success often failed to translate to business value due to inadequate user adoption strategies. Building it didn’t mean they would come.

Unrealistic Expectations: The gap between “exponential productivity gains” claims and actual measured results created credibility issues that persist today.

How Enterprises Measure AI ROI Today: The Good, Bad, and Misleading

The current state of AI ROI measurement reveals a troubling disconnect between claims and reality. Here’s how organizations are actually measuring success, and where they’re going wrong. Table 1 below summarizes the dos and don’ts:

| Measurement Approach | Percentage Using | What’s Right | What’s Wrong | Impact on Decision Making |
|---|---|---|---|---|
| Productivity Metrics | 58% claim “exponential gains” | Measurable output increases; clear before/after comparison; quantifiable time savings | Lacks controlled experiments; ignores quality considerations; difficult to isolate AI impact | Often overstated, lacks credibility |
| Cost Reduction Focus | 35-40% of implementations | Easy to quantify; direct bottom-line impact; clear financial metrics | Ignores revenue opportunities; may compromise service quality; short-term perspective | Limits strategic thinking |
| User Satisfaction Surveys | 45-50% of customer-facing AI | Captures user experience; indicates adoption success; qualitative insights | Subjective measurements; no financial correlation; response bias issues | Poor link to business outcomes |
| Technical Performance KPIs | 60-70% of implementations | Objective measurements; continuous monitoring; system optimization focus | Disconnected from business value; technical success ≠ ROI; limited executive relevance | Misaligned with business priorities |
| Revenue Attribution | 25-30% attempt direct linking | Clear business relevance; executive attention; growth-focused mindset | Difficult causal attribution; multiple variable confusion; long measurement cycles | Often inconclusive results |
| Controlled Experiments | <15% use proper controls | Scientific rigor; clear cause-effect relationships; credible results | Complex to implement; resource intensive; slower results | High credibility but rare |

Table 1: AI ROI measurement approaches and where each goes right and wrong

The stark reality: Most organizations are measuring AI success in ways that either overstate benefits or miss the real value creation entirely.

The Right Way to Measure AI ROI: A Framework for Success

Multi-Dimensional Value Assessment

The most successful organizations have moved beyond single-metric ROI calculations to comprehensive value frameworks:

Financial Impact: Direct cost savings and revenue generation with clear attribution methods

Operational Efficiency: Process improvement and time reduction metrics tied to business outcomes

Strategic Value: Market positioning and competitive advantage indicators that drive long-term success

Risk Mitigation: Compliance improvements and error reduction that protect business value
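
To make the four dimensions above concrete, here is a minimal sketch of how they might roll up into a single ROI figure. It uses the standard ROI formula, (benefit - cost) / cost, and then discounts each dimension by how confidently its benefit can be attributed to the AI initiative. The `ValueDimension` class, the confidence weights, and every dollar figure are hypothetical illustrations, not numbers from any engagement or survey.

```python
from dataclasses import dataclass


@dataclass
class ValueDimension:
    name: str
    annual_benefit: float  # estimated annual value in dollars (hypothetical)
    confidence: float      # 0..1: how well the benefit can be attributed to AI (assumed)


def simple_roi(total_benefit: float, total_cost: float) -> float:
    """Standard ROI: (benefit - cost) / cost."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (total_benefit - total_cost) / total_cost


def confidence_weighted_benefit(dimensions: list[ValueDimension]) -> float:
    """Discount each dimension's benefit by its attribution confidence."""
    return sum(d.annual_benefit * d.confidence for d in dimensions)


# Hypothetical figures for a single AI initiative.
dimensions = [
    ValueDimension("Financial impact", 400_000, 0.9),
    ValueDimension("Operational efficiency", 250_000, 0.7),
    ValueDimension("Strategic value", 300_000, 0.3),
    ValueDimension("Risk mitigation", 100_000, 0.5),
]
annual_cost = 500_000

# Headline ROI counts every claimed benefit at face value.
headline_roi = simple_roi(sum(d.annual_benefit for d in dimensions), annual_cost)
# Adjusted ROI counts only the confidence-weighted share of each benefit.
adjusted_roi = simple_roi(confidence_weighted_benefit(dimensions), annual_cost)

print(f"Headline ROI: {headline_roi:.0%}")   # 110%
print(f"Adjusted ROI: {adjusted_roi:.0%}")   # 35%
```

The spread between the two numbers is the point: reporting both shows leadership how much of the claimed value rests on benefits that are hard to attribute.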

Best Practices for Quantifying Success vs. Failure

Controlled Experimentation: Goldman Sachs demonstrated the power of rigorous A/B testing, measuring a credible 20% productivity increase in programming through controlled studies comparing AI-enabled developers against traditional approaches.

Baseline Establishment: Document pre-implementation performance across multiple dimensions, not just the primary success indicator. This creates the foundation for genuine before-and-after analysis.

Quality-Adjusted Productivity: Measure both output volume and quality improvements. If AI helps write blog posts faster but they’re boring and inaccurate, that’s not success—it’s a measurable failure.

Long-term Impact Tracking: Extend measurement periods beyond initial implementation to capture learning curve effects and sustainability of improvements.
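
The controlled-experiment and quality-adjustment practices above can be combined in a small calculation. The sketch below compares a control group against an AI-enabled group, weights each person's output by a quality score, and computes Welch's t statistic as a rough signal of whether the difference is more than noise. All data is invented for illustration, and the 0-to-1 quality score is an assumed convention (for example, the share of work passing review); this is not the method used in any study cited here.

```python
import math
from statistics import mean, variance


def quality_adjusted(outputs, quality_scores):
    """Weight each person's output volume by a 0..1 quality score."""
    if len(outputs) != len(quality_scores):
        raise ValueError("outputs and quality_scores must be the same length")
    return [o * q for o, q in zip(outputs, quality_scores)]


def relative_lift(treatment, control):
    """Relative change in the mean of the treatment group versus control."""
    return (mean(treatment) - mean(control)) / mean(control)


def welch_t(treatment, control):
    """Welch's t statistic for two independent samples with unequal variances."""
    standard_error = math.sqrt(
        variance(treatment) / len(treatment) + variance(control) / len(control)
    )
    return (mean(treatment) - mean(control)) / standard_error


# Hypothetical weekly tasks completed per person, with per-person quality scores.
control_raw = [20, 22, 19, 21, 18, 20]
control_quality = [0.90, 0.85, 0.90, 0.80, 0.95, 0.90]
treatment_raw = [26, 27, 24, 25, 23, 25]
treatment_quality = [0.85, 0.80, 0.90, 0.85, 0.90, 0.80]

control_adj = quality_adjusted(control_raw, control_quality)
treatment_adj = quality_adjusted(treatment_raw, treatment_quality)

raw_lift = relative_lift(treatment_raw, control_raw)
adjusted_lift = relative_lift(treatment_adj, control_adj)
t_stat = welch_t(treatment_adj, control_adj)

print(f"Raw productivity lift:      {raw_lift:.1%}")
print(f"Quality-adjusted lift:      {adjusted_lift:.1%}")
print(f"Welch t statistic (adjusted): {t_stat:.2f}")
```

In this made-up data the raw lift overstates the gain because the AI-enabled group's quality scores are slightly lower, so the quality-adjusted lift is smaller. With groups this small the t statistic is only indicative, and a real study would need larger samples and random assignment.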

Identifying High-ROI POCs: Where Most Enterprises Go Wrong

The Common Mistakes

Technology Push vs. Business Pull: Organizations start with “What can we do with ChatGPT?” instead of “What business problems need solving?”

Scale Mismatch: Selecting problems either too narrow to demonstrate significant value or too broad to manage effectively

Data Readiness Assumptions: Underestimating the data preparation and quality requirements for successful AI implementation

The Right Selection Criteria

Clear Value Quantification: Choose use cases where success can be measured in specific business terms—cost reduction, time savings, error reduction, revenue increase.

Manageable Scope: Focus on processes that can be contained, measured, and iterated upon without affecting critical business operations.

Data Availability and Quality: Ensure sufficient, clean, relevant data exists or can be readily obtained.

Stakeholder Alignment: Select areas where business users are engaged champions rather than reluctant participants.

Best Practices for Scaling Successful POCs

The Identification Framework

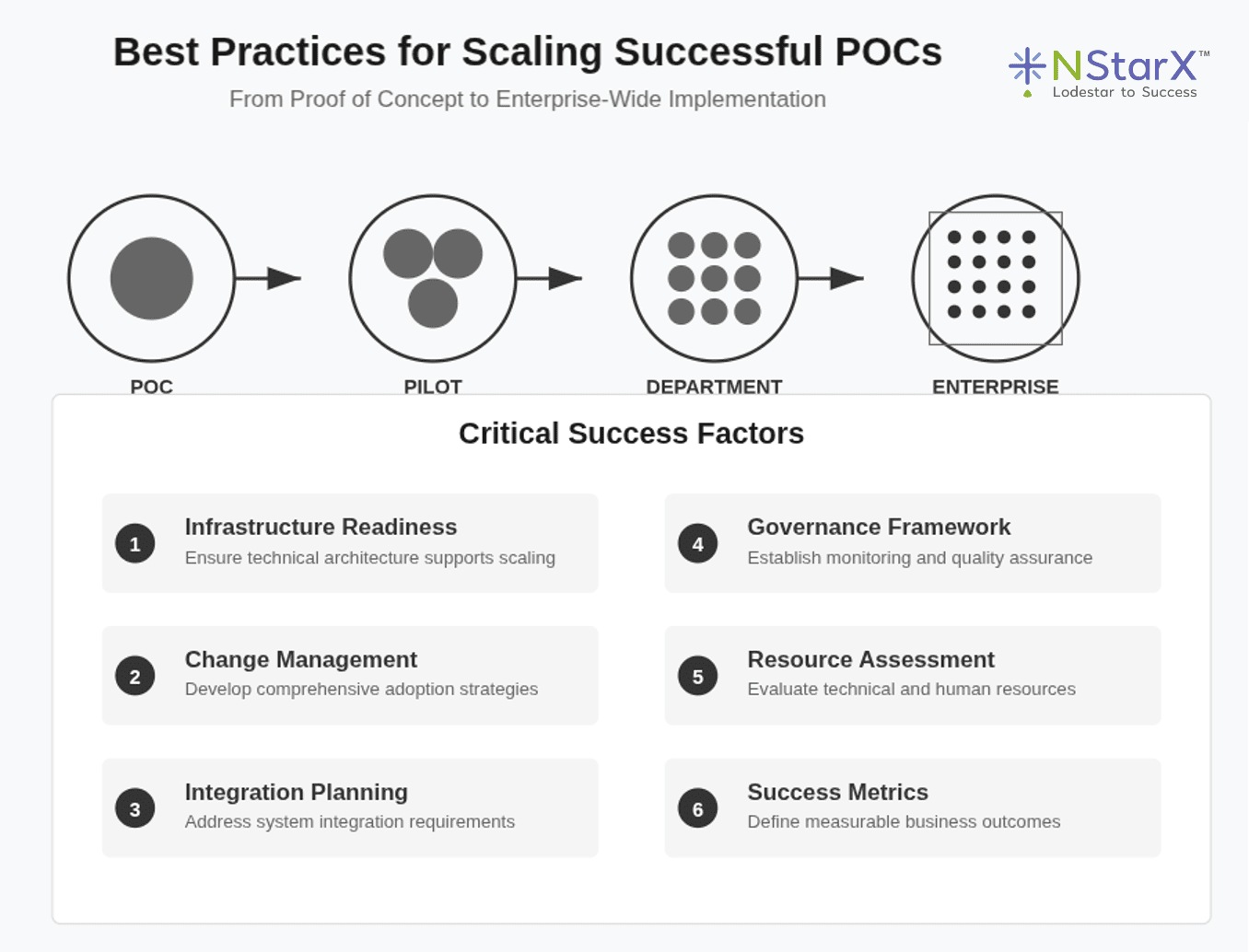

Let us look at a pictorial representation (Figure 1) on what every organizations/enterprises should explore:

Figure 1: Best practices for successfully scaling Pilots

Process Mining Approach: Use data analytics to identify processes with high volume, clear patterns, and measurable inefficiencies.

Business Impact Assessment: Prioritize use cases based on potential financial impact, implementation feasibility, and strategic alignment.

Resource Requirement Analysis: Evaluate required technical infrastructure, data preparation, and change management resources.
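
One simple way to put the business impact assessment into practice is a weighted scoring model. The sketch below ranks candidate use cases on the three criteria named above: financial impact, implementation feasibility, and strategic alignment. The weights, the 1-to-5 scale, and the example use cases are all assumptions chosen for illustration, and each organization would set its own.

```python
from dataclasses import dataclass

# Assumed weights; they should sum to 1 and reflect the organization's priorities.
WEIGHTS = {
    "financial_impact": 0.5,
    "feasibility": 0.3,
    "strategic_alignment": 0.2,
}


@dataclass
class UseCase:
    name: str
    financial_impact: int     # 1 (low) to 5 (high)
    feasibility: int          # 1 (hard to implement) to 5 (easy)
    strategic_alignment: int  # 1 (weak) to 5 (strong)


def priority_score(use_case: UseCase, weights: dict = WEIGHTS) -> float:
    """Weighted sum of the criterion scores."""
    return sum(getattr(use_case, criterion) * w for criterion, w in weights.items())


def rank_use_cases(use_cases: list[UseCase]) -> list[UseCase]:
    """Highest-priority use case first."""
    return sorted(use_cases, key=priority_score, reverse=True)


# Hypothetical candidates and scores.
candidates = [
    UseCase("Invoice processing automation", 4, 5, 3),
    UseCase("Customer support assistant", 5, 3, 4),
    UseCase("Autonomous agent orchestration", 5, 1, 5),
]

ranked = rank_use_cases(candidates)
for uc in ranked:
    print(f"{priority_score(uc):.1f}  {uc.name}")
```

Note how the most ambitious candidate ranks last once feasibility is weighted in. The weights make that trade-off explicit and open to debate rather than leaving it implicit in the selection.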

The Scaling Imperative

Infrastructure Readiness: Ensure technical architecture can support expanded implementation without performance degradation.

Change Management Preparation: Develop comprehensive training and adoption strategies before scaling beyond pilot users.

Integration Planning: Address how AI solutions will integrate with existing business processes and systems.

Governance Framework: Establish monitoring, compliance, and quality assurance processes for scaled implementations.

What We’ve Learned: Redefining the AI Approach for 2026

The Reality Check from 2024-2025

Hype vs. Reality Gap: The initial optimism has given way to more realistic expectations. Claims of “exponential productivity gains” have been replaced by more measured assessments of actual impact.

Cultural Transformation Requirements: Technology implementation alone is insufficient. With 92% of respondents identifying cultural and change management challenges as primary barriers, the human element has proven more complex than the technical one.

Unstructured Data Renaissance: The focus has shifted back to managing unstructured data, with organizations realizing that most AI value comes from processing text, images, and documents rather than traditional structured data.

Strategic Redirections for Success

From Experimentation to Systematic Deployment: Moving beyond pilot programs to integrated business process transformation

From Technology-Centric to Business-Outcome Focused: Prioritizing use cases based on business value rather than technological sophistication

From Individual Tools to Ecosystem Thinking: Considering how AI initiatives integrate across the entire business ecosystem

The Future of AI ROI Measurement: What’s Coming Next

Emerging Measurement Paradigms

Real-time Impact Assessment: Advanced analytics platforms will enable continuous ROI monitoring, allowing for immediate course corrections and optimization.

Ecosystem-Level Value Measurement: Moving beyond individual project ROI to measuring AI’s impact across interconnected business processes and partner networks.

Adaptive Learning Integration: ROI measurement systems that improve their accuracy over time by learning from previous assessments and outcomes.

Preparing for Dynamic AI Landscapes

Flexible Measurement Frameworks: Developing ROI assessment approaches that can adapt to rapidly evolving AI capabilities and use cases.

Cross-Industry Benchmarking: Establishing industry-specific ROI baselines for more meaningful performance comparison.

Ethical and Social Impact Integration: Incorporating broader societal and ethical considerations into ROI calculations as AI becomes more pervasive.

The Path Forward: Building Sustainable AI Value

The transition from AI experimentation to systematic value creation requires fundamental shifts in approach. Success in 2026 will depend on enterprises’ ability to move beyond pilot fatigue and implement rigorous, business-focused AI initiatives with clear value measurement.

The evidence is clear: organizations achieving real AI ROI share common characteristics. They start with business problems rather than technological capabilities. They implement rigorous measurement frameworks. They invest in change management. They maintain realistic expectations about implementation timelines and outcomes.

As one CIO recently observed, “Our job is to think about technology and introduce technology, but we’re really here to solve business challenges. We need to train the organization to leverage AI to solve business problems, not just to create something new.”

The competitive advantage in 2026 will belong to organizations that can systematically identify, implement, measure, and scale AI initiatives that deliver genuine business value. This requires not just technological sophistication, but organizational maturity in change management, measurement discipline, and strategic patience.

The future belongs to enterprises that can bridge the gap between AI’s technical possibilities and business realities through systematic, measurable, and scalable approaches to value creation. The question isn’t whether AI will transform business—it’s whether your organization will be among those that capture that transformation as measurable, sustainable competitive advantage.

References:

- Davenport, Thomas H., and Randy Bean. “Five Trends in AI and Data Science for 2025.” MIT Sloan Management Review, January 2025.

- Stackpole, Beth. “State of the CIO, 2025: CIOs set the AI agenda.” CIO Magazine, May 2025.

- Bean, Randy. “2025 AI & Data Leadership Executive Benchmark Survey.” Data & AI Leadership Exchange, 2025.

- Goldman Sachs. “Generative AI Productivity Study: Programming Applications.” Internal Research Report, 2024.

- Acemoglu, Daron. “The Economics of AI and Productivity.” Nobel Prize Economics Commentary, 2024.

- UiPath. “IT Leaders and Agentic AI Survey.” Market Research Report, 2025.