Why Video Streaming Has Boomed, and Why It Still Feels Broken

Video streaming is arguably the dominant medium of our era. With over 3.5 billion users globally and exponential growth in platforms like YouTube, Netflix, Twitch, and enterprise VOD platforms, video has become the default mode of communication, storytelling, advertising, education, and commerce. From binge-worthy content to Zoom calls and e-learning modules, video defines how we consume and convey information.

Yet beneath this glossy veneer, cracks are visible. The system feels bloated, expensive, inefficient, and, most importantly, impersonal. The long tail of content is underutilized. Personalization is hit-or-miss. Localization is an expensive afterthought. Content moderation and compliance drain time and resources. For creators, marketers, and operations teams, the promise of seamless video streaming is often derailed by practical limitations.

Where Streaming Is Broken for Enterprises (B2B and B2C): Some Examples

In B2B (Enterprise, Corporate Comms, Learning):

- Training and internal videos are costly and hard to update

- Global localization is sluggish and costly

- Metadata tagging and compliance checks are manual

- Discovery and reusability of archived content is abysmal

In B2C (OTT, Sports, News):

- Recommendations are too basic, failing to capture user intent

- Editing highlights or trailers requires hours of manual effort

- Live content lacks intelligent augmentation (e.g., overlays, translation)

- Ad production cycles can’t keep up with campaign agility

These problems translate to real pain points:

- Missed monetization from untapped archives

- High churn from poor engagement

- High OPEX in editing, compliance, and distribution

- Delayed time-to-market for new campaigns or shows

So, what are the limitations of traditional technologies?

Despite significant investment, many legacy streaming architectures are built for a pre-AI world:

- Rule-based recommendation engines can’t handle complex user queries

- Manual subtitling or dubbing introduces lag and cost

- Traditional asset management systems don’t semantically understand content

- Hard-coded workflows don’t adapt in real-time to context or audiences

Moreover, content teams are often forced to stitch together workflows across MAMs (media asset management systems), CDNs, DAMs (digital asset management systems), transcription services, translation vendors, and editorial platforms, which slows them down dramatically.
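Much of this stitching comes down to small glue steps between vendors. The sketch below shows one such step. It assumes a transcription and translation service has already returned timed, translated segments and renders them as an SRT subtitle file, a widely supported plain-text caption format. The `Segment` shape and the function names are hypothetical and are not tied to any particular vendor's API.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Segment:
    """One timed caption segment (hypothetical shape, e.g. from a transcription + translation step)."""
    start_s: float  # start time in seconds
    end_s: float    # end time in seconds
    text: str       # caption text, already translated


def srt_timestamp(seconds: float) -> str:
    """Format seconds as an SRT timestamp: HH:MM:SS,mmm."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def to_srt(segments: List[Segment]) -> str:
    """Render segments as SRT cues: index line, time range, text, then a blank line between cues."""
    cues = []
    for i, seg in enumerate(sorted(segments, key=lambda s: s.start_s), start=1):
        if seg.end_s <= seg.start_s:
            raise ValueError(f"cue {i} has a non-positive duration")
        cues.append(
            f"{i}\n{srt_timestamp(seg.start_s)} --> {srt_timestamp(seg.end_s)}\n{seg.text}\n"
        )
    return "\n".join(cues)


if __name__ == "__main__":
    translated = [
        Segment(1.0, 3.5, "Hola a todos."),
        Segment(3.5, 6.25, "Bienvenidos al resumen del partido."),
    ]
    print(to_srt(translated))
```

The rendering step is deterministic, so any remaining quality risk sits in the upstream transcription and translation.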

Enter LLMs! Why Generative AI and LLMs Are a Game-Changer

Generative AI, especially in the form of Large Language Models (LLMs), brings a breakthrough layer of intelligence and adaptability to the video ecosystem. Here’s how:

- Auto-summarization: Create real-time summaries or “previously on…” segments from raw footage.

- Multi-language support: Translate, dub, or subtitle content dynamically with tools like ElevenLabs and Papercup.

- Semantic search and discovery: Let users find content using conversational queries (“Find scenes where a character loses hope in Season 2”).

- Contextual personalization: Move beyond click history to match user mood, behavior, and micro-genres.

- Script and storyboard generation: Help creative teams ideate, script, and visualize content faster.

- Ad variant generation: Automatically generate, test, and optimize ad creatives at scale.

These tools don’t just cut costs; they also increase creative and operational velocity.
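Of the capabilities listed above, semantic search is the simplest to illustrate. A common approach uses vector embeddings. An embedding model maps both the conversational query and each scene description to vectors, and scenes are ranked by cosine similarity. The sketch below assumes those embeddings have already been computed. The three-dimensional vectors are toy stand-ins, since real embedding models produce hundreds or thousands of dimensions. It also assumes each scene carries a `season` metadata field, a hypothetical schema that lets a query such as "in Season 2" be handled as a plain filter.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple


@dataclass
class Scene:
    scene_id: str
    season: int
    description: str
    embedding: List[float]  # assumed to come from an embedding model, computed offline


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two vectors of equal length."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search(query_embedding: Sequence[float], scenes: List[Scene],
           season: Optional[int] = None, top_k: int = 3) -> List[Tuple[str, float]]:
    """Rank scenes by similarity to the query, optionally filtered by season metadata."""
    candidates = [s for s in scenes if season is None or s.season == season]
    scored = [(s.scene_id, cosine(query_embedding, s.embedding)) for s in candidates]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


scenes = [
    Scene("s2e04-sc12", 2, "Captain walks out after the loss", [0.9, 0.1, 0.0]),
    Scene("s2e07-sc03", 2, "Team celebrates the title", [0.1, 0.9, 0.1]),
    Scene("s1e02-sc05", 1, "Quiet argument in the kitchen", [0.5, 0.2, 0.6]),
]
# Toy vector standing in for the embedded query "scenes where a character loses hope".
query = [0.8, 0.0, 0.2]
print(search(query, scenes, season=2))
```

This linear scan works for a small catalogue. At archive scale, teams typically move the same ranking into an approximate-nearest-neighbour index or a vector database.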

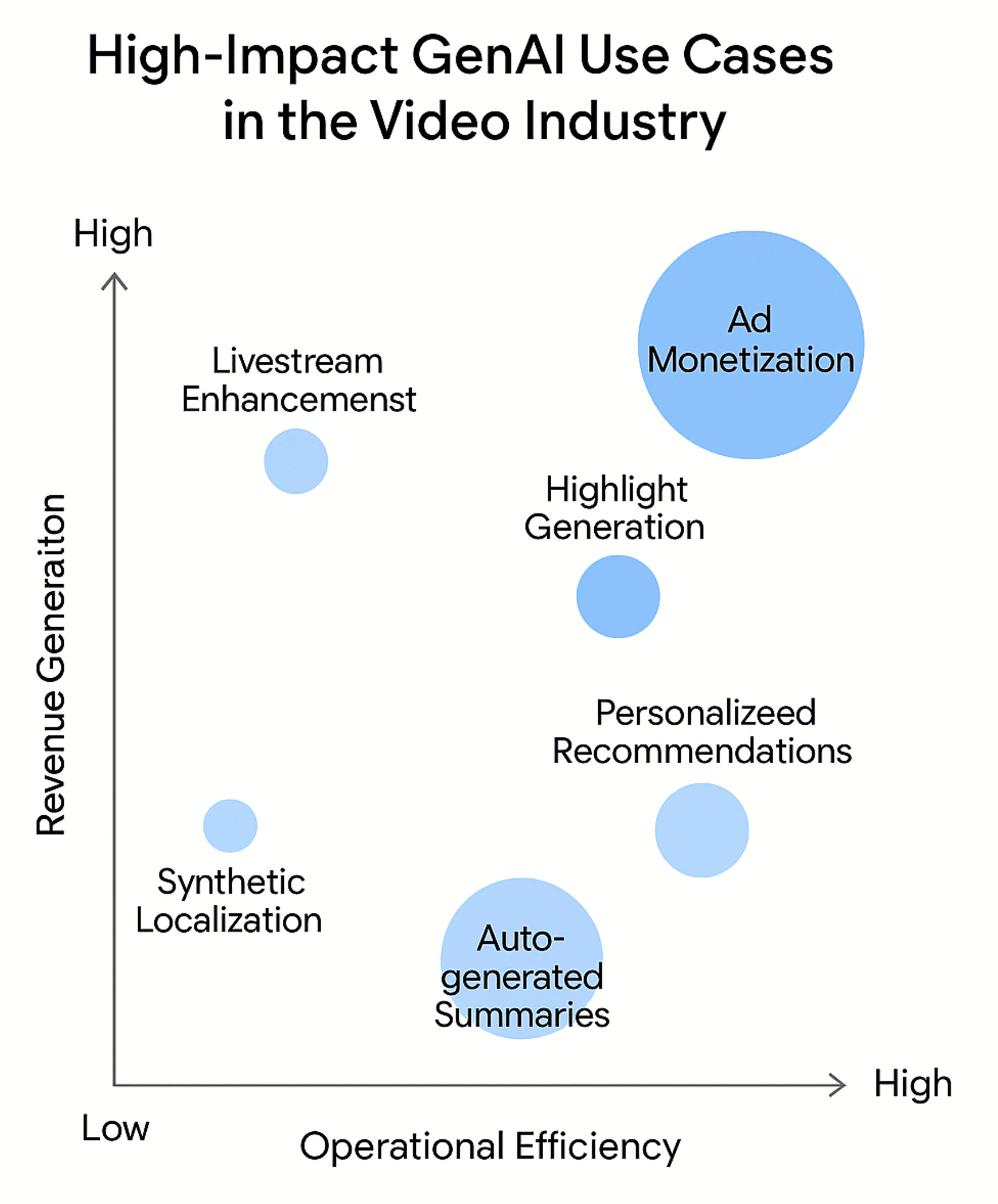

Potential High-Impact Use Cases for Revenue and Efficiency

| Use Case | Impact on Revenue | Impact on Efficiency |
|---|---|---|
| AI-Generated Ad Variants | Shortens go-to-market; improves campaign performance | Reduces cost of creatives by 80–90% |
| Automated Video Highlighting | Increases viewer retention; improves monetization on clips | Cuts post-production effort by up to 70% |
| Real-time Summarization/Recaps | Boosts engagement and accessibility | Frees editorial bandwidth; reduces manual scripting |
| Multilingual Subtitling/Dubbing | Expands audience across global regions | Accelerates localization; reduces translator dependencies |
| Semantic Search & Content Recall | Drives upsell of archives; boosts time-on-platform | Reduces search latency and metadata curation needs |
| Compliance & Moderation Tagging | Avoids legal fines; protects brand reputation | Cuts down legal review cycle by over 60% |

Table 1: Use cases likely to shape the future of the video industry

Picture 1: Sample representation of use cases that can generate revenue and operational efficiencies
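The automated highlighting row in Table 1 usually involves two steps. First, a model scores short segments for how "highlight-worthy" they are. Second, a selection step assembles a reel under a length budget. The sketch below covers only the second step and assumes the scores already exist. The `Clip` type and the scoring source are hypothetical. The method is a simple greedy heuristic: it picks the highest-scoring clips that fit the budget and do not overlap. It is not an optimal knapsack solver.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Clip:
    start_s: float
    end_s: float
    score: float  # highlight-worthiness from an upstream model (assumed)

    @property
    def duration(self) -> float:
        return self.end_s - self.start_s


def overlaps(a: Clip, b: Clip) -> bool:
    """True if the two clips share any playback time."""
    return a.start_s < b.end_s and b.start_s < a.end_s


def select_highlights(clips: List[Clip], budget_s: float) -> List[Clip]:
    """Greedy: take clips in descending score order if they fit the remaining
    budget and do not overlap a chosen clip; return them in playback order."""
    chosen: List[Clip] = []
    used = 0.0
    for clip in sorted(clips, key=lambda c: c.score, reverse=True):
        if used + clip.duration > budget_s:
            continue
        if any(overlaps(clip, c) for c in chosen):
            continue
        chosen.append(clip)
        used += clip.duration
    return sorted(chosen, key=lambda c: c.start_s)


if __name__ == "__main__":
    scored = [Clip(0, 20, 0.4), Clip(30, 45, 0.9), Clip(40, 60, 0.8),
              Clip(100, 130, 0.7), Clip(200, 210, 0.2)]
    for c in select_highlights(scored, budget_s=60):
        print(f"{c.start_s:>6.1f}s to {c.end_s:>6.1f}s  score={c.score}")
```

An editor would still review and trim the resulting reel. The heuristic only produces a fast first cut.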

Let us look at some real-world examples of GenAI in video today

1. Kalshi NBA Finals Ad (2025)

- AI-scripted, visualized, and edited ad aired to millions

- Cost: $2,000 | Time: 3 days | Reach: 20M

2. Moments Lab (MXT-2)

- Used by Warner Bros and Hearst

- Automates highlight creation and indexing

3. Amazon Prime “AI Topics”

- Groups and recommends content based on themes, not just genres

4. Warner Bros Discovery Cycling Intelligence

- Adds AI overlays and commentary to live events

5. Synthesia AI Avatars

- Used by 60% of the Fortune 100 for scalable employee training and internal comms

6. Meta “MovieGen”, OpenAI “Sora”, RunwayML

- Text-to-video tools powering the next generation of AI-native content

Beyond the hype: understanding the limits of today’s GenAI and LLMs

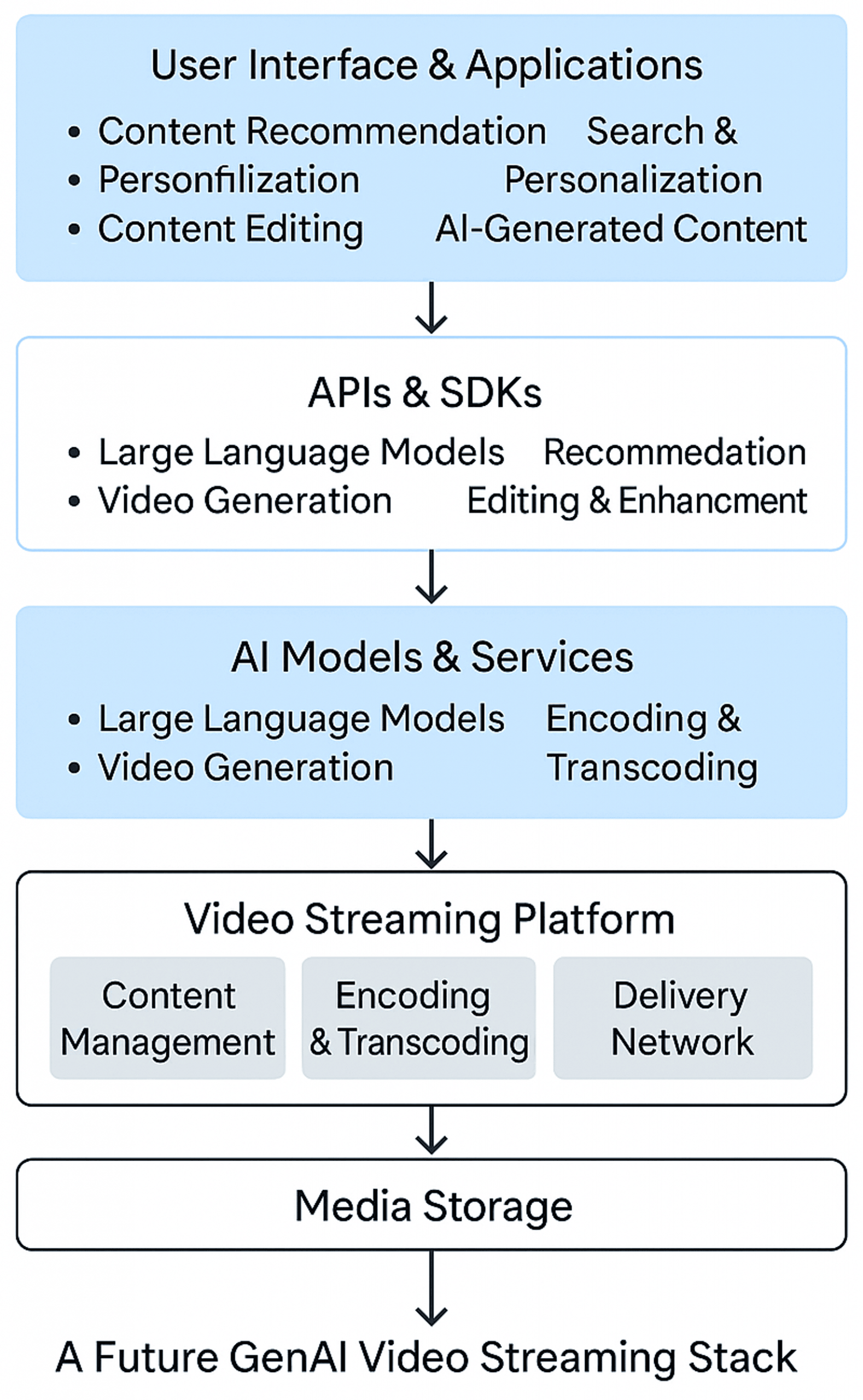

Here is a very high-level sample representation of a video streaming GenAI stack. It will need to be fleshed out in detail when each use case is designed at a granular level.

Picture 2: High Level Video Streaming GenAI Architecture

While promising, GenAI tools today have real constraints:

- Accuracy Gaps: AI can hallucinate or misinterpret tone/context

- Legal & IP Ambiguity: Who owns AI-generated content? Who is liable?

- Latency & Scale: Real-time pipelines using LLMs can get expensive and hard to scale

- Lack of Emotional Depth: AI struggles to grasp nuance, irony, and emotional arcs

- Bias & Ethics: AI models may reinforce stereotypes or inappropriate patterns from training data

NStarx believes we need the best of both worlds: Humans + AI (let 1 + 1 resonate to 11)

Enterprises should view AI not as a replacement but as a collaborator:

- Use AI for first drafts, then let humans refine tone, cultural cues, and style

- Establish review workflows where AI flags issues but humans approve

- Build feedback loops where humans correct AI, improving models over time

- Employ ethics teams to define what’s acceptable and brand-safe

This symbiosis boosts speed without compromising on trust or creativity.
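The review pattern above can be made concrete as a small state model. The sketch below rests on a few assumptions: every AI output enters a review state, AI-raised flags only change a reviewer's queue priority, and nothing becomes publishable without an explicit human decision. Human edits are recorded as (AI draft, human version) pairs, which a team could later use for evaluation or fine-tuning. All names here are hypothetical and do not describe any specific product.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional, Tuple


class Status(Enum):
    NEEDS_REVIEW = "needs_review"  # every AI output starts here; nothing ships unreviewed
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class Asset:
    asset_id: str
    ai_text: str                                       # AI first draft (subtitle, recap, ad copy...)
    ai_flags: List[str] = field(default_factory=list)  # issues the AI raised for a human to check
    status: Status = Status.NEEDS_REVIEW
    reviewer: Optional[str] = None
    final_text: Optional[str] = None
    # (ai_text, human_text) pairs: raw material for a feedback loop
    corrections: List[Tuple[str, str]] = field(default_factory=list)


def review_queue(assets: List[Asset]) -> List[Asset]:
    """Assets awaiting a human decision, with the most-flagged first."""
    pending = [a for a in assets if a.status is Status.NEEDS_REVIEW]
    return sorted(pending, key=lambda a: len(a.ai_flags), reverse=True)


def decide(asset: Asset, reviewer: str, approve: bool,
           edited_text: Optional[str] = None) -> None:
    """Record a human decision. The AI can flag, but only this step approves."""
    if asset.status is not Status.NEEDS_REVIEW:
        raise ValueError(f"{asset.asset_id} has already been decided")
    asset.reviewer = reviewer
    if not approve:
        asset.status = Status.REJECTED
        return
    asset.final_text = asset.ai_text if edited_text is None else edited_text
    if asset.final_text != asset.ai_text:
        asset.corrections.append((asset.ai_text, asset.final_text))
    asset.status = Status.APPROVED


def publishable(asset: Asset) -> bool:
    return asset.status is Status.APPROVED
```

The design choice is that approval is a separate, human-only transition. Improving the AI can therefore reduce reviewer effort without ever bypassing review.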

The true test of any hype is production-grade deployment: Challenges & Best Practices

Core Challenges:

- Integration Complexity: Many GenAI tools lack robust APIs

- Data Readiness: Inconsistent metadata and fragmented content stores

- Change Resistance: Creators may resist “AI-generated anything”

- Security & Governance: Concerns around prompt injection, misuse, and compliance

Best Practices:

1. Start Small: Pilot one or two use cases like auto-highlighting or summaries

2. Use Modular Agents: Avoid black-box monoliths; use composable GenAI components

3. Tag and Taxonomize: Structured metadata improves AI utility

4. Build Governance: Set up an AI Review Board and version-control prompts

5. Train Creators: Help editors, marketers, and analysts work with AI, not against it
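The review board in practice 4 is an organizational structure, but the "version-control prompts" part can be sketched in code. Many teams simply keep prompts in Git. The sketch below shows the same idea inside an application, using a hypothetical append-only registry. Each edit to a prompt creates a new numbered version with a SHA-256 content hash, and old versions stay retrievable. Any generated recap or ad variant can then be logged with the exact prompt version that produced it.

```python
import hashlib
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class PromptVersion:
    name: str
    version: int
    template: str
    sha256: str  # content hash, so audit logs can show exactly which text was used


class PromptRegistry:
    """Append-only prompt store (hypothetical): edits create new versions, old ones stay retrievable."""

    def __init__(self) -> None:
        self._history: Dict[str, List[PromptVersion]] = {}

    def publish(self, name: str, template: str) -> PromptVersion:
        digest = hashlib.sha256(template.encode("utf-8")).hexdigest()
        history = self._history.setdefault(name, [])
        if history and history[-1].sha256 == digest:
            return history[-1]  # unchanged text: no new version
        pv = PromptVersion(name, len(history) + 1, template, digest)
        history.append(pv)
        return pv

    def latest(self, name: str) -> PromptVersion:
        return self._history[name][-1]

    def get(self, name: str, version: int) -> PromptVersion:
        history = self._history[name]
        if not 1 <= version <= len(history):
            raise KeyError(f"{name} has no version {version}")
        return history[version - 1]


if __name__ == "__main__":
    registry = PromptRegistry()
    registry.publish("recap", "Summarize this episode in 3 sentences: {transcript}")
    v2 = registry.publish("recap", "Summarize this episode in 3 spoiler-free sentences: {transcript}")
    # Store (name, version, sha256) alongside every generated recap for later audit.
    print(v2.name, v2.version, v2.sha256[:12])
```

Rolling back means republishing an older template. This records a new version rather than rewriting history, which keeps the audit trail intact.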

So, what would the future of video look like in the GenAI era?

If LLMs and GenAI become foundational in streaming workflows:

- Content creation democratizes: every producer can create multilingual video content

- Advertising becomes dynamic: campaigns are AI-personalized per viewer cohort

- Discoverability explodes: semantic search and conversational interfaces dominate

- Live experiences become intelligent, with real-time overlays, summaries, and adaptation

- Production costs drop, while variety and personalization increase exponentially

In short: a faster, smarter, more inclusive video future.

Conclusion

The video streaming industry is at a transformative inflection point. Legacy tools and workflows are no longer sufficient to meet the demands of global, real-time, personalized video consumption. Generative AI and Large Language Models present a historic opportunity, not just to automate tasks, but to reimagine how video is created, managed, localized, and monetized.

For enterprises, the time to act is now. By embracing GenAI with clear goals, ethical frameworks, and a human-in-the-loop approach, video streaming organizations can unlock faster production cycles, deeper audience engagement, and stronger bottom-line impact. The pioneers of this shift will not only lead in innovation but will also redefine what storytelling, communication, and media look like in the AI-native era.

References

- Business Insider: Kalshi AI NBA Ad [2025]

- The Verge: Amazon Prime “AI Topics”

- TVTechnology: Warner Bros AI Livestream Commentary

- Moments Lab MXT-2 Pitch Deck

- Meta MovieGen: https://about.fb.com/news/2024/11/meta-ai-generative-video-moviegen/

- OpenAI Sora: https://openai.com/sora

- Runway Gen-3: https://research.runwayml.com/gen3

- Synthesia and Papercup Use Cases: https://www.synthesia.io/ and https://www.papercup.com/blog/ai-dubbing-in-media

- Vimeo CEO AI Strategy Commentary