Author: The NStarX Engineering team has been working with vibe coding and identifying ways to help customers go AI-native in their software journey. Here the team comes together to share its thoughts on this important topic.

Executive Summary

The era of AI-native teams has arrived, fundamentally transforming how organizations build, deploy, and scale software. As we navigate through 2025, companies face a critical inflection point: adapt their talent models to embrace AI augmentation or risk falling irreversibly behind. This comprehensive guide explores the operating models, roles, and practices that distinguish successful AI-native organizations from those struggling with implementation failures.

1. Introduction: Modern Software Engineering in the Age of Agents

The Paradigm Shift

Software development in 2025 looks radically different from even two years ago. Traditional software development teams were organized in rigid, siloed structures with work handed off from one role to the next, but today’s AI-native teams are designed for continuous collaboration and adaptability across both discovery and delivery.

The shift has been building for decades. As the digital era launched in the 1990s, industrial-age principles began to crumble with the rise of computing. Today, approximately 5.8 percent of the US population is employed in tech jobs, and speed and customer access have become the keys to competitive advantage. The emergence of AI agents has accelerated this transformation further.

What Defines an AI-Native Team?

An AI-native team isn’t simply a traditional team using AI tools—it represents a fundamental reimagining of how work gets done. These teams are characterized by:

Human-AI Collaboration Architecture: In the agentic organization, humans sit above the loop to steer and direct outcomes, and selectively within the loop where human contact matters. Workflows are designed AI-first, with humans and IT systems selectively reintroduced.

Flat, Adaptive Structures: Winning operating models empower agentic teams with flat decision and communication structures that operate with high context sharing and alignment across agentic teams, with organization charts pivoting toward agentic networks or work charts based on exchanging tasks and outcomes.

Small, Cross-Functional Pods: A typical AI-native development pod includes just three to five people: a Product Strategist to define direction, an Engineer to orchestrate execution, and a QA lead to embed quality from the start. Designers and AI specialists rotate in depending on project needs.

The shift is profound. Where traditional teams might have 8-12 people with multiple handoff layers, AI-native pods deliver more with less through tighter feedback cycles and AI amplification.

2. Real-World Examples: Success Stories and Cautionary Tales

Companies Building Successful AI-Native Teams

OpenAI: The Pioneer Model

OpenAI’s annualized revenue hit $10 billion by June 2025, nearly doubling from $5.5 billion in December 2024, with projections of $12.7 billion in full-year revenue for 2025. The company has formed over 120 strategic partnerships with enterprises and government bodies, demonstrating the power of a well-executed AI-native operating model.

OpenAI’s success stems from several key factors:

- Rapid iteration cycles: Models are updated monthly or more frequently

- Cross-functional AI-first teams: Research, engineering, and safety work in tight collaboration

- Product-oriented structure: Prioritizes shipping production-ready capabilities

Anthropic: Safety-First at Scale

Anthropic’s revenue soared from $10 million in 2022 to $1 billion in 2024, with projections at $2.2 billion in 2025—a 220× growth over three years. The company incorporated itself as a Delaware public-benefit corporation, enabling directors to balance stockholders’ financial interests with its public benefit purpose.

Anthropic’s approach demonstrates that AI-native doesn’t mean sacrificing rigor:

- Constitutional AI framework: Embedded in team workflows

- Safety research: Integrated into product development from day one

- Iterative improvement cycles: Continuous model refinement

Healthcare Leaders: Kaiser Permanente & Mayo Clinic

Kaiser Permanente deployed Abridge’s ambient documentation solution across 40 hospitals and 600+ medical offices, marking the largest generative AI rollout in healthcare history and Kaiser’s fastest implementation of a technology in over 20 years. Mayo Clinic is investing more than $1 billion in AI over the next few years across more than 200 projects that go beyond administrative automation to include diagnostics and patient care.

These organizations succeeded by:

- Starting with low-stakes administrative pilots to build expertise

- Prioritizing production-ready solutions that perform reliably at scale

- Establishing clear evaluation frameworks before deployment

The Failure Landscape: Why 80-95% of AI Projects Don’t Make It

The statistics are sobering and demand attention:

According to S&P Global Market Intelligence’s 2025 survey of over 1,000 enterprises across North America and Europe, 42% of companies abandoned most of their AI initiatives this year—a dramatic spike from just 17% in 2024, with the average organization scrapping 46% of AI proof-of-concepts before they reached production.

MIT estimates that 95% of generative AI pilots fail, often due to brittle workflows and misaligned expectations, with RAND noting failure rates of up to 80%—nearly double that of non-AI IT projects.

Root Causes of Failure

1. Organizational Structure Misalignment

The global CDO Insights 2025 survey identifies the top obstacles as data quality and readiness (43%), lack of technical maturity (43%), and shortage of skills and data literacy (35%).

2. The Learning Gap

The MIT report discovered a “learning gap”—people and organizations simply did not understand how to use AI tools properly or how to design workflows that could capture the benefits of AI while minimizing downside risks.

3. Data Infrastructure Deficits

Informatica’s CDO Insights 2025 survey shows winning programs invert typical spending ratios, earmarking 50-70% of the timeline and budget for data readiness—extraction, normalization, governance metadata, quality dashboards, and retention controls.

4. Lack of Clear Business Value

Gartner predicts at least 30% of generative AI projects will be abandoned after proof of concept by the end of 2025, due to poor data quality, inadequate risk controls, escalating costs, or unclear business value.

Notable Case Study: Air Canada

In 2024, a Canadian tribunal ordered Air Canada to compensate a customer after its chatbot gave misleading information on bereavement fares. The incident highlights the critical importance of proper governance and testing before deployment.

3. The Right Recipe for Success: Operating Models That Work

The Core Framework: Roles, Runbooks, and Ladders

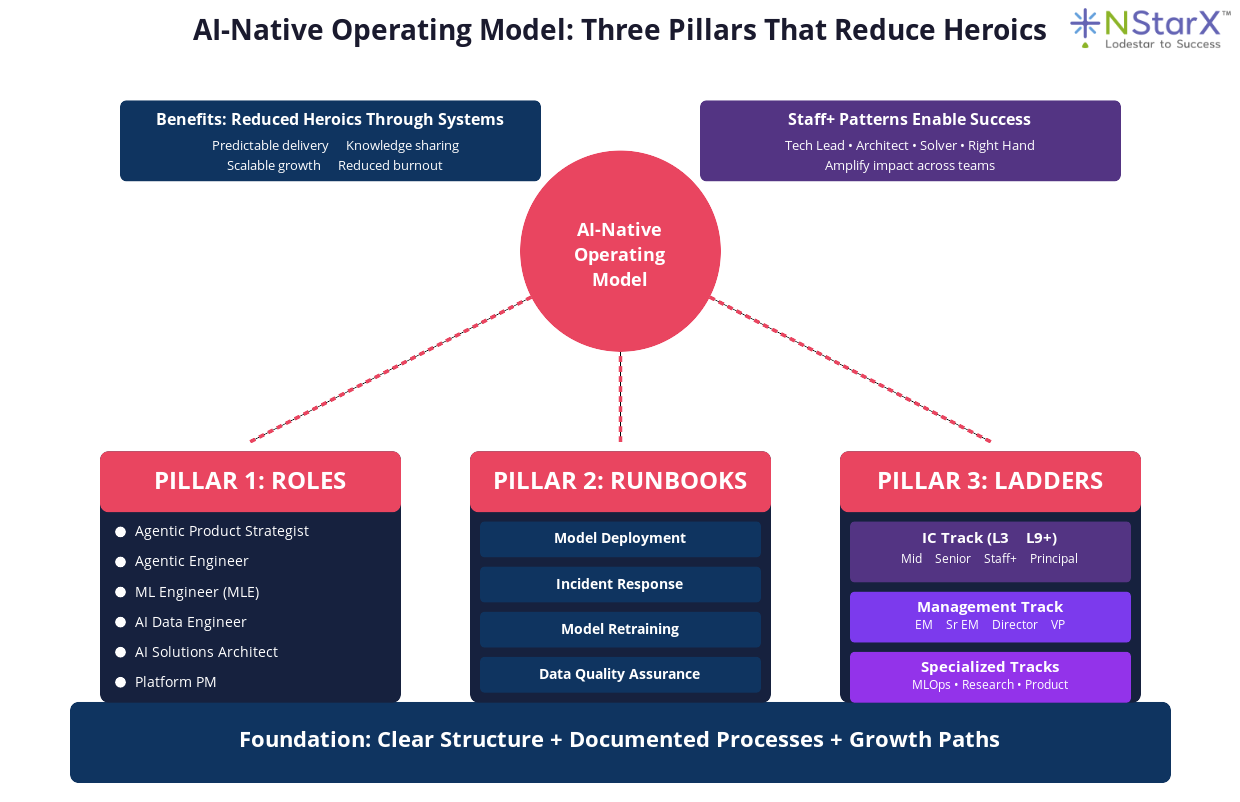

Let us look at how NStarX team looks at the AI-Native Operating model in the Figure 1 below:

Figure 1: AI Native Operative Model

Building an effective AI-native team requires three interconnected pillars:

Pillar 1: Clearly Defined Roles (Beyond Traditional Job Titles)

Agentic Product Strategist

The Agentic Product Strategist is the connective tissue between business intent and AI-powered execution. The role defines what success looks like and uses AI to pressure-test that definition before a single line of code is written, exploring product ideas by prompting AI models with user feedback, competitor insights, and strategic goals.

Key responsibilities:

- Translating business objectives into AI-feasible product strategies

- Conducting AI-assisted market analysis and user research

- Defining success metrics that account for AI uncertainty

- Managing the product backlog with AI-driven prioritization

Agentic Engineer

The Agentic Engineer blends software development expertise with AI orchestration. The human role shifts from execution to orchestration: shaping workflows by setting constraints and reviewing outputs for alignment and quality.

This role requires:

- Prompt fluency: Crafting effective instructions for AI systems

- Architecture thinking: Designing human-AI collaboration patterns

- Quality assurance: Validating AI-generated code and outputs

- Tooling expertise: Managing the AI development stack

Machine Learning Engineer (MLE)

ML engineers move ML solutions into production and optimize the environment for performance and scalability. They serve as the connecting fabric between data scientists and IT operations, ensuring ML models run well in production.

Critical skills include:

- Model deployment and serving infrastructure

- Performance optimization and resource management

- Monitoring and observability implementation

- Production pipeline automation

AI Data Engineer

AI data engineers design and maintain scalable data pipelines and architectures, ensuring that AI models operate on high-quality, structured data essential for accurate predictions and automation, managing ETL processes to integrate data from multiple systems.

AI Solutions Architect

Once you’ve got more than one pod running or your agents are coordinating across multiple workflows, the AI Solutions Architect designs how deep learning models, neural networks, and machine learning algorithms fit together across tools and stages.

Platform Product Manager

The Platform PM plays a unique role in AI-native organizations:

- Building internal tools and platforms that accelerate team velocity

- Defining APIs and interfaces for AI service consumption

- Measuring developer productivity and platform adoption

- Creating documentation and enablement resources

AI Ethicist/Governance Lead

The AI Ethicist ensures that AI is developed and used ethically and responsibly. The role integrates ethics into AI models and algorithms, promotes responsible AI practices, and works to identify and mitigate bias in AI systems.

Pillar 2: Runbooks That Reduce Heroics

Successful AI-native teams rely on documented, repeatable processes rather than individual heroics. Key runbooks include:

1. AI Model Deployment Runbook

Based on MLOps best practices:

- Pre-deployment checklist (data validation, model testing, integration testing)

- Staged rollout procedures (canary, blue-green, feature flags)

- Rollback protocols with clear trigger conditions

- Post-deployment monitoring requirements
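One way to make "clear trigger conditions" concrete is to express them as code rather than prose. The following is a minimal sketch, not a prescribed implementation: the metric names (`error_rate`, `p95_latency_ms`) and the default thresholds are hypothetical and would need to be set per system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CanaryMetrics:
    """Metrics for one model version during a canary window (hypothetical fields)."""
    error_rate: float       # fraction of failed requests, 0.0-1.0
    p95_latency_ms: float   # 95th percentile inference latency


def rollback_triggers(baseline: CanaryMetrics, canary: CanaryMetrics,
                      max_error_increase: float = 0.01,
                      max_latency_ratio: float = 1.2) -> list[str]:
    """Return the names of tripped rollback triggers (empty list means proceed)."""
    tripped = []
    # Trigger 1: absolute increase in error rate over the baseline version.
    if canary.error_rate - baseline.error_rate > max_error_increase:
        tripped.append("error_rate")
    # Trigger 2: relative slowdown in tail latency.
    if canary.p95_latency_ms > baseline.p95_latency_ms * max_latency_ratio:
        tripped.append("p95_latency")
    return tripped


baseline = CanaryMetrics(error_rate=0.010, p95_latency_ms=200.0)
healthy = CanaryMetrics(error_rate=0.012, p95_latency_ms=210.0)
degraded = CanaryMetrics(error_rate=0.040, p95_latency_ms=300.0)

print(rollback_triggers(baseline, healthy))
print(rollback_triggers(baseline, degraded))
```

Returning the list of tripped triggers, rather than a bare boolean, gives the post-mortem a record of why a rollback happened.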

2. Incident Response Runbook for AI Systems

When enterprise AI fails, the damage extends beyond wasted budgets, with Gartner predicting over 40% of agentic AI projects will be canceled by 2027 due to escalating costs, unclear business value, or inadequate risk controls.

Essential components:

- Detection: Automated alerts for model drift, performance degradation

- Triage: Severity classification and escalation paths

- Mitigation: Immediate actions to protect users

- Root cause analysis: Post-mortem procedures

- Communication: Stakeholder notification templates
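The detection and triage steps can be sketched for a single numeric feature using the Population Stability Index (PSI), one common drift measure among several. This is an illustrative sketch: the function names are hypothetical, and the 0.1 and 0.25 cut-offs are a widely used rule of thumb rather than values taken from this guide.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a reference sample and a live sample of one numeric feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the reference is constant

    def bucket_shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor avoids log(0) when a bucket is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = bucket_shares(expected), bucket_shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def classify_drift(psi, warn=0.1, page=0.25):
    """Map a PSI value to a triage level (thresholds are assumptions)."""
    if psi >= page:
        return "page"
    if psi >= warn:
        return "warn"
    return "ok"


reference = [i / 100 for i in range(100)]        # training-time distribution
shifted = [0.5 + i / 200 for i in range(100)]    # live traffic skewed upward

print(classify_drift(population_stability_index(reference, reference)))
print(classify_drift(population_stability_index(reference, shifted)))
```

In practice the triage level would feed the escalation paths described above, for example a ticket for "warn" and an on-call page for "page".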

3. Model Retraining and Update Runbook

Automating the ML pipeline enables continuous training of the model, which in turn achieves continuous delivery of the model prediction service. This is particularly helpful for solutions that operate in constantly changing environments.
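An automated pipeline still needs an explicit rule for when to retrain. A minimal sketch follows, assuming three hypothetical triggers (model age, a drift score, and the volume of newly labeled data); the default thresholds are placeholders, not recommendations.

```python
from datetime import date, timedelta


def retraining_reasons(last_trained: date, today: date, drift_score: float,
                       new_labeled_rows: int,
                       max_age: timedelta = timedelta(days=30),
                       drift_threshold: float = 0.25,
                       min_new_rows: int = 10_000) -> list[str]:
    """Return the reasons a retraining run should start (empty list means none)."""
    reasons = []
    if today - last_trained >= max_age:      # calendar-based trigger
        reasons.append("schedule")
    if drift_score >= drift_threshold:       # monitoring-based trigger
        reasons.append("drift")
    if new_labeled_rows >= min_new_rows:     # data-change trigger
        reasons.append("new_data")
    return reasons


print(retraining_reasons(date(2025, 1, 1), date(2025, 1, 10), 0.05, 500))
print(retraining_reasons(date(2025, 1, 1), date(2025, 2, 15), 0.30, 500))
```

Keeping the decision in one pure function makes the runbook testable: the same rule can run in a scheduler, a monitoring hook, or a unit test.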

4. Data Quality Assurance Runbook

Steps include:

- Automated data validation checks

- Data lineage tracking

- Bias detection protocols

- Data versioning procedures
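The first step, automated data validation, can be as simple as a function that runs before each training or scoring batch. The sketch below is illustrative only: the field names and value ranges are hypothetical, and a production setup would more likely use a dedicated validation library.

```python
def validate_rows(rows, required, ranges):
    """Return (row_index, problem) tuples; an empty list means the batch passes."""
    problems = []
    for i, row in enumerate(rows):
        # Completeness check: every required field must be present and non-null.
        for field in required:
            if row.get(field) is None:
                problems.append((i, f"missing {field}"))
        # Range check: numeric fields must fall inside their allowed interval.
        for field, (lo, hi) in ranges.items():
            value = row.get(field)
            if value is not None and not lo <= value <= hi:
                problems.append((i, f"{field} out of range"))
    return problems


batch = [
    {"age": 42, "score": 0.71},
    {"age": None, "score": 4.0},
]
issues = validate_rows(batch, required=["age", "score"],
                       ranges={"age": (0, 120), "score": (0.0, 1.0)})
print(issues)
```

A pipeline could refuse to proceed whenever the returned list is non-empty, which turns the runbook step into an enforced gate.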

Pillar 3: Career Ladders That Promote Growth

Traditional career ladders often break in AI-native organizations. The solution is creating parallel tracks:

Individual Contributor (IC) Track

- L3-L4 (Mid-level): Execute well-defined AI projects with supervision

- L5-L6 (Senior): Own end-to-end AI features, mentor juniors

- L7-L8 (Staff+): Define technical strategy, influence architecture across teams

- L9+ (Principal/Distinguished): Shape company-wide AI strategy, industry thought leadership

Management Track

- Engineering Manager: Lead one AI pod (3-5 people)

- Senior Engineering Manager: Oversee multiple pods, define standards

- Director: Own AI engineering for a product area

- VP Engineering: Set technical vision and culture

Specialized Tracks

- MLOps Engineer Track: Focus on production ML infrastructure

- AI Research Track: Advance state-of-the-art capabilities

- AI Product Track: Product management specialization for AI products

Staff+ Patterns for AI-Native Teams

Software engineers can boost their impact by helping other teams, focusing on business-driven work, and building strong relationships, with growth coming from mentoring, setting cultural norms, thinking strategically, and designing a career path based on what motivates them.

The Four Archetypes in AI Context:

- Tech Lead: Guides execution of AI projects, makes architectural decisions for the team

- Architect: Defines technical standards and patterns across AI systems

- Solver: Tackles the hardest AI problems that others can’t solve

- Right Hand: Extends the reach of engineering leadership into AI initiatives

Critical Staff+ behaviors:

- Setting technical direction without formal authority

- Defining standards for AI development and deployment

- Mentoring multiple levels of engineers

- Building bridges between technical and business stakeholders

4. Pair Operations: MLE × SRE and Platform Enablement

The MLE × SRE Pairing Pattern

One of the most powerful patterns in AI-native teams is the collaboration between Machine Learning Engineers and Site Reliability Engineers:

Why This Pairing Matters

MLOps combines data engineering, machine learning, and DevOps into a single discipline, encompassing the skills, frameworks, technologies, and best practices that equip teams to industrialize ML models and evolve processes over time.

Traditional handoffs between data science and operations create friction. The MLE × SRE pair eliminates this by:

Joint Ownership Model:

- MLE focus: Model quality, training pipelines, feature engineering

- SRE focus: Reliability, monitoring, infrastructure scalability

- Shared responsibility: Production performance, incident response, capacity planning

Operational Excellence

The objective of an MLOps team is to automate the deployment of ML models, either into the core software system or as a service component, so that the complete ML workflow runs without manual intervention. Triggers for the workflow include calendar events, messaging, monitoring events, and changes to data, model training code, or application code.

Key practices:

- Pair programming sessions on deployment automation

- Shared on-call rotation for production ML systems

- Joint architecture reviews before new model deployments

- Co-creation of runbooks combining ML and ops perspectives

Platform PM: Enabling Velocity at Scale

The Platform Product Manager role is critical but often overlooked:

Core Responsibilities

When working on AI products, numerous new teams come into play, including data scientists, data engineers, machine learning scientists, machine learning engineers, applied scientists, and business intelligence professionals.

The Platform PM:

- Defines developer experience for internal AI consumers

- Builds abstractions that hide complexity while maintaining power

- Measures platform adoption and developer productivity

- Prioritizes platform investments based on unblocking value

Platform PM Success Metrics

Unlike product PMs, Platform PMs measure success differently:

- Adoption rate: % of teams using platform services

- Time to first value: How quickly can a team ship with the platform?

- Developer satisfaction (NPS or similar)

- Reduction in toil: Hours saved through automation

- Incident reduction: Fewer production issues due to platform reliability
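Two of these metrics, adoption rate and time to first value, reduce to simple arithmetic once the underlying events are recorded. The sketch below assumes a hypothetical data model (team names, an onboarding date, and a first-ship date per team); it is one possible definition, not a standard one.

```python
from datetime import date
from statistics import median


def adoption_rate(teams_using_platform: set[str], all_teams: set[str]) -> float:
    """Share of teams that use at least one platform service."""
    return len(teams_using_platform & all_teams) / len(all_teams)


def median_time_to_first_value(onboarded: dict[str, date],
                               first_ship: dict[str, date]) -> float:
    """Median days from onboarding to first shipped feature, over teams that shipped."""
    days = [(first_ship[t] - onboarded[t]).days for t in onboarded if t in first_ship]
    return median(days)


all_teams = {"search", "billing", "growth", "support"}
using = {"search", "billing", "growth"}
onboarded = {"search": date(2025, 1, 6), "billing": date(2025, 1, 13),
             "growth": date(2025, 2, 3)}
first_ship = {"search": date(2025, 1, 20), "billing": date(2025, 2, 3)}

print(adoption_rate(using, all_teams))
print(median_time_to_first_value(onboarded, first_ship))
```

Teams that have onboarded but not yet shipped are excluded from the median here; tracking them separately avoids hiding stalled adoptions.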

Enablement Metrics That Matter

Key performance indicators are the bedrock of both business and technology success, and when adopting generative AI, KPIs remain critical for evaluating success, helping to objectively assess model performance, align initiatives with business goals, enable data-driven adjustments, and demonstrate overall project value.

Model Quality Metrics

- Accuracy, precision, recall, F1 score

- AUC-ROC for classification tasks

- Perplexity or BLEU scores for language models

- Human evaluation scores for generative outputs
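For binary classification, precision, recall, and F1 follow directly from counts of true positives, false positives, and false negatives. A minimal dependency-free sketch, with made-up labels for illustration:

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Compute precision, recall, and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]   # one missed positive, one false alarm

print(precision_recall_f1(y_true, y_pred))  # (0.75, 0.75, 0.75)
```

Accuracy alone can look healthy on imbalanced data, which is why precision and recall are usually reported alongside it.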

System Performance Metrics

- Inference latency (p50, p95, p99)

- Throughput (requests per second)

- Resource utilization (GPU, memory, cost per inference)

- Availability and uptime
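Latency percentiles matter because a single slow request can disappear in an average. The sketch below uses the nearest-rank definition of a percentile, one of several definitions in use, on a small made-up sample.

```python
def percentile(samples, pct):
    """Nearest-rank percentile for an integer pct between 1 and 100."""
    ordered = sorted(samples)
    # Ceiling of pct% of the sample count, using integer arithmetic.
    rank = max(1, (pct * len(ordered) + 99) // 100)
    return ordered[rank - 1]


latencies_ms = [12, 15, 11, 240, 14, 13, 16, 18, 17, 19]

print(percentile(latencies_ms, 50))  # 15
print(percentile(latencies_ms, 95))  # 240
print(percentile(latencies_ms, 99))  # 240
```

Here the median looks healthy at 15 ms while p95 exposes the 240 ms outlier, which is the case tail percentiles are meant to surface.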

Business Operational Metrics

Business operational metrics help connect technical model quality with downstream financial impact, allowing organizations to understand whether AI initiatives are generating tangible value for the business.

Examples:

- Time saved (e.g., reduced documentation time by 50%)

- Cost reduction (e.g., lower customer service costs)

- Revenue impact (e.g., increased conversion rates)

- Quality improvements (e.g., reduced error rates)

Adoption Metrics

Unlike predictive AI technologies, gen AI success hinges heavily on changes in human behavior and acceptance—customer AI agents are only effective if customers actually engage with them, and AI-enabled employee productivity tools can only drive gains if employees adopt them.

Track:

- Daily/monthly active users

- Feature utilization rates

- User retention and stickiness

- Feedback sentiment scores

Human-AI Collaboration Metrics

Key indicators include the percentage of AI-generated code reviewed and improved by humans, number of AI outputs rejected due to ethical or contextual misalignment, and time taken for human validation loops after AI-generated outputs.

These metrics ensure AI remains a collaborator, not a blind automation that reduces human oversight.
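The three indicators above can be computed from a simple review log. This is a sketch under assumptions: the `AIOutputReview` record and its fields are hypothetical, and real data would come from a code review or labeling tool.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class AIOutputReview:
    """One AI-generated output and what happened to it (hypothetical schema)."""
    reviewed: bool             # did a human look at it?
    rejected: bool             # rejected for ethical or contextual misalignment
    validation_minutes: float  # time the human validation loop took


def collaboration_metrics(reviews):
    """Summarize human oversight over a batch of AI outputs."""
    reviewed = [r for r in reviews if r.reviewed]
    return {
        "review_rate": len(reviewed) / len(reviews),
        "rejection_count": sum(r.rejected for r in reviewed),
        "mean_validation_minutes": (
            mean(r.validation_minutes for r in reviewed) if reviewed else 0.0
        ),
    }


log = [
    AIOutputReview(reviewed=True, rejected=False, validation_minutes=10.0),
    AIOutputReview(reviewed=True, rejected=True, validation_minutes=20.0),
    AIOutputReview(reviewed=False, rejected=False, validation_minutes=0.0),
    AIOutputReview(reviewed=True, rejected=False, validation_minutes=30.0),
]
metrics = collaboration_metrics(log)
print(metrics)
```

A falling review rate is arguably the most important signal of the three, since it indicates oversight is eroding even when outputs look fine.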

5. When Models Fail: Preparation and Phased Approaches

Warning Signs of Potential Failure

Organizations should watch for these red flags:

Technical Indicators:

- Model drift exceeding acceptable thresholds

- Increasing inference latency or costs

- Rising rate of human overrides or corrections

- Data quality degradation

Organizational Indicators:

In 2019, MIT cited that 70% of AI efforts saw little to no impact after deployment, with some predicting as high as 85% of AI projects missing expectations, a figure much greater than the 25-50% regular IT project failure rate.

- Siloed teams working in isolation

- Lack of executive sponsorship or unclear ownership

- Insufficient investment in data infrastructure

- Missing governance frameworks

Human Factors:

Employees often struggle to trust AI due to concerns about reliability, transparency, and fairness, with many wary because technologies can sometimes produce unpredictable or biased outcomes, leading to a lack of confidence in decision-making processes.

Phased Enterprise Approach

Phase 1: Foundation (Months 1-6)

Goals:

- Establish data infrastructure and governance

- Build initial AI expertise through pilots

- Define success criteria and measurement frameworks

- Create organizational alignment

Actions:

- Hire or upskill for core AI roles (Data Engineer, MLE, Data Scientist)

- Implement data quality processes

- Run 2-3 low-stakes proof-of-concepts

- Establish AI ethics and governance board

Phase 2: Expansion (Months 7-18)

Organizations using blended teams—combining specialized freelance talent with full-time employees—are twice as likely to reach advanced stages of AI innovation, with 40% successfully deploying AI to production or achieving scaled usage.

Goals:

- Scale successful pilots to production

- Build reusable platform capabilities

- Grow team and establish career ladders

- Implement MLOps practices

Actions:

- Deploy first production AI features

- Create internal AI platform and tooling

- Establish MLE × SRE pairing for critical systems

- Document runbooks and best practices

- Launch formal AI training programs

Phase 3: Optimization (Months 19-36)

Goals:

- Achieve AI-native operating model

- Optimize costs and performance

- Expand to advanced use cases

- Build competitive moats through AI

Actions:

- Implement advanced MLOps automation

- Establish centers of excellence

- Build proprietary AI capabilities

- Measure and optimize enablement metrics

- Share learnings externally (thought leadership)

The Staff+ Role in Transformation

To increase impact, staff engineers should choose problems tied to business outcomes and communicate progress while the work is being built, showing how it affects customers and drives customer growth.

Staff+ engineers play critical roles:

- Champions of best practices: Setting examples others follow

- Technical due diligence: Evaluating build vs. buy decisions

- Architecture governance: Ensuring scalability and maintainability

- Cultural leadership: Modeling collaborative behaviors

6. Production Team Example: Healthcare AI Use Case

Case Study: AI-Native Clinical Decision Support System

Let’s examine how an AI-native team would structure itself to build a production clinical decision support system for a large healthcare provider.

Business Context

The Challenge: Healthcare’s AI moment is here. Providers are seeing products that deliver ROI and watching peers adopt at scale, and buying cycles have compressed from 12-18 months to under six months.

A health system with 40 hospitals and 600 clinics needs to:

- Reduce physician documentation time by 50%

- Improve diagnostic accuracy for rare conditions

- Provide real-time clinical recommendations during patient visits

- Ensure HIPAA compliance and patient safety

Team Structure

Core Product Pod (5 people):

- AI Product Manager (1)

  - Defines clinical workflows and success metrics

  - Manages physician stakeholder relationships

  - Prioritizes features based on clinical impact

  - Ensures regulatory compliance alignment

- Senior ML Engineers (2)

  - Build and train diagnostic models

  - Implement RAG systems for clinical literature

  - Own model performance and retraining pipelines

  - Pair with SREs on production deployment

- AI Data Engineer (1)

  - Manages HIPAA-compliant data pipelines

  - Handles EHR integration and data extraction

  - Ensures data quality and completeness

  - Implements data versioning and lineage tracking

- Clinical Domain Expert (1)

  - Validates clinical accuracy of recommendations

  - Provides medical expertise for edge cases

  - Helps design clinical evaluation protocols

  - Liaises with physician users

Supporting Roles (Matrixed):

- SRE (0.5 FTE): Production reliability, monitoring, infrastructure

- Platform Engineer (0.25 FTE): Integration with internal tools

- AI Ethicist (0.25 FTE): Bias detection, fairness audits

- Clinical Informaticist (0.5 FTE): EHR workflows and optimization

Technical Architecture

Components:

- Real-time Clinical Note Processing

  - Ambient listening during patient visits

  - NLP-based information extraction

  - Structured data generation for EHR

- Diagnostic Support System

  At Massachusetts General Hospital and MIT, AI algorithms detected lung nodules with 94% accuracy compared to 65% for radiologists, and showed 90% sensitivity in breast cancer detection, surpassing the 78% sensitivity of human experts.

  Features:

  - Symptoms-to-diagnosis mapping

  - Rare condition flagging

  - Evidence-based treatment suggestions

  - Drug interaction checking

- Clinical Literature RAG System

  - Real-time search across medical journals

  - Evidence grading and reliability scoring

  - Automatic updates as new research publishes

Implementation Phases

Phase 1: Safety Validation (Months 1-3)

This initial phase assesses the foundational safety of the AI model or tool, where models are deployed in a controlled, non-production setting where they do not influence clinical decisions, with testing done retrospectively or in “silent mode” where predictions are observed without impacting patient care.

Activities:

- Retrospective evaluation on historical patient records

- Shadow mode deployment with manual verification

- Clinical accuracy validation by physician review board

- Ethics review and bias testing

Success Criteria:

- 95%+ agreement with physician diagnoses on test set

- Zero false positives on critical conditions

- Approval from hospital ethics committee
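Success criteria like these work best as an explicit phase gate that either passes or lists what failed. The sketch below encodes the three criteria above; the function and parameter names are hypothetical.

```python
def phase1_gate(agreement_rate: float, critical_false_positives: int,
                ethics_approved: bool) -> list[str]:
    """Return unmet Phase 1 criteria; an empty list means the gate passes."""
    failures = []
    if agreement_rate < 0.95:
        failures.append("agreement with physician diagnoses below 95%")
    if critical_false_positives > 0:
        failures.append("false positives on critical conditions")
    if not ethics_approved:
        failures.append("ethics committee approval missing")
    return failures


print(phase1_gate(0.97, 0, True))
print(phase1_gate(0.93, 2, True))
```

Listing every unmet criterion, instead of stopping at the first, gives the review board the full picture in one pass.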

Phase 2: Pilot Deployment (Months 4-9)

Advocate Health evaluated over 225 AI solutions to select 40 use cases to go live with, including the largest deployment of Microsoft Dragon Copilot, imaging tools like Aidoc and Rad AI, and AI for call centers.

Rollout:

- Deploy to 3 volunteer clinics (~50 physicians)

- Provide extensive training and support

- Collect feedback through surveys and interviews

- Measure time savings and accuracy improvements

Key Metrics:

- Average documentation time reduction: Target 30+ minutes/day

- Physician satisfaction score: Target 4.0+/5.0

- System reliability: 99.9% uptime

- Zero patient safety incidents

Phase 3: Scale Production (Months 10-24)

Progressive rollout:

- Months 10-12: Expand to 10 clinics (200 physicians)

- Months 13-18: Deploy to 50 clinics (1,000 physicians)

- Months 19-24: Full deployment (40 hospitals, 600 clinics)

Ongoing Operations:

- Continuous model monitoring and drift detection

- Monthly retraining with new clinical data

- Quarterly clinical validation studies

- Regular bias audits across patient demographics

Measured Outcomes

After 18 months:

Efficiency Gains: These initiatives are projected to reduce documentation time by more than 50%, while automating prior authorizations, referrals, and coding workflows.

Clinical Impact:

- 23% improvement in rare condition detection

- 15% reduction in medication error flags

- 8% improvement in treatment plan adherence

- 92% physician adoption rate

Business Results:

- $47M annual savings from reduced documentation time

- $18M savings from reduced medical errors

- 25% faster patient throughput in clinics

- ROI achieved in 14 months

Key Success Factors

- Clinical co-design: Physicians involved from day one

- Staged validation: Rigorous safety testing before each expansion

- Clear ownership: Product Manager owned end-to-end success

- MLE × SRE pairing: Ensured production reliability

- Continuous monitoring: Real-time alerting on model performance

- Governance framework: Ethics reviews at each phase gate

7. The Future: Evolution of AI-Native Teams (2025-2030)

Emerging Trends

1. Autonomous AI Agents

The agentic economy demands a strategic pivot from simply managing technology to architecting a high-performing ecosystem of human and AI agents, with optimal human-to-agent ratios being scenario-based and dynamic, consistently trending toward a greater number of agents per human.

By 2027-2028:

- AI agents will handle 40-60% of routine engineering tasks

- Engineers will focus primarily on orchestration and validation

- “Prompt engineering” will evolve into “agent choreography”

- New roles emerge: Agent Behavior Designer, AI Integration Architect

2. Hyper-Specialized AI Roles

The current “AI Engineer” role will fragment into specializations:

- LLM Engineers: Focus on prompt engineering and fine-tuning

- RAG Architects: Design retrieval-augmented generation systems

- AI Security Engineers: Protect against adversarial attacks and jailbreaks

- Model Efficiency Engineers: Optimize inference costs and latency

3. Evolution of Management

IT professionals are moving beyond reactive tasks like troubleshooting and ticket management, which are increasingly automated by AI agents, with their new focus on more strategic work such as designing and managing the hybrid human-machine systems that run the business.

Future engineering managers will:

- Manage hybrid human-AI teams

- Optimize human-AI task allocation

- Monitor AI agent performance alongside human performance

- Navigate ethical dilemmas in AI decision-making

4. Platform Consolidation

The current explosion of AI tools will consolidate:

- Unified AI development platforms (like Amazon Bedrock, Azure AI)

- Standardized MLOps practices across industries

- Open-source AI infrastructure becoming table stakes

- Commoditization of foundation models

5. Regulatory Frameworks

Healthcare organizations are working to establish paths from proof-of-concept projects to in-production use cases, with 26.1% of healthcare respondents having proof-of-concept projects for GenAI-enabled clinical decision support systems and 40.6% reporting this use case is in production.

By 2026-2027:

- Mandatory AI audits for high-stakes applications

- Industry-specific AI compliance certifications

- Standardized bias testing requirements

- Liability frameworks for AI decisions

Preparing for the Future

For Organizations:

- Invest in foundational capabilities now

  - Build data infrastructure that scales

  - Establish governance frameworks early

  - Create career ladders for AI roles

- Embrace continuous learning

  - Budget 10-15% of engineering time for upskilling

  - Bring in external experts for knowledge transfer

  - Encourage experimentation with new AI tools

- Plan for organizational change

  - Redesign processes around AI augmentation

  - Update job descriptions and expectations

  - Revise compensation models for AI-augmented roles

For Individual Contributors:

- Develop T-shaped skills

  - Deep expertise in one area (e.g., backend engineering, ML)

  - Broad understanding of AI capabilities and limitations

  - Strong collaboration and communication skills

- Build AI fluency

  - Learn prompt engineering and AI tool usage

  - Understand when to use AI vs. traditional approaches

  - Develop critical thinking about AI outputs

- Focus on uniquely human skills

  - Creative problem-solving

  - Ethical reasoning and judgment

  - Empathy and stakeholder management

  - Strategic thinking and vision-setting

8. Conclusion

The transition to AI-native teams represents one of the most significant transformations in software engineering history. Success requires more than adopting new tools—it demands a fundamental rethinking of team structures, career paths, and operational practices.

Key Takeaways

1. Structure Matters: Organizations using blended teams combining specialized freelance talent with full-time employees are twice as likely to reach advanced stages of AI innovation.

2. Reduce Heroics Through Systems: The most successful AI-native teams rely on documented runbooks, clear role definitions, and measurable ladders rather than individual heroics.

3. Embrace Specialized Patterns: The MLE × SRE pairing and Platform PM enablement models accelerate time-to-production while maintaining reliability.

4. Measure What Matters: Organizations using AI-enabled KPIs are five times more likely to effectively align incentive structures with objectives compared to those that rely on legacy KPIs.

5. Prepare for Failure: With 80-95% of AI projects failing, having clear phase gates, governance frameworks, and rollback procedures is essential.

6. Start Now: The gap between early adopters and laggards is widening rapidly. Organizations that delay building AI-native capabilities risk falling irreversibly behind.

The Path Forward

Building an AI-native team is a journey, not a destination. It requires patience, investment, and willingness to learn from failures. But the organizations that get it right—like OpenAI, Anthropic, Kaiser Permanente, and Mayo Clinic—are demonstrating that the rewards justify the effort.

Companies that recognize this shift and proactively build their teams around new AI-native roles and ratios will be the ones that redefine what’s possible, creating enduring value and a truly future-proof enterprise.

The question is no longer whether to become AI-native, but how quickly you can make the transformation while maintaining quality and safety. The operating models, roles, and practices outlined in this guide provide a roadmap—but ultimately, each organization must adapt these patterns to its unique context and culture.

The future belongs to teams that can seamlessly blend human creativity and judgment with AI’s computational power. Start building that future today.

References

- Hatchworks. (2025, July 17). The AI Development Team of the Future: Skills, Roles & Structure. https://hatchworks.com/blog/gendd/ai-development-team-of-the-future/

- Technext. (2025, April 9). A Simple Guide to Building an Ideal AI Team Structure in 2025. https://technext.it/ai-team-structure/

- Space-O Technologies. (2025). How to Build an AI Development Team in 2025. https://www.spaceo.ai/blog/build-an-ai-development-team/

- McKinsey & Company. (2025). The agentic organization: Contours of the next paradigm for the AI era. https://www.mckinsey.com/capabilities/people-and-organizational-performance/our-insights/the-agentic-organization-contours-of-the-next-paradigm-for-the-ai-era

- Medium – THE BRICK LEARNING. (2025, May 16). How We Structure AI Teams for Strategic Success. https://medium.com/towards-data-engineering/how-we-structure-ai-teams-for-strategic-success-and-how-you-can-join-one-105d681a4850

- edX. Key roles for an in-house AI team. https://www.edx.org/resources/roles-you-need-for-ai-team

- Medium – Philippe Dagher. (2025, September). Designing Products in the AI-Native Era: Key Lessons for Future-Forward Teams. https://medium.com/dataai/designing-products-in-the-ai-native-era-key-lessons-for-future-forward-teams-7e5a19e35c91

- CIO. (2025, October 21). The new org chart: Unlocking value with AI-native roles in the agentic era. https://www.cio.com/article/4060162/the-new-org-chart-unlocking-value-with-ai-native-roles-in-the-agentic-era.html

- 8allocate. (2025, July 7). How to Structure an AI-Enabled Product Team. https://8allocate.com/blog/how-to-structure-an-ai-enabled-product-team/

- Gartner. Staff AI Teams With Various Roles and Skills for Success. https://www.gartner.com/smarterwithgartner/how-to-staff-your-ai-team

- PYMNTS.com. (2025, September 24). Microsoft Turns to Anthropic in Shift From OpenAI Relationship. https://www.pymnts.com/artificial-intelligence-2/2025/microsoft-turns-to-anthropic-in-shift-from-openai-relationship

- Green Flag Digital. (2025). Ranking the 25 Top Venture-Backed AI Companies Growing Fast in 2025. https://greenflagdigital.com/research/ranking-top-ai-companies-2025/

- BusinessBecause. (2025, April 18). OpenAI, Anthropic & 10 Of The Top AI Companies To Work For. https://www.businessbecause.com/news/mba-jobs/9749/top-ai-companies-forbes

- Silk Data. Top AI companies of 2025: Comprehensive overview. https://silkdata.tech/blog/article/top-ai-companies

- SQ Magazine. (2025). OpenAI vs. Anthropic Statistics 2025: Growth Meets Safety. https://sqmagazine.co.uk/openai-vs-anthropic-statistics/

- Wikipedia. (2025). Anthropic. https://en.wikipedia.org/wiki/Anthropic

- CNBC. (2025, October 3). OpenAI, Anthropic push AI startups to move fast – can Europe keep up. https://www.cnbc.com/2025/10/03/openai-anthropic-push-ai-startups-to-move-fast-can-europe-keep-up.html

- Forge Global. (2025, July 29). Insights: 5 AI Companies To Expand Globally in 2025. https://forgeglobal.com/insights/startup-trends-5-ai-companies-look-to-expand-globally-in-2025/

- DesignRush. (2025). Top 20 AI Companies in 2025: Leaders, Innovators and the Battle for Market Dominance. https://www.designrush.com/agency/ai-companies/trends/top-ai-companies

- CyberScoop. (2025, September 15). Top AI companies have spent months working with US, UK governments on model safety. https://cyberscoop.com/openai-anthropic-ai-safety-government-research-us-uk/

- RAND Corporation. (2024, August 13). Why AI Projects Fail and How They Can Succeed. https://www.rand.org/pubs/research_reports/RRA2680-1.html

- WorkOS. (2025, July 22). Why Most Enterprise AI Projects Fail—and the Patterns That Actually Work. https://workos.com/blog/why-most-enterprise-ai-projects-fail-patterns-that-work

- Fortune. (2025, August 21). Why did MIT find 95% of AI projects fail? Hint: it wasn’t about the tech itself. https://fortune.com/2025/08/21/an-mit-report-that-95-of-ai-pilots-fail-spooked-investors-but-the-reason-why-those-pilots-failed-is-what-should-make-the-c-suite-anxious/

- Informatica. (2025, March 31). The Surprising Reason Most AI Projects Fail – And How to Avoid It at Your Enterprise. https://www.informatica.com/blogs/the-surprising-reason-most-ai-projects-fail-and-how-to-avoid-it-at-your-enterprise.html

- Timspark. (2025). Why AI Projects Fail (95% in 2025): Artificial Intelligence Project Failures Explained. https://timspark.com/blog/why-ai-projects-fail-artificial-intelligence-failures/

- LexisNexis. (2025, September 15). Why AI Projects Fail: 8 Common Obstacles. https://www.lexisnexis.com/community/insights/professional/b/industry-insights/posts/why-ai-projects-fail

- AIMultiple. AI Fail: 4 Root Causes & Real-life Examples. https://research.aimultiple.com/ai-fail/

- NTT DATA Group. Between 70-85% of GenAI deployment efforts are failing to meet their desired ROI. https://www.nttdata.com/global/en/insights/focus/2024/between-70-85p-of-genai-deployment-efforts-are-failing

- Gartner. (2024, July 29). Gartner Predicts 30% of Generative AI Projects Will Be Abandoned After Proof of Concept By End of 2025. https://www.gartner.com/en/newsroom/press-releases/2024-07-29-gartner-predicts-30-percent-of-generative-ai-projects-will-be-abandoned-after-proof-of-concept-by-end-of-2025

- OnGraph Technologies. (2024, November 19). 30% of Gen AI Projects will Fail by 2025. Here’s Why and How to avoid it. https://ongraphtech.medium.com/30-of-gen-ai-projects-will-fail-by-2025-heres-why-and-how-to-avoid-it-2413b3680a82

- InfoQ. (2025). How Software Engineers Can Grow into Staff Plus Roles. https://www.infoq.com/news/2025/10/software-engineer-staff-plus/