Executive Summary

Healthcare organizations are at a critical inflection point. Generative AI applications promise unprecedented capabilities in patient care, clinical decision support, and operational efficiency. However, these same technologies introduce complex security challenges that traditional perimeter-based security cannot address. For healthcare executives, implementing Zero Trust security architecture isn’t just a technical requirement—it’s a strategic imperative that directly impacts patient safety, regulatory compliance, and organizational resilience.

The Healthcare AI Revolution: Opportunities and Realities

Healthcare providers are rapidly embracing Generative AI applications to transform patient care and operational efficiency. The technology’s ability to process vast amounts of medical data, generate clinical insights, and automate complex workflows has created compelling value propositions across the healthcare ecosystem.

Current AI Implementation Landscape in Healthcare

Clinical Decision Support Systems are leveraging large language models to analyze patient records, medical literature, and diagnostic data to provide evidence-based treatment recommendations. Leading health systems like Mayo Clinic and Cleveland Clinic have deployed AI-powered clinical decision support tools that help physicians identify potential drug interactions, suggest treatment protocols, and flag critical patient conditions.

Medical Documentation and Administrative Automation represents another significant adoption area. Healthcare providers are implementing AI-powered solutions for clinical note generation, prior authorization processing, and billing optimization. These applications can reduce documentation time by 30-50% while improving accuracy and compliance.

Drug Discovery and Research Applications utilize generative AI to accelerate pharmaceutical research, predict molecular behavior, and optimize clinical trial design. Major pharmaceutical companies and research institutions are investing billions in AI-driven drug discovery platforms.

Patient Engagement and Virtual Health solutions employ conversational AI to provide 24/7 patient support, triage symptoms, and deliver personalized health education. These applications handle millions of patient interactions while maintaining HIPAA compliance requirements.

Success Stories: Transformative Healthcare AI Implementations

Mayo Clinic’s AI-Powered Diagnostic Platform

Mayo Clinic has successfully implemented AI algorithms for medical imaging analysis, particularly in radiology and pathology. Their AI systems analyze medical images to detect conditions like heart disease and cancer with accuracy rates exceeding 95%. The platform processes over 100,000 scans annually while maintaining strict data governance and security protocols.

Key Success Factors:

- Comprehensive data governance framework

- Multi-layered security architecture

- Continuous model validation and monitoring

- Strong physician-AI collaboration protocols

Kaiser Permanente’s Predictive Analytics

Kaiser Permanente deployed predictive AI models to identify patients at risk for sepsis, heart failure, and other critical conditions. Their Early Warning System analyzes real-time patient data to alert clinicians up to 6 hours earlier than traditional methods, resulting in an 18% reduction in sepsis mortality and $50 million in annual cost savings.

Success Metrics:

- 18% reduction in sepsis mortality

- 20% decrease in hospital readmissions

- $50 million annual cost savings

- 99.9% system uptime with zero security incidents

Cleveland Clinic’s AI Research Initiatives

Cleveland Clinic has established comprehensive AI research programs focusing on cardiovascular disease, neurological disorders, and cancer treatment. Their AI models analyze genomic data, medical imaging, and electronic health records to develop personalized treatment strategies.

Failure Cases: Lessons from Healthcare AI Security Breaches

Change Healthcare Cyberattack (2024)

The Change Healthcare ransomware attack, which occurred in February 2024, represents one of the most significant healthcare cybersecurity incidents in recent history. The attack disrupted healthcare operations across the United States, affecting pharmacy operations, insurance claims processing, and patient care delivery.

Financial Impact:

- $872 million in direct costs to UnitedHealth Group

- Estimated $100 billion in downstream economic impact

- Millions of patients affected by delayed prescriptions and treatments

- 6-month recovery timeline for full system restoration

Security Failures:

- Insufficient network segmentation

- Inadequate multi-factor authentication implementation

- Legacy system vulnerabilities

- Lack of comprehensive backup and recovery procedures

Zero Trust Security Gaps:

- Trusted internal network architecture enabled lateral movement

- Missing continuous authentication for critical systems

- Insufficient data classification and access controls

- Inadequate real-time threat monitoring

Anthem Data Breach Lessons

The 2015 Anthem data breach, although it predates current AI implementations, offers critical insights into healthcare security vulnerabilities. The breach exposed the personal information of 78.8 million individuals and resulted in $115 million in settlements.

Key Security Failures:

- Lack of data encryption for sensitive information

- Insufficient network monitoring and threat detection

- Weak access controls and privilege management

- Missing data loss prevention capabilities

The Expanding AI Attack Surface in Healthcare

Traditional Healthcare Security Challenges

Healthcare organizations have historically struggled with cybersecurity due to several inherent challenges:

Legacy System Integration: Healthcare environments typically include decades-old medical devices, electronic health record systems, and clinical applications that were never designed with modern security principles in mind.

Regulatory Complexity: HIPAA, HITECH, FDA regulations, and state privacy laws create complex compliance requirements that must be balanced with operational efficiency.

Operational Criticality: Healthcare systems cannot afford downtime, making security updates and patches challenging to implement without disrupting patient care.

Diverse User Base: Healthcare organizations must secure access for physicians, nurses, administrative staff, contractors, vendors, and patients across multiple locations and devices.

AI-Specific Security Vulnerabilities

Generative AI applications introduce entirely new categories of security risks that traditional healthcare security frameworks cannot adequately address:

Model Poisoning Attacks: Adversaries can manipulate training data to corrupt AI models, potentially causing misdiagnosis or inappropriate treatment recommendations. In healthcare, such attacks could have life-threatening consequences.

Prompt Injection Vulnerabilities: Malicious users can craft inputs designed to manipulate AI systems into revealing sensitive patient information or generating harmful recommendations. Healthcare AI systems processing natural language queries are particularly vulnerable.

Data Exfiltration Through AI Models: Large language models can inadvertently memorize and reproduce sensitive patient information from their training data, creating new pathways for data breaches.

Adversarial Attacks on Medical AI: Subtle modifications to medical images or patient data can fool AI diagnostic systems, potentially leading to misdiagnosis or delayed treatment.

Supply Chain Vulnerabilities: Healthcare AI applications often rely on third-party models, cloud services, and data processors, creating extensive supply chain risks.

Quantifying the Expanded Attack Surface

Healthcare AI implementations significantly expand the organizational attack surface:

API Endpoints: Each AI application typically exposes 10-50 API endpoints for data ingestion, model inference, and result delivery.

Data Pipelines: AI systems require continuous data flows from electronic health records, medical devices, laboratory systems, and external data sources.

Cloud Infrastructure: Most healthcare AI applications utilize cloud services, creating new perimeter boundaries and shared responsibility models.

Third-Party Integrations: AI vendors, cloud providers, and technology partners create extensive interconnections that traditional security tools cannot adequately monitor.

Human-AI Interfaces: Clinicians, researchers, and administrators interact with AI systems through various interfaces, each requiring appropriate access controls and monitoring.

Current Zero Trust Implementation Approaches in Healthcare

Leading Healthcare Organizations’ Zero Trust Strategies

Comprehensive Identity and Access Management

Progressive healthcare organizations are implementing sophisticated identity and access management (IAM) systems that extend beyond traditional user authentication to include device identity, application identity, and data classification.

Mayo Clinic has deployed a comprehensive IAM platform that provides single sign-on access to over 200 clinical applications while maintaining granular access controls based on user roles, patient relationships, and data sensitivity levels. Their system processes over 1 million authentication requests daily with sub-second response times.

Microsegmentation for Clinical Networks

Leading health systems are implementing network microsegmentation to isolate critical clinical systems and limit lateral movement in case of security breaches. This approach creates granular network zones for different types of healthcare applications and data.

Cleveland Clinic has implemented microsegmentation across their clinical networks, creating separate zones for electronic health records, medical imaging systems, laboratory information systems, and AI applications. Each zone has specific access policies and monitoring requirements.

Data-Centric Security Approaches

Healthcare organizations are moving beyond perimeter security to implement data-centric security that follows information throughout its lifecycle. This includes data classification, encryption, access controls, and usage monitoring.

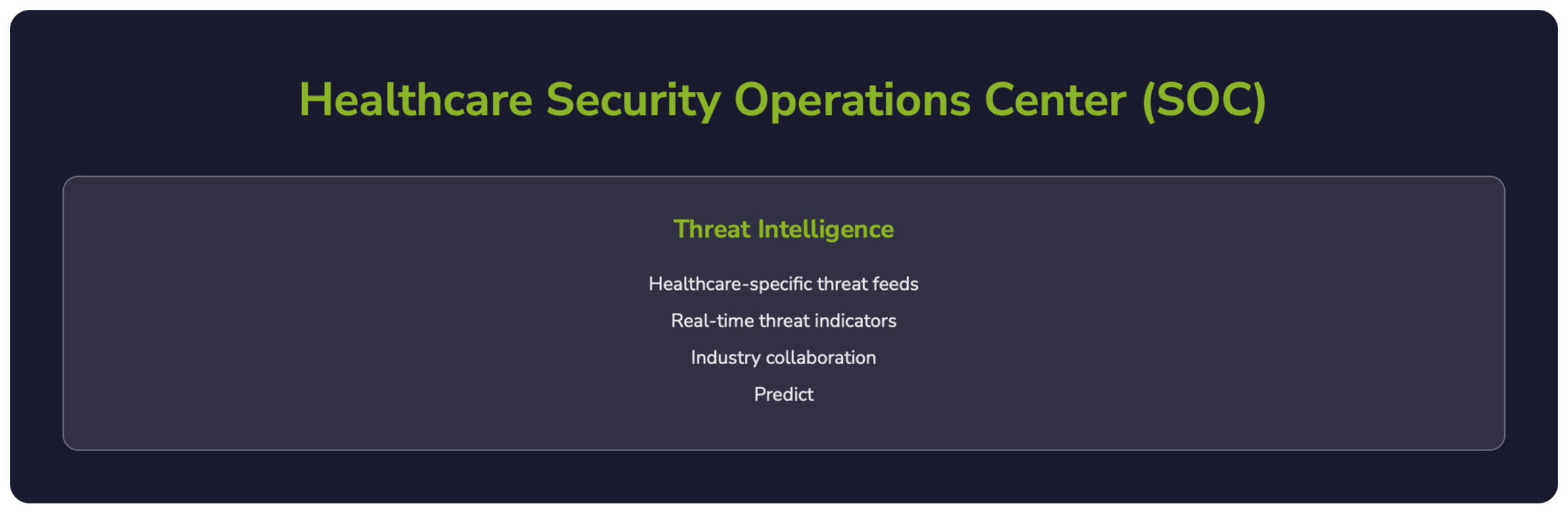

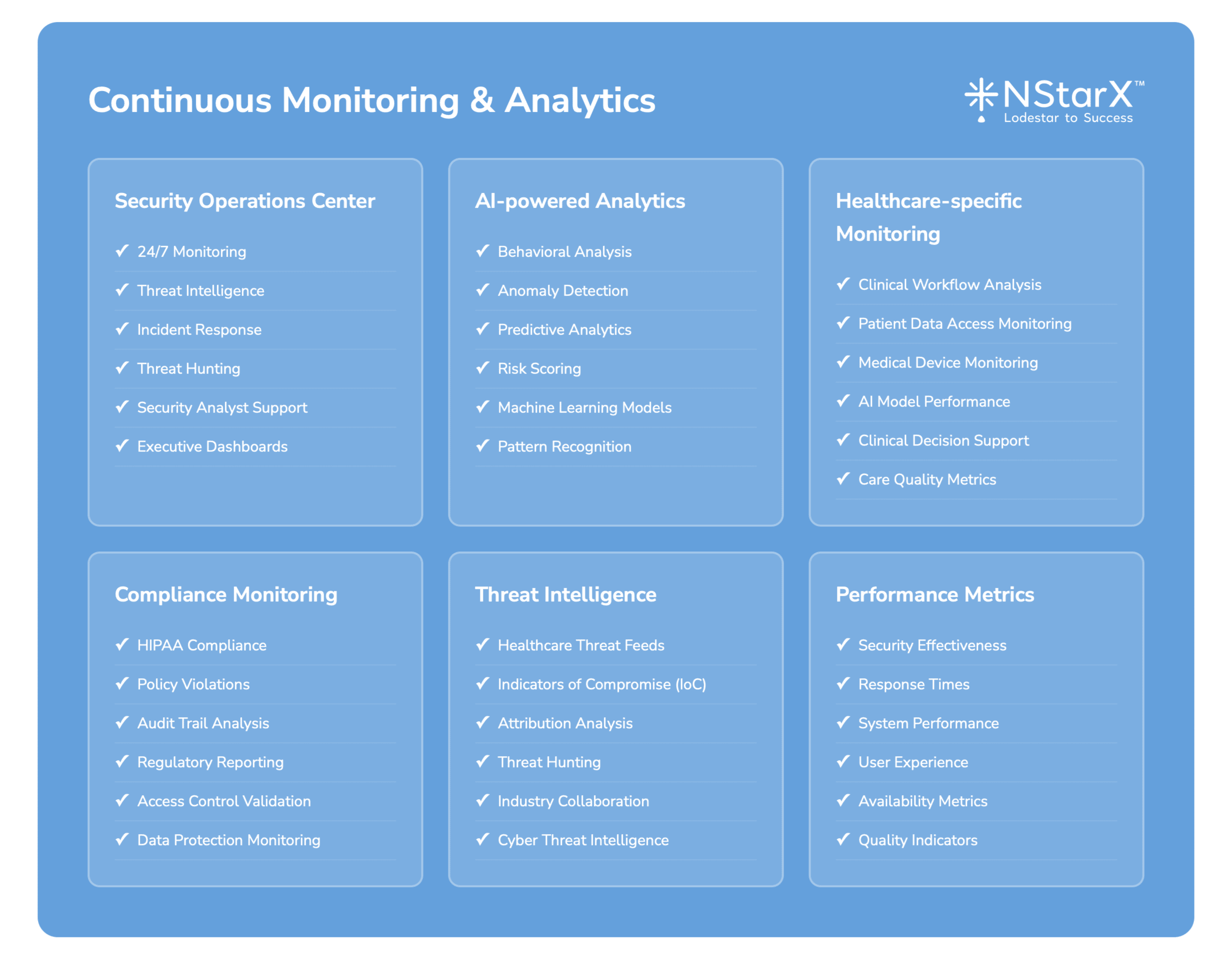

Continuous Monitoring and Threat Detection

Advanced healthcare organizations have implemented Security Operations Centers (SOCs) with specialized healthcare threat intelligence and AI-powered anomaly detection capabilities.

Regulatory Compliance and Zero Trust

Healthcare Zero Trust implementations must address specific regulatory requirements:

HIPAA Compliance: Zero Trust architectures must ensure that all access to protected health information (PHI) is properly authenticated, authorized, and audited.

FDA Regulations: AI-powered medical devices and clinical decision support systems must comply with FDA cybersecurity requirements, including pre-market and post-market security controls.

State Privacy Laws: Healthcare organizations must comply with varying state privacy regulations, including California’s CCPA and Virginia’s CDPA.

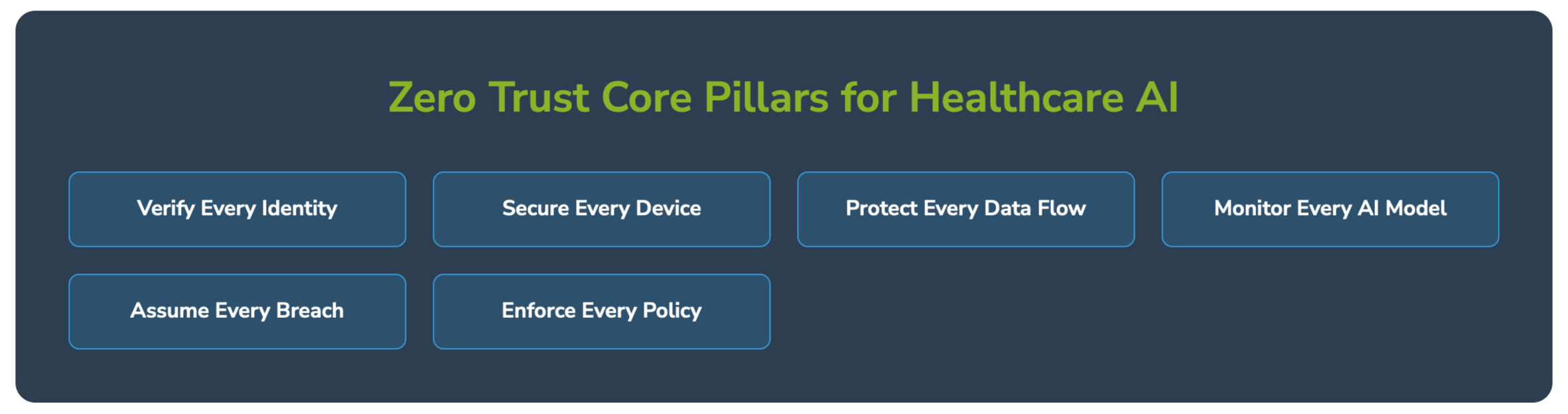

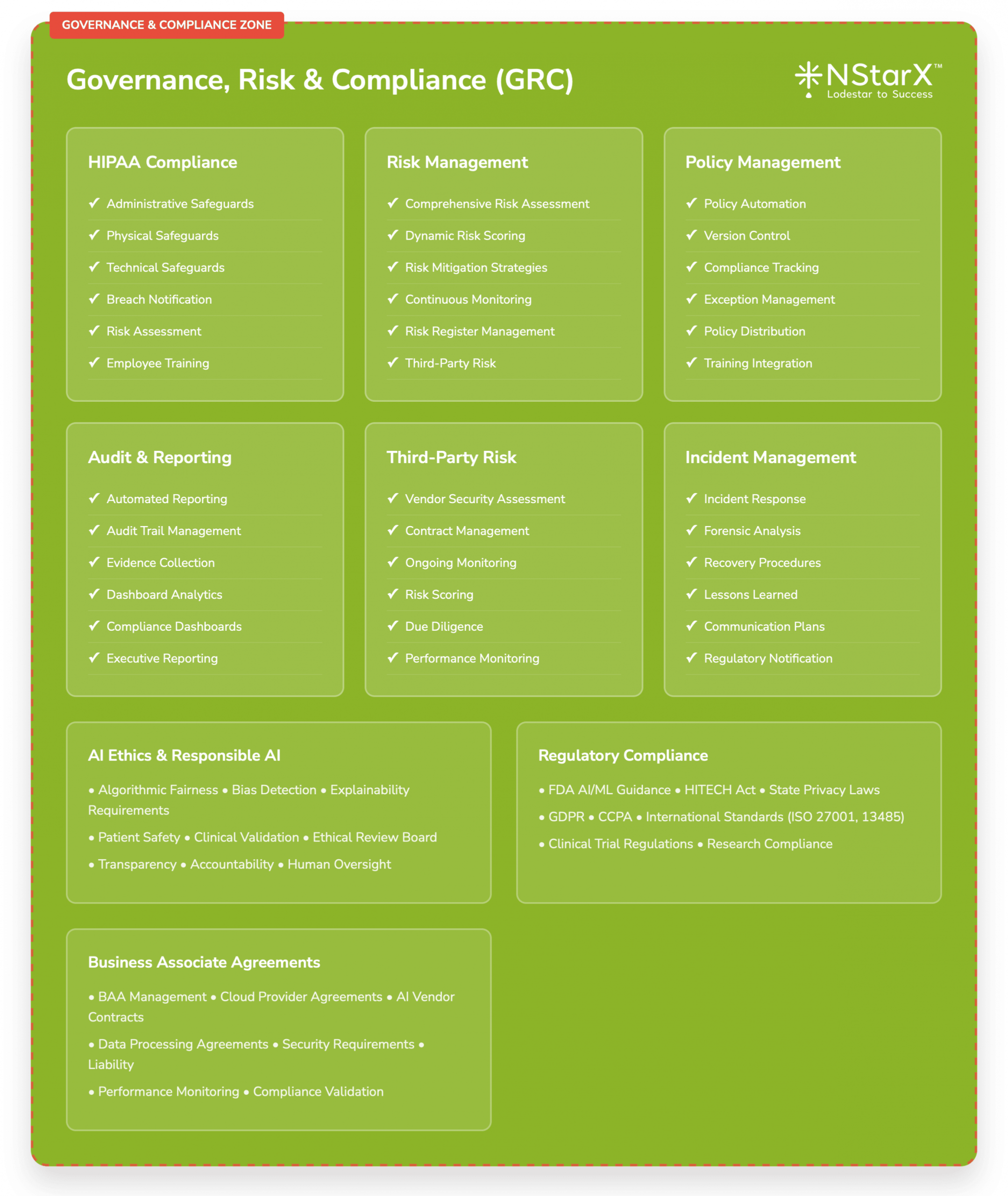

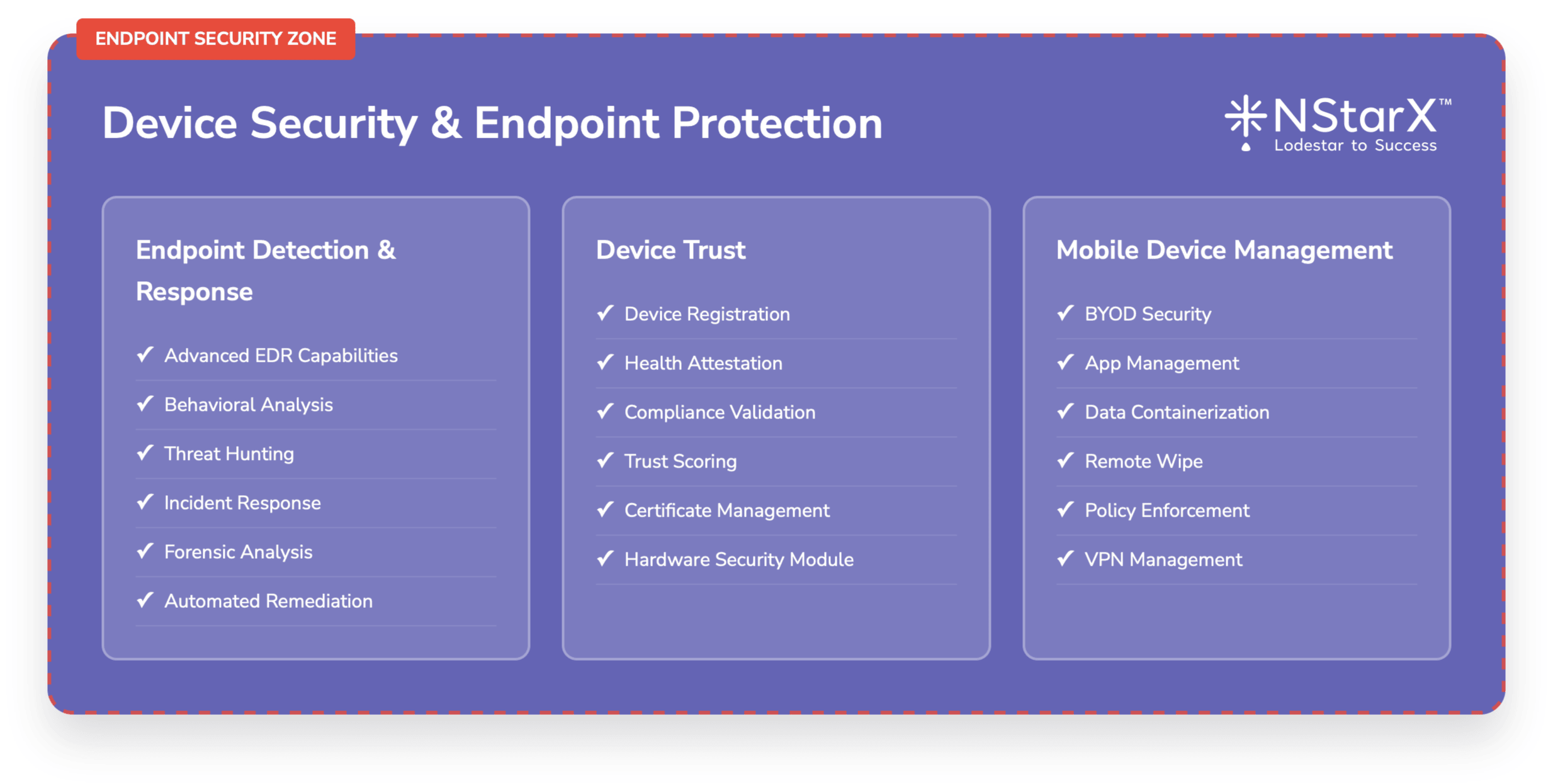

Figure 1 below, prepared by NStarX, shows the basic components of the Zero Trust implementation approach as practiced today.

GenAI Challenges: Navigating the Unknown

The Uncertainty Principle in Healthcare AI

Generative AI applications in healthcare operate in a fundamentally uncertain environment. Unlike traditional software systems with predictable inputs and outputs, GenAI systems can produce unexpected results that are difficult to validate or verify.

Clinical Decision Uncertainty: AI-generated treatment recommendations may be based on patterns that aren’t immediately apparent to human clinicians, creating challenges for validation and accountability.

Data Lineage Complexity: Large language models trained on vast datasets make it difficult to trace the source of specific recommendations or identify potential biases in training data.

Emergent Behaviors: GenAI systems can exhibit unexpected behaviors or capabilities that weren’t explicitly programmed, creating challenges for security testing and validation.

Unknown Attack Vectors

The novelty of GenAI technologies means that new attack vectors are constantly being discovered:

Zero-Day AI Vulnerabilities: Security researchers regularly discover new ways to manipulate AI systems, from prompt injection techniques to model extraction attacks.

Cross-Modal Attacks: Healthcare AI systems that process multiple data types (text, images, numerical data) may be vulnerable to attacks that exploit interactions between different modalities.

Temporal Attacks: AI models may be vulnerable to attacks that exploit changes in data patterns over time, particularly relevant in healthcare where patient conditions and treatment protocols evolve.

Addressing GenAI Unknowns Through Zero Trust

Zero Trust principles provide a framework for managing GenAI uncertainties:

Assume Breach Mentality: Assume that GenAI systems will be compromised and design security controls to minimize impact and enable rapid detection.

Continuous Verification: Implement continuous monitoring and validation of AI system outputs, not just initial deployment testing.

Principle of Least Privilege: Limit AI system access to only the minimum data and resources required for specific functions.

Defense in Depth: Implement multiple layers of security controls to address both known and unknown AI vulnerabilities.

Zero Trust Security Framework for Healthcare AI

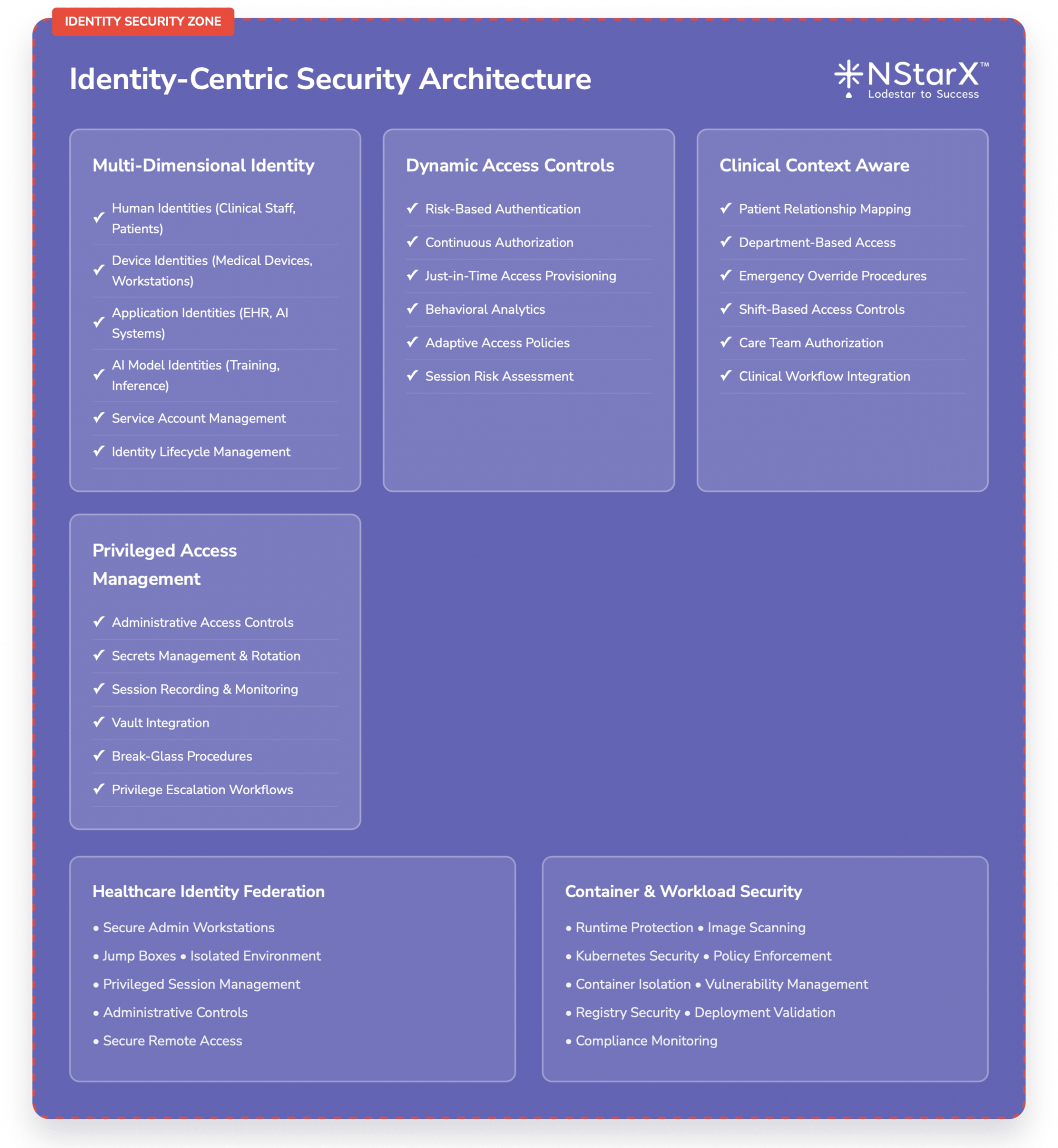

Identity-Centric Security Architecture

Healthcare AI implementations require sophisticated identity management that extends beyond human users to include AI models, applications, and data resources.

Multi-Dimensional Identity Management:

- Human identities (clinicians, administrators, researchers)

- Device identities (medical devices, workstations, mobile devices)

- Application identities (AI models, clinical systems, third-party services)

- Data identities (patient records, research datasets, AI training data)

Dynamic Access Controls:

- Risk-based authentication that considers user behavior, device trust, location, and data sensitivity

- Just-in-time access provisioning for temporary elevated privileges

- Continuous authorization that adapts to changing contexts and threat levels
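As a rough illustration of risk-based authentication, the sketch below scores a request from the factors listed above (user behavior, device trust, location, and data sensitivity) and maps the score to allow, step-up authentication, or deny. The weights, thresholds, and names such as `AccessContext` and `access_decision` are hypothetical and chosen only for illustration; a real policy engine would calibrate them against observed risk data.

```python
from dataclasses import dataclass

# Hypothetical weights per data sensitivity level (illustrative only).
SENSITIVITY_WEIGHT = {"public": 0, "internal": 10, "phi": 30}


@dataclass
class AccessContext:
    device_trusted: bool     # device passed posture checks
    known_location: bool     # request comes from an expected site or network
    unusual_behavior: bool   # behavior analytics flagged the session
    data_sensitivity: str    # "public", "internal", or "phi"


def risk_score(ctx: AccessContext) -> int:
    """Add up simple risk points; a higher score means a riskier request."""
    score = SENSITIVITY_WEIGHT[ctx.data_sensitivity]
    if not ctx.device_trusted:
        score += 30
    if not ctx.known_location:
        score += 20
    if ctx.unusual_behavior:
        score += 30
    return score


def access_decision(ctx: AccessContext) -> str:
    """Map the score to allow, step-up authentication, or deny."""
    score = risk_score(ctx)
    if score >= 70:
        return "deny"
    if score >= 40:
        return "step_up_mfa"
    return "allow"


# Example: a clinician on an unmanaged device requesting PHI.
print(access_decision(AccessContext(False, True, False, "phi")))
```

Re-running `access_decision` on every request, rather than once at login, is one simple way to approximate the continuous authorization described above.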

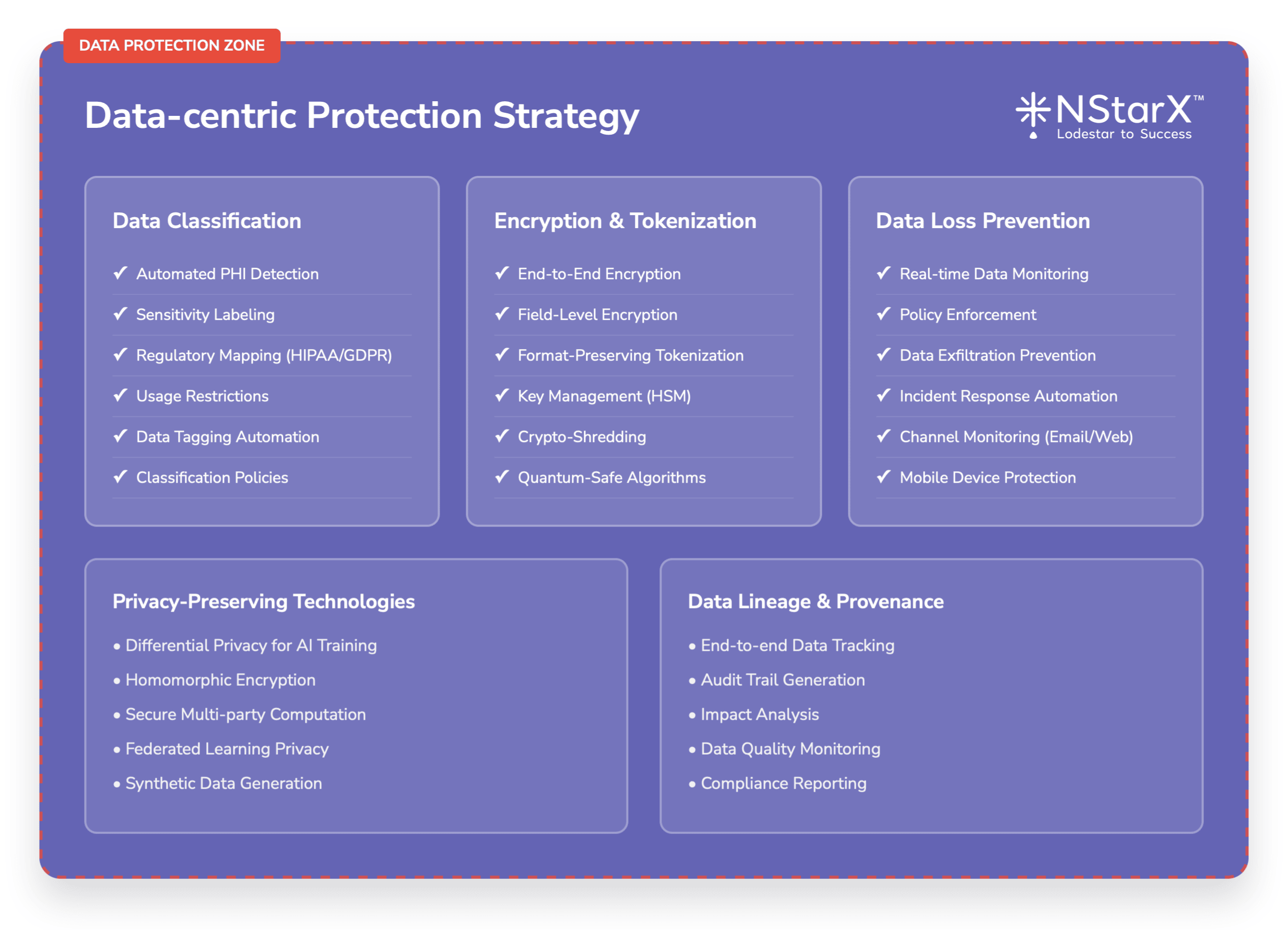

Data-Centric Protection Strategy

Healthcare AI applications process vast amounts of sensitive data that requires comprehensive protection throughout its lifecycle.

Data Classification and Labeling:

- Automated classification of patient data based on sensitivity levels

- Dynamic labeling that adapts to data usage and context

- Integration with AI model training and inference pipelines

Encryption and Tokenization:

- End-to-end encryption for data in transit and at rest

- Tokenization of sensitive identifiers in AI training datasets

- Homomorphic encryption for privacy-preserving AI computations
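To make the tokenization item concrete, here is a minimal sketch that replaces sensitive identifiers with keyed, deterministic tokens before records enter a training dataset. It uses HMAC-SHA256 from the Python standard library as one possible tokenization scheme; the field names, the `tok_` prefix, and the demo key are assumptions, and a production system would typically keep the key in a managed secrets store or use a vault-backed token service.

```python
import hashlib
import hmac


def tokenize_identifier(value: str, secret_key: bytes) -> str:
    """Return a deterministic keyed token for a sensitive identifier."""
    digest = hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256)
    return "tok_" + digest.hexdigest()[:32]


def tokenize_record(record: dict, sensitive_fields: set, secret_key: bytes) -> dict:
    """Copy a record, replacing the listed fields with tokens."""
    return {
        field: tokenize_identifier(str(value), secret_key)
        if field in sensitive_fields
        else value
        for field, value in record.items()
    }


# Hypothetical record and key, for illustration only.
demo_key = b"demo-key-not-for-production"
record = {"patient_id": "P-000123", "ssn": "123-45-6789", "diagnosis": "sepsis"}
print(tokenize_record(record, {"patient_id", "ssn"}, demo_key))
```

Because the same identifier always maps to the same token under a given key, records for one patient can still be joined during training without exposing the underlying identifier.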

Data Loss Prevention:

- Real-time monitoring of data flows to and from AI systems

- Automated detection of sensitive data in AI model outputs

- Policy enforcement for data sharing and external communications
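The automated detection of sensitive data in model outputs can be sketched as a simple pattern scan. The example below is a deliberately small illustration: the three regular expressions (a US Social Security number shape, a hypothetical `MRN` record-number format, and a phone number shape) are assumptions, and real data loss prevention tools rely on far richer detectors than regular expressions alone.

```python
import re

# Hypothetical patterns; real deployments would cover many more identifier types.
PHI_PATTERNS = {
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "mrn": re.compile(r"\bMRN[:\s]*\d{6,10}\b", re.IGNORECASE),
    "phone": re.compile(r"\b\d{3}[-.]\d{3}[-.]\d{4}\b"),
}


def scan_output(text: str) -> list:
    """Return the sorted names of all patterns found in the text."""
    return sorted(name for name, pattern in PHI_PATTERNS.items() if pattern.search(text))


def redact_output(text: str) -> str:
    """Replace every match with a labeled placeholder."""
    for name, pattern in PHI_PATTERNS.items():
        text = pattern.sub(f"[REDACTED-{name.upper()}]", text)
    return text


reply = "Patient MRN: 12345678 has SSN 123-45-6789 on file."
print(scan_output(reply))
print(redact_output(reply))
```

A filter like this would sit between the model and the caller, so that a flagged response can be redacted, blocked, or routed for review according to policy.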

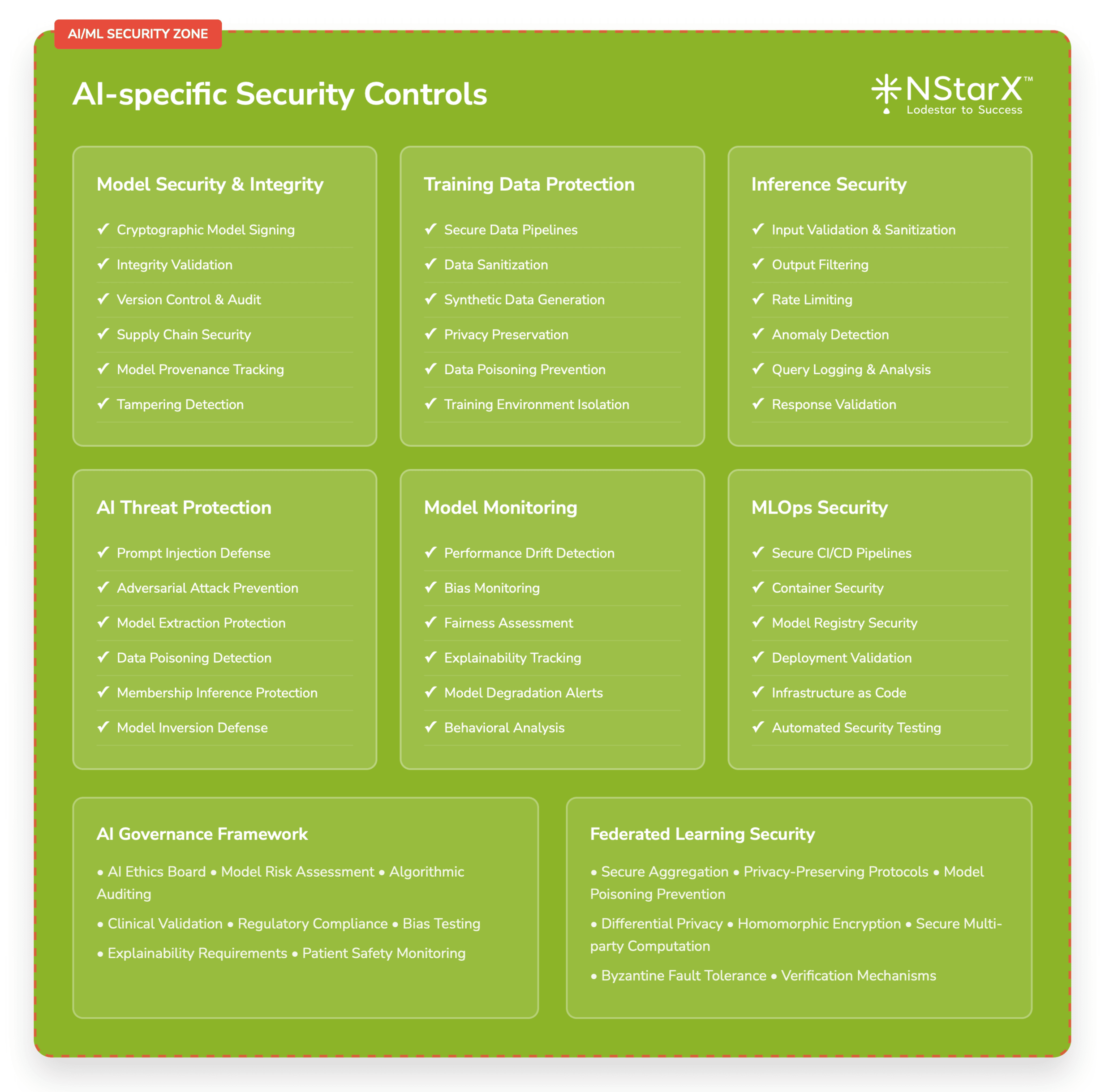

AI-Specific Security Controls

Zero Trust implementations for healthcare AI must include controls specifically designed for AI/ML workloads.

Model Security and Integrity:

- Cryptographic signing of AI models to ensure integrity

- Secure model deployment and versioning procedures

- Runtime monitoring of model behavior and performance
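One way to picture the integrity check behind model signing is shown below. Note the substitution: this sketch uses a keyed hash (HMAC-SHA256) from the Python standard library as a stand-in for a true digital signature, so that it stays self-contained. A production pipeline would more likely use asymmetric signatures, so that verifiers never hold the signing key. The function names and demo key are hypothetical.

```python
import hashlib
import hmac


def sign_model(model_bytes: bytes, key: bytes) -> str:
    """Compute an HMAC-SHA256 tag over the serialized model."""
    return hmac.new(key, model_bytes, hashlib.sha256).hexdigest()


def verify_model(model_bytes: bytes, key: bytes, expected_tag: str) -> bool:
    """Recompute the tag and compare in constant time before loading the model."""
    actual_tag = sign_model(model_bytes, key)
    return hmac.compare_digest(actual_tag, expected_tag)


# Illustrative usage with placeholder bytes standing in for a model file.
demo_key = b"demo-key-not-for-production"
model_bytes = b"serialized model weights"
tag = sign_model(model_bytes, demo_key)
print(verify_model(model_bytes, demo_key, tag))                # unchanged artifact
print(verify_model(model_bytes + b"tampered", demo_key, tag))  # modified artifact
```

The deployment step would refuse to load any artifact whose tag fails verification, which ties the integrity check to the versioning procedure listed above.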

Training Data Protection:

- Secure data pipelines for AI model training

- Differential privacy techniques to protect individual patient information

- Synthetic data generation for non-production environments
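As a small worked example of differential privacy, the sketch below applies the Laplace mechanism to a counting query: because adding or removing one patient changes a count by at most 1, noise drawn from a Laplace distribution with scale 1/ε gives ε-differential privacy for that single query. The function names and sample data are hypothetical, and the sketch ignores privacy budget accounting across repeated queries.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def private_count(values, predicate, epsilon: float, rng: random.Random) -> float:
    """Return a noisy count of the values that satisfy the predicate."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for value in values if predicate(value))
    # A counting query has sensitivity 1, so the noise scale is 1 / epsilon.
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Illustrative usage: how many patients in a cohort are 65 or older?
ages = [34, 71, 68, 45, 80, 59]
rng = random.Random()
print(private_count(ages, lambda age: age >= 65, epsilon=1.0, rng=rng))
```

Smaller values of `epsilon` add more noise and give stronger privacy, so the parameter becomes a policy decision that trades accuracy against protection.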

Inference Security:

- Input validation and sanitization for AI queries

- Output filtering and validation to prevent data leakage

- Rate limiting and anomaly detection for AI service usage
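Two of the inference controls above, input validation and rate limiting, can be sketched in a few lines. The example uses a token bucket limiter and a basic prompt sanitizer; the limits, class name, and function name are hypothetical. Length and character checks of this kind reduce malformed input but do not by themselves prevent prompt injection, which needs additional defenses.

```python
import time

MAX_PROMPT_CHARS = 2000  # hypothetical limit


def validate_prompt(prompt: str) -> str:
    """Drop non-printable characters, trim whitespace, and enforce length limits."""
    cleaned = "".join(ch for ch in prompt if ch.isprintable() or ch in "\n\t").strip()
    if not cleaned:
        raise ValueError("prompt is empty")
    if len(cleaned) > MAX_PROMPT_CHARS:
        raise ValueError("prompt is too long")
    return cleaned


class TokenBucket:
    """Allow short bursts up to `capacity`, refilled at a steady rate."""

    def __init__(self, capacity: int, refill_per_second: float, now: float = None):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.tokens = float(capacity)
        self.last = time.monotonic() if now is None else now

    def allow(self, now: float = None) -> bool:
        """Consume one token if available; `now` can be injected for testing."""
        now = time.monotonic() if now is None else now
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


# Illustrative usage: one bucket per caller identity.
bucket = TokenBucket(capacity=5, refill_per_second=1.0)
if bucket.allow():
    print(validate_prompt("Summarize the latest lab results."))
```

Keeping one bucket per user or service identity also produces a natural signal for anomaly detection, since repeated denials for a single identity stand out in the logs.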

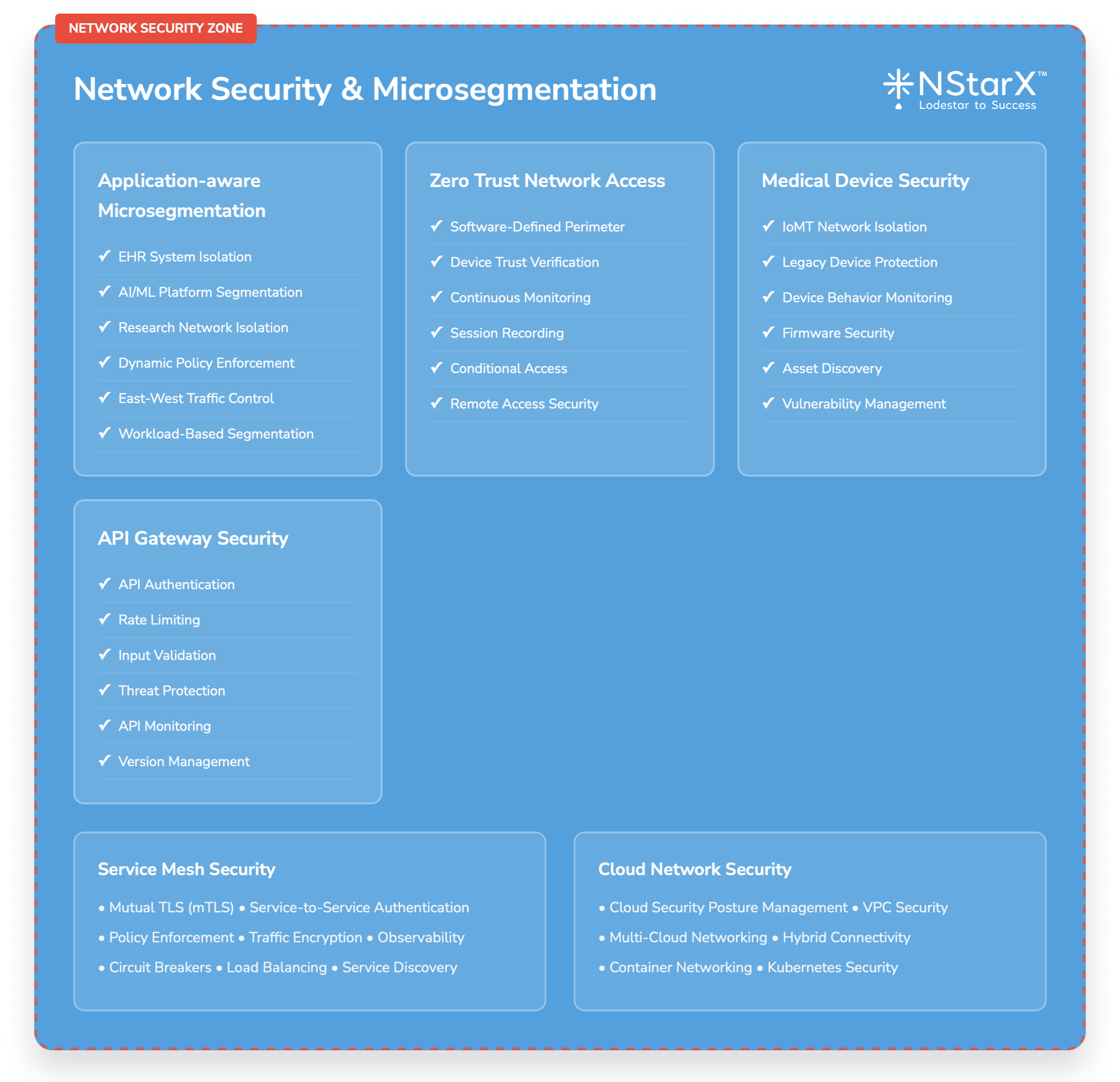

Network Security and Microsegmentation

Healthcare AI applications require sophisticated network security that can adapt to dynamic cloud and hybrid environments.

Application-Aware Microsegmentation:

- Dynamic network policies based on application behavior and data flows

- Automated policy enforcement for AI workload communications

- Integration with cloud-native security tools and services
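At its core, a microsegmentation policy is a default-deny list of permitted flows between zones. The sketch below models that idea with a small allow-list and a helper that flags observed traffic outside it. The zone names echo the zones mentioned earlier (electronic health records, imaging, laboratory systems, AI applications), but the specific flows and ports are hypothetical.

```python
# Hypothetical allow-list of (source zone, destination zone, port).
# Any flow that is not listed is denied by default.
ALLOWED_FLOWS = {
    ("ai_apps", "ehr", 443),
    ("ai_apps", "imaging", 443),
    ("ehr", "lab", 443),
}


def is_flow_allowed(src_zone: str, dst_zone: str, port: int) -> bool:
    """Return True only for flows that are explicitly permitted."""
    return (src_zone, dst_zone, port) in ALLOWED_FLOWS


def find_violations(observed_flows):
    """Return the observed flows that fall outside the policy."""
    return [flow for flow in observed_flows if not is_flow_allowed(*flow)]


observed = [
    ("ai_apps", "ehr", 443),
    ("imaging", "ehr", 22),   # lateral movement attempt between zones
]
print(find_violations(observed))
```

In practice the policy would be enforced by network or cloud-native controls rather than application code; the value of the model is that every permitted path is explicit and reviewable.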

Zero Trust Network Access (ZTNA):

- Software-defined perimeter for remote access to AI applications

- Device trust verification and continuous monitoring

- Session recording and analysis for audit and compliance

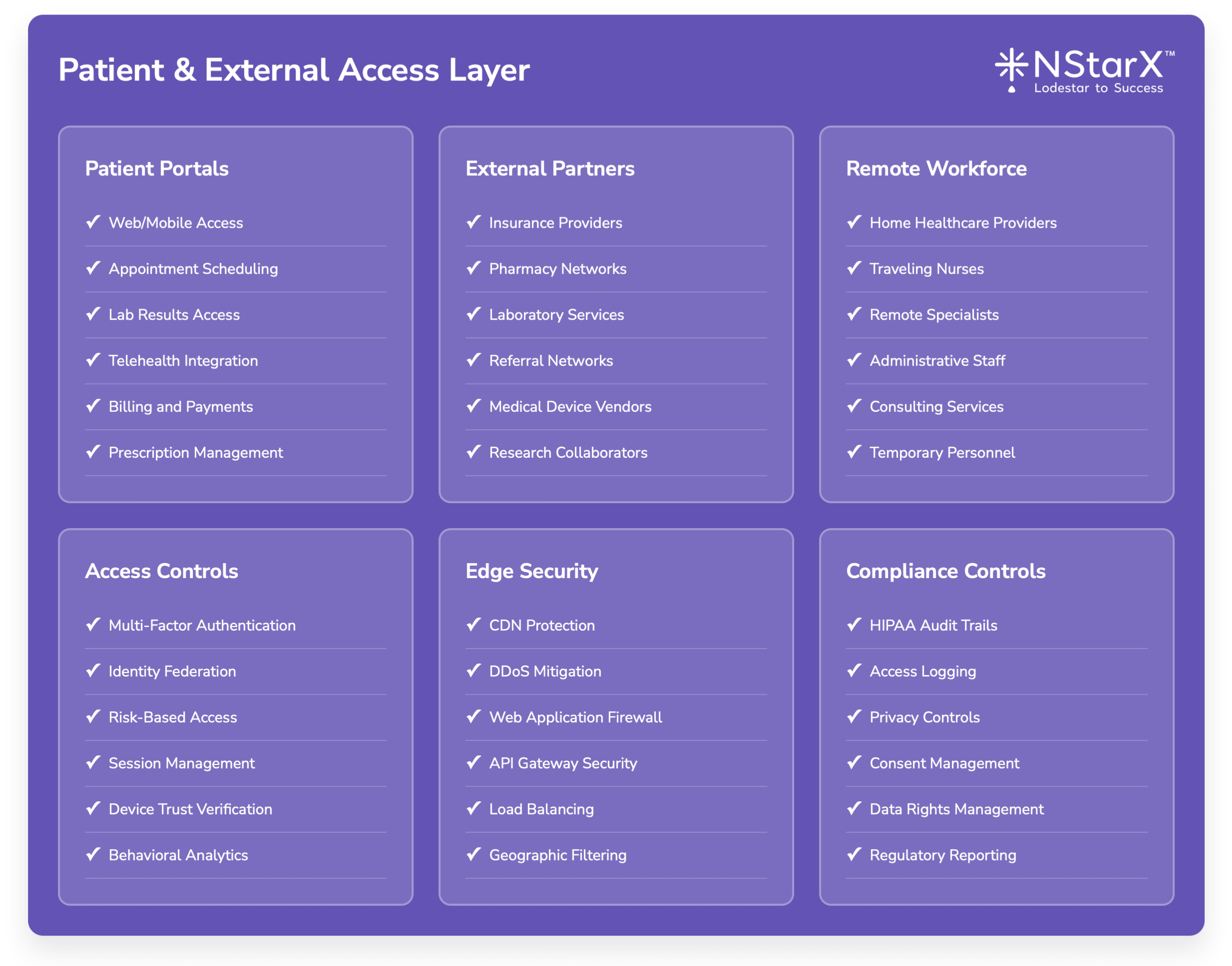

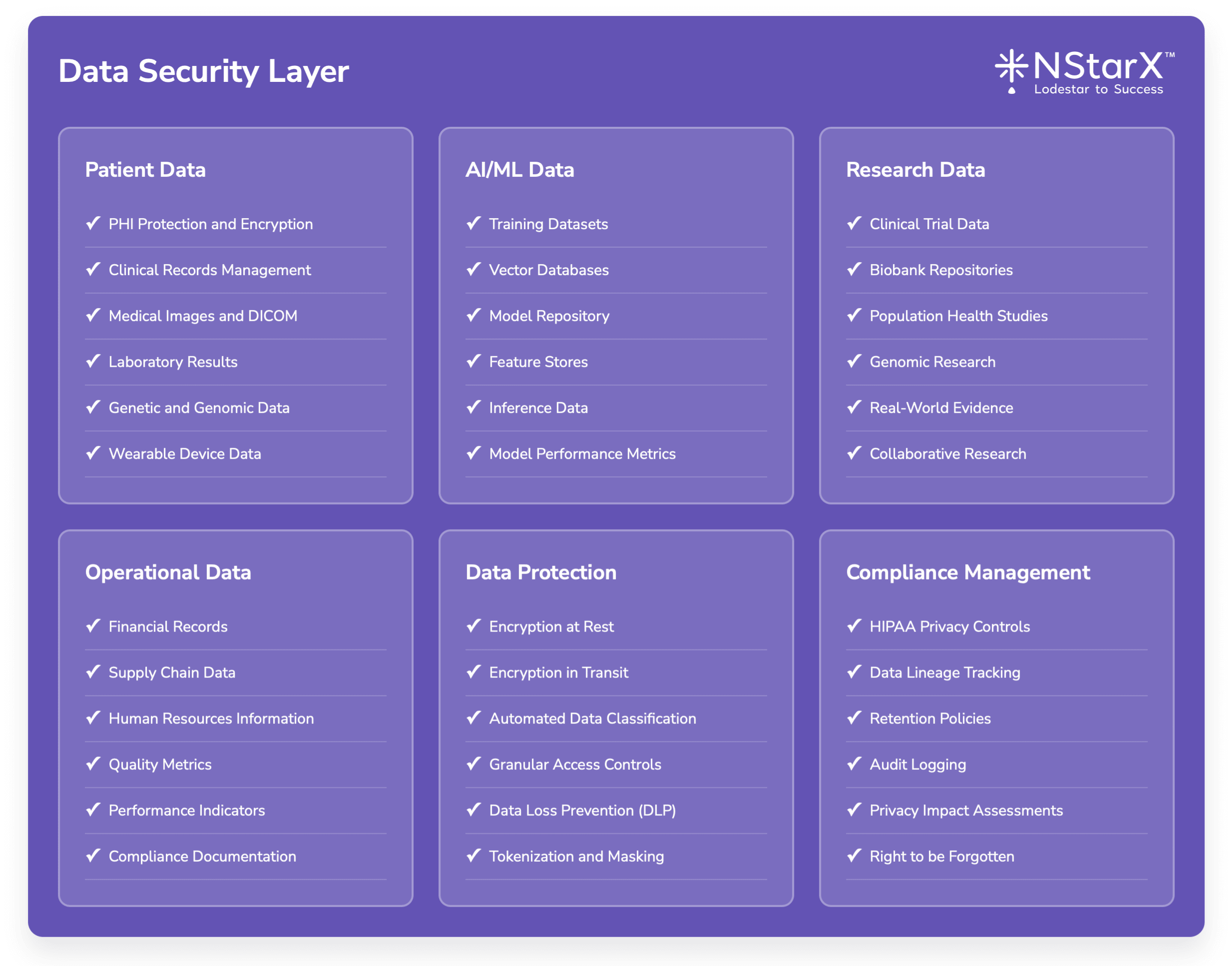

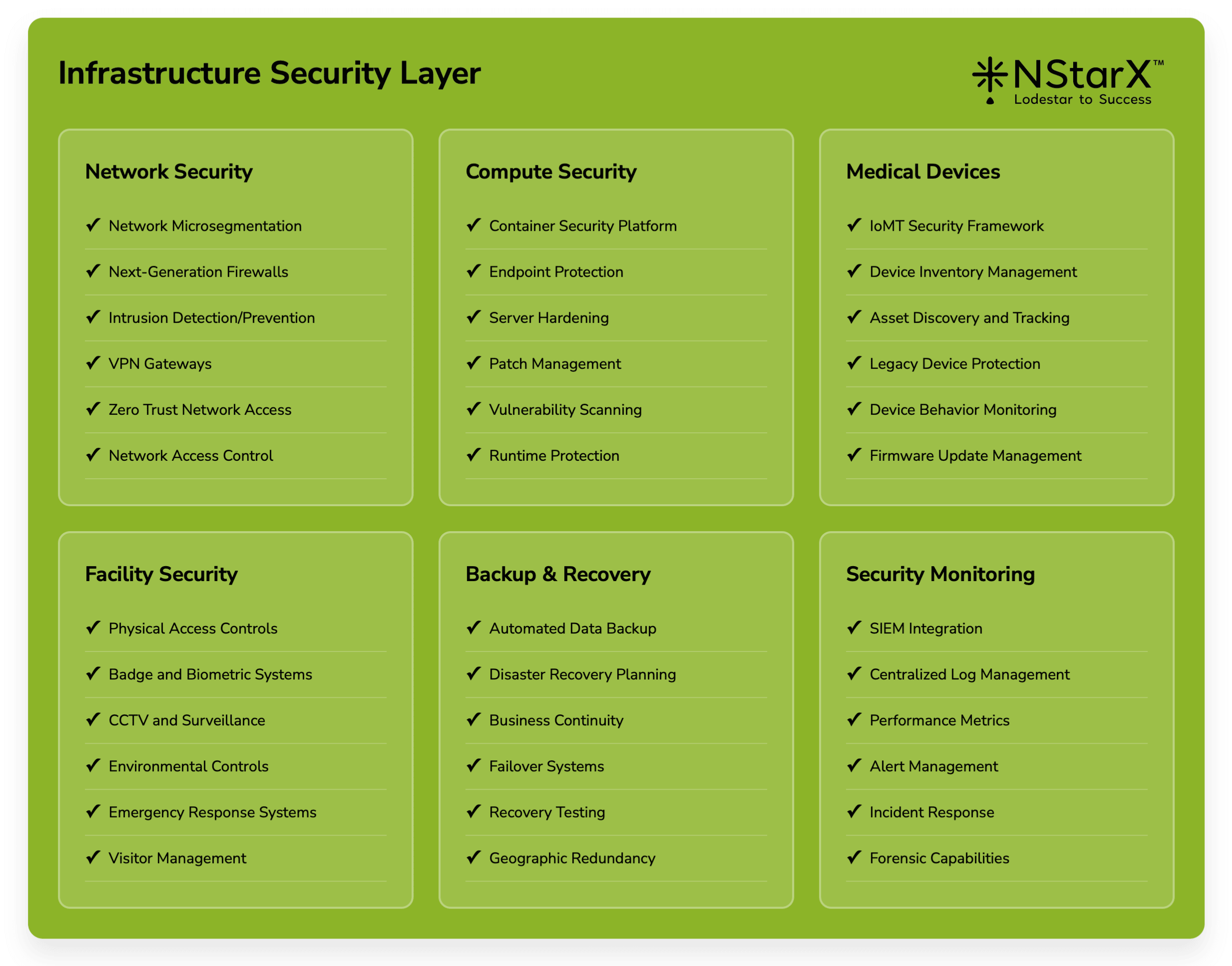

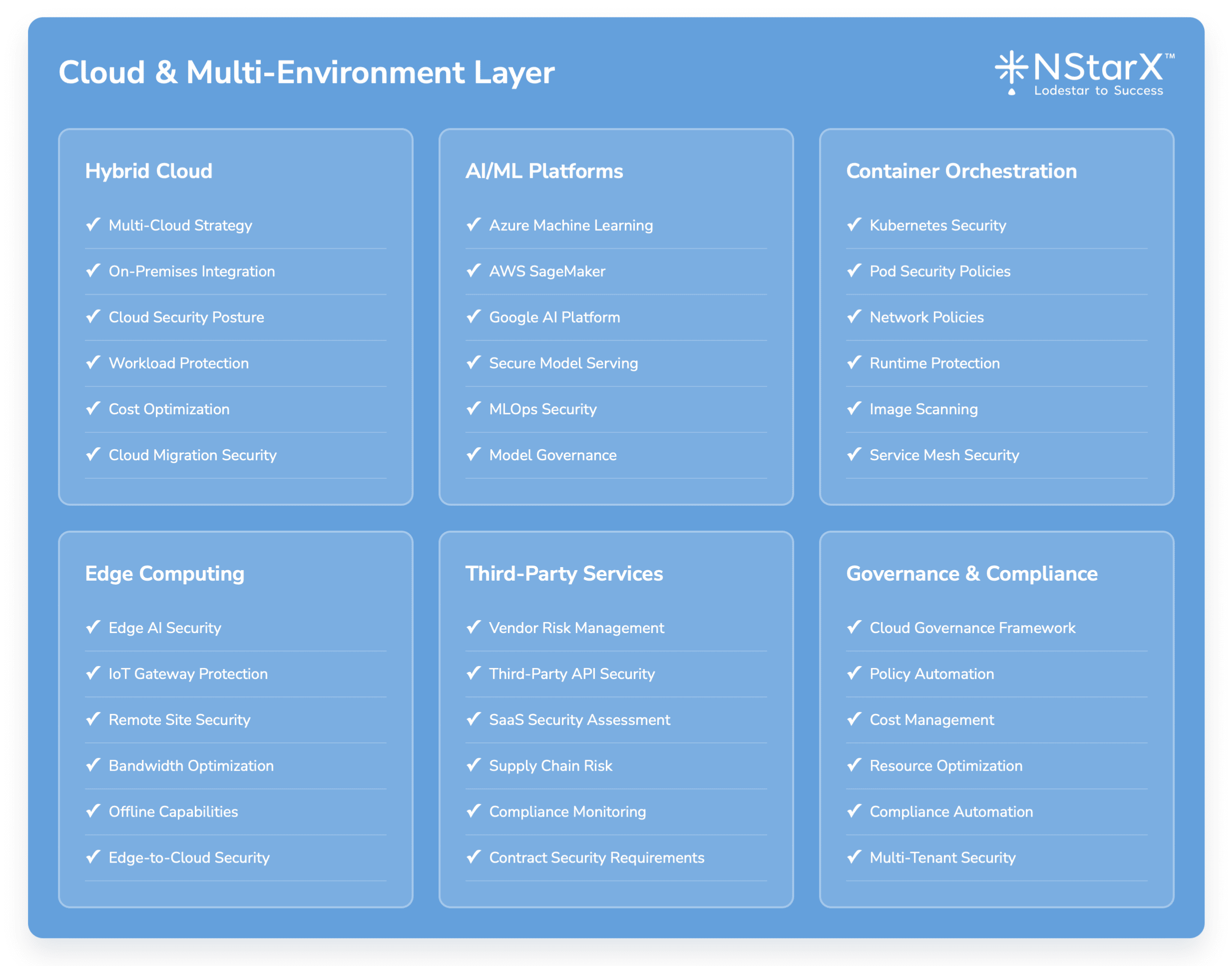

Figure 2 below captures some of the key components of the Zero Trust Security Framework:

Figure 2: Zero Trust Security Framework

Best Practices for Healthcare AI Zero Trust Implementation

Governance and Risk Management

Establish AI Governance Framework:

- Cross-functional AI governance committee including clinical, technical, legal, and compliance stakeholders

- Clear policies for AI development, deployment, and monitoring

- Regular risk assessments and security reviews for AI applications

Implement Continuous Risk Assessment:

- Real-time risk scoring for AI applications and users

- Dynamic policy adjustment based on threat intelligence

- Integration with existing healthcare risk management processes

Technical Implementation Guidelines

Start with High-Risk Use Cases:

- Prioritize Zero Trust implementation for AI applications that access sensitive patient data

- Focus on clinical decision support systems and diagnostic AI applications

- Gradually expand to lower-risk applications and use cases

Implement Comprehensive Logging and Monitoring:

- Centralized logging for all AI application activities

- Real-time monitoring of model performance and security metrics

- Integration with Security Information and Event Management (SIEM) systems

Design for Compliance:

- Built-in audit trails for all AI system interactions

- Automated compliance reporting and documentation

- Regular compliance assessments and third-party audits
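A built-in audit trail is more useful for compliance if it is tamper-evident. One common approach, sketched below under simplifying assumptions, chains each log entry to the previous one with a SHA-256 hash so that any later modification breaks verification. The field names and the in-memory list are hypothetical; a real system would persist entries to write-once storage and forward them to the SIEM.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder hash that precedes the first entry


def _digest(body: dict) -> str:
    """Hash an entry body using a stable JSON serialization."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_entry(log: list, actor: str, action: str, resource: str, timestamp: str) -> dict:
    """Append an entry whose hash covers its content and the previous hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    body = {
        "actor": actor,
        "action": action,
        "resource": resource,
        "timestamp": timestamp,
        "prev_hash": prev_hash,
    }
    entry = dict(body, hash=_digest(body))
    log.append(entry)
    return entry


def verify_chain(log: list) -> bool:
    """Recompute every hash and check that each entry links to the one before it."""
    prev_hash = GENESIS_HASH
    for entry in log:
        body = {key: value for key, value in entry.items() if key != "hash"}
        if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
            return False
        prev_hash = entry["hash"]
    return True


audit_log = []
append_entry(audit_log, "dr_smith", "model_query", "cds_model_v3", "2024-05-01T10:00:00Z")
append_entry(audit_log, "svc_pipeline", "read", "ehr/patient/123", "2024-05-01T10:00:02Z")
print(verify_chain(audit_log))
```

Running `verify_chain` on a schedule, and before any audit export, gives reviewers some assurance that the recorded AI interactions have not been altered after the fact.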

Organizational Change Management

Clinician Training and Adoption:

- Comprehensive training programs for healthcare professionals using AI systems

- Clear protocols for AI-assisted decision making

- Regular feedback collection and system improvement

Security Awareness and Culture:

- Healthcare-specific security awareness training

- Regular phishing simulations and security exercises

- Recognition programs for security best practices

Vendor and Third-Party Management

AI Vendor Security Assessment:

- Comprehensive security questionnaires for AI vendors

- Regular security audits and penetration testing

- Contractual requirements for security and compliance

Supply Chain Security:

- Security assessment of AI model training data sources

- Monitoring of third-party AI services and APIs

- Incident response procedures for vendor security breaches

The Future of Zero Trust Security in Healthcare AI

Emerging Technologies and Approaches

AI-Powered Security Operations: The future of healthcare security will increasingly rely on AI systems to defend against AI-powered attacks. Security Operations Centers will deploy machine learning models to detect anomalous behavior in AI applications, predict potential security incidents, and automate response procedures.

Federated Learning Security: As healthcare organizations adopt federated learning approaches to train AI models across multiple institutions while preserving data privacy, new security frameworks will emerge to protect distributed learning processes and ensure model integrity.

Quantum-Resistant Cryptography: The eventual development of quantum computing will require healthcare organizations to implement quantum-resistant encryption algorithms to protect long-term patient data and AI model intellectual property.

Homomorphic Encryption for Healthcare AI: Advanced cryptographic techniques will enable healthcare AI applications to perform computations on encrypted data without decryption, providing unprecedented privacy protection for patient information.

Regulatory Evolution

AI-Specific Healthcare Regulations: Regulatory frameworks will evolve to address AI-specific risks in healthcare, including requirements for AI model validation, bias detection, and security controls. The FDA’s proposed AI/ML guidance represents early steps in this evolution.

International Standards Development: Global standards organizations will develop specific frameworks for healthcare AI security, including ISO standards for AI risk management and security controls.

Cross-Border Data Protection: As healthcare AI applications increasingly operate across international boundaries, new frameworks will emerge to address cross-border data protection and regulatory compliance.

Technology Integration Trends

Cloud-Native Security Architectures: Healthcare AI applications will increasingly adopt cloud-native security approaches, including serverless computing, container security, and cloud access security brokers (CASBs).

Edge Computing Security: The deployment of AI applications at the network edge, including medical devices and point-of-care systems, will require new security frameworks for distributed AI workloads.

Blockchain for Healthcare AI: Distributed ledger technologies may play increasing roles in healthcare AI security, including model provenance tracking, audit trails, and decentralized identity management.

Threat Landscape Evolution

Nation-State Healthcare Targeting: Healthcare organizations will face increasing threats from nation-state actors seeking to disrupt critical infrastructure, steal intellectual property, or gain access to population health data.

AI-Powered Cyberattacks: Adversaries will increasingly use AI tools to launch sophisticated attacks against healthcare organizations, including automated vulnerability discovery and social engineering campaigns.

Insider Threat Sophistication: The complexity of healthcare AI systems will create new opportunities for insider threats, requiring advanced behavioral monitoring and anomaly detection capabilities.

Strategic Recommendations for Healthcare C-Suite Leaders

For Chief Executive Officers (CEOs)

Strategic Vision and Investment: Healthcare AI represents a fundamental transformation of care delivery, not just a technology upgrade. CEOs must develop comprehensive strategies that balance innovation with risk management, ensuring that security investments keep pace with AI adoption.

Board-Level Oversight: Establish board-level committees focused on AI governance and cybersecurity, with regular reporting on AI security metrics and incident response capabilities. Board members should receive training on AI risks and healthcare cybersecurity trends.

Cultural Transformation: Lead organizational culture change that embraces both innovation and security. Healthcare organizations must develop security-conscious cultures that view Zero Trust as an enabler of AI innovation rather than an impediment.

For Chief Information Officers (CIOs)

Technology Architecture Evolution: Transition from traditional perimeter-based security to Zero Trust architectures that can support dynamic AI workloads. This requires fundamental changes to network design, identity management, and data protection strategies.

Cloud Strategy Alignment: Develop cloud strategies that support AI innovation while maintaining security and compliance requirements. This includes selecting cloud providers with healthcare-specific security capabilities and implementing proper shared responsibility models.

Legacy System Integration: Create strategies for integrating AI applications with existing healthcare IT infrastructure while maintaining security boundaries and minimizing attack surface expansion.

Skills Development: Invest in training and hiring programs to develop organizational capabilities in AI security, Zero Trust implementation, and healthcare-specific cybersecurity practices.

For Chief Technology Officers (CTOs)

AI Security by Design: Implement security-by-design principles for all AI application development, including threat modeling, secure coding practices, and comprehensive testing procedures.

Technical Standards Development: Establish technical standards for AI security controls, including model validation, data protection, and monitoring requirements that align with Zero Trust principles.

Innovation Risk Management: Balance innovation speed with security requirements by implementing DevSecOps practices that integrate security controls into AI development pipelines without hindering deployment velocity.

Vendor Technology Assessment: Develop comprehensive technical evaluation criteria for AI vendors and cloud providers, focusing on security capabilities, compliance features, and integration requirements.

For Chief Information Security Officers (CISOs)

Zero Trust Implementation Roadmap: Develop phased implementation plans for Zero Trust security architectures that prioritize high-risk AI applications and gradually expand to comprehensive organizational coverage.

AI-Specific Security Program: Create specialized security programs for AI applications that address unique risks such as model poisoning, prompt injection, and adversarial attacks.

Threat Intelligence and Monitoring: Implement advanced threat intelligence programs focused on AI security threats and healthcare-specific attack vectors, with real-time monitoring and automated response capabilities.

Compliance and Audit: Establish comprehensive audit programs for AI security controls that demonstrate compliance with healthcare regulations and industry standards.

Incident Response Planning: Develop specialized incident response procedures for AI security incidents, including model compromise, data poisoning, and AI-enabled attacks.

For Chief Financial Officers (CFOs)

Security Investment ROI: Develop financial models that quantify the return on investment for Zero Trust security implementations, including risk reduction, compliance cost savings, and operational efficiency improvements.

Cyber Insurance Strategy: Work with insurance providers to understand coverage options for AI-related security incidents and ensure that Zero Trust implementations qualify for favorable insurance terms.

Budget Planning and Allocation: Allocate sufficient budget for comprehensive Zero Trust implementations, recognizing that security investments are essential for AI initiative success and regulatory compliance.

Cost-Benefit Analysis: Conduct thorough cost-benefit analyses for security technologies and services, considering both direct costs and potential incident impact on organizational operations and reputation.

Financial Risk Management: Integrate AI security risks into enterprise risk management frameworks, with appropriate financial reserves and contingency planning for potential security incidents.

Conclusion: Building Secure Healthcare AI for the Future

The healthcare industry stands at a transformative moment where Generative AI applications promise to revolutionize patient care, clinical decision-making, and operational efficiency. However, realizing these benefits requires healthcare organizations to fundamentally reimagine their security architectures around Zero Trust principles.

The stakes could not be higher. Healthcare organizations that fail to implement comprehensive Zero Trust security for their AI applications face existential risks including catastrophic data breaches, regulatory penalties, operational disruption, and most importantly, compromised patient safety. Conversely, organizations that successfully implement Zero Trust architectures will gain competitive advantages through secure innovation, regulatory compliance, and stakeholder trust.

Key Success Factors:

Executive Leadership and Commitment: Zero Trust implementation requires sustained executive sponsorship and cross-functional collaboration between clinical, technical, and business leaders.

Phased Implementation Approach: Organizations should start with high-risk AI applications and gradually expand Zero Trust coverage across the enterprise, learning and adapting as they progress.

Continuous Adaptation: The rapidly evolving threat landscape and AI technology developments require continuous monitoring, assessment, and adaptation of security strategies.

Stakeholder Engagement: Success requires engagement from clinicians, IT professionals, security teams, and business stakeholders who must understand both the opportunities and risks of healthcare AI.

Investment in Capabilities: Organizations must invest in both technology and human capabilities to implement and maintain effective Zero Trust security architectures.

The future of healthcare depends on our ability to harness AI technologies safely and securely. Zero Trust security provides the foundation for this transformation, enabling healthcare organizations to innovate confidently while protecting the patients and communities they serve.

Healthcare executives who act decisively to implement Zero Trust security for their AI initiatives will position their organizations for success in an AI-driven healthcare future. Those who delay face increasing risks that could jeopardize their ability to deliver safe, effective patient care in an increasingly digital world.

The time for action is now. The question is not whether healthcare organizations will adopt AI technologies, but whether they will do so securely and responsibly through comprehensive Zero Trust implementations.
