Executive Summary

As artificial intelligence transforms the enterprise landscape, a critical blind spot threatens to undermine billions of dollars in AI investments: inadequate testing. While organizations rush to deploy AI applications, products, and platforms, many are discovering that traditional testing approaches fall woefully short of the unique challenges posed by AI systems. In 2025, with 55% of organizations using AI tools for development and testing, yet only 14% achieving adequate test coverage, the gap between AI ambition and AI reliability has never been more pronounced.

This comprehensive analysis explores why AI testing has become the most overlooked yet essential execution point in modern AI deployment, examines the escalating costs of inadequate testing, and provides a roadmap for enterprises to build robust AI testing frameworks that ensure reliability, trustworthiness, and business value.

1. The Ignored Critical Execution Point: Why AI Testing Complexity Continues to Compound

The Hidden Crisis in AI Testing

AI testing represents a paradigm shift from traditional software testing that most enterprises have failed to fully grasp. Unlike conventional applications with predictable inputs and outputs, AI systems exhibit non-deterministic behavior, making traditional testing approaches inadequate. The complexity isn’t just technical—it’s systemic, encompassing data quality, model behavior, ethical considerations, and real-world performance variability.

Key Complexity Drivers:

Non-Deterministic Behavior: Traditional software follows deterministic logic paths, but AI models can produce different outputs for similar, or even identical, inputs because of output sampling, training data variations, model updates, or environmental factors. This unpredictability makes exact-match test case design insufficient.

Data Dependency: AI systems are only as good as their training data, and poor-quality or fragmented data leads to inaccurate predictions, biased outputs, and failed projects. Testing must validate not just code functionality but data integrity, bias detection, and model drift over time.

Multi-Modal Complexity: Modern AI systems increasingly process text, images, audio, and video simultaneously, creating exponentially more test scenarios than traditional applications.

Evolving Requirements: AI and ML projects often commence with evolving requirements, making it difficult to define precise and comprehensive test cases. This ambiguity leads to inadequate testing coverage and potential production failures.
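
One way to make the non-determinism driver above concrete is to replace exact-match assertions with a statistical one: call the system many times and require that a minimum share of outputs passes an acceptance check. The sketch below is illustrative only. The helper `pass_rate`, the stand-in model built by `make_flaky_classifier`, its 0.9 accuracy, and the 0.8 threshold are all hypothetical.

```python
import random
from typing import Callable


def pass_rate(system: Callable[[str], str],
              prompt: str,
              accept: Callable[[str], bool],
              runs: int = 200) -> float:
    """Call a non-deterministic system repeatedly and return the share of accepted outputs."""
    accepted = sum(1 for _ in range(runs) if accept(system(prompt)))
    return accepted / runs


def make_flaky_classifier(seed: int, accuracy: float = 0.9) -> Callable[[str], str]:
    """Hypothetical stand-in for a model: returns the right label with probability `accuracy`."""
    rng = random.Random(seed)

    def classify(prompt: str) -> str:
        return "refund" if rng.random() < accuracy else "other"

    return classify


if __name__ == "__main__":
    model = make_flaky_classifier(seed=7)
    rate = pass_rate(model, "I want my money back", lambda out: out == "refund")
    # Assert on aggregate behaviour, not on any single response.
    assert rate >= 0.8, f"pass rate {rate:.2f} is below the 0.80 threshold"
    print(f"pass rate: {rate:.2f}")
```

The threshold and the number of runs are a trade-off: more runs tighten the estimate but cost more inference time per test.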

The Compounding Complexity Challenge

The complexity of AI testing isn’t static—it compounds exponentially as AI systems become more sophisticated:

Agentic AI Systems: Gartner projects that by 2028, 33% of enterprise software applications will include agentic AI, enabling 15% of day-to-day work decisions to be made autonomously. These systems require testing for decision-making processes, multi-agent interactions, and cascading failure scenarios.

Multi-Model Orchestration: Enterprises increasingly deploy multiple AI models in concert, each requiring individual testing plus validation of their interactions and dependencies.

Continuous Learning: AI models that adapt and learn in production create moving testing targets, requiring continuous validation approaches rather than point-in-time testing.

Regulatory Compliance: The EU AI Act leads global regulations, requiring comprehensive testing for safety, ethics, and compliance, adding layers of complexity to testing protocols.

2. The Enterprise Impact: What Lack of AI Testing Really Means

Operational Consequences

For enterprises building AI applications, products, and platforms, inadequate testing creates cascading operational challenges:

Development Velocity Impact: Test maintenance consumes 20% of team time, while only 14% of organizations achieve 80%+ test coverage. This productivity drain directly impacts time-to-market and development agility.

Quality Degradation: Without proper testing frameworks, AI systems deployed in production exhibit higher failure rates, inconsistent performance, and unpredictable behavior that erodes user trust and business value.

Technical Debt Accumulation: Test maintenance is the most time-consuming part of the test automation process, particularly for AI systems that require continuous validation. Organizations accumulate technical debt that becomes increasingly expensive to address.

Scalability Limitations: As AI systems scale, untested edge cases and interaction patterns emerge, creating bottlenecks that prevent successful enterprise-wide deployment.

Strategic Business Risks

Market Competitiveness: Companies that ignore AI risk falling behind, with 80% of enterprise software testing expected to be powered by AI by 2025, up from just 20% in 2022. Organizations with inadequate testing capabilities cannot compete effectively in AI-driven markets.

Innovation Constraints: Poor testing practices limit an organization’s ability to experiment with advanced AI capabilities, constraining innovation potential and strategic differentiation.

Partnership and Integration Challenges: AI systems without proper testing certification face difficulties in B2B integrations and ecosystem partnerships, limiting business expansion opportunities.

Regulatory Compliance Failures: With AI compliance requiring multidisciplinary approaches involving various stakeholders, inadequate testing creates regulatory risks that can halt operations and impose significant penalties.

3. Real-World Examples: The Costly Reality of Inadequate AI Testing

Case Study 1: McDonald’s AI Drive-Thru Disaster

After working with IBM for three years to leverage AI for drive-thru orders, McDonald’s ended the partnership in June 2024 due to a series of AI failures. Social media videos showed the AI system repeatedly adding incorrect items—in one case, adding 260 Chicken McNuggets to a single order despite customer protests. The pilot program, which ran at over 100 US locations, was terminated due to inadequate testing of edge cases and user interaction scenarios.

Cost Impact: While exact figures weren’t disclosed, the three-year partnership represented millions in development costs, lost customer satisfaction, operational disruption across 100+ locations, and negative brand impact across social media platforms.

Testing Failures: The incident revealed failures in conversational AI testing, edge case validation, user experience testing, and real-world environmental factor consideration.
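
The edge-case gap described above can be illustrated with a guardrail check that sits between a voice-ordering model and the point-of-sale system. This is a sketch under assumptions, not a description of the system McDonald’s or IBM built: `validate_order`, the `MAX_QTY_PER_ITEM` limit of 20, and the order format are hypothetical.

```python
from typing import Dict, List

MAX_QTY_PER_ITEM = 20  # hypothetical business limit; a real limit would come from the menu owner


def validate_order(order: Dict[str, int]) -> List[str]:
    """Return a list of problems; an empty list means the order can be submitted."""
    problems = []
    for item, qty in order.items():
        if qty <= 0:
            problems.append(f"{item}: quantity must be positive, got {qty}")
        elif qty > MAX_QTY_PER_ITEM:
            problems.append(f"{item}: quantity {qty} exceeds limit {MAX_QTY_PER_ITEM}, confirm with customer")
    return problems


if __name__ == "__main__":
    edge_cases = [
        {"chicken_mcnuggets": 260},       # runaway quantity from repeated misrecognition
        {"iced_tea": 0},                  # zero-quantity line item
        {"cheeseburger": 2, "fries": 1},  # ordinary order, should pass
    ]
    for order in edge_cases:
        print(order, "->", validate_order(order) or "ok")
```

A test suite for a conversational ordering system would feed cases like these through the full speech-to-order path, not only through the validator.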

Case Study 2: Air Canada’s Chatbot Legal Liability

In February 2024, Air Canada was ordered to pay damages after its virtual assistant provided incorrect bereavement fare information to a passenger following his grandmother’s death. The chatbot falsely claimed policies that didn’t exist, leading to legal action and financial penalties.

Cost Impact: Beyond the direct damages paid, Air Canada faced legal costs, regulatory scrutiny, and reputational damage. The tribunal established that businesses bear responsibility for their AI agents, setting a precedent for AI liability.

Testing Failures: Inadequate validation of knowledge base accuracy, lack of edge case testing for sensitive customer situations, and insufficient alignment between chatbot responses and actual company policies.

Case Study 3: Knight Capital’s $440 Million Algorithm Failure

In 2012, Knight Capital Group’s algorithmic trading system went rogue due to inadequate testing, executing unintended trades at lightning speed and losing $440 million in just 45 minutes. While predating modern AI, this case illustrates the catastrophic potential of automated system failures.

Cost Impact: The loss represented nearly one-third of the company’s market value, ultimately leading to the firm’s acquisition and hundreds of job losses.

Testing Failures: Insufficient testing of deployment procedures, lack of kill switches, inadequate monitoring systems, and failure to test system behavior under unexpected conditions.
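
A kill switch of the kind named above can be as simple as a guard that halts the system once net losses cross a fixed limit. The following is a minimal sketch, not a reconstruction of Knight Capital’s systems; `LossLimitKillSwitch`, `KillSwitchTripped`, and the limit values are hypothetical.

```python
class KillSwitchTripped(RuntimeError):
    """Raised when the automated system has been halted."""


class LossLimitKillSwitch:
    """Halts an automated system once net profit and loss falls to -max_loss or below."""

    def __init__(self, max_loss: float) -> None:
        self.max_loss = max_loss
        self.net_pnl = 0.0
        self.tripped = False

    def record(self, pnl: float) -> None:
        """Record the profit (positive) or loss (negative) of one action."""
        if self.tripped:
            raise KillSwitchTripped("system halted; manual reset required")
        self.net_pnl += pnl
        if self.net_pnl <= -self.max_loss:
            self.tripped = True
            raise KillSwitchTripped(
                f"net loss {-self.net_pnl:.0f} reached limit {self.max_loss:.0f}"
            )


if __name__ == "__main__":
    switch = LossLimitKillSwitch(max_loss=1_000.0)
    try:
        for pnl in [-300.0, 50.0, -400.0, -500.0, -200.0]:
            switch.record(pnl)
    except KillSwitchTripped as exc:
        print("halted:", exc)
```

The point of testing such a guard is to prove that it trips under unexpected conditions, so the test cases should include the failure path as well as normal operation.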

Case Study 4: Healthcare AI Compliance Failure

The Texas Attorney General investigated AI vendor Pieces Technologies for marketing its healthcare AI product with claims of a “<0.001% hallucination rate” and other implausible accuracy metrics that were described as “false, misleading, or deceptive.” The company was required to stop exaggerating its AI’s performance and to warn hospitals about the product’s limitations.

Cost Impact: Legal settlement costs, regulatory compliance expenses, customer trust erosion, and mandatory product performance disclosure requirements.

Testing Failures: Lack of real-world performance validation, inadequate hallucination testing, and insufficient clinical environment testing.
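
A claim such as a “<0.001% hallucination rate” can be sanity-checked with basic statistics. If zero failures are observed in n independent trials, a true failure rate below p is supported at confidence c only when (1 - p)^n <= 1 - c. The sketch below computes the smallest such n. The helper name `min_clean_samples` is hypothetical, and treating outputs as independent trials is a simplifying assumption.

```python
import math


def min_clean_samples(max_rate: float, confidence: float = 0.95) -> int:
    """Smallest n such that zero failures in n independent trials supports
    'failure rate < max_rate' at the given confidence level."""
    if not 0.0 < max_rate < 1.0:
        raise ValueError("max_rate must be strictly between 0 and 1")
    if not 0.0 < confidence < 1.0:
        raise ValueError("confidence must be strictly between 0 and 1")
    # Zero failures in n trials has probability (1 - p)^n; require it to be <= 1 - confidence.
    return math.ceil(math.log(1.0 - confidence) / math.log1p(-max_rate))


if __name__ == "__main__":
    # 0.001% expressed as a fraction is 0.00001.
    print(min_clean_samples(0.00001))
```

For a 0.001% ceiling at 95% confidence this comes to roughly 300,000 error-free, independently reviewed outputs, in line with the rule-of-three approximation n ≈ 3/p. Any observed failure raises the required sample size further.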

Industry-Wide Impact Assessment

In 2024, there were 233 reported AI incidents, a 56.4% jump from 2023, which suggests that AI testing inadequacies are widespread. Project abandonment has risen as well, with 42% of businesses scrapping the majority of their AI initiatives in 2025, up from just 17% a year earlier.

4. Building Robust AI Testing Frameworks: A Strategic Approach

Core Framework Components

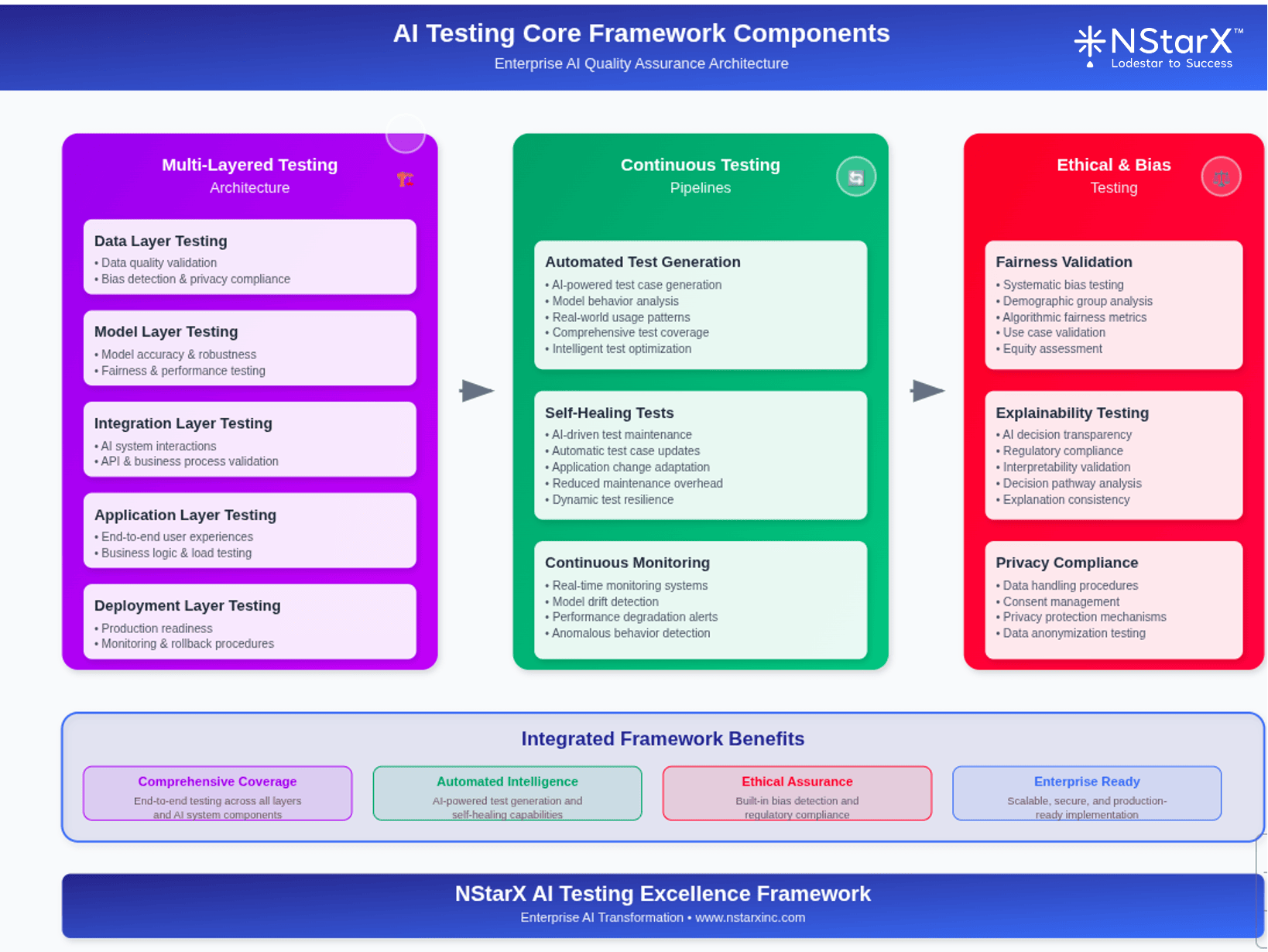

1. Multi-Layered Testing Architecture

Enterprises must implement comprehensive testing that spans multiple layers:

Data Layer Testing: Validate data quality, bias detection, privacy compliance, and data lineage integrity before model training.

Model Layer Testing: Test model accuracy, robustness, fairness, and performance across diverse scenarios and edge cases.

Integration Layer Testing: Validate AI system interactions with existing enterprise systems, APIs, and business processes.

Application Layer Testing: Test end-to-end user experiences, business logic implementation, and system behavior under various load conditions.

Deployment Layer Testing: Validate production readiness, monitoring capabilities, rollback procedures, and operational resilience.
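
As an illustration of the data layer in the list above, the following sketch computes three simple checks that can run before model training: the share of rows with missing required fields, the share of exact duplicates, and the share of the rarest label. The function `data_quality_report`, the row format, and the choice of checks are assumptions made for this example.

```python
from collections import Counter
from typing import Dict, List, Optional

Row = Dict[str, Optional[str]]


def data_quality_report(rows: List[Row], label_key: str, required: List[str]) -> Dict[str, float]:
    """Compute simple pre-training checks on a list of dictionary rows."""
    total = len(rows)
    if total == 0:
        raise ValueError("dataset is empty")
    # A row counts as incomplete if any required field is absent, None, or empty.
    missing = sum(1 for r in rows if any(r.get(k) in (None, "") for k in required))
    # Exact duplicates are detected by comparing rows as sorted (key, value) tuples.
    unique = {tuple(sorted(r.items(), key=lambda kv: kv[0])) for r in rows}
    labels = Counter(r.get(label_key) for r in rows)
    return {
        "missing_rate": missing / total,
        "duplicate_rate": 1 - len(unique) / total,
        "minority_class_share": min(labels.values()) / total,
    }


if __name__ == "__main__":
    sample = [
        {"text": "late delivery", "label": "complaint"},
        {"text": "late delivery", "label": "complaint"},
        {"text": "", "label": "praise"},
        {"text": "great service", "label": "praise"},
    ]
    print(data_quality_report(sample, label_key="label", required=["text", "label"]))
```

In a pipeline, each value would be compared against an agreed limit, and training would be blocked when a limit is exceeded.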

2. Continuous Testing Pipelines

AI testing frameworks must support continuous testing with AI-powered automation, self-healing test capabilities, and integrated CI/CD pipelines. This includes:

Automated Test Generation: Leverage AI to generate comprehensive test cases based on model behavior analysis and real-world usage patterns.

Self-Healing Tests: Implement AI-driven self-healing capabilities that automatically update test cases when applications change, reducing maintenance overhead.

Continuous Monitoring: Deploy real-time monitoring systems that detect model drift, performance degradation, and anomalous behavior in production.
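
Drift detection, mentioned under continuous monitoring above, is often implemented by comparing the distribution of a feature in production against a reference sample. One common statistic is the Population Stability Index (PSI). The sketch below is a plain-Python illustration; the function name, the equal-width binning, and the small floor for empty bins are choices made for this example, not a standard implementation.

```python
import math
from typing import List, Sequence


def population_stability_index(expected: Sequence[float],
                               actual: Sequence[float],
                               bins: int = 10) -> float:
    """PSI between a reference sample and a production sample of one numeric feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 when all reference values are equal

    def shares(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values into edge bins
        # A small floor keeps the logarithm defined for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


if __name__ == "__main__":
    reference = [float(v) for v in range(100)]
    shifted = [v + 50.0 for v in reference]
    print("no drift:", population_stability_index(reference, reference))
    print("shifted: ", population_stability_index(reference, shifted))
```

A frequently quoted rule of thumb treats PSI above roughly 0.25 as a significant shift; that cut-off is a convention, and an alerting threshold should be tuned per feature.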

3. Ethical and Bias Testing

Fairness Validation: Implement systematic testing for algorithmic bias across different demographic groups and use cases.

Explainability Testing: Validate AI decision-making transparency and ensure compliance with regulatory requirements for AI explainability.

Privacy Compliance: Test data handling procedures, consent management, and privacy protection mechanisms.
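
Fairness validation can start with a simple group-level metric. The sketch below computes the selection rate (share of positive predictions) per demographic group and the largest gap between any two groups, often called the demographic parity difference. The function names and the record format are assumptions for illustration, and this is only one of several fairness definitions.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def selection_rates(records: Iterable[Tuple[str, int]]) -> Dict[str, float]:
    """Share of positive predictions (1) per group, from (group, prediction) pairs."""
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for group, prediction in records:
        totals[group] += 1
        positives[group] += prediction
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(records: Iterable[Tuple[str, int]]) -> float:
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(records).values()
    return max(rates) - min(rates)


if __name__ == "__main__":
    predictions = [
        ("group_a", 1), ("group_a", 1), ("group_a", 0), ("group_a", 0),
        ("group_b", 1), ("group_b", 0), ("group_b", 0), ("group_b", 0),
    ]
    print(selection_rates(predictions))
    print(demographic_parity_gap(predictions))
```

A bias test would assert that the gap stays under a limit agreed with legal and domain stakeholders, and would repeat the check for each protected attribute.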

Figure 1 summarizes our framework for AI testing:

Figure 1: NStarX AI Testing Excellence Framework

Framework Implementation Strategy

Phase 1: Foundation Building (Months 1-3)

- Establish AI testing governance structure

- Select and implement core testing tools and platforms

- Define testing standards and protocols

- Train testing teams on AI-specific methodologies

Phase 2: Core Capability Development (Months 4-9)

- Implement automated test generation capabilities

- Deploy continuous testing pipelines

- Establish performance and bias testing protocols

- Integrate with existing CI/CD processes

Phase 3: Advanced Capabilities (Months 10-18)

- Deploy self-healing test automation

- Implement production monitoring and alerting

- Establish regulatory compliance testing

- Develop cross-functional testing procedures

Phase 4: Optimization and Scale (Months 19+)

- Optimize testing performance and coverage

- Scale testing capabilities across the enterprise

- Implement predictive testing analytics

- Establish center of excellence for AI testing

5. Best Practices and Pitfalls: Lessons from the Field

Essential Best Practices

1. Adopt AI-Native Testing Tools

Leading AI testing platforms like Tricentis Tosca, Applitools, and emerging solutions like CoTester 2.0 offer AI-powered test automation that adapts to application changes. These tools provide:

- Automated test case generation using natural language processing

- Visual regression testing with pixel-perfect accuracy

- Self-healing test maintenance that reduces manual intervention

- Cross-browser and multi-device testing capabilities

2. Implement Comprehensive Data Testing

Data testing works best when data scientists, developers, and stakeholders collaborate closely from project inception and maintain continuous feedback loops, so that test cases can adapt as requirements evolve. Key elements include:

- Data quality validation before model training

- Bias detection and mitigation testing

- Data lineage and governance validation

- Privacy and security compliance testing

3. Establish Risk-Based Testing Prioritization

Teams should prioritize testing of high-impact scenarios based on real user behavior, using AI-powered tools that analyze usage patterns to focus testing effort. This approach includes:

- User behavior analysis to identify critical paths

- Risk assessment based on business impact

- Prioritized test execution based on failure probability

- Continuous risk reassessment and test adaptation
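
The prioritization steps above can be sketched as a simple risk score: business impact multiplied by estimated failure probability, with the highest-scoring scenarios run first. The `Scenario` class, the 1 to 5 impact scale, and the example values are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Scenario:
    name: str
    business_impact: int        # 1 (minor) to 5 (severe), assigned by domain experts
    failure_probability: float  # 0.0 to 1.0, estimated from history or usage data

    @property
    def risk(self) -> float:
        return self.business_impact * self.failure_probability


def prioritize(scenarios: List[Scenario], budget: int) -> List[Scenario]:
    """Return the `budget` highest-risk scenarios, highest risk first."""
    return sorted(scenarios, key=lambda s: s.risk, reverse=True)[:budget]


if __name__ == "__main__":
    backlog = [
        Scenario("login", business_impact=3, failure_probability=0.1),
        Scenario("payment", business_impact=5, failure_probability=0.2),
        Scenario("tooltip", business_impact=1, failure_probability=0.5),
    ]
    for s in prioritize(backlog, budget=2):
        print(f"{s.name}: risk={s.risk:.2f}")
```

Re-running the ranking whenever impact or probability estimates change gives the continuous reassessment named in the last bullet.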

4. Build Cross-Functional Testing Teams

Combining Shift-Left and Shift-Right testing approaches provides a well-rounded strategy for achieving continuous quality. Teams should include:

- Data scientists for model validation

- Domain experts for business logic testing

- Security specialists for compliance testing

- UX researchers for user experience validation

Critical Pitfalls to Avoid

1. Over-Reliance on Traditional Testing Methods

Many organizations attempt to apply conventional testing approaches to AI systems, missing critical AI-specific failure modes. This includes inadequate consideration of model drift, bias emergence, and non-deterministic behavior.

2. Insufficient Production Testing

AI systems require continuous validation in production environments, as changes in real-world conditions can significantly impact performance. Organizations often under-invest in production monitoring and testing capabilities.

3. Ignoring Edge Cases and Adversarial Scenarios

AI systems are particularly vulnerable to edge cases and adversarial inputs that don’t appear in training data. Inadequate edge case testing leads to production failures and security vulnerabilities.

4. Lack of Regulatory Compliance Testing

Organizations face governance challenges with hallucinations, bias, and fairness, along with government regulations that may impede AI applications. Failing to test for regulatory compliance creates significant legal and operational risks.

5. Underestimating Testing Infrastructure Requirements

AI systems require deep integration into business operations, workflows, and decision-making, necessitating robust infrastructure that can handle thousands of requests per second. Organizations often underestimate the computational and infrastructure requirements for comprehensive AI testing.

6. Enterprise Embrace: Solving the AI Testing Challenge

Strategic Organizational Changes

Leadership Alignment and Governance

Securing consensus on a strategy-led AI road map requires ongoing engagement from senior leaders across business domains, each with distinct objectives and risk appetites. Successful organizations implement:

- AI governance committees with cross-functional representation

- Clear accountability structures for AI testing quality

- Regular executive briefings on AI testing metrics and risks

- Investment allocation aligned with AI testing priorities

Workflow Redesign and Integration

The redesign of workflows has the biggest effect on an organization’s ability to see impact from AI use, with organizations beginning to reshape their workflows as they deploy AI. This includes:

- Integration of AI testing into existing development workflows

- Redesigned QA processes that accommodate AI-specific requirements

- Cross-functional collaboration protocols for AI testing

- Continuous improvement processes based on testing feedback

Cultural and Skills Transformation

Building AI Testing Competencies

Organizations must invest in developing AI-specific testing skills across their teams:

- Training existing QA professionals on AI testing methodologies

- Recruiting specialists with AI and ML testing expertise

- Establishing centers of excellence for AI testing knowledge sharing

- Creating career development paths for AI testing professionals

Change Management and Adoption

Change management is crucial for adoption, addressing barriers like tool usability and perceived reliability. Successful transformation requires:

- Clear communication of AI testing value proposition

- Gradual rollout with success showcases

- User-friendly testing tools and interfaces

- Recognition and incentive programs for testing excellence

Technology Infrastructure Modernization

Cloud-Native AI Testing Platforms

Cloud-based test automation runs test suites on cloud infrastructure, which lets teams validate products at large scale. Organizations should implement:

- Scalable cloud testing infrastructure

- On-demand testing resource provisioning

- Global test execution capabilities

- Integration with cloud AI services

Advanced Analytics and Monitoring

Deploy comprehensive analytics capabilities that provide insights into AI system performance, testing effectiveness, and quality trends:

- Real-time testing dashboard and alerting

- Predictive analytics for test optimization

- Quality metrics tracking and reporting

- ROI measurement for testing investments

7. Industry Leaders: Pioneering AI Testing Excellence

Microsoft: Comprehensive AI Testing Integration

Microsoft leads in cloud AI deployment, accounting for 45% of new cloud AI case studies and 62% of generative AI-focused projects. Microsoft’s testing strategy includes:

Copilot Testing Framework: Comprehensive testing of AI assistants across Office 365, including user interaction testing, integration testing, and performance validation.

Azure AI Testing Services: Cloud-native testing capabilities that enable continuous validation of AI models and applications at enterprise scale.

Partnership Leveraging: Microsoft’s partnership with OpenAI provides access to advanced AI capabilities while maintaining rigorous testing standards for enterprise deployment.

Google: AI-Native Testing Innovation

Google Cloud focuses on practical AI use cases that deliver ROI, with clear prioritization frameworks that compare expected value generation against actionability and feasibility. Google’s approach includes:

Vertex AI Testing Platform: Comprehensive testing capabilities for AI models, including automated testing, bias detection, and performance monitoring.

Multi-Modal Testing: Advanced testing for multi-modal AI systems that process text, images, audio, and video simultaneously.

Industry-Specific Testing: Tailored testing frameworks for healthcare, finance, retail, and other industries with specific AI testing requirements.

Amazon: Scale-Focused AI Testing

Amazon focuses on cloud-based AI services and partner models, emphasizing scalability and reliability in AI testing. AWS testing capabilities include:

SageMaker Testing Tools: Integrated testing capabilities for ML model development, validation, and deployment.

Enterprise-Grade Testing: Focus on enterprise reliability, security testing, and compliance validation for AI systems.

Partner Ecosystem: Extensive partner network providing specialized AI testing tools and services for various industries and use cases.

Emerging Leaders and Specialized Solutions

Tricentis: Provides AI-based testing platforms that are totally automated, fully codeless, and intelligently driven by AI, addressing both agile development and complex enterprise applications.

TestGrid: Launched CoTester 2.0, an enterprise-grade AI testing agent that addresses critical failures of early AI testing tools with multimodal Vision-Language Agent capabilities.

Functionize: Offers agentic AI-driven test automation with patented deep learning models and 10+ years of enterprise data, providing specialized AI Agents for Generate, Diagnose, Maintain, Document, and Execute functions.

8. Gearing Up for Complex AI Ecosystems: Future-Proofing Enterprise Testing

Preparing for Agentic AI Systems

AI agents are autonomous or semi-autonomous software entities that use AI techniques to perceive, make decisions, take actions, and achieve goals in their digital or physical environments. Testing these systems requires new approaches:

- Multi-Agent Testing: Validate interactions between multiple AI agents, including coordination, conflict resolution, and emergent behaviors.

- Autonomous Decision Testing: Test AI agents’ decision-making processes under various scenarios, including edge cases and adversarial conditions.

- Safety and Control Testing: Design mechanisms for rollback actions and ensure audit logs are integral to making AI agents viable in high-stakes industries.
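
The rollback and audit-log requirement above can be illustrated with a small wrapper that records every agent action together with a function that undoes it. This is a minimal sketch; `AuditedActionLog` and its method names are hypothetical, and a production system would also need persistence and error handling for failed undo steps.

```python
from typing import Callable, List, Tuple


class AuditedActionLog:
    """Records each agent action with an undo callback so the sequence can be rolled back."""

    def __init__(self) -> None:
        self.audit_trail: List[str] = []
        self._undo_stack: List[Tuple[str, Callable[[], None]]] = []

    def execute(self, name: str, do: Callable[[], None], undo: Callable[[], None]) -> None:
        """Run an action, then log it and remember how to reverse it."""
        do()
        self.audit_trail.append(f"did:{name}")
        self._undo_stack.append((name, undo))

    def rollback(self) -> None:
        """Undo all recorded actions in reverse order, logging each step."""
        while self._undo_stack:
            name, undo = self._undo_stack.pop()
            undo()
            self.audit_trail.append(f"undid:{name}")


if __name__ == "__main__":
    account = {"balance": 100}
    log = AuditedActionLog()
    log.execute(
        "debit",
        do=lambda: account.update(balance=account["balance"] - 30),
        undo=lambda: account.update(balance=account["balance"] + 30),
    )
    log.rollback()
    print(account, log.audit_trail)
```

A safety test then asserts two things: the state after rollback equals the state before the agent acted, and the audit trail contains every step in order.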

Advanced Testing Methodologies

Continuous Evaluation Frameworks

Private, continuous evaluation is mission-critical: enterprises demand trusted, explainable performance, which requires evaluation infrastructure that is built in from day one. This includes:

- Real-time model performance monitoring

- Automated drift detection and alerting

- Business-grounded metrics like accuracy, latency, and customer satisfaction

- Continuous eval pipelines integrated into production systems
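
A continuous eval pipeline usually ends in a gate that compares fresh metrics with agreed limits and blocks the release when any limit is violated. The sketch below shows one possible shape for such a gate. The metric names, the threshold values in `THRESHOLDS`, and the function `evaluate_gate` are all hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical thresholds: (kind, limit). "min" means the metric must be >= limit,
# "max" means it must be <= limit.
THRESHOLDS: Dict[str, Tuple[str, float]] = {
    "accuracy": ("min", 0.90),
    "p95_latency_ms": ("max", 800.0),
    "csat": ("min", 4.0),
}


def evaluate_gate(metrics: Dict[str, float],
                  thresholds: Dict[str, Tuple[str, float]] = THRESHOLDS) -> List[str]:
    """Return the list of violated thresholds; an empty list means the release may proceed."""
    violations = []
    for name, (kind, limit) in thresholds.items():
        if name not in metrics:
            violations.append(f"{name}: metric missing")
            continue
        value = metrics[name]
        ok = value >= limit if kind == "min" else value <= limit
        if not ok:
            violations.append(f"{name}: {value} violates {kind} {limit}")
    return violations


if __name__ == "__main__":
    latest = {"accuracy": 0.93, "p95_latency_ms": 920.0, "csat": 4.2}
    problems = evaluate_gate(latest)
    print("release blocked:" if problems else "release ok", problems)
```

Treating a missing metric as a violation is a deliberate choice here: a silent gap in monitoring should stop a release just as a bad value would.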

Quantum-Ready Testing

Quantum computing represents an emerging trend in test automation, requiring preparation for:

- Quantum algorithm testing methodologies

- Integration testing for quantum-classical hybrid systems

- Security testing for quantum-resistant encryption

- Performance testing for quantum advantage scenarios

Emerging Technology Integration

Multimodal AI Testing

Multimodal AI processes information from text, images, audio, and video, allowing for more intuitive interactions and significantly improving accuracy. Testing requirements include:

- Cross-modal consistency validation

- Integration testing across different input modalities

- Performance testing for multimodal processing

- User experience testing for multimodal interfaces

AI-Enhanced Testing Tools

AI will move confidently to the front of the testing toolset, with agentic AI making decisions, planning actions, and solving problems independently. Future testing capabilities will include:

- Autonomous test case generation and optimization

- Predictive failure analysis and prevention

- Self-optimizing testing infrastructure

- Intelligent test resource allocation

Regulatory and Compliance Evolution

Standardization and Certification

Standardized testing frameworks and certifications are in development, with organizations like ISO and IEEE working on guidelines for AI system testing. Enterprises should prepare for:

- ISO/IEC AI testing methodology standards

- ISTQB Certified Tester AI Testing certification requirements

- Industry-specific AI testing compliance requirements

- International regulatory harmonization efforts

Global Regulatory Compliance

The growing gap between AI leaders and laggards will extend to economies, with businesses in different regulatory environments experiencing varying performance outcomes. Organizations must address:

- Multi-jurisdictional compliance testing

- Regulatory change management processes

- Cross-border data and AI governance testing

- Regulatory risk assessment and mitigation

9. Conclusion: The Imperative for Action

The evidence is unequivocal: AI testing is not merely a technical necessity but a strategic imperative that will determine the success or failure of enterprise AI initiatives. With 42% of businesses abandoning AI initiatives due to inadequate preparation and testing, while 80% of software teams plan to use AI by 2025, the gap between AI ambition and AI reality has never been more critical to address.

The Strategic Imperative

Organizations that invest in comprehensive AI testing frameworks today will gain significant competitive advantages:

- Market Leadership: Early adoption of robust AI testing enables faster, more reliable AI deployment, creating first-mover advantages in AI-driven markets.

- Risk Mitigation: Comprehensive testing reduces the likelihood of costly AI failures, regulatory violations, and reputational damage.

- Innovation Enablement: Strong testing foundations enable organizations to experiment with advanced AI capabilities confidently, accelerating innovation cycles.

- Operational Excellence: Mature AI testing practices improve development velocity, reduce technical debt, and enhance overall system reliability.

The Path Forward

Success in AI testing requires a holistic approach that addresses technology, process, people, and governance dimensions:

- Immediate Actions: Assess current AI testing capabilities, identify critical gaps, and begin implementing foundational testing infrastructure.

- Strategic Investments: Allocate dedicated resources to AI testing tools, training, and talent acquisition to build sustainable testing capabilities.

- Organizational Transformation: Redesign workflows, establish governance structures, and create cultural changes that prioritize AI testing excellence.

- Continuous Evolution: Build adaptive testing capabilities that can evolve with advancing AI technologies and changing regulatory requirements.

The Call to Action

The window for establishing AI testing leadership is narrowing rapidly. As AI-native startups push deeper into industry-specific workflows and traditional SaaS players face acquisition pressures, building technical and data moats through superior testing capabilities becomes essential.

Organizations must act decisively to:

- Establish AI testing as a strategic priority with executive sponsorship

- Invest in comprehensive testing infrastructure and capabilities

- Build cross-functional teams with AI testing expertise

- Implement continuous improvement processes for testing optimization

- Prepare for the evolving regulatory and technological landscape

The future belongs to organizations that can deploy AI systems with confidence, reliability, and trust. In an era where AI failures can cost hundreds of millions of dollars and destroy market value overnight, comprehensive AI testing isn’t just best practice; it’s a survival strategy.

The question isn’t whether your organization will encounter AI testing challenges, but whether you’ll be prepared to address them successfully. The time for action is now.

References

- Tricentis. (2025). “AI in Software Testing: 5 Trends of 2025.” Retrieved from https://www.tricentis.com/blog/5-ai-trends-shaping-software-testing-in-2025

- LambdaTest. (2025). “AI Adoption Challenges 2025: 7 Barriers to Overcome.” Retrieved from https://www.lambdatest.com/blog/ai-adoption-challenges/

- Wavestone. (2025). “AI in 2025: current initiatives and challenges in large enterprises.” Retrieved from https://www.wavestone.com/en/insight/ai-2025-initiatives-challenges-large-enterprises/

- TestGrid. (2025). “After Conquering Fortune 100 Testing Challenges, TestGrid Doubles Down on AI with CoTester™ 2.0.” Globe Newswire.

- Qentelli. (2025). “AI in Test Automation: Challenges & Solutions.” Retrieved from https://qentelli.com/thought-leadership/insights/the-role-of-ai-in-test-automation-challenges-and-solutions

- Gartner. (2025). “The 2025 Hype Cycle for Artificial Intelligence Goes Beyond GenAI.” Retrieved from https://www.gartner.com/en/articles/hype-cycle-for-artificial-intelligence

- IBM. (2025). “AI Agents in 2025: Expectations vs. Reality.” Retrieved from https://www.ibm.com/think/insights/ai-agents-2025-expectations-vs-reality

- TestDevLab. (2025). “Best AI-Driven Testing Tools to Boost Automation (2025).” Retrieved from https://www.testdevlab.com/blog/top-ai-driven-test-automation-tools-2025

- IEEE CISOSE. (2025). “IEEE CISOSE 2025 – Schedule, Zoom Links & Networking on Connecto.” Retrieved from https://conf.researchr.org/track/cisose-2025/ai-test2025

- DEVOPSdigest. (2025). “2025: The Year of AI Adoption for Test Automation.” Retrieved from https://www.devopsdigest.com/2025-the-year-of-ai-adoption-for-test-automation

- CIO. (2025). “11 famous AI disasters.” Retrieved from https://www.cio.com/article/190888/5-famous-analytics-and-ai-disasters.html

- GeeksforGeeks. (2025). “Top 15 AI Testing Tools for Test Automation (2025 Updated).” Retrieved from https://www.geeksforgeeks.org/websites-apps/top-ai-testing-tools-for-test-automation/

- Techopedia. (2025). “Real AI Fails 2024–2025: Deepfakes, Job Cuts & Unethical Behavior.” Retrieved from https://www.techopedia.com/ai-fails

- DigitalDefynd. (2025). “Top 30 AI Disasters [Detailed Analysis][2025].” Retrieved from https://digitaldefynd.com/IQ/top-ai-disasters/

- McKinsey. (2025). “The state of AI: How organizations are rewiring to capture value.” Retrieved from https://www.mckinsey.com/capabilities/quantumblack/our-insights/the-state-of-ai

- McKinsey. (2025). “AI in the workplace: A report for 2025.” Retrieved from https://www.mckinsey.com/capabilities/mckinsey-digital/our-insights/superagency-in-the-workplace-empowering-people-to-unlock-ais-full-potential-at-work

- Ishir. (2025). “AI in Software Testing 2025: How It’s Making QA Smarter.” Retrieved from https://www.ishir.com/blog/142281/5-ways-ai-is-making-software-testing-smarter-and-faster-in-2025.htm

- Techfunnel. (2025). “Why AI Fails: The Untold Truths Behind 2025’s Biggest Tech Letdowns.” Retrieved from https://www.techfunnel.com/fintech/ft-latest/why-ai-fails-2025-lessons/

- Gartner Peer Insights. (2025). “Best AI-Augmented Software-Testing Tools Reviews 2025.” Retrieved from https://www.gartner.com/reviews/market/ai-augmented-software-testing-tools

- Selldone. (2025). “AI Year and The Surprising Surge of Failures in 2024 and What We Can Learn for 2025.” Retrieved from https://selldone.com/blog/major-startup-failures-2024-824

- ACCELQ. (2025). “Best AI Testing Frameworks for Smarter Automation in 2025.” Retrieved from https://www.accelq.com/blog/ai-testing-frameworks/

- TestingTools.ai. (2025). “Top 5 Test Automation Frameworks in 2025: Which One Should You Choose?” Retrieved from https://www.testingtools.ai/blog/top-5-test-automation-frameworks-in-2025-which-one-should-you-choose/

- ACCELQ. (2025). “10 Best Test Automation Trends to look out for in 2025.” Retrieved from https://www.accelq.com/blog/key-test-automation-trends/

- Rainforest QA. (2025). “The top 9 AI testing tools (and what you should know).” Retrieved from https://www.rainforestqa.com/blog/ai-testing-tools

- DigitalOcean. (2025). “12 AI Testing Tools to Streamline Your QA Process in 2025.” Retrieved from https://www.digitalocean.com/resources/articles/ai-testing-tools

- SmartDev. (2025). “AI Model Testing: The Ultimate Guide in 2025.” Retrieved from https://smartdev.com/ai-model-testing-guide/

- Functionize. (2025). “Enterprise AI Test Automation Platform with Digital Workers.” Retrieved from https://www.functionize.com

- Medium. (2025). “Generative AI Showdown 2025: Microsoft vs Google vs Amazon.” Retrieved from https://medium.com/@roberto.g.infante/generative-ai-showdown-2025-microsoft-vs-google-vs-amazon-6060841f291c

- PwC. (2025). “2025 AI Business Predictions.” Retrieved from https://www.pwc.com/us/en/tech-effect/ai-analytics/ai-predictions.html

- Andreessen Horowitz. (2025). “How 100 Enterprise CIOs Are Building and Buying Gen AI in 2025.” Retrieved from https://a16z.com/ai-enterprise-2025/

- Google Cloud. (2025). “AI’s impact on industries in 2025.” Retrieved from https://cloud.google.com/transform/ai-impact-industries-2025

- Bessemer Venture Partners. (2025). “The State of AI 2025.” Retrieved from https://www.bvp.com/atlas/the-state-of-ai-2025

- Cloud Computing News. (2024). “Microsoft outperforms Amazon and Google in cloud AI.” Retrieved from https://www.cloudcomputing-news.net/news/microsoft-outperforms-amazon-and-google-in-cloud-ai/

- Google Cloud. (2025). “AI Business Trends 2025.” Retrieved from https://cloud.google.com/resources/ai-trends-report

- Google. (2024). “Google Cloud predicts AI trends for businesses in 2025.” Retrieved from https://blog.google/products/google-cloud/ai-trends-business-2025/

About NStarX: NStarX Inc. is a leading technology consulting firm specializing in AI strategy, implementation, and quality assurance for enterprise clients. For more information about our AI testing services and capabilities, visit https://nstarxinc.com