AUTHOR: NStarX Engineering has been shipping AI-native products and platforms built with agents. One of the most interesting challenges we are encountering is project management across the Software Development Life Cycle when the team includes both humans and AI agents. The 15-20 minute read below captures how we see project management evolving across the new-age SDLC with humans and AI agents.

The project management profession stands at an inflection point. For six decades, Project Managers (PMs) have been the essential orchestrators of software delivery—coordinating teams, tracking dependencies, managing risks, and translating between business needs and technical execution. Now, autonomous AI agents are entering the SDLC at every stage, from requirements analysis to deployment optimization. The question isn’t whether AI will transform project management, but how PMs will evolve from task drivers into strategic orchestrators of human+agent collaboration.

Why Software Development Needed Project Management

The necessity of project management emerged from software’s fundamental complexity crisis. When NATO convened its famous 1968 Software Engineering Conference in Garmisch, Germany, participants documented what became known as the “software crisis”: large projects routinely ran late, exceeded their budgets, and failed to deliver what had been specified. The problem wasn’t poor technical skills but coordination failure at scale.

Fred Brooks codified the core challenge in “The Mythical Man-Month” (1975): communication overhead grows quadratically with team size, because every pair of people is a potential channel. A 5-person team has 10 communication channels; a 50-person team has 1,225. Without structured coordination, organizations faced information fragmentation, conflicting assumptions, and catastrophic integration failures.
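The channel counts follow from counting unordered pairs: with n people there are n(n-1)/2 possible channels. A minimal Python check of the figures above (the function name is ours, for illustration only):

```python
def communication_channels(team_size: int) -> int:
    """Number of pairwise communication channels among team_size people: n(n-1)/2."""
    if team_size < 0:
        raise ValueError("team_size must be non-negative")
    # Each person can talk to n - 1 others; halve so each pair is counted once.
    return team_size * (team_size - 1) // 2


if __name__ == "__main__":
    for n in (5, 10, 50):
        print(f"{n:>2} people -> {communication_channels(n):>5} channels")
```

Going from 5 to 50 people multiplies headcount by 10 but channels by more than 100, which is why unstructured coordination stops working as teams grow.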

Early methodologies like Waterfall (1970) imposed order through sequential phases and comprehensive documentation. This worked tolerably for stable requirements (flight control systems, payroll processing) but failed spectacularly for business software where requirements evolved rapidly. The CONFIRM travel reservation system (1988-1992), built by an AMR-led consortium with Marriott, Hilton, and Budget Rent a Car, was cancelled in 1992 after roughly $125 million had been spent, with its problems surfacing only late in development.

The Spiral Model (1986), RUP (1990s), and eventually Agile (2001) each addressed different aspects of this complexity. But even in today’s Agile environments with sophisticated DevOps automation, PM remains essential for stakeholder alignment, cross-team coordination, risk forecasting, and the irreducible human work of negotiating trade-offs when priorities conflict and capacity constraints bite.

The Three Eras of PM Evolution

Pre-Agile Era (1960-2000): Documentation-Heavy Command

PMs were documentation architects and gate enforcers. A typical mainframe PM might spend 40% of their time creating Gantt charts in MS Project, requirements traceability matrices, and change control documentation. Authority derived from information centralization—only the PM had the complete view of schedule, resources, and dependencies.

When IBM developed OS/360 (1964-1966), Frederick Brooks managed thousands of programmers using military-style hierarchies and formal communication protocols. The project was late and over budget, but without this coordination structure it would have been impossible.

Agile & DevOps Era (2000-2020): Cross-Functional Facilitation

The PM role transformed from controller to facilitator. At companies like Etsy, where continuous deployment became standard (25+ deploys daily by 2011), core product teams largely operated without traditional PMs. Engineering Managers and Product Managers shared coordination, relying heavily on JIRA workflows, Jenkins pipelines, and real-time communication in Slack.

Yet as Agile scaled, coordination challenges re-emerged. Capital One and ING Bank discovered that pure team autonomy led to architectural fragmentation and integration nightmares. SAFe (2011) essentially reintroduced project management with different labels—Release Train Engineers, Solution Architects—acknowledging that coordination overhead doesn’t vanish just because you remove the PM title.

Cloud-Native/AI-Assisted Era (2020-2025): Real-Time Orchestration

Microservices and cloud-native architectures created combinatorial coordination complexity. A typical SaaS company now manages 50-500 independently deployable services. The PM role fragmented into specialized functions: Technical Program Managers own cross-service coordination, Product Managers own outcomes, Engineering Managers own team health.

AI-assisted tooling began augmenting PM capabilities. GitHub Copilot (2021) accelerated coding but created new coordination needs around code quality consistency. Tools like Linear introduced predictive sprint planning and cycle time analysis. However, these remained assistive tools, not autonomous agents. The fundamental PM value, synthesizing technical constraints, business priorities, and human capabilities into coherent execution, remained irreducibly human.

Until now.

The Unresolved Gaps That Agents Will Address

Despite decades of process evolution and tool sophistication, modern SDLC project management faces persistent challenges:

Estimation remains systematically optimistic. The 2020 CHAOS Report found that only 31% of projects were delivered on time, on budget, and with satisfactory results: up from 16% in 1994, but still under a third after decades of process innovation. Story points were designed to avoid time estimates, yet teams routinely convert them to days, inheriting the same planning fallacy.

Dependencies hide until they break. Netflix manages over 1,000 microservices with dependency graphs so complex that full impact analysis for any change is effectively impossible. Knight Capital’s 2012 deployment error cost roughly $440 million in 45 minutes of erroneous trading: new code reused a configuration flag that, on one server the rollout had missed, reactivated dormant legacy logic. No analysis had captured that hidden dependency.
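Computing the blast radius of a change is straightforward graph traversal once the dependency graph is known; the failure mode described above is that the graph is incomplete. A minimal Python sketch, assuming a hypothetical `depends_on` map from each service to the services it consumes (service names are illustrative):

```python
from collections import deque


def blast_radius(depends_on: dict[str, set[str]], changed: str) -> set[str]:
    """Return every service that directly or transitively depends on `changed`."""
    # Invert the graph so we can walk from a service to its consumers.
    dependents: dict[str, set[str]] = {}
    for service, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, set()).add(service)

    impacted: set[str] = set()
    queue = deque([changed])
    while queue:  # breadth-first search over consumers
        current = queue.popleft()
        for consumer in dependents.get(current, set()):
            if consumer not in impacted:
                impacted.add(consumer)
                queue.append(consumer)
    impacted.discard(changed)  # a dependency cycle can lead back to the changed service
    return impacted


if __name__ == "__main__":
    # Hypothetical services, for illustration only.
    graph = {
        "checkout": {"payments", "inventory"},
        "payments": {"ledger"},
        "reporting": {"ledger"},
    }
    print(sorted(blast_radius(graph, "ledger")))  # ['checkout', 'payments', 'reporting']
```

The traversal is only as good as its input: a dependency like Knight Capital's reused flag would not appear in a statically declared graph, which is why runtime observation matters.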

Cross-team misalignment compounds. Target’s failed e-commerce platform (2011-2013) saw 20+ Agile teams make locally optimal decisions that created systemic incoherence—different caching strategies caused price inconsistencies, different session management lost shopping carts, and different data models made cross-category search impossible. After $1+ billion invested, they eventually partnered with Shopify.

Quality gaps emerge late. Healthcare.gov’s catastrophic 2013 launch resulted from late integration testing. Individual components tested fine, but the integrated system under realistic load with actual insurance data failed completely.

Communication friction persists. Despite abundant collaboration tools, engineers know technical constraints but not business priorities, product managers know customer needs but not implementation complexity, executives know strategic goals but not execution realities. PMs spend enormous effort facilitating shared understanding.

These gaps persist because they’re fundamentally coordination problems that scale non-linearly with system complexity. Traditional approaches—better tools, more process, additional headcount—provide diminishing returns. What’s needed is a qualitatively different coordination model.

Enter the AI Agents: A New Operating System for Software Delivery

Imagine a 2028 enterprise product team working alongside 30–50 specialized AI agents:

- Requirements agents extract structured user stories from customer feedback, competitive intelligence, and product specs.
- Estimation agents decompose features, analyze historical velocity, and produce probabilistic forecasts.
- Code generation agents implement features across multiple services following architectural patterns.
- Test generation agents create comprehensive test suites.
- Security agents scan for vulnerabilities and enforce compliance.
- Deployment agents coordinate multi-service releases with automated canary analysis.
- Observability agents configure monitoring and correlate metrics.
- Cost optimization agents rightsize resources and optimize queries.

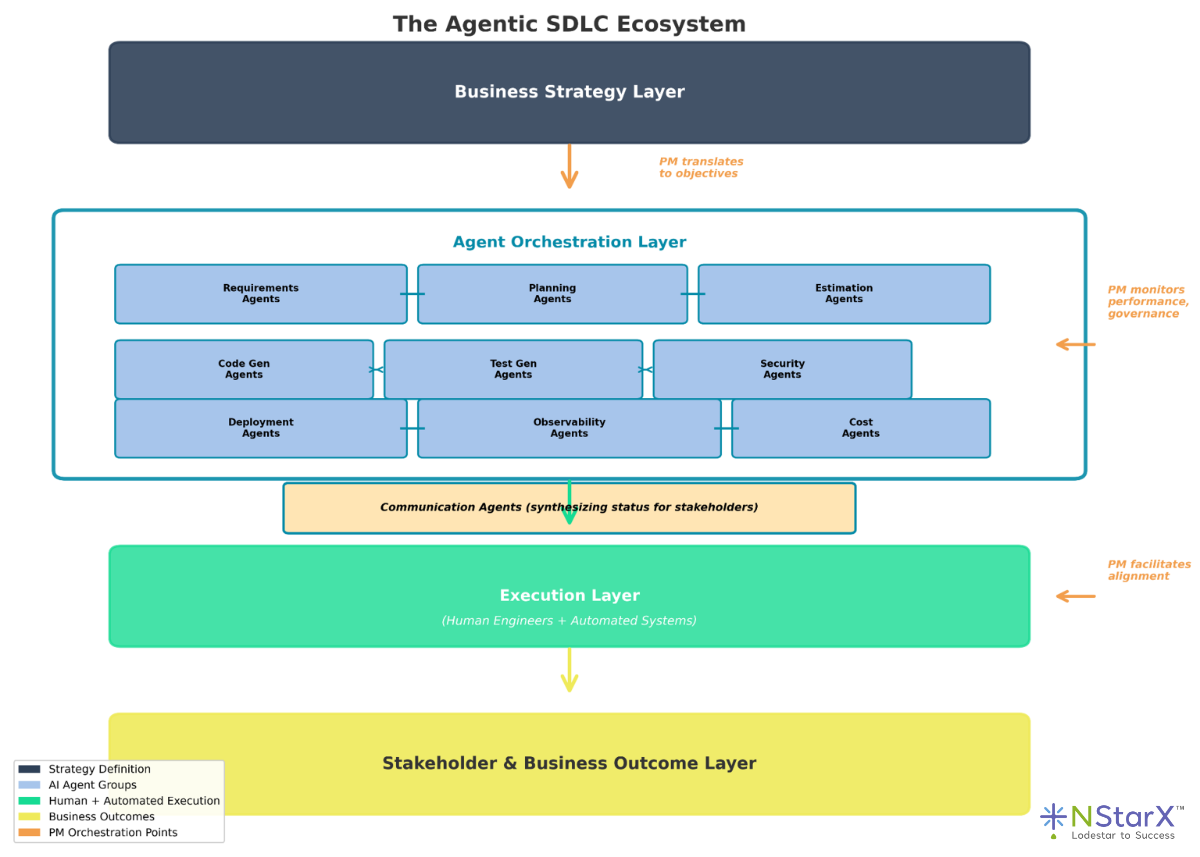

This isn’t science fiction; it’s the logical extension of current trajectories. What changes fundamentally is the PM’s role. Figure 1 captures the NStarX Project/Program Management view of how project management will evolve as humans and AI agents work together:

Figure 1: NStarX view on Agentic SDLC

The New PM Operating Model: Four Specialized Roles

As organizations mature agentic capabilities, the monolithic PM role fragments:

1. Agent Supervisor: Ensures individual agents perform effectively and safely. Monitors KPIs: code acceptance rates, forecast accuracy, rollback rates. Tunes agent configurations based on performance data. Implements guardrails: deployment agents never deploy during blackout windows, code agents never modify authentication without security review, security agents escalate when confidence drops below thresholds.

Real-world example: A fintech’s Agent Supervisor notices deployment agents have a 15% rollback rate versus 5% industry benchmark. Investigation reveals overly aggressive canary thresholds. After adjustment, rollback rate drops to 6%.

2. Multi-Agent Workflow Designer: Designs end-to-end workflows coordinating multiple agents. Maps business objectives to multi-agent execution flows. Defines handoff protocols: exactly what each agent must provide to downstream agents. Identifies bottlenecks: when 40% of workflow time is spent waiting for code review, implements auto-approval paths for low-risk routine changes, reducing that bottleneck by 60% while maintaining quality gates.

3. Human-in-the-Loop Governance Lead: Defines when human judgment is required. Establishes policy frameworks: routine dependency updates proceed autonomously, production database migrations require multi-stakeholder approval. Ensures regulatory compliance: HIPAA-compliant agents never log PHI, trading algorithm updates maintain required audit trails. Conducts ethics reviews: recommendation algorithms monitored for unintended harms.

4. AI-Augmented Delivery Manager: Orchestrates end-to-end value delivery. Translates business objectives into outcome metrics that guide agent work. Conducts strategic capacity planning: allocating scarce human expertise to highest-value activities. Manages stakeholder psychology: helping executives adapt to autonomous deployments, coaching engineers on working productively with AI assistants.
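Several of these responsibilities amount to policy as code. The following is a minimal Python sketch of how the Agent Supervisor's guardrails and the Governance Lead's approval rules might be encoded. The blackout window, path prefix, confidence threshold, change categories, and approver roles are all hypothetical placeholders, not a reference to any particular agent platform:

```python
from dataclasses import dataclass
from datetime import datetime

# Hypothetical policy values; a real team would load these from configuration.
BLACKOUT_WINDOWS = [(datetime(2028, 11, 24), datetime(2028, 11, 30))]
AUTH_PATH_PREFIXES = ("src/auth/",)
SECURITY_ESCALATION_THRESHOLD = 0.7

# Change category -> human approver roles required (empty set = autonomous).
APPROVAL_POLICY: dict[str, set[str]] = {
    "dependency_patch_update": set(),
    "feature_change": {"service_owner"},
    "prod_db_migration": {"service_owner", "dba", "product_owner"},
}


@dataclass
class AgentAction:
    agent: str                       # "deployment", "code", "security", ...
    kind: str                        # "deploy", "modify_file", "finding", ...
    category: str = "feature_change"
    target: str = ""                 # file path or service name
    confidence: float = 1.0          # agent's self-reported confidence, 0..1
    security_reviewed: bool = False


def check_guardrails(action: AgentAction, now: datetime) -> str:
    """Agent Supervisor rules: return 'allow', 'block', or 'escalate'."""
    if action.kind == "deploy" and any(start <= now < end for start, end in BLACKOUT_WINDOWS):
        return "block"
    if (action.kind == "modify_file" and action.target.startswith(AUTH_PATH_PREFIXES)
            and not action.security_reviewed):
        return "block"
    if action.agent == "security" and action.confidence < SECURITY_ESCALATION_THRESHOLD:
        return "escalate"
    return "allow"


def approvals_missing(action: AgentAction, approvals: set[str]) -> set[str]:
    """Governance Lead policy: approver roles still needed. Unknown categories fail closed."""
    strictest = set().union(*APPROVAL_POLICY.values())
    return APPROVAL_POLICY.get(action.category, strictest) - approvals


def decide(action: AgentAction, approvals: set[str], now: datetime) -> str:
    """Combine guardrails and approval policy into a single verdict."""
    verdict = check_guardrails(action, now)
    if verdict != "allow":
        return verdict
    return "proceed" if not approvals_missing(action, approvals) else "await_approval"
```

Unknown change categories fall back to the strictest approval set, so a new kind of agent action fails closed until a human classifies it.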

New Risks and Governance Models

Hallucinated schedules: Estimation agents might produce confident forecasts based on flawed pattern matching. A retail company’s agent forecasted 4 weeks to integrate a payment provider based on past integrations, but the provider’s undocumented API limits and PCI complexity extended delivery to 8 weeks.

Mitigation: Require probability distributions rather than point estimates. Implement novelty detection—agents flag when work is outside training distribution. Use ensemble estimation from multiple approaches.
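One common way to produce a distribution instead of a point estimate is Monte Carlo simulation over historical throughput. The sketch below is a minimal Python illustration under simple assumptions: work items are of roughly similar size, and past weekly throughput is representative of the future. The tag-based novelty check is a deliberately crude, hypothetical stand-in for real out-of-distribution detection:

```python
import random


def forecast_weeks(backlog: int, weekly_throughput: list[int],
                   trials: int = 10_000, seed: int = 42) -> dict[str, int]:
    """Monte Carlo forecast: resample past weekly throughput until the backlog is done."""
    if backlog <= 0:
        return {"p50": 0, "p85": 0, "p95": 0}
    if not any(t > 0 for t in weekly_throughput):
        raise ValueError("need at least one week with non-zero throughput")
    rng = random.Random(seed)
    outcomes = []
    for _ in range(trials):
        remaining, weeks = backlog, 0
        while remaining > 0:
            remaining -= rng.choice(weekly_throughput)  # one simulated week
            weeks += 1
        outcomes.append(weeks)
    outcomes.sort()

    def percentile(q: float) -> int:
        return outcomes[min(len(outcomes) - 1, int(q * len(outcomes)))]

    return {"p50": percentile(0.50), "p85": percentile(0.85), "p95": percentile(0.95)}


def is_novel(item_tags: set[str], historical_tags: set[str], max_unseen: float = 0.3) -> bool:
    """Flag work whose characteristics are largely absent from the forecast's history."""
    if not item_tags:
        return True  # nothing to compare against: treat as novel
    unseen = len(item_tags - historical_tags) / len(item_tags)
    return unseen > max_unseen
```

A payment integration tagged `{"payments", "pci", "undocumented_api"}` against a history containing only `{"payments", "search"}` would be flagged as novel, signalling that the percentiles need human review before anyone commits to a date.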

Incorrect assumptions: Agents make decisions with incomplete context. An architecture agent proposes synchronous REST APIs, violating an implicit principle (use async for user-facing features to prevent cascades) that exists only in human memory. The violation reaches production, causing exactly the cascading failure the team previously experienced.

Mitigation: Codify architectural principles in machine-readable “decision registries” that agents must query. Require agents to log assumptions explicitly. Mandate domain expert review for high-impact architectural decisions.
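A decision registry can be as simple as a list of machine-readable rules that every agent proposal is checked against. A minimal Python sketch; the `Decision` schema, the `ADR-017` entry, and the scope and design-choice tags are hypothetical:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Decision:
    id: str
    rule: str        # human-readable principle
    applies_to: str  # scope tag, e.g. "user_facing"
    forbids: str     # design choice the principle rules out


# Hypothetical entry codifying the "async for user-facing features" principle.
REGISTRY = [
    Decision("ADR-017", "Use async messaging for user-facing features to prevent cascades",
             applies_to="user_facing", forbids="sync_rest"),
]


def check_proposal(scope_tags: set[str], design_choices: set[str],
                   assumptions: list[str]) -> list[str]:
    """Return violations; proposals that state no assumptions are also rejected."""
    violations = [f"{d.id}: {d.rule}" for d in REGISTRY
                  if d.applies_to in scope_tags and d.forbids in design_choices]
    if not assumptions:
        violations.append("proposal logs no explicit assumptions")
    return violations
```

Any non-empty result would route the proposal to domain-expert review instead of letting the agent proceed.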

Dependency drift: Service A’s agent adds an event field, Service B’s agent starts consuming it without dependency tracking, Service A’s refactoring agent removes the “unused” field, Service B breaks in production.

Mitigation: Implement real-time dependency tracking analyzing runtime behavior, not just static code. Require cross-service impact analysis before interface changes. Use progressive rollouts with downstream dependency monitoring.
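The dependency-drift scenario above becomes detectable if consumers' field reads are recorded at runtime and compared with declared contracts. A minimal Python sketch, assuming a hypothetical access log of `(consumer_service, event_type, field_name)` records, for example emitted by a wrapper around each service's event deserializer:

```python
from collections import defaultdict

FieldKey = tuple[str, str]  # (event_type, field_name)


def observed_consumers(access_log: list[tuple[str, str, str]]) -> dict[FieldKey, set[str]]:
    """Map each (event, field) to the services that actually read it at runtime."""
    consumers: dict[FieldKey, set[str]] = defaultdict(set)
    for service, event, field_name in access_log:
        consumers[(event, field_name)].add(service)
    return dict(consumers)


def drift_report(observed: dict[FieldKey, set[str]],
                 declared: dict[FieldKey, set[str]]) -> dict[FieldKey, set[str]]:
    """Consumers seen at runtime but missing from the declared contracts."""
    report = {}
    for key, services in observed.items():
        undeclared = services - declared.get(key, set())
        if undeclared:
            report[key] = undeclared
    return report


def safe_to_remove(event: str, field_name: str, observed: dict[FieldKey, set[str]]) -> bool:
    """A field counts as 'unused' only if no service has been observed reading it."""
    return not observed.get((event, field_name))
```

In the scenario above, Service A's refactoring agent would call `safe_to_remove` before deleting the field and find Service B listed as a live consumer. Runtime observation covers only code paths that have actually executed, so it complements declared contracts rather than replacing them.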

Compliance failures: Deployment agents optimizing for speed might skip required regulatory documentation. A financial services firm faced fines when trading algorithm updates lacked required change rationale and testing evidence.

Mitigation: Configure agents with explicit compliance requirements as hard-coded workflow gates. Implement compliance verification agents that review deployments against regulatory checklists.
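A hard-coded workflow gate means the deployment step cannot run until the required evidence exists. A minimal Python sketch; the system classes and artifact names are hypothetical and would in practice come from the firm's own regulatory checklist:

```python
from dataclasses import dataclass, field

# Hypothetical checklist: system class -> evidence that must exist before deploying.
REQUIRED_ARTIFACTS: dict[str, set[str]] = {
    "trading_algorithm": {"change_rationale", "test_evidence", "risk_signoff"},
    "web_frontend": {"test_evidence"},
}


class ComplianceGateError(Exception):
    """Raised when a deployment lacks the evidence its system class requires."""


@dataclass
class DeploymentRequest:
    system_class: str
    artifacts: dict[str, str] = field(default_factory=dict)  # artifact name -> link or doc ID


def compliance_gate(request: DeploymentRequest) -> None:
    """Hard gate: raise rather than warn, so the deploy step cannot proceed."""
    required = REQUIRED_ARTIFACTS.get(request.system_class)
    if required is None:
        raise ComplianceGateError(f"no checklist for system class {request.system_class!r}")
    missing = sorted(name for name in required if not request.artifacts.get(name))
    if missing:
        raise ComplianceGateError("missing artifacts: " + ", ".join(missing))
```

Raising an exception, rather than returning a warning, keeps a speed-optimizing agent from treating the check as optional; unknown system classes are blocked by default.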

The New Scorecard: KPIs for Agent-Driven Delivery

Traditional metrics (sprint velocity, on-time delivery %) become less meaningful. New KPIs emerge:

- Agent Effectiveness:
  - Agent efficiency score: 60–80% faster cycle times at 40–60% lower cost
  - Agent learning curve: Calibration improvement 15–20% quarter-over-quarter
- Risk Management:
  - Hallucination risk index: <2% of agent outputs, trending to <1%
  - Pre-deployment governance catch rate: >95% of violations
- Delivery Quality:
  - Forecast accuracy: >80% of releases within predicted windows
  - Production defect rate: <5 per release, <3% rollback rate
- Coordination Efficiency:
  - Dependency drift detection: <5% undocumented dependencies
  - Cross-agent communication: <10% of workflows requiring human escalation
  - End-to-end cycle time: <5 days for typical features
- Strategic Outcomes:
  - Features delivering impact: >70% move target business metrics
  - PM strategic focus: >70% of time on strategic vs. operational work
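Several of these KPIs can be computed directly from delivery records. A minimal Python sketch, assuming hypothetical `Release` and `WorkflowRun` records and treating a release as "within the predicted window" when it ships on or before the window's upper bound; the targets mirror the thresholds listed above:

```python
import operator
from dataclasses import dataclass


@dataclass
class Release:
    predicted_latest_day: int  # upper bound of the forecast window, in days
    actual_day: int            # day the release actually shipped
    rolled_back: bool
    defects: int               # production defects attributed to this release


@dataclass
class WorkflowRun:
    escalated_to_human: bool


# (comparison, threshold) pairs taken from the scorecard above.
TARGETS = {
    "forecast_accuracy": (operator.gt, 0.80),
    "rollback_rate": (operator.lt, 0.03),
    "defects_per_release": (operator.lt, 5),
    "escalation_rate": (operator.lt, 0.10),
}


def scorecard(releases: list[Release], runs: list[WorkflowRun]) -> dict[str, float]:
    """Compute delivery KPIs as fractions (0..1) or per-release averages."""
    if not releases or not runs:
        raise ValueError("need at least one release and one workflow run")
    n = len(releases)
    return {
        "forecast_accuracy": sum(r.actual_day <= r.predicted_latest_day for r in releases) / n,
        "rollback_rate": sum(r.rolled_back for r in releases) / n,
        "defects_per_release": sum(r.defects for r in releases) / n,
        "escalation_rate": sum(w.escalated_to_human for w in runs) / len(runs),
    }


def missed_targets(kpis: dict[str, float]) -> list[str]:
    """Names of KPIs that fail their target."""
    return [name for name, (compare, threshold) in TARGETS.items()
            if not compare(kpis[name], threshold)]
```

The fintech example's 15% rollback rate would appear here as `rollback_rate = 0.15` and be flagged against the 3% target.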

Industry Implications: Who Moves First?

Banking & Financial Services: Short-term focus on automated compliance documentation and risk assessment. Long-term evolution toward algorithmic trading orchestration where agents develop, test, and deploy trading strategies under PM governance ensuring alignment with risk tolerance. PMs become “Compliance Orchestration Specialists.”

Healthcare: Cautious adoption due to life-critical reliability. Short-term gains in clinical documentation automation and privacy enforcement. Long-term potential in clinical decision support development and interoperability orchestration across complex EHR ecosystems. PMs become “Clinical-Technical Translators.”

Media & Entertainment: Aggressive early adoption for content delivery optimization and recommendation system evolution. Long-term autonomous platform evolution where agents continuously A/B test improvements under PM-defined strategic priorities. PMs become “Experience Optimization Strategists.”

Retail & E-Commerce: Immediate value in seasonal scaling preparation and A/B testing orchestration. Long-term autonomous personalization and omnichannel experience coordination. PMs become “Customer Experience Orchestrators.”

What Project Managers Must Do Next

The transition to agent-orchestrated delivery won’t happen overnight, but PMs should begin preparing now:

1. Develop Technical Literacy in AI Systems: Understand how agents are trained, what their failure modes are, and how to diagnose performance issues. You don’t need to become an ML engineer, but you need enough understanding to supervise agents effectively.

2. Shift from Task Management to Outcome Definition: Practice framing work as objectives and success metrics rather than task lists. “Increase engagement 20% while maintaining satisfaction >4.2” gives agents and humans flexibility to optimize, unlike “implement features A, B, C.”

3. Build Skills in Workflow Design: Study how complex processes are orchestrated. Learn from manufacturing (lean production), logistics (supply chain optimization), and DevOps (CI/CD pipelines). Multi-agent workflow design will be a core PM competency.

4. Cultivate Human Skills That Agents Can’t Replicate: Deepen capabilities in negotiation, stakeholder psychology, ethical reasoning, creative problem reframing, and organizational navigation. These become differentiating PM value as tactical execution automates.

5. Experiment with Current AI-Assisted Tools: Start using GitHub Copilot, explore ChatGPT for documentation generation, try Linear for intelligent work tracking. Build intuition for when to trust AI outputs versus when to override with human judgment.

6. Champion Governance Frameworks: Proactively establish policies for AI agent oversight in your organization. Define approval gates, audit requirements, and escalation protocols before agents are deployed broadly.
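Step 2's outcome framing can also be expressed as data that agents and humans evaluate the same way. A minimal Python sketch; the `Outcome` schema and metric names are hypothetical, using the engagement and satisfaction example from step 2:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    """An objective plus a guardrail, instead of a task list (hypothetical schema)."""
    metric: str             # metric the work should move
    target_lift: float      # relative improvement, e.g. 0.20 for +20%
    guardrail_metric: str   # metric that must not degrade
    guardrail_floor: float  # guardrail must stay strictly above this value


def evaluate(outcome: Outcome, baseline: dict[str, float], current: dict[str, float]) -> str:
    """Return 'guardrail_breached', 'met', or 'in_progress'."""
    if current[outcome.guardrail_metric] <= outcome.guardrail_floor:
        return "guardrail_breached"
    base = baseline[outcome.metric]
    if base == 0:
        raise ValueError("baseline must be non-zero to compute a relative lift")
    lift = (current[outcome.metric] - base) / base
    return "met" if lift >= outcome.target_lift else "in_progress"
```

Because the guardrail is checked first, an agent cannot "meet" the engagement target by trading away satisfaction.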

References and Further Reading

Historical Foundations

Brooks, F. P. (1975). The Mythical Man-Month: Essays on Software Engineering. Addison-Wesley.

- Seminal work establishing the non-linear scaling of communication overhead in software teams and the fundamental coordination challenges in software development.

Naur, P., & Randell, B. (Eds.). (1968). Software Engineering: Report on a conference sponsored by the NATO Science Committee. NATO Scientific Affairs Division.

- The Garmisch conference that coined “software engineering” and documented the software crisis of late, over-budget, and unreliable projects.

Royce, W. W. (1970). “Managing the Development of Large Software Systems.” Proceedings of IEEE WESCON, 26(8), 1-9.

- Original paper that described the Waterfall model (while actually warning against using it too rigidly).

Boehm, B. W. (1986). “A Spiral Model of Software Development and Enhancement.” ACM SIGSOFT Software Engineering Notes, 11(4), 14-24.

- Introduced the risk-driven Spiral Model as an alternative to purely sequential development.

Industry Reports and Research

The Standish Group. (2020). CHAOS Report 2020: Beyond Infinity.

- Longitudinal study reporting that only 31% of software projects succeeded (on time, on budget, with satisfactory results), up from 16% in 1994 but still under a third, demonstrating the persistence of project management challenges.

Beck, K., Beedle, M., van Bennekum, A., et al. (2001). “Manifesto for Agile Software Development.” Agilemanifesto.org.

- The foundational document of the Agile movement that fundamentally challenged traditional project management approaches.

Leffingwell, D. (2011). Agile Software Requirements: Lean Requirements Practices for Teams, Programs, and the Enterprise. Addison-Wesley.

- Introduction of the Scaled Agile Framework (SAFe), acknowledging the need for coordination roles at scale.

Case Studies and Real-World Examples

Knight Capital Group Trading Loss (2012). SEC Investigation Report.

- $440 million loss in 45 minutes due to deployment configuration error and hidden dependencies, demonstrating the risks of insufficient dependency management and testing.

Healthcare.gov Launch Failure (2013). U.S. Government Accountability Office Report GAO-14-694.

- Analysis of the catastrophic launch failure due to late integration testing and insufficient coordination across contractors.

Target’s E-Commerce Platform Failure (2011-2013). Various business press reports.

- Case study of cross-team misalignment where 20+ Agile teams made locally optimal decisions that created systemic architectural incoherence, resulting in $1+ billion investment loss.

Lewis, J., & Fowler, M. (2014). “Microservices: A Definition of This New Architectural Term.” MartinFowler.com.

- Analysis of microservices architecture and the coordination complexity it introduces.

AI and Automation in Software Development

Chen, M., Tworek, J., Jun, H., et al. (2021). “Evaluating Large Language Models Trained on Code.” arXiv preprint arXiv:2107.03374.

- Research paper introducing GitHub Copilot’s underlying technology, marking the beginning of AI-assisted code generation at scale.

Vaswani, A., Shazeer, N., Parmar, N., et al. (2017). “Attention Is All You Need.” Advances in Neural Information Processing Systems, 30.

- The transformer architecture that enables modern large language models used in AI agents.

OpenAI. (2023). “GPT-4 Technical Report.” arXiv preprint arXiv:2303.08774.

- Technical details on capabilities of advanced language models that underpin autonomous agent systems.

Tools and Platforms Referenced

Atlassian JIRA (2002-present). atlassian.com/software/jira

- Industry-standard Agile project management and issue tracking platform.

Jenkins (2011-present). jenkins.io

- Open-source automation server for continuous integration and deployment.

GitHub Copilot (2021-present). github.com/features/copilot

- AI pair programmer representing early AI assistance in software development.

Linear (2019-present). linear.app

- Modern issue tracking with intelligent work tracking and cycle time analysis.

Backstage (Spotify, 2020). backstage.io

- Open platform for building developer portals, addressing dependency visibility and service management.

Regulatory and Compliance Frameworks

FDA. (1997). 21 CFR Part 11: Electronic Records; Electronic Signatures.

- Federal regulations governing electronic records and electronic signatures in FDA-regulated industries, including validation of the computer systems that manage them.

HIPAA. Health Insurance Portability and Accountability Act (1996).

- Privacy and security requirements affecting healthcare software development.

PCI Security Standards Council. Payment Card Industry Data Security Standard (PCI DSS).

- Security standards for systems handling credit card transactions.

SEC. Securities and Exchange Commission Regulatory Requirements for Trading Systems.

- Compliance requirements for financial services algorithmic trading systems.

Methodology and Framework Documentation

Kniberg, H., & Ivarsson, A. (2012). “Scaling Agile @ Spotify with Tribes, Squads, Chapters & Guilds.” Spotify Engineering Blog.

- Documentation of Spotify’s engineering model (later revealed to be more aspirational than actual practice), influencing Agile scaling approaches.

Kim, G., Humble, J., Debois, P., & Willis, J. (2016). The DevOps Handbook: How to Create World-Class Agility, Reliability, and Security in Technology Organizations. IT Revolution Press.

- Comprehensive guide to DevOps practices that transformed software delivery and project management.

Scaled Agile Framework (SAFe). scaledagileframework.com

- Comprehensive framework for scaling Agile across large enterprises, reintroducing coordination roles.

Contemporary Perspectives on AI in Software Development

Bai, Y., Kadavath, S., Kundu, S., et al. (2022). “Constitutional AI: Harmlessness from AI Feedback.” arXiv preprint arXiv:2212.08073.

- Research on aligning AI systems with human values and organizational policies, relevant to governance of AI agents.

Google. (2024). “AI in Software Engineering at Google: Progress and the Path Ahead.” Google Research Blog.

- Industry perspective on AI integration in software development workflows.

Peng, S., Kalliamvakou, E., Cihon, P., & Demirer, M. (2023). “The Impact of AI on Developer Productivity: Evidence from GitHub Copilot.” arXiv preprint arXiv:2302.06590.

- Empirical research on AI assistance in development, showing productivity gains while highlighting new coordination needs.

Industry Analysis and Future Trends

Gartner. (2024). “Hype Cycle for Software Engineering, 2024.”

- Technology maturity analysis positioning AI agents in software development lifecycle.

Forrester. (2023). “The Future of Software Delivery: AI-Augmented Development Teams.”

- Market research on adoption patterns and organizational impacts of AI in software development.

McKinsey & Company. (2023). “The Economic Potential of Generative AI: The Next Productivity Frontier.”

- Economic analysis of generative AI impact across industries, including software development productivity.

Online Resources and Community Knowledge

Stack Overflow Developer Survey (Annual). insights.stackoverflow.com/survey

- Annual survey of developer practices, tools, and trends.

State of DevOps Report (Annual, DORA/Google Cloud). cloud.google.com/devops/state-of-devops

- Annual research on DevOps practices and their impact on software delivery performance.

GitHub Octoverse (Annual). octoverse.github.com

- Annual report on global software development trends, languages, and practices.